Note: This blog post is superseded by our 2017 blog post on Docker, which covers best deployment practices with Docker swarm mode.

Containers are changing the way applications are architected and deployed. Whether you are using a service-oriented or microservice-like architecture, your application still needs the persistence provided by a database. So how do you architect and orchestrate your deployment when existing database solutions are inflexible or require manual configuration to set up and run? Enter Aerospike, with its shared-nothing architecture, Smart Clients™ and integration with Compose, Swarm and other key Docker technologies. Together, these enable automated scaling up and down and self-healing of the persistence layer. But first, let’s take a step back.

Why Containers?

Containers are seen as a simple way to:

Encapsulate the dependencies for the process you want to run, e.g., the packages required, the frameworks that need to be present, the code you want to execute, etc.

Provide isolation at runtime, enabling containers (each with different dependencies, libraries, OS distributions, etc.) to co-exist on the same physical host, sharing its kernel, without the bloat of traditional virtualization solutions

Application architectures are also evolving, especially with the adoption of microservice-like architectures. Your application is no longer one humongous, static binary or set of packages; it starts to look like a series of discrete services that are brought together dynamically at runtime. This is a natural fit for containers. But how does this mesh with services that require persistence or long-running processes?

Why Aerospike and Containers?

Aerospike is naturally suited to container deployment thanks to its attributes such as:

Shared-nothing architecture: there is no reliance on a single node for configuration, state management, etc.

Automatic hashing of keys across the cluster with Smart Partitions™ (using RIPEMD-160, whose 160-bit digests make key collisions vanishingly unlikely)

Automatic healing of the cluster as nodes enter and leave the cluster, including automatic rebalancing of the data

Automatic discovery of the cluster topology with Aerospike Smart Client™ for all the popular languages and frameworks

Automatic replication of data across nodes with a customizable replication factor

This enables you to:

Scale the persistence layer as needed, both up and out

Eliminate reconfiguration of the application and database tiers as Containers enter and leave the Database Cluster

Utilize Containers on your own dedicated infrastructure or public cloud providers

Swarm, Compose & Multi-host Networking

In any real-world deployment, the application is spread across a number of physical servers. You need to be able to easily deploy, and then scale, the services that support the application as needed. Docker provides a number of technologies that can help with this:

Swarm: clustering of Docker hosts

Compose: orchestration of multi-container applications

Multi-host Networking: enables creating custom container networks that span multiple Docker hosts

Nice Theory, But Show Me the Money!

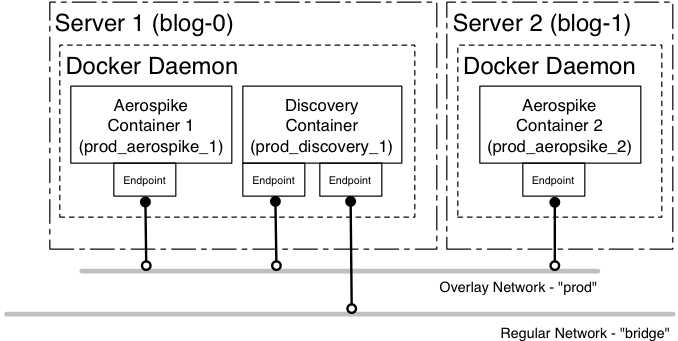

We want to deploy an Aerospike Cluster across multiple Docker hosts (see Figure 1). In order to achieve this, there are a number of steps we need to complete:

Provision the two servers (blog-0 and blog-1) and run a Docker Daemon

Define the Compose file for the services we want to orchestrate

Establish the multi-host network

Start the orchestrated services

Scale the Aerospike cluster

Figure 1 – Deployment of Aerospike Containers

Prerequisites

The following examples assume you are using:

docker 1.10.0-rc1 and above

docker-compose 1.6.0-rc1 and above

docker-machine 0.6.0-rc1 and above

The code examples below can be found in this GitHub repository, along with the Compose files, etc.

Start the Swarm Cluster

We will create three new Docker hosts. One (blog-consul) runs Consul, which Swarm uses for node discovery and Docker's multi-host networking uses as its key-value store. The other two (blog-0 and blog-1) will run the Aerospike processes.

ENGINE_URL=https://get.docker.com/builds/Linux/x86_64/docker-1.10.0-rc1
ENGINE_OPT="--engine-install-url=$ENGINE_URL"
B2D_URL=https://github.com/tianon/boot2docker-legacy/releases/download/v1.10.0-rc1/boot2docker.iso
B2D_OPT="--vmwarefusion-boot2docker-url=$B2D_URL"
DRIVER=vmwarefusion

docker-machine create \
    --driver $DRIVER \
    $ENGINE_OPT \
    $B2D_OPT \
    blog-consul

docker $(docker-machine config blog-consul) run \
    -d \
    --restart=always \
    -p "8500:8500" \
    -h "consul" \
    progrium/consul -server -bootstrap

docker-machine create \
    --driver $DRIVER \
    $ENGINE_OPT \
    $B2D_OPT \
    --swarm \
    --swarm-master \
    --swarm-discovery="consul://$(docker-machine ip blog-consul):8500" \
    --engine-opt="cluster-store=consul://$(docker-machine ip blog-consul):8500" \
    --engine-opt="cluster-advertise=eth0:2376" \
    blog-0

docker-machine create \
    --driver $DRIVER \
    $ENGINE_OPT \
    $B2D_OPT \
    --swarm \
    --swarm-discovery="consul://$(docker-machine ip blog-consul):8500" \
    --engine-opt="cluster-store=consul://$(docker-machine ip blog-consul):8500" \
    --engine-opt="cluster-advertise=eth0:2376" \
    blog-1

eval $(docker-machine env --swarm blog-0)

Create the Multi-host (Overlay) Network

We will create the multi-host network prod, to which the Aerospike nodes will be attached.

docker $(docker-machine config --swarm blog-0) network create --driver overlay --internal prod

Service Definitions

We want to define two services:

aerospike

This service represents the Aerospike daemon process, encapsulated in a container

discovery

This service will monitor for new Aerospike containers and add them to the Aerospike cluster

Let’s look at those service definitions in detail.

aerospike:
  image: aerospike/aerospike-server:3.7.1
  volumes:
    - "$PWD:/etc/aerospike"
  labels:
    - "com.aerospike.cluster=awesome-counter"
  environment:
    - "affinity:com.aerospike.cluster!=awesome-counter"
  net: prod

Notes:

An explicit Aerospike version (3.7.1 in this case); it is useful, especially in production deployments, to pick a concrete version rather than just use latest

An external volume $PWD is mounted into /etc/aerospike allowing any Aerospike configuration to be overridden

A label is defined. The discovery service matches this label against its cluster name, so any new Aerospike container carrying it joins the same named cluster

An affinity rule to ensure that only a single Aerospike container is deployed per Docker host

A declaration to use the network prod
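The affinity rule is what limits the cluster to one Aerospike container per Docker host. The following is a deliberately simplified model of how Swarm evaluates a negative label affinity such as `affinity:com.aerospike.cluster!=awesome-counter`. Under this model, a host is eligible only if none of its existing containers carries that label value. It is a sketch for reasoning only, not Swarm's scheduler code. The `eligible_hosts` function and the sample container list are hypothetical.

```shell
#!/bin/sh
# Simplified model (not Swarm's code) of a negative label affinity such as
# affinity:com.aerospike.cluster!=awesome-counter.
# Input on stdin: one line per existing container, "<host> <label-value>".
# A host is eligible if none of its containers has the forbidden value.

# Usage: eligible_hosts <forbidden-value> <host>...
eligible_hosts() {
  forbidden=$1
  shift
  # Hosts already running a container with the forbidden label value.
  occupied=$(awk -v v="$forbidden" '$2 == v { print $1 }')
  for host in "$@"; do
    if ! printf '%s\n' "$occupied" | grep -qxF -- "$host"; then
      echo "$host"
    fi
  done
}

# Example: after "docker-compose up -d", blog-0 runs one Aerospike container
# and the discovery container (no cluster label, shown as "-").
printf '%s\n' "blog-0 awesome-counter" "blog-0 -" |
  eligible_hosts awesome-counter blog-0 blog-1
```

The example prints only blog-1. Once both hosts run an Aerospike container, the function prints nothing. Under this model, a third Aerospike container has nowhere to go until another Docker host joins the Swarm cluster.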

discovery:
  image: aerospike/interlock:latest
  environment:
    - "DOCKER_HOST"
    - "constraint:node==blog-0"
    - AEROSPIKE_NETWORK_NAME=prod
    - AEROSPIKE_CLUSTER_NAME=awesome-counter
  volumes:
    - "/var/lib/boot2docker:/etc/docker"
  command: "--swarm-url=$DOCKER_HOST --swarm-tls-ca-cert=/etc/docker/ca.pem --swarm-tls-cert=/etc/docker/server.pem --swarm-tls-key=/etc/docker/server-key.pem --debug --plugin aerospike start"
  net: bridge

Notes:

Uses the aerospike/interlock image to trigger cluster reconfigurations

Environment variables that pass DOCKER_HOST through to the container, constrain the container to a specific Docker host (blog-0), and name the network (prod) and the cluster (awesome-counter) that new Aerospike containers will join

An external volume mount that shares the host's TLS certificates and keys with the container, for use by the --swarm-tls-* options

A command to start the interlock container

A declaration to use the network bridge. This ensures that the discovery process can connect to the Swarm cluster to receive Container events.
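For reference, the two definitions above can be combined into a single file and written from the shell. This sketch assumes the Compose file format version 1 layout used in this post: services at the top level, and `net:` rather than `networks:`. The quoted heredoc delimiter keeps `$PWD` and `$DOCKER_HOST` literal. Compose, not the shell, then substitutes them when it reads the file. The output path argument is a hypothetical convenience.

```shell
#!/bin/sh
# Sketch: combine the two service definitions above into one Compose file
# (file format version 1, as used in this post). The quoted 'EOF' stops the
# shell from expanding $PWD and $DOCKER_HOST; docker-compose substitutes
# them from the environment when it reads the file.
out=${1:-docker-compose.yml}

cat > "$out" <<'EOF'
aerospike:
  image: aerospike/aerospike-server:3.7.1
  volumes:
    - "$PWD:/etc/aerospike"
  labels:
    - "com.aerospike.cluster=awesome-counter"
  environment:
    - "affinity:com.aerospike.cluster!=awesome-counter"
  net: prod

discovery:
  image: aerospike/interlock:latest
  environment:
    - "DOCKER_HOST"
    - "constraint:node==blog-0"
    - AEROSPIKE_NETWORK_NAME=prod
    - AEROSPIKE_CLUSTER_NAME=awesome-counter
  volumes:
    - "/var/lib/boot2docker:/etc/docker"
  command: "--swarm-url=$DOCKER_HOST --swarm-tls-ca-cert=/etc/docker/ca.pem --swarm-tls-cert=/etc/docker/server.pem --swarm-tls-key=/etc/docker/server-key.pem --debug --plugin aerospike start"
  net: bridge
EOF

echo "wrote $out"
```

The variable is named `out` rather than `COMPOSE_FILE`, because `COMPOSE_FILE` is an environment variable that docker-compose itself reads.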

Start the Services

The above services can be encapsulated in the docker-compose.yml file, and then started thus:

docker-compose up -d

Join the Discovery Container to prod Network

The discovery service initially joins the bridge network so that it can receive container start and stop events from the Swarm cluster through the Docker Remote API. However, it also needs to join the prod network in order to send configuration messages to the Aerospike nodes provisioned on that network. Using the docker network connect command, we can add the discovery container to the prod network.

docker $(docker-machine config blog-0) network connect prod blog_discovery_1

Connect to the Aerospike Server

We can now use the aql tool to log on to the Aerospike server and create some data. We will run aql in a container as well.

docker run -it --rm --net prod aerospike/aerospike-tools \
    aql -h blog_aerospike_1

aql> insert into test.foo (pk, val1) values (1, 'abc')
OK, 1 record affected.

The hostname blog_aerospike_1 comes from the name of the container. Docker Compose combines the project name (by default, the directory name) with the service name and an incrementing integer to ensure the name is unique. With multi-host networking, these hostnames are stable, so it is simple to build scripts that rely on them. The same script can then be used across multiple deployments of the same Compose file. The hostname stays the same, and each network simply provides a different IP address based on its settings. This enables multiple developers to deploy the same set of containers and run the same scripts in development, testing or production environments.
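Because these names are predictable, a script can compute them up front. The following minimal sketch assumes Docker Compose 1.x behaviour. In that version, the project name comes from COMPOSE_PROJECT_NAME or the working directory's basename, is lowercased, and has non-alphanumeric characters stripped. Containers are then named `<project>_<service>_<index>`. The function names are hypothetical helpers, not part of Compose.

```shell
#!/bin/sh
# Sketch of Docker Compose 1.x container naming (assumed behaviour), so that
# scripts can compute hostnames such as blog_aerospike_1 ahead of time.

# Normalize a project name: lowercase, then drop anything outside [a-z0-9].
normalize_project_name() {
  printf '%s' "$1" | tr '[:upper:]' '[:lower:]' | tr -cd 'a-z0-9'
}

# Default project: COMPOSE_PROJECT_NAME if set, else the current directory name.
default_project_name() {
  normalize_project_name "${COMPOSE_PROJECT_NAME:-$(basename "$PWD")}"
}

# Container (and, on the overlay network, host) name: <project>_<service>_<index>.
compose_container_name() {
  printf '%s_%s_%s\n' "$(normalize_project_name "$1")" "$2" "$3"
}

# Example: names a script could target after "docker-compose scale aerospike=2"
# when run from a directory called "blog".
for i in 1 2; do
  compose_container_name blog aerospike "$i"
done
```

The loop prints blog_aerospike_1 and blog_aerospike_2. Passing `"$(default_project_name)"` as the first argument makes the same script follow whichever directory it is run from.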

Scale the Aerospike Cluster

We can now use Docker Compose to scale the Aerospike service:

docker-compose scale aerospike=2

So how does this work? The Interlock framework listens for Docker events such as container creation and destruction, and forwards them to a plugin (HAProxy, for example). Richard Guo has created a plugin for Aerospike that uses these events to:

Automatically add new Aerospike nodes to a Cluster when a container starts

Automatically remove Aerospike nodes from a Cluster when a container stops

In the discovery service defined above, there are two environment variables that control the network and Aerospike cluster membership:

AEROSPIKE_CLUSTER_NAME

AEROSPIKE_NETWORK_NAME

To decide which Aerospike cluster a new container should join, the plugin compares the value of AEROSPIKE_CLUSTER_NAME with the value of the container's com.aerospike.cluster label. If they match, the plugin uses AEROSPIKE_NETWORK_NAME to obtain IP address information from the correct network, so that the existing nodes can be contacted and informed of the new node.
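The same matching step can be approximated from the shell with `docker inspect`. This is a sketch, not the plugin's actual source, which lives in the Interlock plugin repository. It assumes the label and network names used in this post, and the helper names are hypothetical.

```shell
#!/bin/sh
# Sketch of the matching step described above, done with docker inspect.
# This is NOT the plugin's source; values default to those used in this post.
AEROSPIKE_CLUSTER_NAME=${AEROSPIKE_CLUSTER_NAME:-awesome-counter}
AEROSPIKE_NETWORK_NAME=${AEROSPIKE_NETWORK_NAME:-prod}

# True if a com.aerospike.cluster label value matches our cluster name.
label_matches_cluster() {
  [ -n "$1" ] && [ "$1" = "$AEROSPIKE_CLUSTER_NAME" ]
}

# The container's com.aerospike.cluster label (empty when the label is unset).
container_cluster_label() {
  docker inspect --format '{{ index .Config.Labels "com.aerospike.cluster" }}' "$1"
}

# The container's IP address on $AEROSPIKE_NETWORK_NAME (errors if not attached).
container_ip_on_network() {
  docker inspect \
    --format "{{ (index .NetworkSettings.Networks \"$AEROSPIKE_NETWORK_NAME\").IPAddress }}" \
    "$1"
}

# Hypothetical helper: report whether a container would be added, and at which IP.
consider_container() {
  label=$(container_cluster_label "$1") || return 1
  if label_matches_cluster "$label"; then
    ip=$(container_ip_on_network "$1") || return 1
    echo "$1 would join $AEROSPIKE_CLUSTER_NAME at $ip"
  else
    echo "$1 ignored (cluster label: '$label')"
  fi
}
```

For example, with the Swarm environment from `docker-machine env --swarm blog-0` loaded, `consider_container blog_aerospike_2` reports the prod address the new node would be announced with.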

View the Changed Aerospike Cluster Definition

Using the asadm tool, we can view the changed Aerospike cluster definition:

docker run -it --rm --net prod aerospike/aerospike-tools \
    asadm -e i -h blog_aerospike_1

As you start and stop Aerospike containers, the resulting changes in cluster topology will be reflected in the output of this command.
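The affinity rule allows only one Aerospike container per Docker host, so `docker-compose scale aerospike=3` needs a third Swarm node first. The following sketch repeats the blog-1 `docker-machine create` flags for additional hosts. It assumes the `DRIVER`, `ENGINE_OPT` and `B2D_OPT` variables from the Swarm setup above are still set. The function names and the host name blog-2 are hypothetical.

```shell
#!/bin/sh
# Sketch: add another Swarm node configured like blog-1, so the affinity
# rule has somewhere to place the next Aerospike container.

# Print the Swarm/overlay flags for a non-master node, given the Consul host IP.
swarm_node_flags() {
  consul="consul://$1:8500"
  printf '%s\n' \
    "--swarm" \
    "--swarm-discovery=$consul" \
    "--engine-opt=cluster-store=$consul" \
    "--engine-opt=cluster-advertise=eth0:2376"
}

# Create the node. Requires docker-machine and the DRIVER/ENGINE_OPT/B2D_OPT
# variables from the setup section (left unquoted, as in the original commands).
add_swarm_node() {
  consul_ip=$(docker-machine ip blog-consul) || return 1
  # shellcheck disable=SC2046  # word-splitting of the flag list is intended
  docker-machine create --driver "$DRIVER" $ENGINE_OPT $B2D_OPT \
    $(swarm_node_flags "$consul_ip") "$1"
}

# Usage (not run here):
#   add_swarm_node blog-2
#   docker-compose scale aerospike=3
```

None of the flags contain spaces, so relying on word-splitting of the function's output is safe here. Flags with spaces would call for a different approach.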

Conclusion

Docker makes the orchestration of complex services simple and repeatable. Aerospike is Docker’s natural complement as the persistent store for the application you build and deploy with Docker. It is well-suited to Container deployments, and its shared-nothing architecture makes deploying and responding to changes in the container topology simple. Aerospike is focused on Speed at Scale. This means that Aerospike is not just great to start with; it will also scale seamlessly as your application grows.

Where to Get More Information

For more information about Docker and Aerospike, please refer to the following:

The full deployment guide for Docker and Aerospike can be found in this documentation

The scripts and code for the above can be found on GitHub

Aerospike Interlock Plugin on Docker Hub and source code

Interlock framework by Evan Hazlett