Aerospike Database 5.5: XDR Convergence

Aerospike is pleased to announce Aerospike 5.5, which is now available to customers.

XDR Convergence

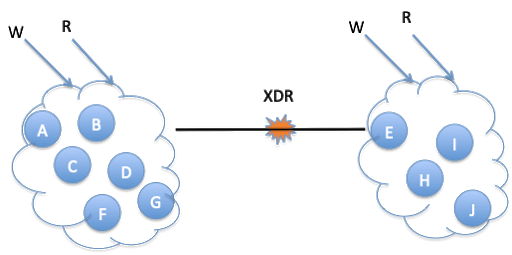

Aerospike 5.2 introduced an improved algorithm for shipping modified bins that guarantees that only the exact set of changed bins is shipped in most situations. When Aerospike clusters are connected to each other using XDR, as illustrated in Figure 1, they run in a permanent split-brain mode where application reads and writes are allowed in both systems. In such a situation, concurrent updates to replicas of the same bin of a record in multiple clusters could result in the bin’s data becoming permanently out of sync.

Figure 1: Active-Active XDR is a permanent split-brain system

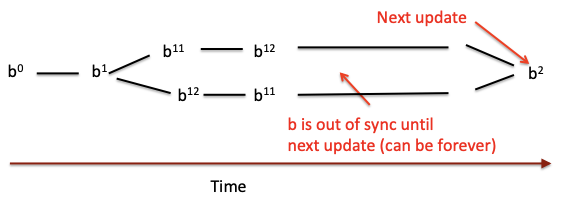

In the example shown in Figure 2, writes b0 and b1 are far enough apart that the asynchronous replication across clusters completes before the next write. However, writes b11 and b12 occur so close together that their replication crosses over on the network: each cluster receives the other's value, and the bin values end up out of sync on the two clusters. This out-of-sync situation continues until a subsequent update to the same bin, b2, brings it back in sync. Such unpredictable behavior is undesirable for most applications using the database.

Figure 2: Out of sync bin values

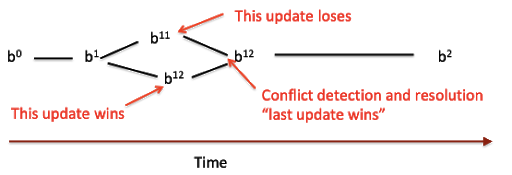

In Aerospike 5.5, we introduce bin convergence support, which prevents concurrent bin updates from causing bin values to become out of sync indefinitely. To provide bin convergence, Aerospike uses a conflict detection and resolution strategy based on a combination of last-update-time (LUT) and source id (src-id), which represents the location where the update happens.

Bin convergence uses a ‘last write wins’ model. Key components of the scheme include:

Every update to a bin generates a bin level timestamp (LUT), storing the timestamp in the record’s meta-data.

Changes to the bin, along with meta-data, including the LUT, are shipped across XDR links.

The destination compares the LUT stored with the bin in its local copy of the record against the LUT shipped with the updated bin from the remote copy, and uses a last-write-wins strategy to decide whether to overwrite the stored value or keep it. The clock time stored as the LUT is the basis for the decision; all clusters resolve to the same value, so the same value “wins” in all locations.
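The comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Aerospike's implementation: the `BinVersion` type, the `resolve` function, and the integer timestamps are hypothetical names and values chosen for the example. The tie-break on the higher src-id follows the description of the src-id parameter later in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BinVersion:
    """Hypothetical model of one bin value plus its convergence metadata."""
    value: str
    lut: int      # last-update-time, e.g. milliseconds since some epoch
    src_id: int   # id of the cluster (DC) where the write originated


def resolve(local: BinVersion, incoming: BinVersion) -> BinVersion:
    """Return the version that wins under last-write-wins.

    The later LUT wins; if the LUTs are equal, the higher src-id wins.
    """
    if (incoming.lut, incoming.src_id) > (local.lut, local.src_id):
        return incoming
    return local


# Two concurrent writes to the same bin, one per cluster (as in Figure 2).
b11 = BinVersion("b11", lut=1000, src_id=1)  # written at cluster 1
b12 = BinVersion("b12", lut=1003, src_id=2)  # written at cluster 2

# Each cluster holds its own write, then receives the other's over XDR.
cluster1 = resolve(local=b11, incoming=b12)
cluster2 = resolve(local=b12, incoming=b11)

print(cluster1.value, cluster2.value)  # both clusters settle on b12
```

Because both clusters apply the same deterministic comparison to the same pair of versions, the order in which the shipped writes arrive does not change the outcome.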

Note that due to the asynchronous nature of XDR replication, reconciliation of concurrent writes may not occur before subsequent reads or writes to the bin. It is therefore possible for an application to read the value from a recent write that may later be overwritten during reconciliation. Additionally, the repair process is asynchronous and not tied to an application read or write operation. The repair is guaranteed to happen in a finite time as long as the XDR links are functional.

With convergence enabled for the bin update scenario (Figure 3), the value b12 wins over b11 and the new value b12 is converged across both clusters reasonably quickly. This process makes the application user experience more predictable as values returned by the reads to any copy of the bin are identical once the convergence happens. When XDR links are up, the convergence occurs within an interval close to the network latency between the two clusters. Note that the network latency can be anywhere from a few milliseconds to a few hundred milliseconds, depending on the geographical distance spanning the XDR link between clusters.

Figure 3: Conflict detection and resolution example

Note that the clock times of the source and destination cluster nodes are the basis for the convergence algorithm, even if the nodes are in geographically distant locations. The system can tolerate clock skew as long as the skew is stable between the nodes participating in updates to the same bin (i.e., clocks in different nodes move forward at the same rate, maintaining the same interval between them). However, this skew means that writes made by the node whose clock is behind will always lose during reconciliation when a conflict occurs. Furthermore, a cluster whose clock is behind will reject writes from the client for records that have already been written from another cluster whose clock is always ahead.

Moreover, if clocks in geographically distributed nodes all move forward but at varying rates, such that sometimes one cluster is ahead and sometimes another (“jumping around”), the system may encounter scenarios in which the bin values do not converge. A special case of this happens in the cloud during live-migrate events, where an instance's clock can pause and then catch up quickly at a later time. Also, to account for clock skew between two writes to the same bin at the same location in strong consistency mode, LUT values for a bin are not allowed to go backward if bin convergence is configured.

Deploying XDR bin convergence introduces three new configuration parameters:

ship-bin-luts [boolean]: This must be enabled on XDR source nodes to use bin convergence. If enabled, XDR will ship the bin-level last-update-time (LUT). These LUTs are necessary to determine the winner when resolving conflicts in mesh/active-active topologies.

conflict-resolve-writes [boolean]: This must be enabled on XDR destination nodes to use bin convergence. If enabled, the bin-level last-update-time will be stored and used to determine the winner.

src-id [integer]: Allowed values are 1-255. This parameter is necessary to use bin convergence. Each DC involved in the XDR topology must pick a unique value. This value is used to break ties that may occur with the bin-level last-update-time. For example, if the update time is identical on two concurrent updates to the same bin, the update that occurred at the location with the higher src-id wins.
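As a rough aid for reasoning about these requirements, the sketch below checks a description of a topology against the three rules stated above. It is not an Aerospike tool: the `check_convergence_settings` function, the dictionary layout, and the DC names are hypothetical. It also assumes an active-active mesh in which every DC is both an XDR source and an XDR destination, so both boolean parameters are expected everywhere.

```python
def check_convergence_settings(dcs: dict) -> list:
    """Return a list of problems; an empty list means the settings look consistent.

    `dcs` maps a (hypothetical) DC name to a dict of the three parameters.
    """
    problems = []
    seen = {}  # src-id -> name of the DC that first used it
    for name, cfg in dcs.items():
        src_id = cfg.get("src-id")
        # bool is a subclass of int in Python, so exclude it explicitly.
        valid_int = isinstance(src_id, int) and not isinstance(src_id, bool)
        if not valid_int or not 1 <= src_id <= 255:
            problems.append(f"{name}: src-id must be an integer in 1-255")
        elif src_id in seen:
            problems.append(f"{name}: src-id {src_id} already used by {seen[src_id]}")
        else:
            seen[src_id] = name
        for flag in ("ship-bin-luts", "conflict-resolve-writes"):
            if cfg.get(flag) is not True:
                problems.append(f"{name}: {flag} must be enabled")
    return problems


topology = {
    "dc-east": {"src-id": 1, "ship-bin-luts": True, "conflict-resolve-writes": True},
    "dc-west": {"src-id": 1, "ship-bin-luts": True, "conflict-resolve-writes": False},
}
for problem in check_convergence_settings(topology):
    print(problem)
```

For this example topology the check reports two problems for dc-west: the src-id it shares with dc-east, and the disabled conflict-resolve-writes setting.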

Minor Features

Aerospike 5.5 includes some minor features, the most notable of which we describe below. As always, refer to the 5.5 release notes for complete details and restrictions. Some of these features are available only in the Aerospike Enterprise Edition.

Using Multiple Feature Key Files

It is now possible to specify multiple feature key files in Aerospike Database Enterprise Edition configurations through either or both of the following mechanisms:

multiple instances of the feature-key-file configuration parameter

specifying a directory with feature-key-file. All files within the directory are assumed to contain feature keys. The server will not start if other files are present.

The processing of the files occurs in stages. First, any expired files (either because of date or version) are ignored: there must be at least one valid file, or the server will not start. Next, the boolean feature keys in the remaining files are or’ed together. For example, if asdb-compression is enabled in one file, and asdb-pmem in another, both features will be enabled.

The cluster-nodes-limit feature is the only one taking a numeric value, using the largest value across all files. Since zero means no limit on the number of nodes, it will take precedence over any other value.
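The merge rules above can be illustrated with a short Python sketch. It is a simplified model, not the server's code: the `merge_feature_keys` function and the dictionary representation of a feature key file are hypothetical, expired files are assumed to have been filtered out already, and files that do not mention cluster-nodes-limit are simply ignored for that key (the behavior in that case is not described here).

```python
def merge_feature_keys(files: list) -> dict:
    """Merge already-validated (non-expired) feature key files.

    Each file is modelled as a dict of feature name -> value.
    """
    if not files:
        # Mirrors the rule that at least one valid file must exist.
        raise ValueError("at least one valid feature key file is required")

    merged = {}
    limits = []
    for keys in files:
        for name, value in keys.items():
            if name == "cluster-nodes-limit":
                limits.append(value)
            else:
                # Boolean features are OR'ed together across files.
                merged[name] = merged.get(name, False) or bool(value)

    if limits:
        # Zero means "no limit", so it takes precedence over any other value;
        # otherwise the largest value across all files is used.
        merged["cluster-nodes-limit"] = 0 if 0 in limits else max(limits)
    return merged


file_a = {"asdb-compression": True, "asdb-pmem": False, "cluster-nodes-limit": 8}
file_b = {"asdb-pmem": True, "cluster-nodes-limit": 0}
print(merge_feature_keys([file_a, file_b]))
```

In this example both asdb-compression and asdb-pmem end up enabled, and the unlimited node count from the second file overrides the limit of 8 in the first.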

New Duplicate Resolution Statistics

Duplicate resolution is a conflict resolution process initiated when Aerospike Database is recovering from a node failure or a partitioned cluster. The conflict-resolution-policy configuration parameter determines the exact policy employed. Starting in Aerospike 5.5, three new statistics are available to monitor this process on a per-namespace basis:

dup_res_ask: the number of duplicate resolution requests made by the node to other individual nodes

dup_res_respond_read: the number of duplicate resolution requests handled by the node where the record was read

dup_res_respond_no_read: the number of duplicate resolution requests handled by the node without reading the record

The statistics are reported in the log using the following format:

<namespace-identifier> dup-res: ask <nnn> respond (<nnn>,<nnn>)

where the namespace identifier appears without brackets, and <nnn> is an integer (also without brackets).
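For monitoring scripts that scrape the log, a line in this format could be parsed roughly as follows. The `parse_dup_res` helper and the sample line are made up for illustration. The sketch also assumes that the two values in parentheses are reported in the order (read, no-read), matching the order in which the statistics are listed above; that mapping should be confirmed against the release notes before relying on it.

```python
import re

# Matches: <namespace-identifier> dup-res: ask <nnn> respond (<nnn>,<nnn>)
DUP_RES_RE = re.compile(
    r"(?P<namespace>\S+) dup-res: ask (?P<ask>\d+) "
    r"respond \((?P<read>\d+),(?P<no_read>\d+)\)"
)


def parse_dup_res(line: str):
    """Extract the duplicate resolution counters from one log line.

    Returns None if the line does not contain a dup-res entry.
    """
    match = DUP_RES_RE.search(line)
    if match is None:
        return None
    return {
        "namespace": match.group("namespace"),
        "dup_res_ask": int(match.group("ask")),
        # Assumed order of the pair: (read, no-read).
        "dup_res_respond_read": int(match.group("read")),
        "dup_res_respond_no_read": int(match.group("no_read")),
    }


sample = "test dup-res: ask 12 respond (7,5)"  # made-up sample line
print(parse_dup_res(sample))
```

Using `search` rather than `match` lets the same pattern work when the entry is preceded by a timestamp or other log prefix.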

Support for Boolean Values in Maps & Lists

Boolean map and list values are stored internally in msgpack format as booleans, but were previously returned to application code (UDFs and client APIs) as integers (1 or 0). Starting with Aerospike 5.5, boolean map and list values are returned to applications as true booleans (true or false). Implementing this feature required matching changes on both server and client. The 5.1 C client has been upgraded to support it, and other clients will follow. Application code has to be changed as follows:

UDFs that run on Aerospike 5.5+ have to be modified to deal with booleans in CDT operations (rather than integers)

Code running on 5.1.0+ C client libraries that retrieves booleans from CDT operations likewise has to be modified to handle this data type

UDFs execute on the server, so this change does not depend on the client library version. It is recommended that applications affected by this change upgrade both server and client libraries at the same time. For further detail on how applications using the C client library need to change, refer to the C client library documentation.

Systemd Startup Script Improvement

The Linux systemd daemon is responsible for launching services at boot time in a coordinated manner. A key feature of systemd is that of targets, which specify that a given service is not to be launched until the server reaches a certain level of functionality.

Starting with Aerospike 5.5, the systemd script does not launch the database until the network-online target is achieved. Previously, launch was triggered by the network target. The difference between these targets is that the network target is reached when the process of bringing up the network begins, whereas the network-online target is not reached until the network is fully available and connections to other systems are possible.

The advantage of waiting for full network availability is that it suppresses spurious warnings that would otherwise be generated by the server attempting to initiate fabric connections prematurely.