Aerospike on EKS (AWS K8s)

Much like Java, Kubernetes offers the promise of ‘write once, run anywhere’. In the early days of Java the wry riposte, a little unfairly, was ‘write once, debug everywhere’. To a certain extent this is the position we are in today with the various flavours of Kubernetes out there: getting up and running is different on Google Cloud Platform vs AWS vs Azure vs local (e.g. Minikube), and a handful of well-placed pointers can be a great time saver.

This article is about getting Aerospike up and running on Amazon’s Kubernetes service, EKS. As it happens, it has little to say about Aerospike that is specific to EKS; it is instead about the things you need to do to get EKS up and running so you can start to run Aerospike on top of it. For users of Aerospike, we just want to make it as easy as possible to run it wherever you’d like.

Pre-requisites

There are four things you need:

eksctl – the AWS command line utility allowing you to administer (e.g. setup/teardown) your AWS Kubernetes cluster. We use it here to set up the EKS cluster itself. Details of how to install can be found at https://github.com/weaveworks/eksctl#installation.

helm – the package manager for Kubernetes applications. We use it here to deploy our Aerospike cluster. Details of how to install at https://helm.sh/docs/intro/install/.

kubectl – the Kubernetes client allowing you to deploy and administer Kubernetes applications. We use it here to perform ad-hoc Kubernetes operations. Installation details at https://kubernetes.io/docs/tasks/tools/install-kubectl/.

The AWS CLI, allowing command line management of AWS services. We use it here for credential management. Installation details at https://docs.aws.amazon.com/cli/latest/userguide/install-cliv2.html.

The script below does all of this for CentOS, and can readily be adapted for use in other environments. You need to run it using sudo or as root.

https://gist.github.com/ken-tune/f1d7e95a183b1d0618bd7b2f90db3c31
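
Once everything is installed, it can be worth confirming that all four tools are on your PATH before continuing. The snippet below is a minimal sketch of such a check; the function name missing_tools is my own invention and is not part of any of these tools.

```shell
#!/bin/sh
# Print the name of every tool in the argument list that is not on the PATH.
# missing_tools is a hypothetical helper name chosen for this sketch.
missing_tools() {
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
    fi
  done
}

# Check the four prerequisites listed in this article.
missing=$(missing_tools eksctl helm kubectl aws)
if [ -n "$missing" ]; then
  echo "Not found on PATH:" $missing
else
  echo "All prerequisites found"
fi
```

This only checks that the commands can be found; it says nothing about their versions.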

Credentials

In order to use eksctl you will need to set up an AWS user whose credentials eksctl will run under. This is a slightly involved topic, and I have therefore split it out into a separate article:

AWS Credentials for EKS (https://dev.to/aerospike/aws-credentials-for-eks-3a2i)

This article shows you how to set up the IAM policy you need as well as user and group configuration. It concludes with use of aws configure to store credentials so eksctl can make use of them.

Aerospike Up and Running

Now we’re in a position to launch our Aerospike cluster. First we need to create our Kubernetes cluster.

eksctl create cluster --name aero-k8s-demo

Be warned, this takes in the order of 30 minutes to complete. Once complete, type kubectl config get-contexts to verify correct setup. A context contains Kubernetes access parameters: the user, the cluster and, if set, the default namespace. Your output should be similar to

Next, we retrieve the helm chart that supports cluster setup, and add it to our local repository.

helm repo add aerospike https://aerospike.github.io/kubernetes-operator/

We are now able to install our Aerospike cluster. We will also enable the monitoring capability.

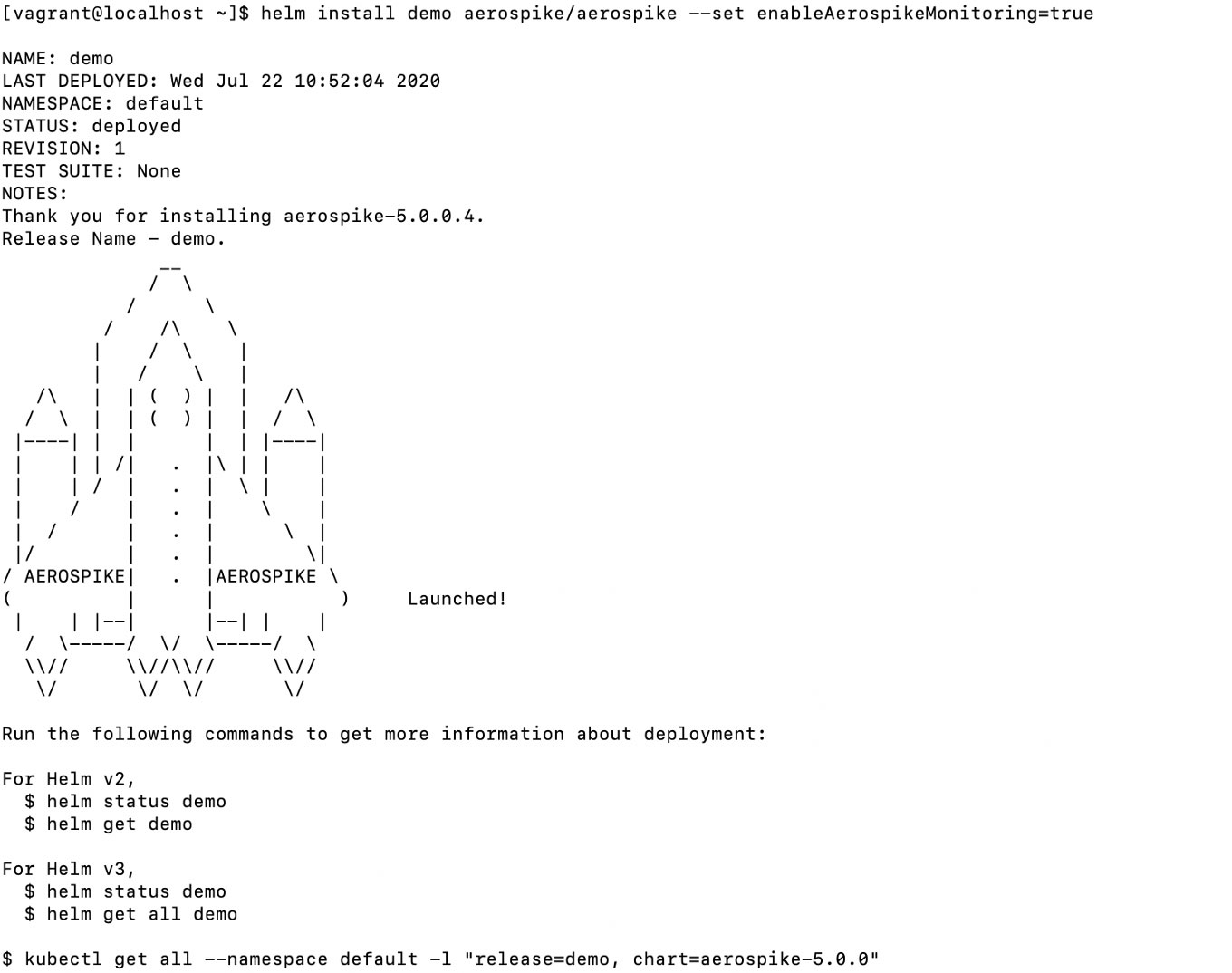

helm install demo aerospike/aerospike \
  --set enableAerospikeMonitoring=true \
  --set rbac.create=true

You should see something like

As the last line in the screenshot suggests, we can take a look at our Kubernetes objects via

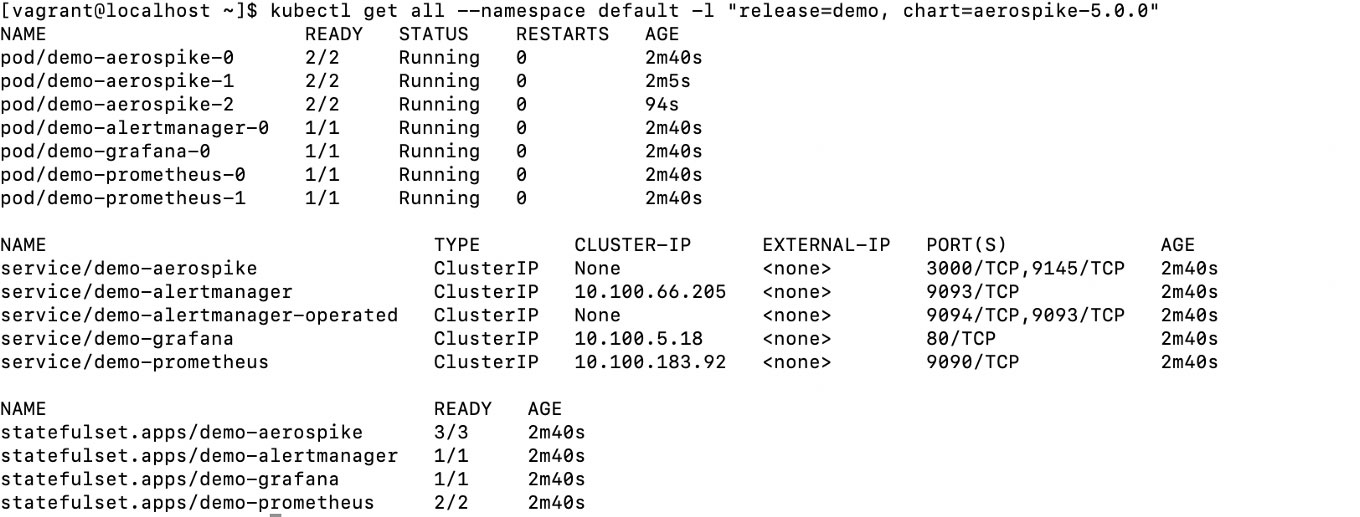

kubectl get all --namespace default -l "release=demo, chart=aerospike-5.0.0"

All your pods should be in the Running state before proceeding. If not, you can use the command below to watch them until they are.

kubectl get pods --watch
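
If you would rather script this check than watch the output by eye, one option is to count the pods that are not yet Running. The snippet below is a minimal sketch, assuming the default column layout of kubectl get pods (NAME, READY, STATUS, RESTARTS, AGE); the helper name count_not_running is hypothetical.

```shell
#!/bin/sh
# Count pods whose STATUS column (third field) is not "Running".
# Expects the output of `kubectl get pods --no-headers` on stdin.
# count_not_running is a hypothetical helper name chosen for this sketch.
count_not_running() {
  awk '$3 != "Running" { n++ } END { print n + 0 }'
}

# Usage, assuming kubectl is pointed at the cluster created above:
#   kubectl get pods --no-headers | count_not_running
```

A result of 0 means every pod reports Running. Note that this treats any other status, including Completed, as not running. kubectl also has a built-in alternative, kubectl wait --for=condition=Ready pods --all --timeout=300s, which may fit better if all your pods are long-running.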

Access Grafana Monitoring

In the screenshot above, you can see that the Grafana monitoring service is running on port 80. We will make that available locally on port 8080 via port forwarding.

kubectl port-forward service/demo-grafana 8080:80

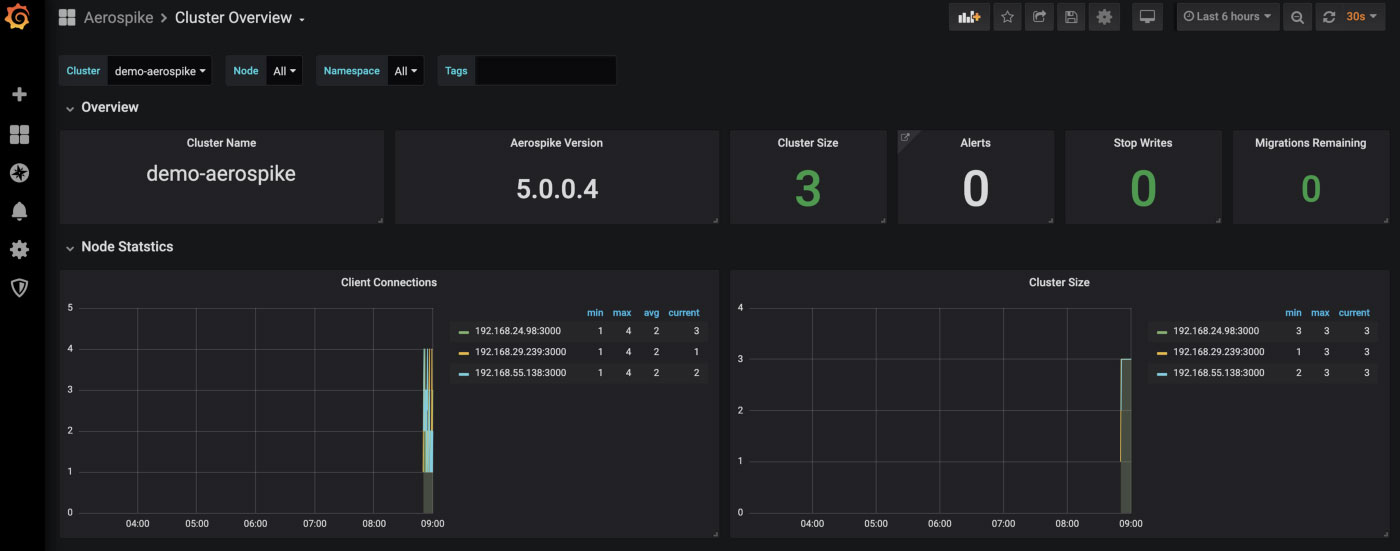

We can connect to this using a browser at http://yourhostname:8080. The credentials are admin/admin. Note that Grafana will make you change your password on first login. Select Home -> Cluster Overview at top left and you should see something like

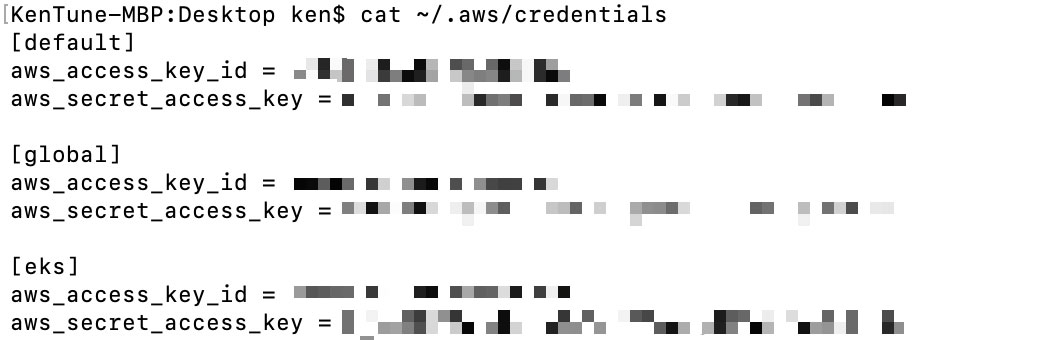

Note that you can do the above port forward in any environment, not just where you did the creation. You will need to make sure you have the same credentials in ~/.aws/credentials and the required kubectl context. Below I have added my eks credentials to my aws credentials file under the eks heading.

eksctl then has a neat utility allowing you to get your K8s context into your alternative environment. I’m taking care to run the command under the eks account (p flag) and in the region where the K8s cluster was created (r flag – get this from the cluster name if you are not sure).

eksctl utils write-kubeconfig --cluster aero-k8s-demo -p eks -r <REGION>
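
Before running the command in a new environment, you may want to confirm that the profile really is present in your credentials file. The snippet below is a minimal sketch, assuming the standard ~/.aws/credentials layout in which each profile starts with a [name] line; has_aws_profile is a hypothetical helper name.

```shell
#!/bin/sh
# Succeed if the credentials file contains a line that is exactly [profile].
# The file defaults to ~/.aws/credentials when no second argument is given.
# has_aws_profile is a hypothetical helper name chosen for this sketch.
has_aws_profile() {
  profile=$1
  file=${2:-$HOME/.aws/credentials}
  [ -f "$file" ] && grep -q -x -F "[$profile]" "$file"
}

# Usage, assuming the profile is called eks as in this article:
#   has_aws_profile eks || echo "No eks profile found"
```

The match is deliberately strict, so a header with trailing whitespace will not be found. It also only confirms that the heading exists, not that the keys beneath it are valid.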

Using Aerospike

We’ll demonstrate use of Aerospike via our benchmarking software. From the kubernetes-aws project

kubectl create -f aero-client-deployment.yml

will create a single pod with a container with our benchmarking software installed. You can see the Dockerfile for the images used in the aerospike-java-client-build directory.

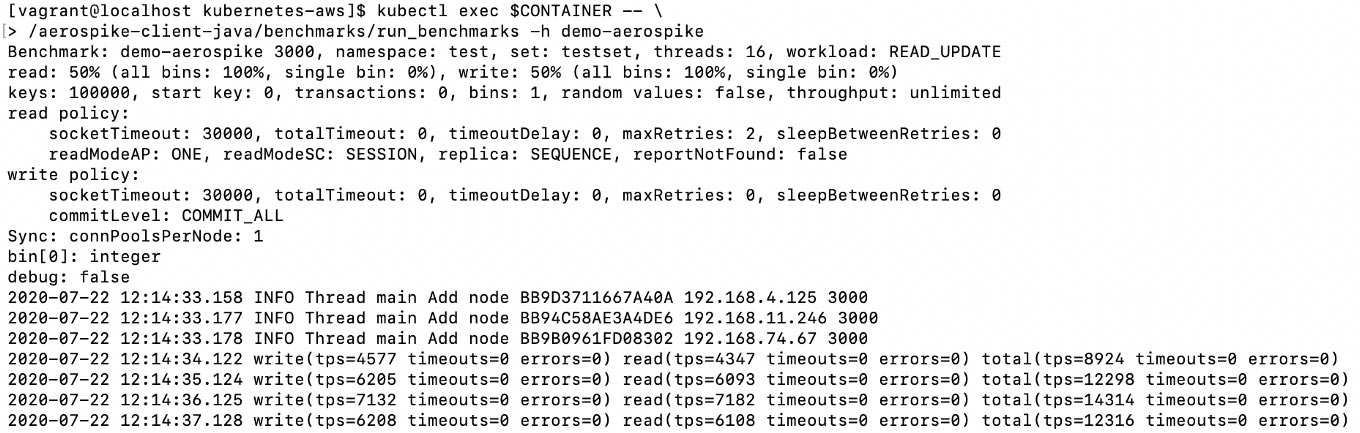

The commands below, respectively:

Retrieve the name of the container running the java client

Run the run_benchmarks command against that container.

CONTAINER=$(kubectl get pod -l "app=aerospike-java-client" \
  -o jsonpath="{.items[0].metadata.name}")

kubectl exec $CONTAINER -- \
  /aerospike-client-java/benchmarks/run_benchmarks -h demo-aerospike

The output will be similar to

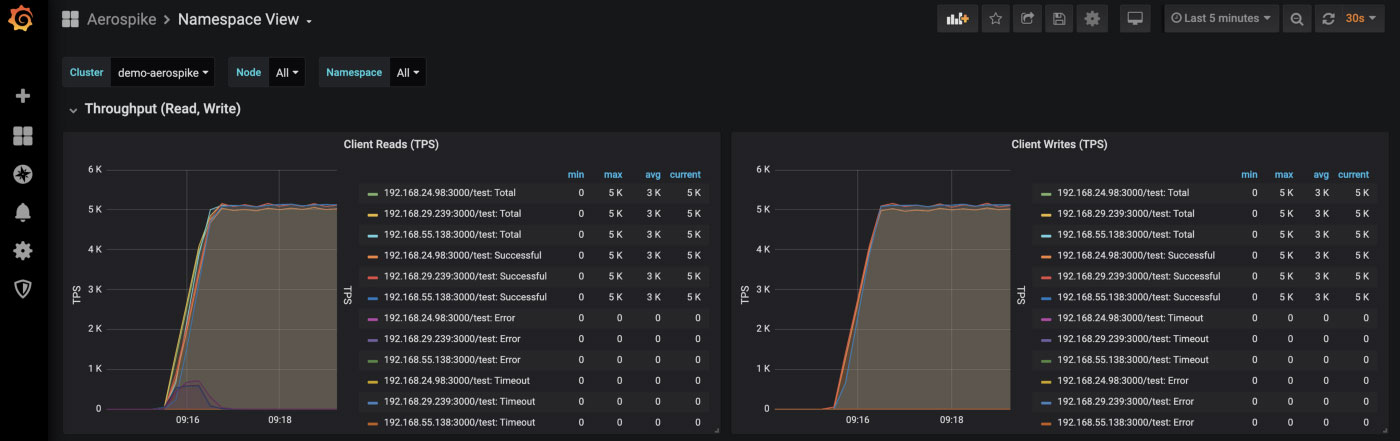

If you go back to Grafana, change ‘Cluster Overview’ to ‘Namespace view’ at top left, set the time period (top right) to ‘Last 5 minutes’ and wait a couple of minutes, your output will be similar to

Read and write metrics for benchmarking activity

Tidying Up

To remove the java client

kubectl delete -f aero-client-deployment.yml

To uninstall the Aerospike stack

helm uninstall demo

To delete your EKS cluster

eksctl delete cluster aero-k8s-demo
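
If you tear down and recreate the demo often, the three steps can be wrapped in a small script. The snippet below is a minimal sketch; the helper names run and teardown and the DRY_RUN variable are my own, and the cluster is named with the --name flag, mirroring the create command used earlier.

```shell
#!/bin/sh
# Run a command, or just print it when DRY_RUN=1.
# run, teardown and DRY_RUN are hypothetical names chosen for this sketch.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Remove the Java client, then the Aerospike stack, then the EKS cluster.
# The && chain stops at the first step that fails.
teardown() {
  run kubectl delete -f aero-client-deployment.yml &&
  run helm uninstall demo &&
  run eksctl delete cluster --name aero-k8s-demo
}

# Preview the commands without running anything:
#   DRY_RUN=1 teardown
```

Stopping at the first failure is a design choice, intended to let you look at what went wrong before the cluster itself is removed.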

Conclusion

The above gets you up and running with Aerospike on EKS, the AWS Kubernetes service. Fundamentally it is about the steps you need to take to get your EKS cluster running. From that point on (helm repo add onwards) you can use the same steps against any Kubernetes cluster, be it EKS, GCP or minikube based.

Cover image with thanks to Kent Pilcher