Multi Record Transactions for Aerospike

https://github.com/aerospike-examples/atomicMultiRecordTxn

Aerospike is a high performance key-value database. It’s aimed at institutions and use-cases that need high throughput ( 100k tps+), with low latency (95% completion in <1ms), while managing large amounts of data (Tb+) with 100% uptime, scalability and low cost.

Because that’s what we offer, we don’t, at the server level, support multi-record transactions (MRT). If that’s what you need, then you will need two phase locking and two phase commit (assuming a distributed system). This will slow your system down, and will scale non-linearly, in that if you double your transaction volume, your time spent waiting for locks will more than double. If Aerospike did that it wouldn’t be a high performance database. In fact it would be just like any number of other databases and what would be the point in that?

Furthermore, believe it or not, despite the fact that our customers ask for many things, multi-record transactions are not high on the list. This is because in practice they’re less necessary than people think outside of a relational database. In an RDBMS you do need MRT because you shard your insert/update across tables in a non-natural way. In a key value or key object database, an insert/update that might span multiple tables is actually a single record change.

Even the textbook example of a change that supposedly has to be atomic, the transfer of money between two bank accounts, is not actually atomic in the real world. You can see this for yourself if you contemplate the fact that bank transfers are not instantaneous — far from it. That is because bank transfers cross system boundaries — and the credits and debits are not co-ordinated as a distributed transaction.

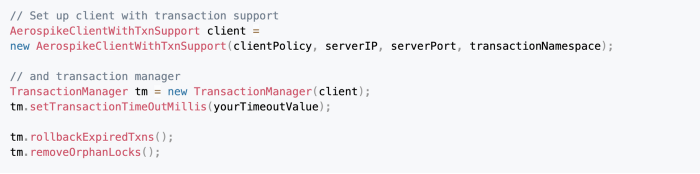

This article is however concerned with multi-record transactions and specifically executing them using Aerospike. Although the server will not natively support them, they can be achieved in software via use of the capabilities Aerospike offers.

Although, as discussed above, the need for MRT in key value databases is more limited than you might expect, there may well still be use cases that demand it. An example might be a workflow system – taking items off a queue and dispatching to other queues. The transfer of work item needs to be atomic — you don’t want a work item to potentially be processed twice or not at all due to a transactional failure.

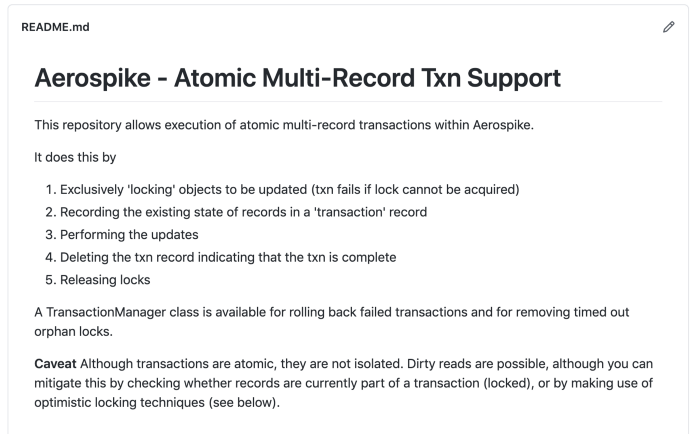

To that end, I’ve put together multi-record-txn, a package that supports atomic multi record updates in Aerospike. At the heart of this is our ability to create locks using the primitives we offer. A record can be created with a CREATE_ONLY flag and this is used for locking purposes — if a lock record for an object already exists, CREATE_ONLY will fail.

We build on this by storing the state of our records prior to update in a transaction record, which is deleted once the updates are complete. Following that, we release our locks.

Rollback, if needed, is accomplished by restoring the stored values. We supply calls for rolling back records of more than a certain age, and for releasing locks, similarly of a supplied longevity. Full details can be found in the project README.

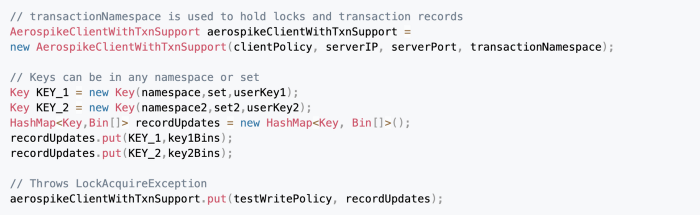

If it’s that easy, why don’t you put it into the product? Good question. The API comes with caveats, the principle one being that dirty reads are possible i.e. reads of values that may be rolled back. Specifically we are not offering isolation. The API does however offer the ability to lock records prior to update and to check locks, so in principle, if you were to insist on all gets being preceded by locks full isolation would be achieved. That said you wouldn’t have a high performance database anymore. The correct thing to do then is to use judiciously as you would with any tool. Also offered is the ability to do optimistic locking by supplying the generation ( see Aerospike FAQ ) of the record, with transaction failure occurring if generation count does not match what is expected. This keeps you secure when other database users may non-transactionally update records, without incurring the overhead of locking.

It is worth saying at this point, that the value of this API is much greater if using Aerospike Enterprise ( rather than Community Edition ) and in particular making use of Strong Consistency. Strong consistency gives you the guarantee that duplicate records in your database ( necessary for resilience purposes ) will not ever experience divergence. If you do not have this guarantee ( which very few databases in our performance range offer ) then there is potential for this to occur in the event of network partitions and process crashes. Divergence of records here would mean locks or transaction records being lost in a sub-cluster experiencing a partition event ( or process crash ). Strong Consistency gives you a guarantee this will not happen.

Usage

Multi record put that will succeed or fail atomically

Atomic multi-record incorporating generation check

So what happens if my transaction fails part way through?

Rollback

If transactions can they will self unwind. This will be done on a lock acquire or generation check exception.

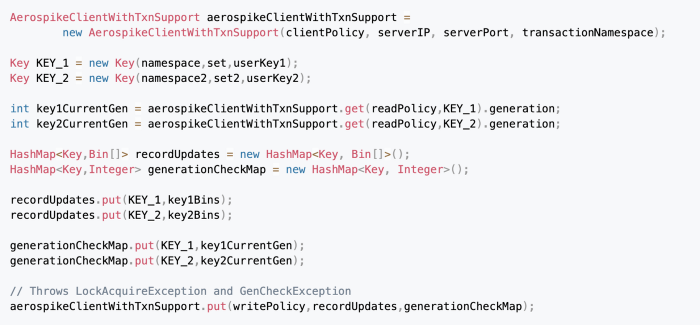

This may of course not be possible in the event of a network failure for example. For that we have the rollback call. This will (see below) allow rollback of all transactions of more than a (specified by the user) certain age, together with any orphan locks i.e. locks not associated with transactions ( the absence of a transaction record means the transaction completed, but the transaction process failed while unwinding the locks ).

Rollback of expired transactions / locks

Testing

But how do I know it’s sound? Try testing it of course. The README goes into some detail on this point and the test classes even more so. If you think something has been missed let me know.

More Detail

The FAQ covers a number of questions asked to date. Please do read this section and also the caveats section of the README ( in fact all of the README ) if you are considering using this.

Further direction

An obvious next step to take would be to incorporate two-phase locking i.e. supporting shared read locks as well as exclusive write locks in order to reduce contention.

Another possibility is that the locks are on the records themselves, rather than being separate records — this might optimize single record use.

Finally, in this post, Martin Kleppmann notes that this method may be problematic if there is the possibility that transactions can pause for unexpectedly long periods (exceeding the period you would reasonably expect the transaction to complete in). If you are considering using this API, you should consider the points he raises. His suggestion of ‘fencing tokens’ is an option for incorporating into this API if there is further interest.

To that end, please let me know if you use this API, and then I’ll know if there’s appetite for more.

Any questions/comments — please feed back through the GitHub issues facility.