Simple Load and Store with Aerospike Data on Spark

For an interactive Jupyter notebook experience

This notebook shows how to load data from and store processed data to Aerospike Database on Spark. The data transfer is enabled by the Aerospike Connector for Spark.

Setup

Execute the code cells in this section to set up Aerospike Server, Spark Server, and Spark Connector.

Ensure Database Is Running

This notebook requires that Aerospike Database is running.

!asd >& /dev/null!pgrep -x asd >/dev/null && echo "Aerospike database is running!" || echo "**Aerospike database is not running!**"Aerospike database is running!Initialize Spark

We will be using Spark functionality in this notebook.

Initialize Paths and Env Variables

# directory where spark notebook requisites are installedSPARK_NB_DIR = '/opt/spark-nb'SPARK_DIR = 'spark-dir-link'SPARK_HOME = SPARK_NB_DIR + '/' + SPARK_DIRAEROSPIKE_JAR = 'aerospike-jar-link'AEROSPIKE_JAR_PATH = SPARK_NB_DIR + '/' + AEROSPIKE_JAR# IP Address or DNS name for one host in your Aerospike clusterAS_HOST ="localhost"# Name of one of your namespaces. Type 'show namespaces' at the aql prompt if you are not sureAS_NAMESPACE = "test"AS_PORT = 3000 # Usually 3000, but change here if notAS_CONNECTION_STRING = AS_HOST + ":"+ str(AS_PORT)# Locate the Spark installation using the SPARK_HOME parameter.import findsparkfindspark.init(SPARK_HOME)# Specify the Aerospike Spark Connector jar in the command used to interact with Aerospike.import osos.environ["PYSPARK_SUBMIT_ARGS"] = '--jars ' + AEROSPIKE_JAR_PATH + ' pyspark-shell'Configure Spark Session

Please visit Configuring Aerospike Connect for Spark for more information about the properties used on this page.

# importsimport pysparkfrom pyspark.context import SparkContextfrom pyspark.sql.context import SQLContextfrom pyspark.sql.session import SparkSessionfrom pyspark.sql.types import StringType, StructField, StructType, ArrayType, IntegerType, MapType, LongType, DoubleTypesc = SparkContext.getOrCreate()conf=sc._conf.setAll([("aerospike.namespace",AS_NAMESPACE),("aerospike.seedhost",AS_CONNECTION_STRING)])sc.stop()sc = pyspark.SparkContext(conf=conf)spark = SparkSession(sc)sqlContext = SQLContext(sc)Store Data into Aerospike

We will first store simple generated data to Aerospike, and then show how to load data from Aerospike.

Create Data

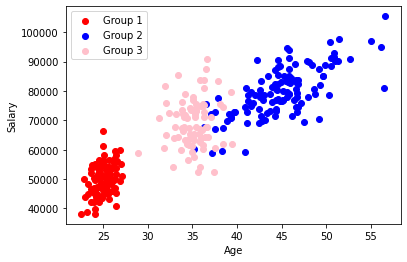

We create simple age-salary data with a specified distribution and the following structure.

- id: integer

- name: string

- age: integer

- salary: integer

# We create age vs salary data, using three different Gaussian distributionsimport numpy as npimport matplotlib.pyplot as pltimport pandas as pdimport math

# Make sure we get the same results every time this workbook is run# Otherwise we are occasionally exposed to results not working out as expectednp.random.seed(12345)

# Create covariance matrix from std devs + correlationdef covariance_matrix(std_dev_1,std_dev_2,correlation): return [[std_dev_1 ** 2, correlation * std_dev_1 * std_dev_2], [correlation * std_dev_1 * std_dev_2, std_dev_2 ** 2]]

# Return a bivariate sample given means/std dev/correlationdef age_salary_sample(distribution_params,sample_size): mean = [distribution_params["age_mean"], distribution_params["salary_mean"]] cov = covariance_matrix(distribution_params["age_std_dev"],distribution_params["salary_std_dev"], distribution_params["age_salary_correlation"]) return np.random.multivariate_normal(mean, cov, sample_size).T

# Define the characteristics of our age/salary distributionage_salary_distribution_1 = {"age_mean":25,"salary_mean":50000, "age_std_dev":1,"salary_std_dev":5000,"age_salary_correlation":0.3}

age_salary_distribution_2 = {"age_mean":45,"salary_mean":80000, "age_std_dev":4,"salary_std_dev":8000,"age_salary_correlation":0.7}

age_salary_distribution_3 = {"age_mean":35,"salary_mean":70000, "age_std_dev":2,"salary_std_dev":9000,"age_salary_correlation":0.1}

distribution_data = [age_salary_distribution_1,age_salary_distribution_2,age_salary_distribution_3]

# Sample age/salary data for each distributionssample_size_1 = 100;sample_size_2 = 120;sample_size_3 = 80;sample_sizes = [sample_size_1,sample_size_2,sample_size_3]group_1_ages,group_1_salaries = age_salary_sample(age_salary_distribution_1,sample_size=sample_size_1)group_2_ages,group_2_salaries = age_salary_sample(age_salary_distribution_2,sample_size=sample_size_2)group_3_ages,group_3_salaries = age_salary_sample(age_salary_distribution_3,sample_size=sample_size_3)

ages=np.concatenate([group_1_ages,group_2_ages,group_3_ages])salaries=np.concatenate([group_1_salaries,group_2_salaries,group_3_salaries])

print("Data created")Data createdDisplay Data

# Plot the sample datagroup_1_colour, group_2_colour, group_3_colour ='red','blue', 'pink'plt.xlabel('Age',fontsize=10)plt.ylabel("Salary",fontsize=10)

plt.scatter(group_1_ages,group_1_salaries,c=group_1_colour,label="Group 1")plt.scatter(group_2_ages,group_2_salaries,c=group_2_colour,label="Group 2")plt.scatter(group_3_ages,group_3_salaries,c=group_3_colour,label="Group 3")

plt.legend(loc='upper left')plt.show()Output

Save Data

We save the generated data in the set “salary_data” defined in aerospike.set parameter below and in the namespace “test” that was specified in the Spark context above as aerospike.namespace.

# Turn the above records into a Data Frame# First of all, create an array of arraysinputBuf = []

for i in range(0, len(ages)) : id = i + 1 # Avoid counting from zero name = "Individual: {:03d}".format(id) # Note we need to make sure values are typed correctly # salary will have type numpy.float64 - if it is not cast as below, an error will be thrown age = float(ages[i]) salary = int(salaries[i]) inputBuf.append((id, name,age,salary))

# Convert to an RDDinputRDD = spark.sparkContext.parallelize(inputBuf)

# Convert to a data frame using a schemaschema = StructType([ StructField("id", IntegerType(), True), StructField("name", StringType(), True), StructField("age", DoubleType(), True), StructField("salary",IntegerType(), True)])

inputDF=spark.createDataFrame(inputRDD,schema)

#Write the data frame to Aerospike, the id field is used as the primary keyinputDF \.write \.mode('overwrite') \.format("aerospike") \.option("aerospike.set", "salary_data")\.option("aerospike.updateByKey", "id") \.save()View Stored Data

Use the Aerospike AQL utility to view the stored data.

!aql -c "select * from test.salary_data"select * from test.salary_data+-------------------+-----+-------------------+--------+| age | id | name | salary |+-------------------+-----+-------------------+--------+| 38.84745269824979 | 139 | "Individual: 139" | 69645 || 33.97918907293992 | 272 | "Individual: 272" | 66496 || 25.45785726602289 | 76 | "Individual: 076" | 46214 || 43.1868235157955 | 147 | "Individual: 147" | 70158 || 25.88749070267593 | 79 | "Individual: 079" | 48162 || 54.98712625322746 | 160 | "Individual: 160" | 97029 || 43.66775304181341 | 145 | "Individual: 145" | 73062 || 44.35304300125181 | 198 | "Individual: 198" | 77081 || 42.5158131767696 | 105 | "Individual: 105" | 73984 || 56.51623471593592 | 196 | "Individual: 196" | 80848 || 22.91486461859545 | 66 | "Individual: 066" | 43879 || 26.13768535640089 | 84 | "Individual: 084" | 49447 || 42.95306230074591 | 152 | "Individual: 152" | 76974 || 26.41972973144746 | 5 | "Individual: 005" | 53845 || 46.55767726882384 | 180 | "Individual: 180" | 74441 || 41.81135705787229 | 211 | "Individual: 211" | 75883 || 42.46289000913426 | 209 | "Individual: 209" | 69066 || 33.88880875612859 | 290 | "Individual: 290" | 72171 || 40.9227899001288 | 168 | "Individual: 168" | 74485 || 24.45368999722374 | 64 | "Individual: 064" | 50538 || 25.29309225873203 | 11 | "Individual: 011" | 47301 || 41.08443489903744 | 121 | "Individual: 121" | 69059 || 26.43387466557501 | 67 | "Individual: 067" | 58173 || 37.83366632235595 | 218 | "Individual: 218" | 77712 || 50.4687163424899 | 162 | "Individual: 162" | 96742 || 25.25627511005183 | 26 | "Individual: 026" | 50349 || 42.70354738516746 | 119 | "Individual: 119" | 71501 || 45.11193363577878 | 217 | "Individual: 217" | 76815 || 36.73868279291174 | 247 | "Individual: 247" | 64397 || 28.88057978385297 | 261 | "Individual: 261" | 58736 || 35.14194917811759 | 254 | "Individual: 254" | 65494 || 26.33199940700781 | 55 | "Individual: 055" | 47052 || 24.18689142200467 | 88 | "Individual: 088" | 43930 || 34.61582850993675 | 294 | "Individual: 294" | 67240 || 25.83091434262693 | 45 | "Individual: 045" | 49342 || 48.70499394129772 | 108 | "Individual: 108" | 78914 || 46.81734082816165 | 216 | "Individual: 216" | 82378 || 26.91895863598789 | 3 | "Individual: 003" | 59828 || 44.81905792578452 | 123 | "Individual: 123" | 88321 || 39.31901069861998 | 264 | "Individual: 264" | 79805 || 44.55866761589274 | 171 | "Individual: 171" | 85346 || 44.59708878629509 | 182 | "Individual: 182" | 88112 || 44.30064433030314 | 131 | "Individual: 131" | 71438 || 25.50623242826136 | 97 | "Individual: 097" | 54193 || 44.35213108442584 | 109 | "Individual: 109" | 73225 || 23.64207332999615 | 35 | "Individual: 035" | 46737 || 44.62237914959798 | 164 | "Individual: 164" | 90424 || 26.26436315509618 | 54 | "Individual: 054" | 55476 || 45.84967817942239 | 125 | "Individual: 125" | 85134 || 25.000494245766 | 12 | "Individual: 012" | 66244 || 25.77585254835976 | 62 | "Individual: 062" | 51768 || 49.67310667314544 | 113 | "Individual: 113" | 85003 || 34.18772325493474 | 251 | "Individual: 251" | 63143 || 35.03714220167261 | 248 | "Individual: 248" | 76750 || 46.60405174417829 | 153 | "Individual: 153" | 83040 || 48.36055753204719 | 114 | "Individual: 114" | 88859 || 45.57430980213641 | 194 | "Individual: 194" | 94548 || 24.08476170165959 | 96 | "Individual: 096" | 46328 || 43.06512046705784 | 140 | "Individual: 140" | 78500 || 45.18908097916793 | 120 | "Individual: 120" | 80007 || 22.79485298523146 | 34 | "Individual: 034" | 49882 || 41.55122933798243 | 205 | "Individual: 205" | 74759 || 31.90256940957829 | 263 | "Individual: 263" | 81678 || 49.63878026576844 | 170 | "Individual: 170" | 84917 || 25.27733154496998 | 56 | "Individual: 056" | 47356 || 26.87692699227393 | 47 | "Individual: 047" | 49425 || 33.77525922313378 | 271 | "Individual: 271" | 59730 || 38.08819977056483 | 281 | "Individual: 281" | 75612 || 24.59843481162659 | 90 | "Individual: 090" | 52175 || 35.90122037525296 | 252 | "Individual: 252" | 54238 || 24.99849612471949 | 20 | "Individual: 020" | 44682 || 24.52123876549696 | 28 | "Individual: 028" | 56635 || 27.1259975101106 | 46 | "Individual: 046" | 50941 || 32.83361942986529 | 230 | "Individual: 230" | 54148 || 37.55702968716015 | 184 | "Individual: 184" | 67113 || 46.58598376039249 | 219 | "Individual: 219" | 80532 || 36.24668002275217 | 257 | "Individual: 257" | 80994 || 24.90196021709543 | 74 | "Individual: 074" | 48638 || 39.07899498554285 | 207 | "Individual: 207" | 72197 || 35.88192426220356 | 296 | "Individual: 296" | 83686 || 35.33158066768564 | 273 | "Individual: 273" | 71157 || 24.88165316362767 | 59 | "Individual: 059" | 51102 || 45.16418356065846 | 135 | "Individual: 135" | 76797 || 25.87531227356693 | 37 | "Individual: 037" | 53618 || 45.63248014149332 | 134 | "Individual: 134" | 77862 || 42.23627729566275 | 154 | "Individual: 154" | 90586 || 26.08538036032814 | 98 | "Individual: 098" | 53605 || 50.5714412429367 | 156 | "Individual: 156" | 88377 || 50.58123004549133 | 203 | "Individual: 203" | 91326 || 25.62963757719123 | 100 | "Individual: 100" | 56483 || 45.08999506629362 | 111 | "Individual: 111" | 76434 || 25.65864928914094 | 42 | "Individual: 042" | 54083 || 36.28570967548751 | 215 | "Individual: 215" | 67766 || 24.04479361358855 | 9 | "Individual: 009" | 39991 || 26.5744693830482 | 93 | "Individual: 093" | 56049 || 35.42212899746488 | 291 | "Individual: 291" | 67829 || 48.65041867984886 | 173 | "Individual: 173" | 80760 || 24.76376081231679 | 81 | "Individual: 081" | 47918 || 24.99476474249944 | 40 | "Individual: 040" | 46368 |+-------------------+-----+-------------------+--------++-------------------+-----+-------------------+--------+| age | id | name | salary |+-------------------+-----+-------------------+--------+| 23.30820819245901 | 77 | "Individual: 077" | 48478 || 36.20663115220678 | 259 | "Individual: 259" | 75433 || 43.87495649590418 | 188 | "Individual: 188" | 81210 || 25.95497068258835 | 27 | "Individual: 027" | 49940 || 25.32113509515113 | 50 | "Individual: 050" | 52924 || 36.26390557216931 | 288 | "Individual: 288" | 72847 || 35.9066590731213 | 224 | "Individual: 224" | 74906 || 45.58032665992633 | 212 | "Individual: 212" | 79747 || 22.5138778236622 | 17 | "Individual: 017" | 38148 || 31.98857053353701 | 231 | "Individual: 231" | 75925 || 24.8206346967664 | 53 | "Individual: 053" | 47587 || 35.36149798456427 | 299 | "Individual: 299" | 73583 || 35.37399618071812 | 262 | "Individual: 262" | 75711 || 25.76750620371324 | 48 | "Individual: 048" | 50148 || 34.15486648263456 | 276 | "Individual: 276" | 79019 || 35.26964423384393 | 260 | "Individual: 260" | 72119 || 38.29713989206178 | 137 | "Individual: 137" | 59536 || 36.5934205747499 | 249 | "Individual: 249" | 90706 || 35.18898568512165 | 186 | "Individual: 186" | 60407 || 24.55643080984997 | 29 | "Individual: 029" | 42254 || 42.54995976189524 | 165 | "Individual: 165" | 78846 || 25.62534178026618 | 36 | "Individual: 036" | 43336 || 36.350189394118 | 241 | "Individual: 241" | 83611 || 39.39998454736205 | 279 | "Individual: 279" | 61970 || 46.61088172055385 | 181 | "Individual: 181" | 83658 || 43.33014839145317 | 163 | "Individual: 163" | 72556 || 33.8810753644531 | 293 | "Individual: 293" | 67542 || 23.6363830959181 | 78 | "Individual: 078" | 52134 || 23.21107381279437 | 61 | "Individual: 061" | 38736 || 34.70631177289261 | 287 | "Individual: 287" | 65348 || 47.54481718739405 | 190 | "Individual: 190" | 69534 || 24.85865699390717 | 65 | "Individual: 065" | 47914 || 38.46678785970544 | 277 | "Individual: 277" | 62013 || 36.20944651871691 | 278 | "Individual: 278" | 61692 || 35.92189457867195 | 267 | "Individual: 267" | 64129 || 44.52394611117357 | 148 | "Individual: 148" | 80480 || 25.27611732265598 | 18 | "Individual: 018" | 45696 || 33.83509646655938 | 266 | "Individual: 266" | 76086 || 35.34473280302399 | 274 | "Individual: 274" | 74003 || 50.83291154818824 | 187 | "Individual: 187" | 92796 || 39.72256297603004 | 133 | "Individual: 133" | 72903 || 34.48040526116174 | 289 | "Individual: 289" | 56548 || 24.73452742986315 | 19 | "Individual: 019" | 43670 || 32.97228800174097 | 297 | "Individual: 297" | 63852 || 52.63646076333807 | 149 | "Individual: 149" | 90797 || 23.93457888255205 | 39 | "Individual: 039" | 46888 || 24.60518551971716 | 95 | "Individual: 095" | 48021 || 24.31403545898675 | 2 | "Individual: 002" | 47402 || 49.90462415484514 | 177 | "Individual: 177" | 88836 || 34.65124481816264 | 270 | "Individual: 270" | 63552 || 26.25147475955581 | 8 | "Individual: 008" | 56764 || 35.44696613908334 | 268 | "Individual: 268" | 73220 || 47.80817585023234 | 110 | "Individual: 110" | 90108 || 38.46251502919521 | 210 | "Individual: 210" | 67302 || 56.14454565605458 | 220 | "Individual: 220" | 94943 || 25.29664106310324 | 4 | "Individual: 004" | 50464 || 44.64230533331592 | 115 | "Individual: 115" | 77199 || 24.30898150222034 | 58 | "Individual: 058" | 44887 || 37.55862230964154 | 229 | "Individual: 229" | 60490 || 25.12969642655245 | 80 | "Individual: 080" | 56099 || 23.91100085165325 | 41 | "Individual: 041" | 50256 || 36.24289194006415 | 235 | "Individual: 235" | 75146 || 35.61575446391777 | 225 | "Individual: 225" | 52483 || 26.39629960543233 | 92 | "Individual: 092" | 45367 || 39.3403878518148 | 208 | "Individual: 208" | 71164 || 46.77721292571739 | 172 | "Individual: 172" | 72735 || 24.9717546123257 | 69 | "Individual: 069" | 56533 || 25.6669656008671 | 68 | "Individual: 068" | 52289 || 36.46788539299514 | 221 | "Individual: 221" | 67791 || 23.92403782223526 | 89 | "Individual: 089" | 45403 || 47.15950850314334 | 107 | "Individual: 107" | 76049 || 36.19305472236984 | 295 | "Individual: 295" | 60250 || 24.34404875265196 | 33 | "Individual: 033" | 54264 || 39.37109603380626 | 103 | "Individual: 103" | 70650 || 44.5656609587089 | 158 | "Individual: 158" | 82307 || 26.01254219983841 | 71 | "Individual: 071" | 45880 || 26.38695770425844 | 25 | "Individual: 025" | 51656 || 35.07469885610691 | 233 | "Individual: 233" | 63830 || 35.85881568977177 | 280 | "Individual: 280" | 62640 || 44.76894184915611 | 146 | "Individual: 146" | 76503 || 45.84237457281951 | 200 | "Individual: 200" | 78791 || 31.80357885327798 | 223 | "Individual: 223" | 60439 || 27.04404109036838 | 43 | "Individual: 043" | 55053 || 51.28350713525773 | 178 | "Individual: 178" | 90077 || 39.64510250993715 | 192 | "Individual: 192" | 72664 || 25.51215670533433 | 44 | "Individual: 044" | 45012 || 25.81033637987936 | 75 | "Individual: 075" | 53401 || 50.30396237031055 | 132 | "Individual: 132" | 78746 || 25.08233874902072 | 10 | "Individual: 010" | 58345 || 43.67491677796684 | 141 | "Individual: 141" | 79076 || 37.09568187885061 | 112 | "Individual: 112" | 72307 || 49.15999176564866 | 201 | "Individual: 201" | 87532 || 24.94527795295446 | 13 | "Individual: 013" | 47114 || 42.71790399344467 | 128 | "Individual: 128" | 83366 || 34.04728104778491 | 300 | "Individual: 300" | 67622 || 37.15863767021896 | 104 | "Individual: 104" | 58844 || 23.62771625204453 | 60 | "Individual: 060" | 53345 || 42.61365701705083 | 129 | "Individual: 129" | 77929 || 36.47287618289909 | 118 | "Individual: 118" | 75390 |+-------------------+-----+-------------------+--------++-------------------+-----+-------------------+--------+| age | id | name | salary |+-------------------+-----+-------------------+--------+| 34.72684230210607 | 232 | "Individual: 232" | 74119 || 24.10398532641521 | 52 | "Individual: 052" | 49836 || 25.385411666593 | 70 | "Individual: 070" | 48493 || 24.39423332104133 | 24 | "Individual: 024" | 51889 || 46.0366497775221 | 191 | "Individual: 191" | 84516 || 34.94841606011511 | 284 | "Individual: 284" | 59297 || 43.97255703917137 | 189 | "Individual: 189" | 76196 || 34.96677276547636 | 244 | "Individual: 244" | 61334 || 24.47428614857203 | 91 | "Individual: 091" | 47162 || 25.39547052370498 | 1 | "Individual: 001" | 48976 || 34.82926250847292 | 298 | "Individual: 298" | 73606 || 37.41393522454604 | 240 | "Individual: 240" | 52542 || 41.06986441279633 | 155 | "Individual: 155" | 72680 || 41.97248578452533 | 127 | "Individual: 127" | 78460 || 33.88136686744861 | 227 | "Individual: 227" | 73672 || 44.20600394284013 | 143 | "Individual: 143" | 87126 || 50.8215535658812 | 106 | "Individual: 106" | 91658 || 34.41467659655716 | 236 | "Individual: 236" | 70734 || 25.30086646117202 | 7 | "Individual: 007" | 51374 || 34.14119442749095 | 286 | "Individual: 286" | 71691 || 41.01749347248462 | 166 | "Individual: 166" | 80828 || 36.66253741915471 | 242 | "Individual: 242" | 67597 || 34.07230785121148 | 245 | "Individual: 245" | 71158 || 45.82761289603616 | 126 | "Individual: 126" | 91152 || 44.21687157148506 | 102 | "Individual: 102" | 82474 || 51.40563656530654 | 193 | "Individual: 193" | 97698 || 41.21442389919512 | 176 | "Individual: 176" | 78635 || 45.79820423103285 | 122 | "Individual: 122" | 87523 || 24.82054826105547 | 38 | "Individual: 038" | 55007 || 23.9936736589131 | 51 | "Individual: 051" | 42171 || 34.65214128521281 | 239 | "Individual: 239" | 61747 || 46.53337694047583 | 101 | "Individual: 101" | 89019 || 25.24920420954561 | 31 | "Individual: 031" | 54312 || 25.59043077849547 | 14 | "Individual: 014" | 51513 || 42.56064799325679 | 142 | "Individual: 142" | 80357 || 31.29085325544791 | 243 | "Individual: 243" | 70754 || 45.96865034153888 | 202 | "Individual: 202" | 82850 || 44.41128454132044 | 195 | "Individual: 195" | 73805 || 43.47469416168524 | 169 | "Individual: 169" | 85090 || 44.89546664470054 | 159 | "Individual: 159" | 82870 || 46.70560735944277 | 161 | "Individual: 161" | 79156 || 36.27366840833148 | 222 | "Individual: 222" | 71161 || 46.15744144083992 | 117 | "Individual: 117" | 71581 || 24.10182248524402 | 21 | "Individual: 021" | 38202 || 49.1773716696247 | 167 | "Individual: 167" | 70600 || 46.72555213577564 | 197 | "Individual: 197" | 77958 || 37.51982175000117 | 116 | "Individual: 116" | 72841 || 45.86378643346934 | 151 | "Individual: 151" | 93977 || 25.65434773386738 | 32 | "Individual: 032" | 50250 || 36.35641301228243 | 246 | "Individual: 246" | 64237 || 23.99306685642435 | 16 | "Individual: 016" | 46432 || 33.43000260281838 | 256 | "Individual: 256" | 64426 || 24.38949434240006 | 87 | "Individual: 087" | 53636 || 24.32137934236393 | 49 | "Individual: 049" | 50590 || 35.68815148712056 | 238 | "Individual: 238" | 65318 || 47.99445859151342 | 183 | "Individual: 183" | 77893 || 26.28219492010451 | 15 | "Individual: 015" | 50004 || 37.8137082474738 | 250 | "Individual: 250" | 83404 || 24.88105115844521 | 23 | "Individual: 023" | 43464 || 23.61137828720559 | 22 | "Individual: 022" | 42290 || 34.38071207570569 | 226 | "Individual: 226" | 61805 || 32.03178607840392 | 285 | "Individual: 285" | 65147 || 46.83459080530326 | 204 | "Individual: 204" | 83651 || 38.40860526256105 | 283 | "Individual: 283" | 74555 || 40.94561371543077 | 144 | "Individual: 144" | 59101 || 36.46912399056626 | 282 | "Individual: 282" | 87464 || 33.40940020738482 | 255 | "Individual: 255" | 85374 || 37.10710655471658 | 292 | "Individual: 292" | 71122 || 24.10221251354065 | 94 | "Individual: 094" | 45128 || 45.24321628641496 | 174 | "Individual: 174" | 85096 || 26.28828703337766 | 85 | "Individual: 085" | 59603 || 35.79943069800473 | 234 | "Individual: 234" | 67135 || 41.27090317888393 | 179 | "Individual: 179" | 76524 || 45.52700851448768 | 124 | "Individual: 124" | 83433 || 36.05016810737868 | 228 | "Individual: 228" | 66749 || 49.27720175120505 | 213 | "Individual: 213" | 82175 || 44.36528540877659 | 138 | "Individual: 138" | 80612 || 42.797912685033 | 130 | "Individual: 130" | 69916 || 24.06564069303854 | 6 | "Individual: 006" | 55035 || 23.34541624240619 | 99 | "Individual: 099" | 44895 || 24.68241178653287 | 73 | "Individual: 073" | 50934 || 25.01929121864152 | 86 | "Individual: 086" | 61123 || 51.04052349344122 | 214 | "Individual: 214" | 90306 || 46.86205839212964 | 199 | "Individual: 199" | 74419 || 24.59740890434833 | 30 | "Individual: 030" | 53791 || 45.68587902024383 | 136 | "Individual: 136" | 83196 || 26.14074137724695 | 83 | "Individual: 083" | 46115 || 32.39363653301354 | 253 | "Individual: 253" | 72542 || 33.0655111105172 | 265 | "Individual: 265" | 67828 || 24.52904962958139 | 72 | "Individual: 072" | 52539 || 46.39113119158637 | 150 | "Individual: 150" | 76906 || 48.0286095157561 | 185 | "Individual: 185" | 89932 || 24.78489604828937 | 63 | "Individual: 063" | 48627 || 23.78200189530308 | 57 | "Individual: 057" | 54649 || 45.83958306327029 | 157 | "Individual: 157" | 79086 || 35.07305643561117 | 269 | "Individual: 269" | 77824 || 35.13007434858572 | 258 | "Individual: 258" | 57509 || 26.41998520350787 | 82 | "Individual: 082" | 40776 || 33.17865867212326 | 275 | "Individual: 275" | 72272 |+-------------------+-----+-------------------+--------++-------------------+-----+-------------------+--------+| age | id | name | salary |+-------------------+-----+-------------------+--------+| 35.2134790582558 | 237 | "Individual: 237" | 63457 || 41.60086451199921 | 175 | "Individual: 175" | 79559 || 56.6362187203851 | 206 | "Individual: 206" | 105414 |+-------------------+-----+-------------------+--------+300 rows in set (0.165 secs)

OKLoad Data from Aerospike

We will show multiple ways of loading data into a data frame.

Load data without schema

The Aerospike Spark Connector can infer the schema by reading a number of records. This method returns data as well as metadata fields such as __key, __digest, __expiry, __generation, and __ttl.

# Create a Spark DataFrame by using the Connector Schema inference mechanism# The fields preceded with __ are metadata fields - key/digest/expiry/generation/ttl# By default you just get everything, with no column ordering, which is why it looks untidy# Note we don't get anything in the 'key' field as we have not chosen to save as a bin.# Use .option("aerospike.sendKey", True) to do this

loadedDFWithoutSchema = ( spark.read.format("aerospike") \ .option("aerospike.set", "salary_data") \ .load())

loadedDFWithoutSchema.show(10)+-----+--------------------+---------+------------+-------+------------------+---------------+------+---+|__key| __digest| __expiry|__generation| __ttl| age| name|salary| id|+-----+--------------------+---------+------------+-------+------------------+---------------+------+---+| null|[0F 10 1A 93 B1 E...|400973554| 3|2591999| 45.57430980213641|Individual: 194| 94548|194|| null|[03 50 2E 7F 70 9...|400973554| 3|2591999|34.652141285212814|Individual: 239| 61747|239|| null|[04 C0 5E 9A 68 5...|400973555| 3|2592000| 46.53337694047583|Individual: 101| 89019|101|| null|[1A E0 A8 A0 F2 3...|400973554| 3|2591999| 25.24920420954561|Individual: 031| 54312| 31|| null|[23 20 78 35 5D 7...|400973555| 3|2592000| 38.84745269824979|Individual: 139| 69645|139|| null|[35 00 8C 78 43 F...|400973554| 3|2591999| 25.59043077849547|Individual: 014| 51513| 14|| null|[37 00 6D 21 08 9...|400973555| 3|2592000| 42.56064799325679|Individual: 142| 80357|142|| null|[59 00 4B C7 6D 9...|400973554| 3|2591999| 33.97918907293992|Individual: 272| 66496|272|| null|[61 50 89 B1 EC 0...|400973555| 3|2592000|25.457857266022888|Individual: 076| 46214| 76|| null|[6C 50 7F 9B FD C...|400973555| 3|2592000| 43.1868235157955|Individual: 147| 70158|147|+-----+--------------------+---------+------------+-------+------------------+---------------+------+---+only showing top 10 rowsLoad data using schema

The schema can be explicitly specified, and only these fields will be retrieved. Note the schema used here was constructed earlier with id, name, age, and salary fields.

# If we explicitly set the schema, using the previously created schema object# we effectively type the rows in the Data Frame

loadedDFWithSchema=spark \.read \.format("aerospike") \.schema(schema) \.option("aerospike.set", "salary_data").load()

loadedDFWithSchema.show(5)+---+---------------+------------------+------+| id| name| age|salary|+---+---------------+------------------+------+|101|Individual: 101| 46.53337694047583| 89019||239|Individual: 239|34.652141285212814| 61747||194|Individual: 194| 45.57430980213641| 94548|| 31|Individual: 031| 25.24920420954561| 54312||139|Individual: 139| 38.84745269824979| 69645|+---+---------------+------------------+------+only showing top 5 rowsPushing Down Query Predicate

In order to get the best performance, it is important to minimize the amount of data retrieved to Spark. This is achieved by “pushing down” the query predicate or by processing filters in the database.

The Spark Connector allows the “pushdown expressions” option for specifying filters to be processed on Aerospike. Note that it cannot be used in load together with the where clause.

In the following example, we use the Base64 encoding of a simple expression id % 5 = 0 to get records where the ID field is divisible by 5. See the notebook Pushdown Expressions for Spark Connector for details on constructing an expression and obtaining its Base64 representation.

Compute Base64 Representation of Predicate

import aerospikefrom aerospike_helpers import expressions as exp

# Configure the client connectionconfig = { 'hosts': [ ('127.0.0.1', 3000) ]}# Connect to the servertry: client = aerospike.client(config).connect()except ex.ClientError as e: print("Error: {0} [{1}]".format(e.msg, e.code)) sys.exit(1)

# Build the expression for id % 5 = 0expr = exp.Eq( exp.IntBin("id") % 5, 0).compile()# Get Base64 representation of the expression for use in a pushdown-expression request.pushdown_expr = client.get_expression_base64(expr)client.close()print('The base64 representation of the expression "id % 5 = 0" is', pushdown_expr)The base64 representation of the expression "id % 5 = 0" is kwGTGpNRAqJpZAUALoad Using Pushdown Expressions

dfWithPushdownExpr = spark \ .read \ .format("aerospike") \ .schema(schema) \ .option("aerospike.set", "salary_data") \ . option("aerospike.pushdown.expressions", pushdown_expr) \ .load()

dfWithPushdownExpr.show(10)+---+---------------+------------------+------+| id| name| age|salary|+---+---------------+------------------+------+| 10|Individual: 010|25.082338749020725| 58345||140|Individual: 140| 43.06512046705784| 78500||160|Individual: 160| 54.98712625322746| 97029||120|Individual: 120|45.189080979167926| 80007||205|Individual: 205| 41.55122933798243| 74759||195|Individual: 195| 44.41128454132044| 73805||145|Individual: 145|43.667753041813405| 73062||300|Individual: 300|34.047281047784914| 67622||105|Individual: 105| 42.5158131767696| 73984||170|Individual: 170| 49.63878026576844| 84917|+---+---------------+------------------+------+only showing top 10 rowsUsing Secondary Index

First we create a secondary index on the salary field, and then retrieve data using the secondary index.

# create a secondary index on salaryimport aerospikefrom aerospike import exception as ex

client = aerospike.client({"hosts": [AS_HOST]}).connect()# create a secondary index on salaryindex_name = "idx_salary_int"try: client.index_integer_create('test', 'salary_data', "salary", index_name)except ex.IndexFoundError as e: pass# automatically selects appropriate secindary indexdfWithSecIdx = spark \ .read \ .format("aerospike") \ .schema(schema) \ .option("aerospike.set", "salary_data") \ .option("aerospike.sindex.enable", "true") \ .load() \ .where("salary >= 100000")

dfWithSecIdx.show()+---+---------------+----------------+------+| id| name| age|salary|+---+---------------+----------------+------+|206|Individual: 206|56.6362187203851|105414|+---+---------------+----------------+------+