Zero downtime upgrades in Aerospike made (even) simpler

Aerospike is a distributed key-value database designed to support high levels of throughput with minimal latency, at scale. Aerospike is optimised for use with flash-based storage, enabling it to achieve world-class performance with best-in-class density and cost.

Aerospike was designed to be ‘always on’. Our resilience features are proven in production deployments, with customers able to report 100% uptime over periods of up to 8 years [1]. When we say always on, we mean always on. Aerospike will manage planned and unplanned outages at both host and cluster level.

A thorny requirement arises when upgrades are considered, meaning upgrades of the database software itself. Although increasing numbers of distributed databases now support this, Aerospike has been ahead of the curve, supporting rolling upgrades since version 2. We would also highlight the simplicity of our process [2] compared with those offered by other vendors [3].

Not only is the process simple, it has also been designed (like everything else in Aerospike) for speed. Aerospike’s primary key index is held in shared memory, so an Aerospike process can be stopped to allow for a database upgrade and, on restarting, re-attach to the index that is already in memory, avoiding the need for an index rebuild.

If, however, you need to reboot your server in order to allow for OS upgrades or hardware maintenance, you will need to allow for index rebuild time. The time required will increase with the number of nodes you have in your cluster, and for large numbers of nodes you may wish to consider alternative approaches [4].

A recently introduced operational feature, quiesce, can help [5]. Quiesce was designed to allow nodes to be taken cleanly out of a cluster when a planned outage is needed. Quiesce will ensure our principle of ‘single hop to data’ [6] is preserved by handing off partition master responsibility, as well as handing off replica responsibilities to ensure no gap in resilience provision.

We can also make use of quiesce to transfer responsibilities wholesale from one cluster to another. This is of greatest utility in a cloud environment where there is effectively little or no cost in creating a ‘new’ cluster. The approach is:

Add your new nodes (with required OS version/patching etc) to your Aerospike cluster

Quiesce ‘old’ nodes

Wait for migration [7] to complete

Retire ‘old’ nodes

You can see that this is potentially swifter than cycling through the cluster node by node with an update that requires a reboot, because every reboot requires an Aerospike ‘cold start’ [8]. This should not be confused with changes requiring only ‘warm starts’ (such as Aerospike upgrades), which typically complete rapidly.

Demonstration

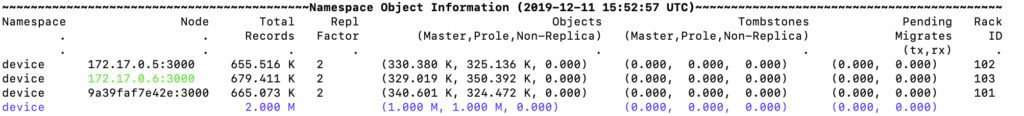

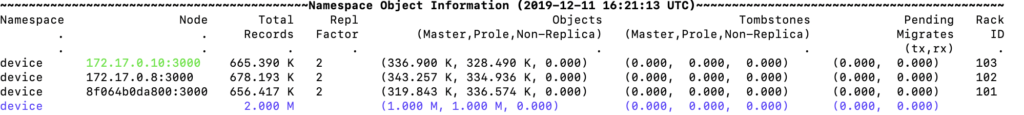

Let’s see how this works in practice. We start with a three node cluster containing 1m records. Note the IP addresses and the node id of the node I’m logged in to (9a39…).

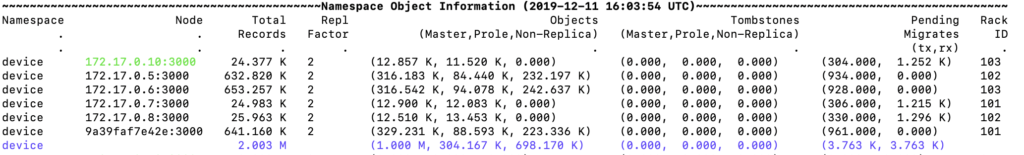

We add three new nodes and migration commences.

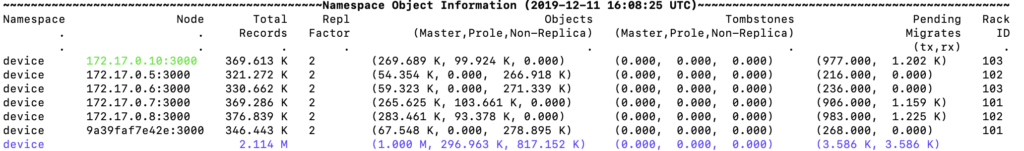

We issue a ‘quiesce’ command to each of the initial three nodes, followed by ‘recluster’:

asinfo -v 'quiesce:' with ${NODE_1} (repeat 3x)

asinfo -v 'recluster:'
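The two commands above can be scripted when there are several nodes to retire. What follows is a minimal sketch rather than a supported tool. It assumes `asinfo` is run from a host that can reach each node with `-h`. The host names, the `OLD_NODES` and `NEW_NODES` variables and the `DRY_RUN` switch are all illustrative and not part of Aerospike. It also assumes that only the cluster's principal node acts on `recluster:`, which is why the sketch sends it to every node.

```shell
#!/usr/bin/env bash
# Sketch only: quiesce each 'old' node, then request a recluster.
# OLD_NODES, NEW_NODES and DRY_RUN are illustrative names, not Aerospike settings.

OLD_NODES=${OLD_NODES:-"old-node-1 old-node-2 old-node-3"}   # placeholder hosts
NEW_NODES=${NEW_NODES:-"new-node-1 new-node-2 new-node-3"}   # placeholder hosts

run() {
  # With DRY_RUN=1 (the default here) print the command instead of running it.
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

quiesce_nodes() {
  # Mark every node passed as an argument as quiesced.
  local node
  for node in "$@"; do
    run asinfo -h "$node" -v 'quiesce:'
  done
}

recluster_nodes() {
  # Assumption: only the principal node acts on recluster, so send it to all.
  local node
  for node in "$@"; do
    run asinfo -h "$node" -v 'recluster:'
  done
}

# Word splitting of the host lists is intended here.
quiesce_nodes $OLD_NODES
recluster_nodes $OLD_NODES $NEW_NODES
```

Run as written, the script only prints the commands it would issue. Setting `DRY_RUN=0` executes them, so it is worth reviewing the printed plan first.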

Migrations commence/continue [9].

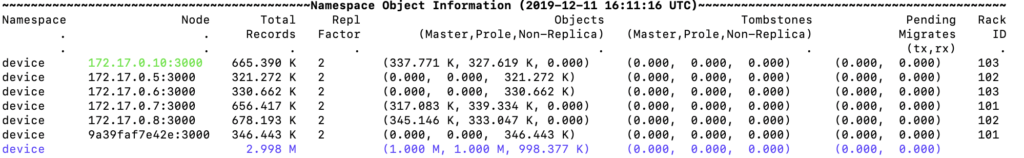

Once migrations are complete, our original three nodes are no longer managing data.

The original three nodes (rows 2, 3 and 6) are no longer mastering or replicating (=prole) data.
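Completion of migrations can also be checked from a script rather than by eye. The sketch below assumes that `asinfo -v 'statistics'` returns semicolon-separated `name=value` pairs and that the service statistic `migrate_partitions_remaining` drops to 0 on every node once migrations have finished. Check both assumptions against the metrics reference for your server version. The function names, the `POLL_SECONDS` variable and the sample statistics string are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: wait until no node reports partitions left to migrate.

migrations_remaining() {
  # Read a 'name=value;name=value' statistics string on stdin and print
  # the value of migrate_partitions_remaining (prints nothing if absent).
  tr ';' '\n' | awk -F= '$1 == "migrate_partitions_remaining" { print $2 }'
}

wait_for_migrations() {
  # Poll each host passed as an argument until it reports 0 remaining.
  # Note: loops indefinitely if a host never returns the statistic.
  local node remaining
  for node in "$@"; do
    while :; do
      remaining=$(asinfo -h "$node" -v 'statistics' | migrations_remaining)
      [ "$remaining" = "0" ] && break
      sleep "${POLL_SECONDS:-5}"
    done
  done
}

# Parsing demo on a made-up statistics string (no cluster needed):
printf 'cluster_size=6;migrate_partitions_remaining=12;uptime=100\n' | migrations_remaining
# prints 12
```

In use, `wait_for_migrations` would be called with every node in the cluster before the ‘old’ nodes are retired. A reading of 0 taken immediately after ‘recluster’ may only mean that migrations have not yet started, so a short pause before the first poll is a sensible precaution.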

We now remove the three original nodes (9a39…, 172.17.0.5 & 6), giving us an entirely new cluster that incorporates whatever patching etc. motivated our upgrade.

Conclusion

For Enterprise products, ease of use is a major differentiator. Hopefully this article helps you understand the efficiency with which Aerospike can be managed.

[1] https://www.aerospike.com/resources/videos/when-five-nines-is-not-enough-what-100-uptime-looks-like/

[2] https://docs.aerospike.com/operations/upgrade/aerospike/

[3] https://www.mongodb.com/blog/post/your-ultimate-guide-to-rolling-upgrades

[4] Interested readers should also look at our support for index in persistent memory which allows the primary key index to survive reboots and power cycles https://www.aerospike.com/blog/aerospike-4-5-persistent-memory-compression/

[5] https://docs.aerospike.com/reference/info/#quiesce

[6] https://www.aerospike.com/products/features/smart-client/

[7] Internal database process ensuring uniform distribution of data across cluster hosts

[8] See https://docs.aerospike.com/operations/manage/aerospike/cold_start/

[9] Continue if they hadn’t yet terminated following the previous step, commence if they had.