In this blog post, I’ll cover new features and changes in the generally available (GA) release of Aerospike 6.4. This release completes our secondary index storage offerings with the introduction of secondary index on flash.

Secondary index on flash

Before Aerospike Database 6, secondary indexes could only live in the Aerospike daemon (asd) process memory (RAM). This prevented Aerospike Database Enterprise Edition (EE) clusters from being able to fast restart (AKA warmstart) when secondary indexes were used on a namespace.

One focus of our secondary index work has been to add storage types that can warmstart.

The shared memory (shmem) sindex-type was added in server 6.1. The Intel Optane™ Persistent Memory (pmem) storage type was added in server 6.3.

Server 6.4 adds the ability to store all secondary indexes of a specified namespace on an NVMe flash device. Not only do secondary indexes on flash persist and enable their namespace to warmstart, they also consume no RAM. Capacity planning is simple: the space you would have calculated for the secondary indexes in memory is the space they need on disk instead.

Secondary indexes on flash affect only writes and queries, but for heavy write workloads they carry a significant extra device IO cost, which should be taken into account for capacity planning. That said, Aerospike should continue to perform well, compared to other databases, in mixed workloads where many reads and queries happen alongside writes that modify the secondary index. Choose the secondary index type that fits your use case.

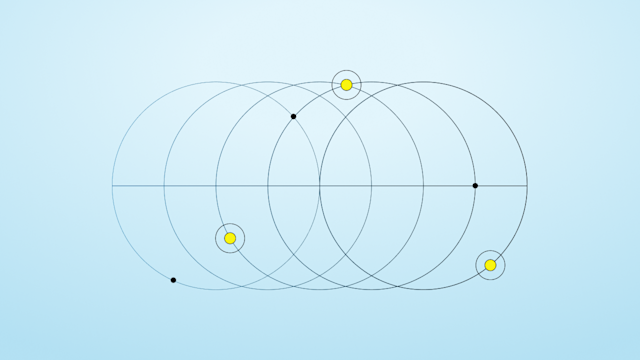

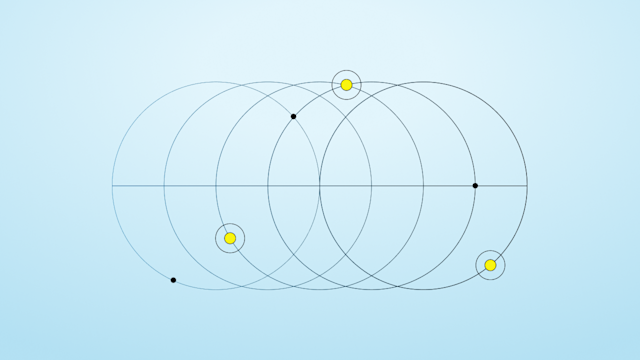

Write operations take a latency and throughput hit when they must adjust secondary indexes. For each secondary index on a record bin whose value changed, the server walks down the B-tree, costing at worst one device IO per B-tree layer. Because the B-tree layers fan out widely, the upper layers near the root should stay cached by mmap if the secondary index is used often, and we expect 1-3 device IOs per write-related adjustment. When the secondary index is very big, the cost per adjustment might be 2-6 device IOs. Having enough memory for mmap to cache the first couple of layers of the B-tree avoids paging and drives down the number of extra IOPS.
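
To make the IO arithmetic above concrete, here is a minimal Python sketch of the cost model, under a simplifying assumption of ours: each B-tree layer that is not in the mmap cache costs exactly one device IO, and each cached layer costs none. The function names and the example index depths are hypothetical; this is not how the server measures or reports IO.

```python
def ios_per_adjustment(depth: int, cached_layers: int) -> int:
    """Worst-case device IOs for one walk from the root to a leaf.

    Simplifying assumption: every B-tree layer not held in the mmap cache
    costs one device IO; every cached layer costs none.
    """
    if depth < 1 or cached_layers < 0:
        raise ValueError("depth must be >= 1 and cached_layers >= 0")
    return depth - min(cached_layers, depth)


def extra_write_ios(adjustments: int, depth: int, cached_layers: int) -> int:
    """Extra device IOs for one write that makes `adjustments` index adjustments."""
    return adjustments * ios_per_adjustment(depth, cached_layers)


# Hypothetical index shapes.
print(ios_per_adjustment(depth=3, cached_layers=1))   # 2: a frequently used index
print(ios_per_adjustment(depth=6, cached_layers=2))   # 4: a very big index
print(extra_write_ios(2, depth=6, cached_layers=2))   # 8: two adjustments on it
```

Under this model, each additional layer that mmap keeps cached removes one device IO from every adjustment, which is why memory for the top of the tree pays off.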

The impact on query performance is use case dependent. In general, the B-tree structure is very efficient. For example, a secondary index with 8 million entries has a B-tree three layers deep. The top two layers only cost a few MiBs of mmap cache, so traversing to the lowest layer may only cost a single device IO.
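
The 8-million-entry example can be checked with a little integer arithmetic. If a three-layer B-tree holds 8 million entries, each node must fan out to at least 200 children, since 200 × 200 × 200 = 8,000,000; the top two layers are then just 1 + 200 = 201 nodes. The sketch below computes both. The 16 KiB node size used to turn nodes into bytes is a purely hypothetical figure, not Aerospike's actual node size.

```python
def min_fanout(entries: int, depth: int) -> int:
    """Smallest per-node fanout f such that a tree `depth` layers deep
    can hold `entries` entries, i.e. the smallest f with f ** depth >= entries."""
    f = 2
    while f ** depth < entries:
        f += 1
    return f


def nodes_in_top_layers(fanout: int, layers: int) -> int:
    """Node count of the top `layers` layers: 1 + f + f**2 + ..."""
    return sum(fanout ** i for i in range(layers))


NODE_BYTES = 16 * 1024  # hypothetical node size, for illustration only

fanout = min_fanout(8_000_000, depth=3)
cached = nodes_in_top_layers(fanout, layers=2)
print(fanout)                        # 200
print(cached)                        # 201
print(cached * NODE_BYTES / 2**20)   # 3.140625 (MiB, under the assumed node size)
```

With those 201 nodes cached, only the leaf layer is left uncached, so under a one-IO-per-uncached-layer assumption reaching a leaf costs a single device IO.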

Adjacent values are packed in the same slab, so range queries (using BETWEEN) will get multiple values per device IO. Secondary index on flash will also be efficient for long queries which return many records.
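
A rough model shows why packing adjacent values together favors long range queries over short ones. The sketch below assumes, hypothetically, that matching entries are contiguous and start at a leaf boundary, that the descent costs one IO per uncached layer (the first leaf included), and that each further leaf of matches costs one more IO. The figure of 200 entries per leaf is illustrative, not a documented slab capacity.

```python
import math


def range_query_ios(matches: int, entries_per_leaf: int,
                    depth: int, cached_layers: int) -> int:
    """Device IOs for one range lookup under the simple model described above."""
    descent = depth - min(cached_layers, depth)   # reaches the first leaf
    leaves = max(math.ceil(matches / entries_per_leaf), 1)
    return descent + (leaves - 1)                 # each later leaf costs one IO


for matches in (1, 10_000):
    ios = range_query_ios(matches, entries_per_leaf=200, depth=3, cached_layers=2)
    print(matches, ios, ios / matches)
# 1 1 1.0
# 10000 50 0.005
```

In this model the single-record lookup pays a whole device IO for one result, while the long range query pays a small fraction of an IO per result. With a secondary index in memory, the same descent costs no device IO at all.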

Short queries – queries that consistently return a small number of records – will perform noticeably worse on secondary indexes on flash, relative to secondary indexes in memory (shared memory or PMem). In situations where your short queries return no records or a single record, you should model your data differently. Using a secondary index as a UNIQUE INDEX, regardless of whether the index is in memory or on flash, is an anti-pattern in a NoSQL database where single-key lookups have very low latency.

The cause for the performance difference between a secondary index on flash and a secondary index in memory is directly tied to the latency difference between memory access and device IO, as well as drives having a finite number of IOPS. Tuning how much memory is given to mmap has a bigger positive impact with secondary indexes on flash than with the primary index on flash. This is because the access patterns aren’t random – the B-tree roots are effectively cached, and with more RAM available to the mmap cache, the extra device IO cost decreases. When choosing to store the namespace secondary indexes on flash, you need to have enough aggregate device IO capacity to cover the increased IOPS cost needed to sustain your desired latency and throughput.
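
The IOPS budget described above can be estimated with back-of-the-envelope arithmetic. In this sketch every input, including the per-drive IOPS figure, is a hypothetical placeholder to replace with your own measurements, and the result covers only the extra IO that secondary indexes on flash add on top of what record storage already consumes.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    writes_per_sec: float
    adjustments_per_write: float  # secondary index adjustments per write
    ios_per_adjustment: float     # e.g. 1-3 when the upper B-tree layers are cached
    queries_per_sec: float
    ios_per_query: float


def extra_iops(w: Workload) -> float:
    """Additional device IOPS caused by secondary indexes on flash."""
    return (w.writes_per_sec * w.adjustments_per_write * w.ios_per_adjustment
            + w.queries_per_sec * w.ios_per_query)


def extra_drives(w: Workload, usable_iops_per_drive: float) -> int:
    """Drives needed to absorb the extra IOPS, rounded up."""
    return math.ceil(extra_iops(w) / usable_iops_per_drive)


w = Workload(writes_per_sec=20_000, adjustments_per_write=1, ios_per_adjustment=2,
             queries_per_sec=5_000, ios_per_query=4)
print(extra_iops(w))            # 60000
print(extra_drives(w, 50_000))  # 2
```

Giving mmap more memory lowers ios_per_adjustment and ios_per_query, which shrinks the extra IOPS in the same proportion.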

Secret Agent: Integrating with secrets management services

Starting with server 6.4, Aerospike EE can be configured to tell the newly released Aerospike Secret Agent to fetch secrets from an external secrets management service.

Secret Agent runs as an independent process, either on the same host as the Aerospike cluster node or on a dedicated instance serving multiple nodes. The agent wraps the native library of the service provider and handles authentication against the service. The Aerospike server is agnostic of the destination, so a simple common configuration can connect it to a variety of services.

This initial release of Secret Agent fetches secrets from AWS Secrets Manager. The independent design makes it easy to add new integrations rapidly, on a release cycle separate from the server's, with Google Cloud Secret Manager, Azure Key Vault, and HashiCorp Vault planned next.

Improved Cross-Datacenter Replication (XDR) throughput

In server 6.4, an optimization that server 6.1 used to enhance XDR throughput in recovery mode, or when rewinding a namespace, was extended to stable-state XDR shipping. Ship requests are distributed across service threads using partition affinity. This highly efficient approach uses fewer service threads to ship more data, while creating significantly fewer socket connections.
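
The idea behind partition affinity can be illustrated with a toy dispatcher: every ship request is routed by its partition ID, so a given partition is always handled by the same service thread. The modulo mapping and the function names below are our own hypothetical illustration; the post does not describe the server's actual assignment scheme. The one grounded constant is that Aerospike divides each namespace into 4096 partitions.

```python
from collections import defaultdict

N_PARTITIONS = 4096  # Aerospike splits each namespace into 4096 partitions


def thread_for(partition_id: int, n_threads: int) -> int:
    """Hypothetical affinity rule: the same partition always maps to the same thread."""
    if not 0 <= partition_id < N_PARTITIONS:
        raise ValueError("partition_id out of range")
    return partition_id % n_threads


def dispatch(requests, n_threads):
    """Group (partition_id, key) ship requests into per-thread queues."""
    queues = defaultdict(list)
    for partition_id, key in requests:
        queues[thread_for(partition_id, n_threads)].append(key)
    return dict(queues)


print(dispatch([(0, "a"), (5, "b"), (4, "c"), (1, "d")], n_threads=4))
# {0: ['a', 'c'], 1: ['b', 'd']}
```

Because each thread owns a fixed slice of partitions, it can work through its partitions without coordinating with other threads, which is one plausible reason an affinity-based design gets by with fewer threads and connections.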

As a result, the max-used-service-threads configuration parameter was removed.

Newly supported operating system distributions

Server 6.4 adds support for Amazon Linux 2023. While Amazon Linux 2 is based on, and mostly compatible with, CentOS 7, AL2023 is a distinct flavor of Linux. As such, the Aerospike Database 6.4 server and tools packages have an amzn2023 RPM distinct from the el7 one used for Amazon Linux 2. We recommend that users of Amazon Linux 2 upgrade their OS to AL2023 because CentOS 7 and Amazon Linux 2 will lose support in Aerospike Database 7.

Server 6.4 also adds support for Debian 12. Users of Debian 10 are encouraged to upgrade in order to enjoy the benefits of the newer OS kernel.

End of the line for single-bin namespaces

After many years as an optional storage configuration, support for single-bin namespaces was removed in server 6.4. Single-bin namespaces provided savings of several bytes per record, an appealing feature when Aerospike Database was a new product, flash storage was much slower, and SSD and NVMe drives were much smaller. Today, common modern flash drives are significantly faster and larger, Aerospike’s storage formats are far more efficient than they were when single-bin was introduced, and Aerospike EE has a compression feature that helps reduce disk storage consumption.

The removal of the single-bin and data-in-index configuration parameters paves the way for many exciting new features in the upcoming Aerospike Database 7, including a major overhaul of the in-memory storage engine. Users of single-bin namespaces may choose to delay their upgrade until server 7.0, so that the effort of migrating away from these storage options also brings them the benefit of those new features.

Aerospike users without single-bin namespaces in their cluster may upgrade to server 6.4 through a regular rolling upgrade. Otherwise, please consult the special upgrade instructions for Server 6.4.

Miscellaneous

Query performance – sharing partition reservations provides enhanced query performance for equality (point) and BETWEEN (range) short queries, scans, and set index queries.

Improved defragmentation – Server 6.4 avoids superfluous defragmentation of blocks with indexed records.

Batch operations – The configuration parameter batch-max-requests was removed in server 6.4. Also, the benchmarks {ns}-batch-sub-read, {ns}-batch-sub-write, and {ns}-batch-sub-udf are now auto-enabled and exposed by the latencies info command.

Hot-key logging – the new key-busy logging context provides the digest, user IP, and type of operation when an error code 14 (KEY_BUSY) is returned to the client.

Breaking Changes

The scheduler-mode configuration parameter was removed.

The deprecated scan info commands (scan-show, scan-abort, scan-abort-all) have been removed. Use the equivalent query commands (query-show, query-abort, query-abort-all).

For more details, read the Server 6.4 release notes. You can download Aerospike EE and run it as a single-node evaluation, or get started with a 60-day multi-node trial at our Try Now page.
