MEC/Edge in Telecom – What does Aerospike bring to the table?

“Multi-Access Edge Compute (MEC)” revenues are expected to jump to $167 billion in 2025, a huge leap from the $3.5 billion recorded last year. MEC’s popularity is approaching that of “5G” and “Open RAN”, and it received a significant push forward from a partnership between Verizon and AWS.

This partnership was announced in 2019 at the AWS re:Invent conference. Less than a year later, it led to the commercial launch of AWS Wavelength in Verizon Service Access Points (SAPs) in the San Francisco Bay Area and in Boston. Today, the service is available in 13 major U.S. metro areas and should hit the intended target of 20 markets by the end of this year.

MEC Overview

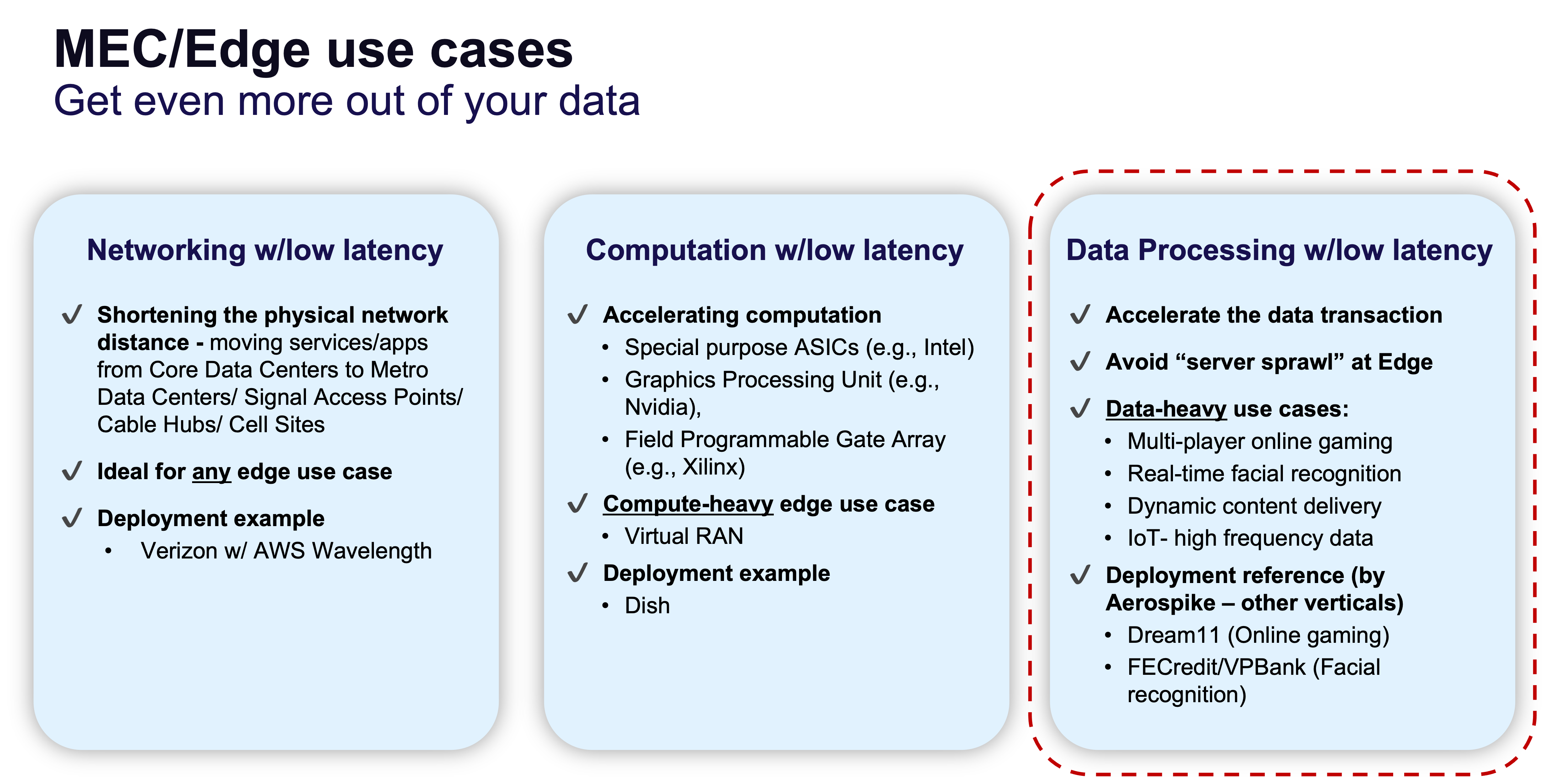

The core concept of MEC is straightforward: lower the latency to deliver faster service to end users. The most obvious way to do this is to move network infrastructure, such as a data center, closer to end users. Specifically, when the apps on end-user devices can fetch data from a nearby metro data center instead of a far-flung mega data center, network latency drops. With less distance to traverse and fewer routers, switches, and amplifiers along the path, access network latency is reduced.
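To make the distance argument concrete, here is a rough back-of-the-envelope sketch in Python. It assumes light travels through optical fiber at roughly two-thirds of its speed in a vacuum (about 200,000 km/s) and ignores switching, queuing, and processing delays. The two distances are hypothetical values chosen only for illustration, not measurements from any network.

```python
# Rough round-trip propagation delay over optical fiber.
# Assumption: signal speed in fiber is about 2/3 of c, i.e. ~200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds.

    Ignores router/switch hops, queuing, and server processing time.
    """
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


# Hypothetical distances, for illustration only.
metro_km = 50      # nearby metro edge data center
remote_km = 2000   # far-away regional or central data center

print(f"metro edge:  {round_trip_ms(metro_km):.2f} ms round trip")
print(f"remote site: {round_trip_ms(remote_km):.2f} ms round trip")
```

Under these assumptions, propagation alone differs by a factor of 40 between the two sites, before any per-hop equipment delay is counted.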

Another supply-side way to lower latency is to cut the time that computation takes. This is where special-purpose application-specific integrated circuits (ASICs), graphics processing units (GPUs), and field-programmable gate arrays (FPGAs) come in. All of these are commonly classified as “accelerators”, which means they deliver computational output far faster than standard CPU cores.

If you follow the technological evolution in the 5G distributed RAN and virtual RAN (vRAN) segments, you have certainly come across accelerators, since the radio access network is a very computation-heavy use case. Accelerators are critical to making commercial off-the-shelf (COTS) servers perform as well as (if not better than) the proprietary black boxes from companies like Nokia and Ericsson. That’s why distributed RAN deployments at edge sites need to incorporate low-latency computation.

Lowering Latency of Data Processing

Still, there is a third consideration that often doesn’t get as much attention: lowering the latency of data processing at the edge. End users don’t care about the source of latency. All they care about is how long it takes for a service to be delivered to the requesting endpoint. It’s immaterial to them whether the latency comes from access network distance, from computation, or from data processing.
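One way to picture this is as a simple latency budget in which the three sources add up to what the end user experiences. The following is a minimal Python sketch. The `LatencyBudget` class and the millisecond values are hypothetical, chosen purely to illustrate the point, and do not describe any measured system.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """End-to-end latency split into the three sources discussed above."""
    network_ms: float   # access network distance and hops
    compute_ms: float   # computation (CPU or accelerator time)
    data_ms: float      # data processing (database reads and writes)

    def total_ms(self) -> float:
        # The user only ever perceives the sum of the three parts.
        return self.network_ms + self.compute_ms + self.data_ms

    def dominant(self) -> str:
        # Name of the component that contributes the most latency.
        parts = {
            "network": self.network_ms,
            "compute": self.compute_ms,
            "data": self.data_ms,
        }
        return max(parts, key=parts.get)


# Hypothetical numbers: network and compute are already optimized,
# but data processing has been left untouched.
budget = LatencyBudget(network_ms=2.0, compute_ms=3.0, data_ms=15.0)
print(f"total: {budget.total_ms():.1f} ms, dominated by: {budget.dominant()}")
```

In this made-up example, moving closer to the user and adding accelerators would still leave most of the delay in place, which is why the data layer deserves its own attention.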

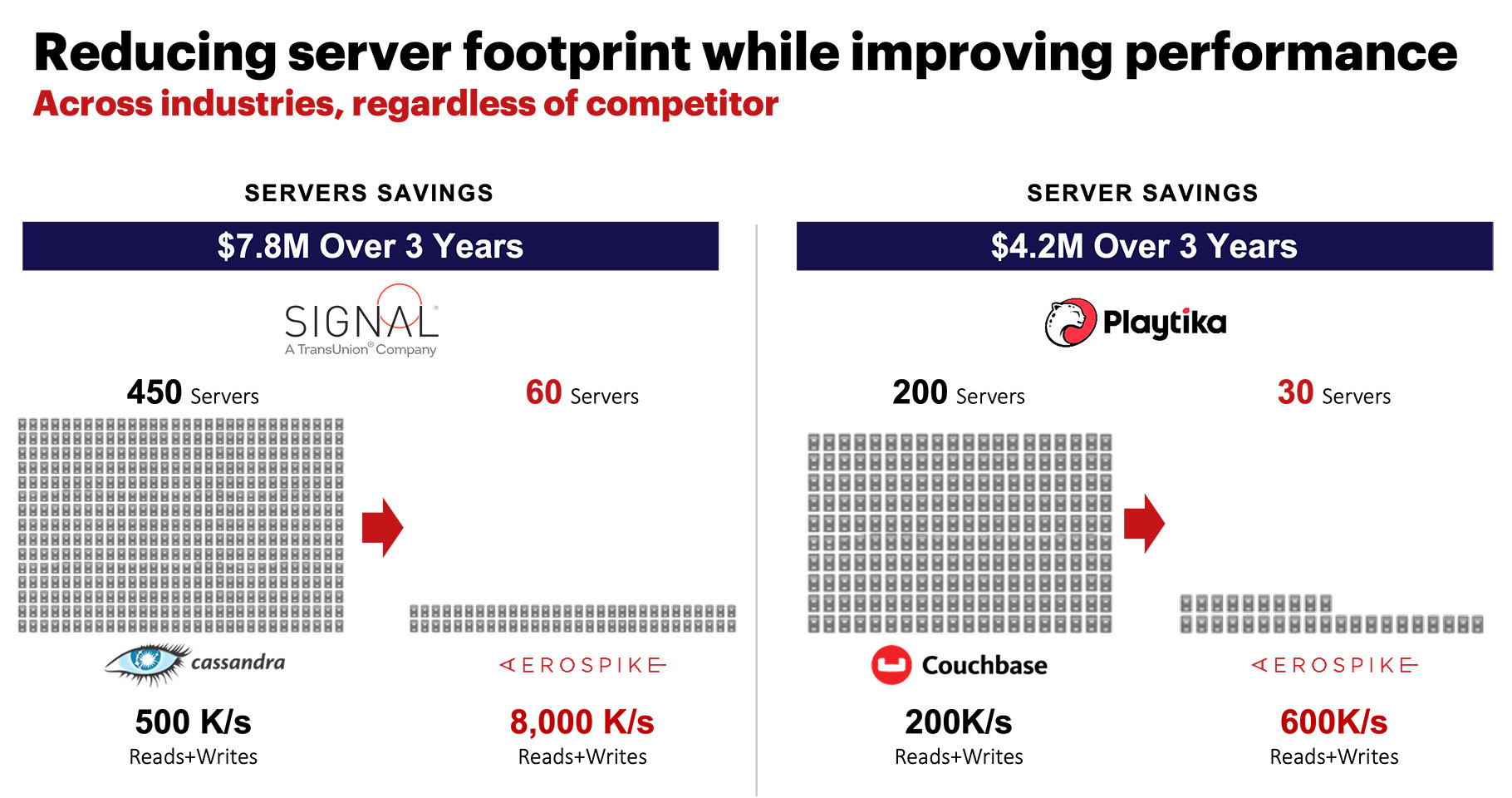

This is a natural fit for Aerospike, the market-leading database vendor for real-time (i.e., low-latency), high-performance data processing at scale for mixed workloads (both reads and writes). You know what’s even more powerful? We are known for offering solutions with the smallest possible footprint without compromising performance, thanks to our patented Hybrid Memory Architecture (HMA), which takes advantage of solid-state drives (SSDs) optimized to work as dynamic random access memory (DRAM).

Now, let’s walk through a “data-heavy” edge use case from the third bucket above to see how Aerospike can add value.

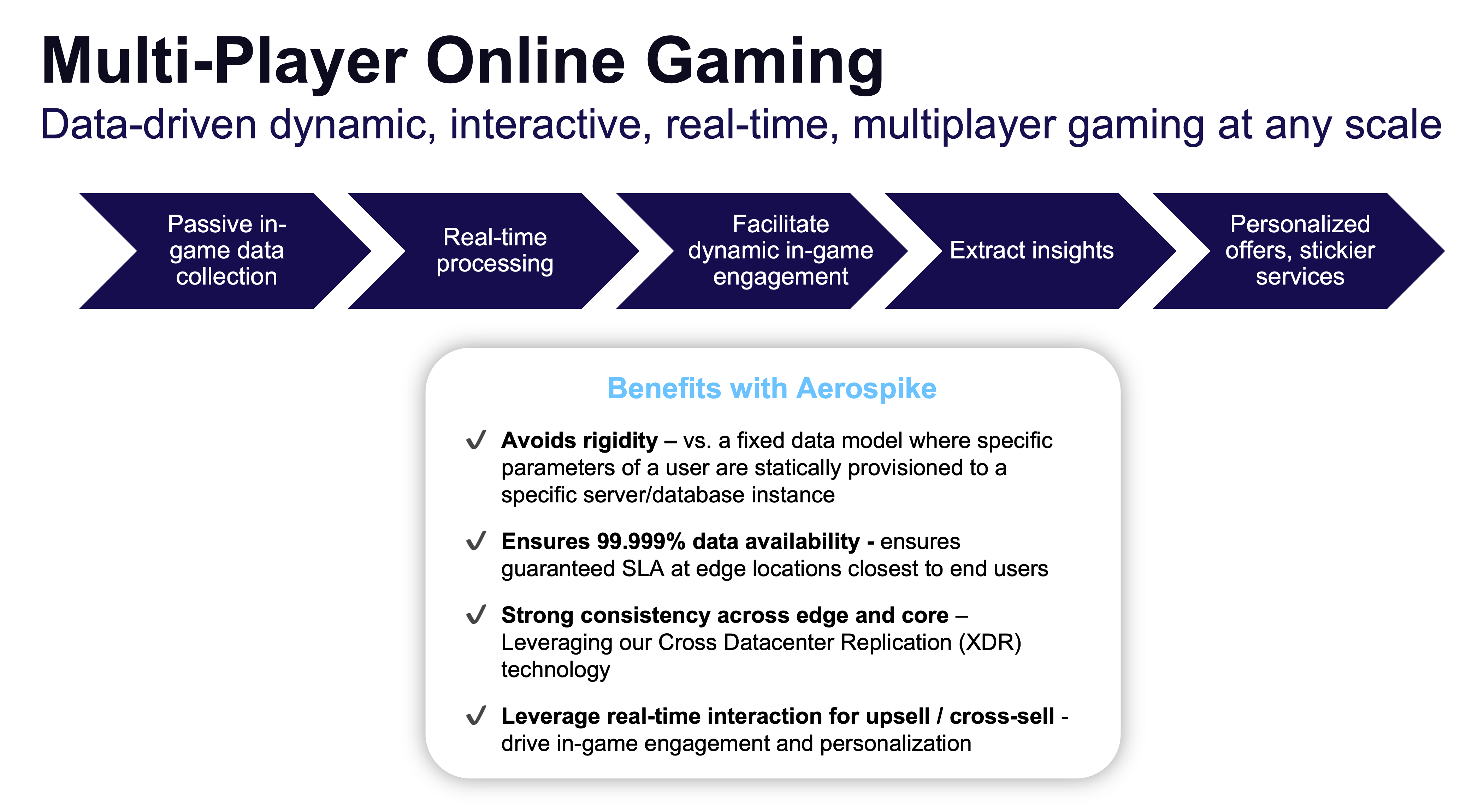

Consider a heavy data user such as multiplayer online gaming. At the edge, you need to accelerate data transaction speed and avoid server sprawl, keeping the footprint small even under a heavy read/write workload. As a proof point, fantasy sports platform Dream11 uses Aerospike’s real-time data platform to serve millions of concurrent users at scale. In this edge context, here is what our database platform can assist with:

Passive in-game data collection

Real-time processing of data at the edge

Dynamic in-game engagement

Extract insights by using the system of record (SoR) in tandem with the edge database if and where needed

Personalize offers while gamers are still engaged in the game, making the gaming experience “stickier”

Aerospike makes sense for this kind of use case to:

Avoid the rigidity of a fixed data model where specific parameters of a user are statically provisioned to a specific server/database instance.

Ensure 99.999% data availability at edge locations closest to end users.

Facilitate single-digit-millisecond round-trip latency from client location to the edge and back.

Leverage upsell/cross-sell by driving in-game engagement and real-time personalization.

Taking a step back, let’s look at how Aerospike fits in to serve broader Telco use cases.

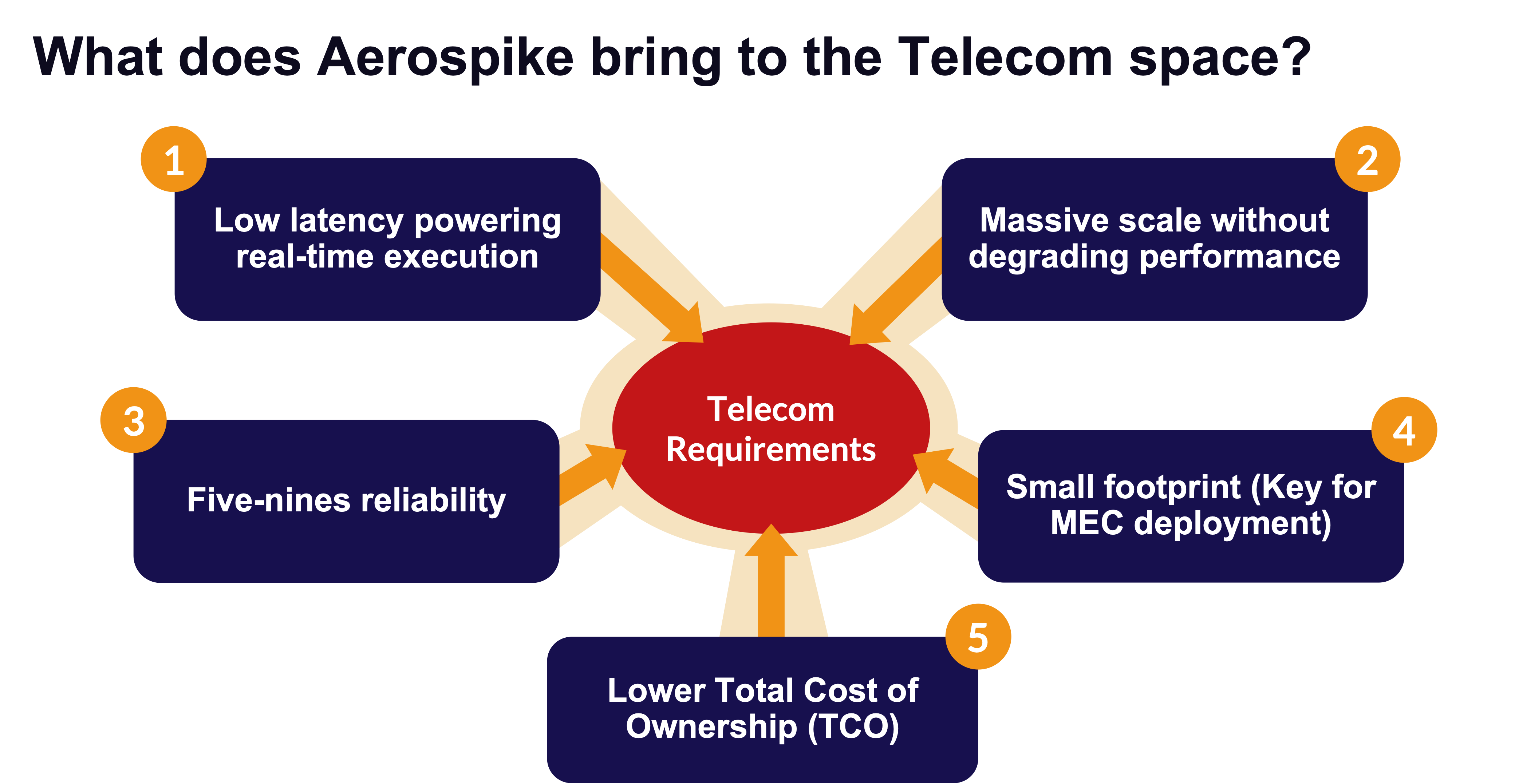

The key requirements that are often raised for telco-related database solutions include low latency, high reliability, ability to scale, and small footprint. All of the above need to happen in the context of a favorable business case with low total cost of ownership (TCO) or high total economic impact (TEI).

Referring to the bottom far-right parameter, it’s very important that any infrastructure considered for or deployed at the edge has a small footprint, as Aerospike does.

In the case of global gaming company Playtika, for example, the server footprint was reduced more than 6x, from 200 servers to 30 Aerospike servers. In addition, throughput tripled, from 200,000 reads and writes per second to 600,000; data grew 70%, with each studio able to grow from 2TB of unique data to 3.4TB; and savings from using Aerospike are projected to be $1.4 to $4.2 million over three years.
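The ratios behind these Playtika figures can be checked with a few lines of arithmetic. The short Python sketch below uses only the numbers quoted above; the variable names are illustrative. Note that going from 200,000 to 600,000 operations per second is a 3x multiple, which is equivalent to a 200% increase over the baseline.

```python
# Figures quoted in the Playtika example above.
servers_before, servers_after = 200, 30
ops_before, ops_after = 200_000, 600_000        # reads and writes per second
data_before_tb, data_after_tb = 2.0, 3.4        # unique data per studio

# Server footprint reduction as a multiple.
footprint_ratio = servers_before / servers_after

# Throughput as a multiple and as a percentage increase over the baseline.
throughput_ratio = ops_after / ops_before
throughput_increase_pct = (ops_after - ops_before) / ops_before * 100

# Data growth as a percentage increase over the baseline.
data_growth_pct = (data_after_tb - data_before_tb) / data_before_tb * 100

print(f"footprint reduction: {footprint_ratio:.2f}x")
print(f"throughput: {throughput_ratio:.1f}x (+{throughput_increase_pct:.0f}%)")
print(f"data growth: +{data_growth_pct:.0f}%")
```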

With the massive amount of data streaming from mobile, 5G, and IoT sensor applications, Aerospike can help Telcos meet the challenge to manage, leverage and analyze this data across silos. Using Aerospike means companies can optimize new investments in modern infrastructure and applications and provide the best customer experience – all at real-time speeds.

Another reason we are a strong contender for telecom edge deployment is that Aerospike can be deployed both at the edge and at the core as the system of record (SoR) database. In addition, our innovative cross datacenter replication (XDR) technology makes sure that the same copies of data are stored across edge and core. (More about this and our legacy of strong consistency will be covered in a future blog post.)

Visit our telecom webpage and learn more about how Aerospike has helped companies like Airtel, Viettel and Nokia.