Contextual understanding: Enhancing interactions and decision-making with GenAI

A nuanced understanding of context can amplify personalized experiences between humans and AI, revolutionizing the future of AI applications.

Understanding and leveraging context has become indispensable for enhancing the quality of interactions and decision-making processes. Context refers to the circumstances and settings in which an event or statement occurs, providing crucial insights necessary for accurate interpretation and response. It encompasses a range of factors, including situational, social, and interpersonal elements that influence how messages are perceived and understood. In the realm of linguistics, context goes beyond mere words to include extralinguistic factors, shaping meaning and comprehension. This significance is encapsulated in the principle that without context, meaning remains elusive, and interpretations can be misguided.

What is contextual understanding?

Contextual understanding is the ability to interpret information within the framework of its environment, background, and intent. Rather than isolating words or phrases, it requires recognizing the broader circumstances that shape meaning. This could involve understanding a person’s tone in a message, the specific situation in which a conversation occurs, or the historical and cultural influences that add layers to text. Whether in personal interactions or AI-driven responses, contextual understanding allows for more accurate interpretations and enhances communication by capturing nuance and intent.

Elements of contextual understanding

Contextual understanding is built on several elements that help shape accurate interpretations of messages and situations:

Linguistic context

This involves the immediate textual or conversational cues, such as word choice, syntax, and tone, that clarify meaning.

Cultural context

Cultural background, norms, and values add layers to communication, impacting how certain words or actions are perceived across different groups.

Situational context

The environment or situation in which a message is conveyed, like an office setting versus a casual conversation, guides appropriate interpretation.

Historical context

Previous events or background knowledge provide essential insights that influence present-day interactions and decision-making.

Together, these elements create a holistic view that allows individuals or systems to interpret messages, emotions, and actions with greater precision, resulting in deeper understanding and connection.

Examples of contextual understanding

Customer service

A support agent interprets a customer’s frustration based on their tone and previous interactions, allowing them to respond empathetically and resolve issues more effectively.

Language translation

Contextual AI tools recognize idioms or cultural references in one language and find equivalent phrases in another, improving translation accuracy beyond literal word meanings.

Medical diagnoses

Physicians consider a patient’s symptoms within their medical history, lifestyle, and environment, rather than isolating each symptom, leading to more personalized and accurate treatments.

Search engine results

Search engines use contextual signals like search history and geographic location to tailor search results, making them more relevant to each user’s unique needs.

Understanding context: From general to specific

The concept of context is not monolithic; it exists on a spectrum from general to specific. General context provides a broad background, setting the scene for understanding, while specific, in-the-moment context delves into the nuances and particulars of a situation. For instance, when considering a request made while driving, such as finding a place to rest, the context includes not just the act of driving but also personal preferences, such as disliking coffee. This specificity enriches the interaction, tailoring responses to individual needs and circumstances.

With large language models (LLMs), specificity is achieved through retrieval-augmented generation (RAG), which retrieves detailed context and adds it to the query so the model can produce relevant and customized responses. This approach exemplifies how a nuanced understanding of context can significantly improve the interaction quality between humans and AI.
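To make the driving example concrete, here is a minimal sketch of how general and specific context might be folded into a single prompt before it is sent to an LLM. The function name `build_prompt`, the prompt wording, and the context strings are all hypothetical, chosen only for illustration; a real RAG pipeline would fetch the context from a retrieval step rather than hard-coding it.

```python
def build_prompt(query: str, general_context: list[str], specific_context: list[str]) -> str:
    """Combine a user request with general and specific context into one prompt."""
    lines = ["Use the context below to answer the request."]
    if general_context:
        lines.append("General context:")
        lines.extend(f"- {item}" for item in general_context)
    if specific_context:
        lines.append("Specific context:")
        lines.extend(f"- {item}" for item in specific_context)
    lines.append(f"Request: {query}")
    return "\n".join(lines)


# Hypothetical context for the rest-stop request described above.
prompt = build_prompt(
    query="Find a place to rest.",
    general_context=["The user is currently driving."],
    specific_context=["The user dislikes coffee."],
)
print(prompt)
```

Keeping the general and specific context in separate sections is one possible design choice; it makes it easy to see which details were added for this particular request.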

Personalization and contextualization through RAG

Personalization is inherently tied to context. By understanding a user's history, current situation, and preferences, AI systems can offer remarkably tailored experiences. This concept extends beyond human interactions to encompass the Internet of Things (IoT), where context includes variables like location, environment, and historical data.

RAG enhances this process by retrieving and applying specific contextual information from a vast repository of vectors or embeddings. These vectors, representing different aspects of a user's profile or situational data, are crucial for crafting responses that are not just relevant but also deeply personalized. The continuous accumulation of these vectors, reflecting both historical patterns and current situations, enriches the AI's understanding, enabling it to deliver more accurate and nuanced responses.
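The retrieval step can be sketched as a nearest-neighbor lookup over stored vectors. The example below is a toy, in-memory illustration that uses only the Python standard library. The three-dimensional vectors are hand-made assumptions rather than the output of a real embedding model, and `retrieve` is a hypothetical helper, not the API of any particular vector store.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vector, store, k=2):
    """Return the k stored texts whose vectors are most similar to the query vector."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vector, item[0]),
        reverse=True,
    )
    return [text for _, text in ranked[:k]]


# Hand-made vectors; the axes loosely stand for (beverages, driving, music).
store = [
    ([0.9, 0.1, 0.0], "Dislikes coffee, prefers tea"),
    ([0.1, 0.9, 0.0], "Usually takes a break every two hours on long drives"),
    ([0.0, 0.1, 0.9], "Listens to jazz"),
]
query_vector = [0.6, 0.7, 0.0]  # stands in for "find a place to rest" asked while driving
results = retrieve(query_vector, store, k=2)
print(results)
```

With these illustrative vectors, the two driving- and beverage-related entries rank above the music entry, so they are the ones that would be passed along as context.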

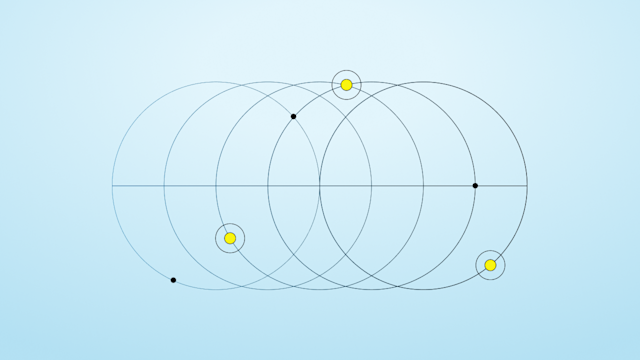

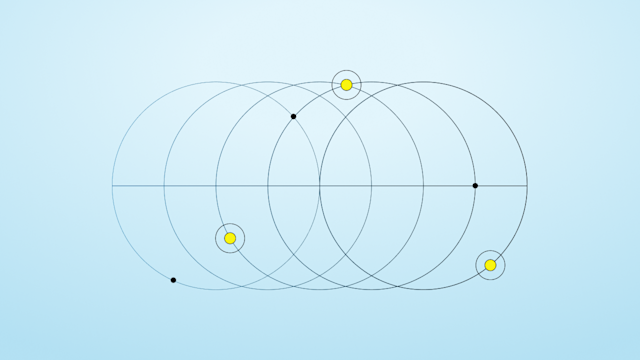

How embeddings capture and utilize context

Embeddings play a pivotal role in capturing and utilizing context. These mathematical representations, or vectors, encode diverse aspects of data, allowing for nuanced profiling and semantic searches. The interaction between embeddings and LLMs is symbiotic; embeddings provide a rich, contextual backdrop that augments the semantic understanding of LLMs, leading to more precise and contextually relevant outcomes.

Accretion, or building up a set of vectors, is crucial for developing a comprehensive context encompassing various types of interactions, customers, or situations. This assembled context enhances the AI's predictive and responsive capabilities. Moreover, the accuracy of vector search is paramount, underscoring the need for high-quality, current data to inform model responses.
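One way to picture accretion together with the need for current data is a store that only ever appends entries and filters out stale ones at query time. The sketch below is an assumption-laden illustration: `ContextEntry`, `ContextStore`, the `max_age` cutoff, and the sample vectors are all hypothetical, and similarity is a plain dot product that presumes the vectors are already normalized.

```python
from dataclasses import dataclass, field


@dataclass
class ContextEntry:
    vector: list[float]
    text: str
    timestamp: float  # seconds on whatever clock the caller uses


@dataclass
class ContextStore:
    entries: list[ContextEntry] = field(default_factory=list)

    def add(self, vector, text, timestamp):
        """Accrete one more piece of context; earlier entries are never overwritten."""
        self.entries.append(ContextEntry(vector, text, timestamp))

    def search(self, query_vector, now, max_age, k=3):
        """Rank entries no older than max_age by dot product with the query vector."""
        fresh = [e for e in self.entries if now - e.timestamp <= max_age]

        def score(entry):
            return sum(q * v for q, v in zip(query_vector, entry.vector))

        fresh.sort(key=score, reverse=True)
        return [e.text for e in fresh[:k]]


store = ContextStore()
store.add([1.0, 0.0], "Ordered tea at a rest stop", timestamp=100.0)
store.add([0.0, 1.0], "Asked for a quiet parking area", timestamp=900.0)
store.add([0.8, 0.6], "Said they dislike coffee", timestamp=950.0)

# Only entries from the last 200 seconds are considered current.
hits = store.search([1.0, 0.0], now=1000.0, max_age=200.0, k=2)
print(hits)
```

Here the oldest entry is the closest match to the query but is excluded as stale, which illustrates why freshness and search accuracy have to be considered together.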

Integrating context in LLMs for enhanced responses

Providing context to LLMs enables a more refined and specific in-context response, which is crucial for improving user interactions and decision-making. However, the application of context does not stop at RAG. The variance in responses can be further minimized by incorporating additional layers of specificity beyond the LLM framework, ensuring even greater relevance and personalization.

Implementing such context-aware systems requires several capabilities: a large, high-throughput vector store, efficient ingestion of embeddings to keep the context current, and the ability to generate embeddings from diverse data sources. Access to models suited for creating and applying these embeddings is also vital, as is selecting the most appropriate foundation model for the task at hand.

The next phase of GenAI

In conclusion, context is the linchpin of meaningful interactions and effective decision-making in the era of generative AI. By understanding and applying the nuances of specific, in-the-moment contexts, AI systems can offer unparalleled personalization and relevance. The synergy between LLMs, RAG, and embeddings represents a frontier in AI research and application, promising a future where interactions with AI are as nuanced and comprehensible as those between humans.
