Aerospike is pleased to announce the availability of Aerospike 5.0. This is a major release that greatly expands the ability to support globally distributed applications. Particularly noteworthy is the new multi-site clustering feature. For the first time, nodes in a cluster can be geographically distributed while maintaining strong consistency and low-latency reads. This is an active-active architecture with high availability, automatic conflict resolution, and a guarantee that data will not be lost. This unique combination of capabilities will enable new use cases such as globally available systems of record.

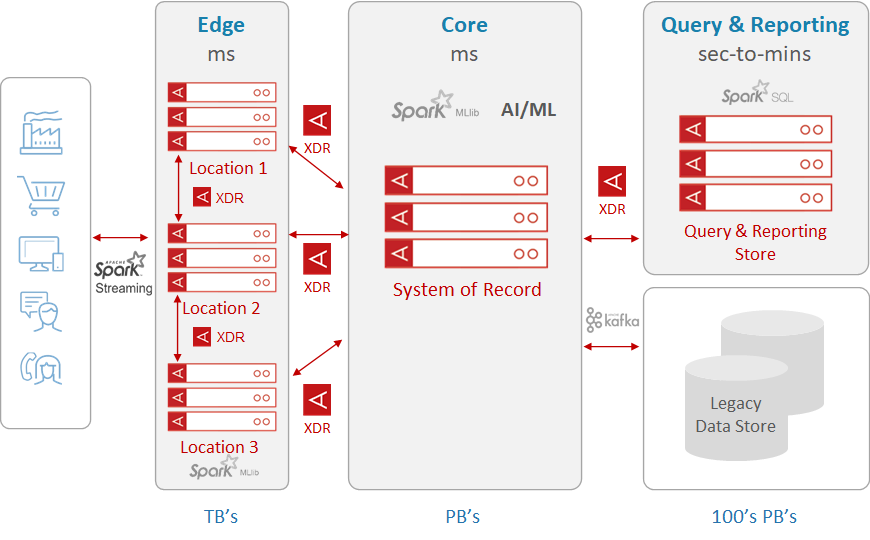

Aerospike Database 5 also features a major upgrade of Cross Datacenter Replication (XDR), offering new options, higher performance and greater configuration flexibility. XDR provides the ability to propagate updates asynchronously between two or more Aerospike clusters in topologies tailored to the needs of the application. It is particularly adept at pushing data collected in edge clusters to global repositories and vice versa. XDR supports active-active configurations where conflicts may need to be resolved in the application. This is the first in a series of XDR enhancements that will be rolled out in subsequent 5.x releases.

Multi-site clustering and XDR are available in the Enterprise Edition. See the release notes for a complete list of 5.0 features.

Upgrade/Downgrade Considerations

The new XDR implementation includes changes to low-level data structures and protocols. Aerospike 4.9 functions as a bridge release, although it does not expose new XDR capabilities. The following rules must be observed to preserve the ability to upgrade/downgrade between Aerospike 5 and earlier server versions:

In a cluster, all nodes running releases earlier than 4.9 must first upgrade to 4.9 before any node is upgraded to 5.0.

In a cluster, all nodes running Aerospike 5.0 or greater must downgrade to 4.9 before any node is downgraded to earlier releases. Downgrading to releases earlier than 4.9 may also require erasing storage devices.
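The two rules above can be expressed as a small pre-flight check. The following is a minimal sketch, not an Aerospike tool: the function names and the version strings are hypothetical, and it only compares major.minor numbers against the 4.9 bridge release.

```python
BRIDGE = (4, 9)  # the bridge release named in the rules above


def major_minor(version):
    """Turn a dotted version string such as '4.9.0.3' into (4, 9)."""
    parts = version.split(".")
    return (int(parts[0]), int(parts[1]))


def can_change_node(cluster_versions, target):
    """Return True if moving one node to `target` respects the bridge rules.

    cluster_versions: versions of every node currently in the cluster.
    target: the version one node is about to be upgraded or downgraded to.
    """
    nodes = [major_minor(v) for v in cluster_versions]
    goal = major_minor(target)
    if goal > BRIDGE:
        # Upgrading past the bridge: no node may still run a pre-4.9 release.
        return all(v >= BRIDGE for v in nodes)
    if goal < BRIDGE:
        # Downgrading below the bridge: no node may still run 5.0 or later.
        return all(v <= BRIDGE for v in nodes)
    # Moving to 4.9 itself is the permitted intermediate step.
    return True
```

For example, with these illustrative version strings, `can_change_node(["4.8.0.1", "4.9.0.3"], "5.0.0.4")` is rejected because one node has not yet reached 4.9. The check does not cover the possible need to erase storage devices when downgrading below 4.9.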

Multi-site Clustering

Historically, nodes in an Aerospike cluster have been located in the same datacenter to minimize latency. Inter-node heartbeats keep track of cluster health, and fabric traffic (from replication and migrations) is potentially high-volume, so this traffic should cross as few switches as possible.

Starting with Aerospike 4, the Rack Awareness feature allowed cluster nodes to be divided between racks such that a given rack contains all the records in a database (either in a master partition or a replica). With this arrangement, the cluster can survive a rack failure without data becoming unavailable.

Multi-site Clustering further loosens constraints on the nodes of a cluster by allowing them to reside in different cloud regions or data centers. As with Rack Awareness, each site should contain all the database records, either in a master or replica partition. With this arrangement, and the use of the Client Rack Aware policy, reads will be satisfied locally (i.e., within a few milliseconds). Write latency depends on network topology, but can generally be held to a few hundred milliseconds. For read-heavy applications, this results in the best of both worlds: fast access and regional availability.
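The idea of satisfying reads locally can be illustrated with a toy routing function. This is a simplified sketch of the concept only, not the client library's implementation: the `Node` type, the node names, and `pick_read_node` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    rack_id: int  # in a multi-site cluster, one rack ID per site


def pick_read_node(replicas, client_rack_id):
    """Prefer a copy of the partition in the client's own rack.

    replicas: nodes holding the record's partition, master first.
    Falls back to the master when no copy lives in the client's rack.
    """
    for node in replicas:
        if node.rack_id == client_rack_id:
            return node
    return replicas[0]


# Hypothetical two-site cluster: master in rack 1, replica in rack 2.
replicas = [Node("us-east-a", 1), Node("eu-west-a", 2)]
local = pick_read_node(replicas, client_rack_id=2)
```

Here a client in rack 2 reads from `eu-west-a` even though the master lives in rack 1. Writes still have to reach the master and its replicas across sites, which is why write latency depends on the network topology.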

A Multi-site cluster obeys the same semantics as an ordinary cluster, meaning that the full range of data consistency models is available, including Strong Consistency (SC) mode. This enables a new class of applications, e.g. globally distributed financial transactions and other systems of record.

It is important to note that this capability is only superficially similar to shipping records both ways between clusters with XDR. The difference is that XDR can’t guarantee strong consistency: there is no way to avoid conflicts caused by nearly simultaneous writes to the same record in both clusters.

Cross Datacenter Replication (XDR)

XDR has been overhauled to address existing pain points and create a baseline for supporting new features. The result is higher performance, greater resilience, and enhanced configuration flexibility.

XDR Architecture

The key change is that the logic for determining which records to ship over XDR is now based on Last Update Time (LUT). The original implementation instead used digest logs (at over 90 bytes/record) and often shipped these between nodes. This added overhead, and under some rarely occurring race conditions, records that should have been shipped were skipped.

The LUT-based approach is more accurate and uses less bandwidth. Crucially, because nodes exchange the last ship time, a replica can take over from a master without holes or duplicates in shipped records.
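The takeover property can be pictured with a toy model. This is a sketch under simplifying assumptions, not the server's algorithm: a partition is modeled as a plain mapping from key to LUT, the timestamps are made-up integers, and `records_to_ship` is a hypothetical helper.

```python
def records_to_ship(records, last_ship_time):
    """Return keys whose last update time (LUT) is newer than the last ship time.

    records: mapping of record key -> LUT.
    Keys are returned oldest update first.
    """
    pending = [key for key, lut in records.items() if lut > last_ship_time]
    return sorted(pending, key=lambda key: records[key])


# Toy partition: record key -> LUT (arbitrary integer timestamps).
partition = {"a": 100, "b": 105, "c": 110, "d": 120}

# The master shipped everything up to LUT 105 and shared that
# last ship time with the replica before failing.
master_last_ship = 105
shipped_by_master = [k for k, lut in partition.items() if lut <= master_last_ship]

# The replica takes over from the exchanged last ship time.
shipped_by_replica = records_to_ship(partition, master_last_ship)
```

In this model every record is shipped exactly once across the handover: nothing updated after the last ship time is skipped, and nothing already shipped is sent again.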

The new XDR architecture eliminates dependency on the Aerospike C client library for XDR-related data transfers, resulting in a major performance boost. The client library approach reused existing code, but at the cost of greater overhead, particularly extra threads and stages, and record object conversions to satisfy the client API. The new implementation transfers data directly from server internal structures to client-server wire protocol with minimal conversions.

XDR traffic has been reorganized to minimize the use of expensive locks. Traffic between two nodes now executes on the same service thread, which improves cache utilization and, as a result, increases throughput and lowers latency.

New XDR Features

XDR in 5.0 supports many new features and more robust behavior in common scenarios:

A remote data center (DC) can now be dynamically added and configured while the source and remote clusters are running.

Remote clusters can now be seeded from an existing cluster, without using the asbackup utility.

Configurations are now specified per DC and per namespace:

DC configurations can be different from each other.

Different namespaces in a given DC are independently configurable.

Rewind capability

A DC can be resynced starting from a specified LUT.

XDR can repair or catch up to a datacenter by injecting a timestamp into the shipping process.

When a new DC is added to XDR dynamically, it can be specified to start in either recovery mode, or to perform an initial sync.

DC independence

A cluster with XDR enabled can keep up with unaffected partitions even if a DC node goes bad.

Alternatively, XDR can be configured to halt if a DC node goes bad.

Connector Destination

The Change Notification framework has been extended to support destinations other than Aerospike cluster nodes. This is done with the 'destination connect' clause in the XDR configuration. The canonical use case for this is supporting connectors that exchange data (in either direction) with other enterprise systems.

The limit on XDR destinations has been raised to 64 (formerly 32).

A Word on Active-Active

Active-Active is a term that often causes confusion because it refers to a spectrum of capabilities rather than a single mode of behavior. Aerospike defines an active-active system as a network of independent processing domains, each providing a representation of the same underlying database. Direct corollaries of this definition are that 1) writes performed in one domain are replicated to the others; 2) every domain may perform a write at any time independent of writes in the other domains.

By this definition, both Multi-site clustering and XDR qualify as Active-Active systems. Where they differ is in their consistency models. With a Multi-site cluster, the constituent nodes are tightly bound by distributed state algorithms. This enables support of Strong Consistency (SC), which guarantees that writes are propagated without data loss (with automatic conflict resolution). The cluster also continues operating even if an entire rack is lost (either through nodes going down or loss of network connectivity).

By contrast, with XDR each independent processing domain is a separate Aerospike cluster, and writes are propagated asynchronously. When two XDR clusters are configured to send each other writes, if both write the same record there is the possibility the records will become inconsistent, and any conflict that occurs will have to be detected and resolved by the application (or manually).
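The way two asynchronously replicating clusters can diverge is easy to reproduce in a toy model. The sketch below is an illustration only and does not reflect how XDR ships records internally: the `Cluster` class, its outbox, and the key and values are all hypothetical.

```python
class Cluster:
    """Toy cluster that applies writes locally and ships them later."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.outbox = []  # local writes waiting to be shipped asynchronously

    def write(self, key, value):
        self.data[key] = value
        self.outbox.append((key, value))

    def ship_to(self, remote):
        # Apply pending writes on the remote side; shipped writes are
        # not re-queued there, so they are not forwarded back.
        for key, value in self.outbox:
            remote.data[key] = value
        self.outbox.clear()


east, west = Cluster("east"), Cluster("west")

# Both clusters write the same record before either has shipped.
east.write("acct", "east-value")
west.write("acct", "west-value")

# Asynchronous shipping then crosses over in both directions.
east.ship_to(west)
west.ship_to(east)
```

After both shipments, `east` holds `"west-value"` and `west` holds `"east-value"`: each cluster has overwritten its own write with the other's, and nothing in the replication path notices. Detecting and repairing this is what falls to the application in such a configuration.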
