What is p99 latency?

Learn what p99 latency means, why the 99th percentile matters for system performance, and how to reduce tail latency. Discover how Aerospike ensures consistent, low-latency performance at scale.

“P99 latency” refers to the 99th percentile of response times in a system. In practical terms, it answers the question: How slow are the slowest 1% of requests? To say a service has a p99 latency of X means 99% of requests finish in X time or less (only the top 1% take longer). It’s essentially the worst-case response time you’ll see for the vast majority of requests, or the maximum latency other than for that last 1%. For example, if a web API’s p99 latency is 200 ms, then on average 99 out of every 100 requests respond in 200 ms or less, while about one takes longer. This metric is also called a “tail latency” measure, because it captures the tail end of the latency distribution, or the slow outliers.

It helps to contrast p99 with other common latency stats:

Median (P50): 50th percentile, the middle of the pack. Half of the requests are faster, half slower. The median (or p50) tells what a “typical” request experiences, but nothing about the extremes.

Average (mean): The arithmetic mean of all response times. Averages can be misleading for latency: a few slow outliers drag the average far from what most users experience, or, conversely, a minority of super-fast requests (such as cache hits) mixed in with many normal ones pulls the average below what the typical request sees. In many cases, unlike the median or a percentile, an average doesn’t correspond to any real request.

P90, P95 – 90th or 95th percentile latencies: These also describe the tail, but not as deep into it. For instance, p95 latency is the level at or under which 95% of requests finish, while the slowest 5% exceed it. Higher percentiles, such as p99 or even p99.9, home in on the rare slowest incidents. The 90th percentile shows the “typical worst-case” for most users, while p99 captures the rare worst cases.

P99 latency is a threshold that nearly all requests meet. It tells you that “99 out of 100 times, our response is this fast; only 1 out of 100 is worse.” It describes performance with the outliers included, without being skewed by one-off anomalies the way “max latency” would be. Max latency could be an outlier event that’s not usually repeated, whereas p99 filters out the truly singular spikes while still reflecting consistent problems. Another way to put it: p99 is the maximum latency experienced by 99% of requests. It’s effectively the worst-case latency under normal operating conditions, ignoring exceedingly rare hiccups.

Why p99 latency matters

Focusing on p99 latency is important because it captures the user experience in the worst reasonable case, and those “long tail” delays have a disproportionate effect on satisfaction and system behavior. Unlike an average or median, the p99 highlights the outliers, or the slow responses that only a few users see, but that still damage your service’s reliability and reputation. Here are several reasons p99 latency is so important:

1. Outliers affect real users: Even if just 1% of requests are slow, those could affect thousands of users when your system is at scale. At high traffic volumes, a “rare” 1% occurrence happens frequently. For instance, if an API handles 1 million requests per day, the slowest 1% corresponds to 10,000 sluggish responses daily, each potentially causing a poor user experience. Tail latency causes the slow encounters that users remember and complain about. While average latency often masks variability, tail metrics show what the slowest interactions look like. In user-facing applications, consistency is key: even if only a small fraction of transactions are slow, users notice the inconsistency and lose trust.

2. Multi-step operations amplify tail effects: Today’s applications often involve multiple microservices or backend calls to fulfill a user request. The overall response is only as fast as the slowest component. If your web page load requires five API calls in parallel, and each API has 99% of responses under 100 ms, the chance that all five will complete within 100 ms is only about 0.99^5 ≈ 95%. In other words, about one in 20 page loads will take longer than 100 ms because one of the calls was in its slow 1% tail. To get the overall operation’s p99 low, each individual component often needs an even better percentile. That is, for five parallel calls, you’d need each at ~p99.8 to have an overall p99≈100 ms. This compounding effect means tail latency in distributed systems degrades end-to-end performance. A caching layer or optimization that improves average times won’t help the user if one out of those five calls still drags. In fact, unless a cache has an extremely high hit rate (99%+), adding caching might not improve the p99 latency of a multi-call operation because just one cache miss can put you back into a slow database path. Thus, p99 latency matters in complex architectures because one slow link in the chain slows the whole user experience.

3. Business and SLA implications: Many services define service level agreements or objectives (SLAs/SLOs) in terms of percentile latencies, such as “99% of transactions will complete in under 250 ms.” Meeting these guarantees means keeping p99 latency within target. If your p99 is high, it indicates frequent SLA violations, which can lead to penalties or customer churn. Even without formal SLAs, user-facing systems expect that most interactions are fast. By keeping tail latency low, you provide a consistently responsive experience for virtually all users, not just the “average” user. Consistency builds trust; people know what to expect. Conversely, if 1 out of 100 interactions is painfully slow, users will remember that one bad experience more than the 99 good ones. In domains such as finance or telecom, one delayed transaction, such as a credit card authorization or a stock trade, has outsized consequences, so engineering teams focus on the 99th (or even 99.9th) percentile to guarantee reliability under peak conditions.

4. Revealing hidden issues: Tail latency is often where systemic bottlenecks and rare bugs surface. A service’s mean latency might look stable, while the p99 is spiking due to infrequent events such as occasional database locks, cache evictions, or garbage collection pauses. By monitoring p99, developers uncover these hidden problems that only show up under stress or at random intervals. P99 latency helps identify less obvious bottlenecks, such as a periodic slow query or a network hiccup, because those issues will push some requests into the slowest 1%. In this sense, p99 is a more sensitive metric for performance anomalies. If you improve your p99 latency, it often means you’ve fixed something fundamental, such as optimizing a heavy code path or tuning a database, which makes the system more robust. In contrast, just improving the average might be possible by caching or handling easy cases faster, which doesn’t necessarily solve the harder, slow cases.

5. Tail latency and user perception: Finally, human perception is such that an inconsistent response time feels worse than a consistently slightly slower one. Users adapt to a service that always takes ~500 ms, but if it usually takes 100 ms and occasionally stalls for 3–4 seconds, it’s jarring. Those occasional worst-case delays, even if rare, erode confidence. In interactive applications such as search, recommendations, or gaming, a long pause disrupts the flow, even if most actions were quick. Thus, optimizing for p99 to reduce those rare high-latency occurrences makes perceived quality better. Google’s engineering teams focus on tail latency so that even under heavy load, nearly all searches complete fast, preventing users from seeing timeouts or long waits. Keeping tail spikes down reduces the worst outlier experiences users might otherwise encounter at some point during their session.
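The compounding arithmetic in point 2 above is easy to check. The short Python sketch below assumes the five parallel calls are independent (real calls often share a database or network, which tends to make things worse) and computes both the chance that every call is fast and the per-call percentile needed for an overall p99. The function names are illustrative, not from any library.

```python
def all_fast_probability(per_call: float, calls: int) -> float:
    """Chance that all `calls` independent parallel calls beat the threshold,
    when each one does so with probability `per_call`."""
    return per_call ** calls


def required_per_call(target: float, calls: int) -> float:
    """Per-call probability (percentile / 100) needed for the whole fan-out
    to beat the threshold with probability `target`, assuming independence."""
    return target ** (1.0 / calls)


# Five parallel calls, each with 99% of responses under 100 ms.
print(f"P(all five under 100 ms) = {all_fast_probability(0.99, 5):.3f}")  # 0.951
# Per-call percentile needed for an overall p99 under 100 ms.
print(f"needed per call = p{100 * required_per_call(0.99, 5):.1f}")  # p99.8
```

The second function is just the first one inverted: taking the fifth root of 0.99 gives roughly 0.998, which is where the ~p99.8 figure comes from.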

Understanding the tail latency challenge

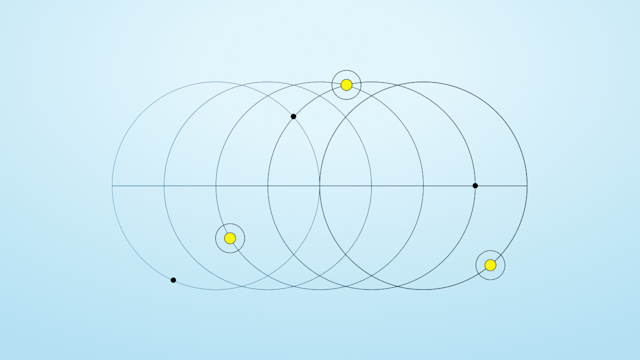

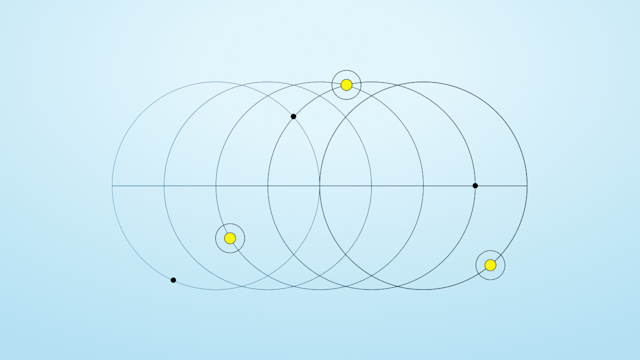

Why do some requests take so much longer than others? In an ideal world, every transaction would have about the same response time. In reality, most systems exhibit a long-tail distribution of latencies, where a small percentage of requests take significantly longer due to various factors. When you plot latency percentiles on a graph, you often get a “hockey stick” curve: relatively flat through most of the distribution, where p50 to p90 might be close together, then shooting up sharply at the tail end of p99 and beyond. In one illustration, the difference in response time between the 10th and 90th percentile was minor, but there was a sharp jump from p99 to p99.9, reflecting those rare, unpredictable delays.

Let’s explore why these tails happen:

Rare events and system variability

Complex systems have many components and potential points of delay. Even if something has a small chance of causing a delay, given enough requests, it will eventually show up. For example, suppose a server experiences a garbage collection pause 1% of the time, perhaps due to Java’s memory management, and that pause adds ~2 ms delay. Roughly 1% of requests then carry an extra 2 ms, which is exactly the slice p99 measures: if the pauses hit even slightly more than 1% of requests, the p99 latency lands about 2 ms above the median, even though the median itself is unaffected.

Now add an even rarer event. Say 0.1% of packets get lost on the network, triggering a retry that adds a two-second delay. That event will almost never affect median or p90 latency, but it will appear around the p99.9 mark, or one in a thousand, as a huge spike. In a distributed environment, there are many such low-probability events, including disk I/O hiccups, context switches, cache misses, and sporadic lock contention, and their cumulative probability means something will go wrong for a small fraction of requests. The region at and beyond p99, especially p99.9, is where all those rare conditions show up. As latency expert Gil Tene quipped, “Outliers are the norm” in large-scale systems. If you have enough trials, even 1-in-1000 events occur regularly. This is why tail latency tends to be much worse than typical latency.
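To see the “hockey stick” emerge from rare events, here is a minimal Python sketch on a hypothetical, deterministic workload: a 1 ms baseline, 1.5% of requests delayed by a ~2 ms garbage collection pause, and 0.2% delayed by a ~2 s retransmit. These rates are assumptions, chosen slightly above 1% and 0.1% so that each event lands on p99 and p99.9, respectively, under the nearest-rank percentile definition.

```python
import math
from fractions import Fraction


def nearest_rank(sorted_ms, p):
    """Nearest-rank percentile of an already sorted list (p in (0, 100])."""
    rank = math.ceil(Fraction(str(p)) / 100 * len(sorted_ms))
    return sorted_ms[rank - 1]


# Hypothetical workload of 10,000 requests (rates are assumptions):
#   98.3% take the 1 ms baseline,
#    1.5% also hit a ~2 ms GC pause,
#    0.2% also hit a ~2 s TCP retransmit.
latencies = sorted([1.0] * 9_830 + [3.0] * 150 + [2001.0] * 20)

for p in (50, 90, 99, 99.9):
    print(f"p{p}: {nearest_rank(latencies, p):,.0f} ms")
print(f"mean: {sum(latencies) / len(latencies):.2f} ms")
```

With these made-up numbers, p50 and p90 are both 1 ms, p99 is 3 ms, and p99.9 jumps to 2,001 ms, while the mean of about 5 ms matches no actual request at all.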

Queuing and contention

Many systems see slowdowns when they are under heavy load due to queueing effects. For example, an application server might handle most requests quickly when lightly loaded, but if it occasionally gets a surge of traffic, some requests will wait in a queue or backlog, increasing their latency. Those queued requests become the tail. If 1% of the time your server is near saturation, the requests during those moments will form the slow tail. This is why tail latency often worsens at high throughput.
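An idealized queueing model shows why. In a single-server M/M/1 queue (Poisson arrivals, exponentially distributed service times, first-come-first-served), a request’s time in the system is exponentially distributed with rate μ − λ, so its q-quantile is −ln(1 − q) / (μ − λ). The Python sketch below assumes a hypothetical server that completes 1,000 requests per second. Real servers aren’t M/M/1, but the shape, with p99 growing like 1 / (1 − utilization), is typical.

```python
import math


def mm1_percentile(arrival_rate: float, service_rate: float, q: float) -> float:
    """q-quantile (0 < q < 1) of time in system for an idealized M/M/1 queue.

    Response time is exponential with rate (service_rate - arrival_rate),
    so the quantile is -ln(1 - q) / (service_rate - arrival_rate).
    """
    if arrival_rate >= service_rate:
        raise ValueError("unstable: arrival rate must be below service rate")
    return -math.log(1.0 - q) / (service_rate - arrival_rate)


SERVICE_RATE = 1_000.0  # hypothetical server: 1,000 req/s, 1 ms mean service time

for utilization in (0.5, 0.8, 0.9, 0.95, 0.99):
    arrivals = utilization * SERVICE_RATE
    p50_ms = 1_000 * mm1_percentile(arrivals, SERVICE_RATE, 0.50)
    p99_ms = 1_000 * mm1_percentile(arrivals, SERVICE_RATE, 0.99)
    print(f"utilization {utilization:.0%}: p50 {p50_ms:6.1f} ms, p99 {p99_ms:6.1f} ms")
```

In this model, the server’s p99 is about 9 ms at 50% utilization but about 460 ms at 99% utilization, even though the work per request never changes.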

As you approach system limits, a larger fraction of requests experience contention for CPU, disk, or locks, causing a long tail. In distributed systems, even if only one server out of a hundred is slow due to garbage collection or overload, any requests routed to that server become part of the overall tail.

Large services typically have many nodes; the slow performance of the slowest node affects the p99 for the whole service. So engineering for low tail latency requires building in headroom to avoid queues, and balancing to avoid one hot spot node lagging behind. Google’s seminal “Tail at Scale” insight was that in a parallel or replicated system, you have to mitigate straggler nodes through techniques like hedged requests or retries to bring down the overall tail latency, because the slowest of many components has the biggest effect on the user’s experience.

Garbage collection and runtime pauses

As mentioned, one common culprit for latency spikes is garbage-collected runtime environments such as Java, .NET, or Python. These systems occasionally pause normal processing to reclaim memory, causing jitter or irregular delays. For example, Apache Cassandra (written in Java) can exhibit p99 latency spikes caused by Java’s periodic garbage collections. A Cassandra user might see most read operations complete in 2 ms, but every so often, a read stalls for 50 ms during garbage collection, pushing that operation into the 99th percentile.

In contrast, a database written in C/C++ with manual memory management might avoid garbage collection pauses and have a tighter latency distribution. Other runtime behaviors, such as just-in-time compilation or virtual machine interference, similarly add tail latency unpredictably. Engineering teams address this by tuning garbage collection, such as by using low-pause collectors or smaller heap sizes, or by choosing technologies that favor consistent latency. Systems optimized for p99 often use languages such as C++ or Rust for critical components to eliminate garbage collection pauses.

Network variability

In distributed services, network communication often causes tail latency. Most requests resolve in milliseconds, but occasionally a packet is lost or a route is congested. TCP retransmits lost packets, but each retransmission can add tens or hundreds of milliseconds of delay, depending on timeouts. If 0.1% of your requests hit a packet loss, those will take substantially longer; in one case, a 0.1% packet loss event introduced a 2-second delay at the 99.9th percentile. Additionally, variations in network routing mean some requests take a slightly longer path. In geo-distributed systems, a few requests might be served by a distant data center due to routing failover, leading to higher latency. All these network issues pile into the tail. P99 latency often includes cases of one or two retries or a second round trip due to network issues. This is why client-side timeouts and retries must be used carefully; they help cut off extreme waits, but add their own traffic, which, if not controlled, might make congestion worse for others.

Disk and I/O outliers

When storage is involved, such as database queries hitting disk, I/O variability affects the latency tail. Most disk reads might be fast because they’re served from cache or SSD, but if a request triggers a rare cache miss or needs to spin up a cold disk, it will be much slower. Traditional hard drives, in particular, had high variance; most seeks took about 10 ms, but a random seek could sometimes take 100 ms. Even with SSDs, there can be outliers.

For example, an SSD might block briefly for wear-leveling or garbage collection internally, causing a read to stall. If a database compaction or backup is running, some queries might slow down, contributing to tail latency. Today’s high-performance systems often use techniques such as log-structured storage or direct raw disk access to minimize variability. For example, Aerospike’s storage engine is optimized for predictability by avoiding long pause operations such as extensive compaction. Still, the general rule is that any non-uniform workload on disks or the network will produce outliers.

Multi-tenancy and noisy neighbors

In cloud environments or shared platforms, tail latency arises from resource contention with other workloads. If your service shares CPU cores, network interface, or storage with other processes, occasionally those can interfere. For example, another high-CPU process kicks in and uses cycles, or a co-located service saturates the network link for a moment. This “noisy neighbor” effect means even if your own application is consistent, the environment might introduce variance. Isolation techniques such as dedicated cores and rate limiting are sometimes needed to control p99 latency in multi-tenant setups.

To summarize, tail latency exists because real systems are not perfectly uniform. Rare events, whether internal, such as garbage collection or thread scheduling, or external, such as network retries, create a long tail in the distribution of response times. As you push a system harder or scale it out, these effects compound, because more load and more components mean more chances for something to go awry. Recognizing this, engineers pay close attention to p99 and higher percentile metrics to gauge the resiliency and performance of a service. It’s often said that “the average case is for when everything goes right; the 99th percentile is for when something goes wrong.” By understanding what causes those “something went wrong” moments, we design systems to mitigate them.

Measuring and interpreting p99 latency

Now that you know what p99 latency is, how do you measure and interpret it? Here are the steps.

How to calculate p99

If you have a set of latency measurements, to get the 99th percentile, you sort them from fastest to slowest and find the value 99% of the way through the list. For a simple example, if you had 100 request timings sorted in ascending order, the 99th percentile would be the 99th item on that list, because 99 of the 100 timings are at or below it and only one is slower. In practice, when the count isn’t a neat 100, one common definition (the nearest-rank method) takes the item at rank ceil(0.99 × N), where N is the number of samples. Many monitoring tools maintain histograms of response times and compute percentiles from them. Unlike an average, a percentile isn’t a sum-and-divide formula; it’s a position in your data distribution, and tools differ slightly in how they interpolate between neighboring samples. High-volume systems often report p50, p90, p95, p99, and p99.9 to give a full picture of the latency curve.
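The nearest-rank method fits in a few lines of Python. This is a sketch of one percentile definition, not the one every tool uses; NumPy’s default `linear` method for `numpy.percentile`, for example, interpolates between neighboring samples and can return slightly different values.

```python
import math
from fractions import Fraction


def percentile_nearest_rank(samples, p):
    """Nearest-rank percentile: the value at rank ceil(p/100 * N) in sorted order."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    # Fraction(str(p)) keeps values like 99.9 exact, so float rounding
    # can't nudge the rank up by one.
    rank = math.ceil(Fraction(str(p)) / 100 * len(ordered))
    return ordered[rank - 1]  # ranks are 1-based


timings = list(range(1, 101))  # 100 requests taking 1..100 ms
print(percentile_nearest_rank(timings, 99))  # 99
print(percentile_nearest_rank(timings, 50))  # 50
```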

Instrumentation and tools

Today’s observability stacks make it easier to track p99 latency. Metrics collectors such as Prometheus or Datadog can be configured to compute percentile latencies over defined intervals, such as each minute or a five-minute window. Many web servers and RPC frameworks have built-in support to measure request durations and export histograms.
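Histogram-based tools don’t keep every sample, so they estimate percentiles from bucket counts. The Python sketch below assumes cumulative buckets (the count of requests at or below each upper bound, ending with an infinite bound) and linear interpolation inside the bucket that contains the target rank, which is similar in spirit to Prometheus’s `histogram_quantile()`. The bucket boundaries and counts are hypothetical.

```python
import math


def estimate_quantile(q, buckets):
    """Estimate the q-quantile (0 < q < 1) from cumulative histogram buckets.

    `buckets` is a list of (upper_bound_ms, cumulative_count) sorted by bound,
    ending with an upper bound of math.inf. Observations are assumed to be
    spread uniformly inside each bucket.
    """
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    target = q * total
    lower, prev_count = 0.0, 0
    for upper, count in buckets:
        if count >= target:
            if math.isinf(upper):
                return lower  # no upper edge to interpolate toward
            return lower + (upper - lower) * (target - prev_count) / (count - prev_count)
        lower, prev_count = upper, count
    return lower


# Hypothetical one-minute histogram of 10,000 requests.
buckets = [(5, 6_000), (10, 9_000), (25, 9_850), (50, 9_950), (100, 9_990), (math.inf, 10_000)]
print(f"estimated p50: {estimate_quantile(0.50, buckets):.1f} ms")
print(f"estimated p99: {estimate_quantile(0.99, buckets):.1f} ms")
```

Here the p99 estimate is 37.5 ms, but the true value could be anywhere between 25 ms and 50 ms. That is why it pays to place bucket boundaries close to your latency targets.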

Distributed tracing tools, such as Jaeger and Zipkin, help by recording the latency of each service hop for individual requests. They can show how a particular microservice or query is contributing to tail latency. Load testing tools such as JMeter, Gatling, and Locust measure p99 under controlled high-load scenarios; they generate a large number of requests and report the percentile stats, which help you understand how your tail latency behaves as traffic increases.

It’s important to capture a large enough sample. Measuring p99 latency on only a handful of requests isn’t meaningful because with 10 requests, the “99th percentile” would basically be the maximum. You typically want thousands of samples to get a stable p99 value. If your traffic is low, you might aggregate over a longer time window or focus on p90 instead.
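A quick way to see why small samples make p99 unreliable is to count how many samples sit above the nearest-rank p99 value. This Python sketch computes ceil(0.99 × n) with integer arithmetic; the sample sizes are arbitrary.

```python
def samples_above_p99(n: int) -> int:
    """Number of samples ranked strictly above the nearest-rank p99 of n samples."""
    rank = -(-99 * n // 100)  # ceil(0.99 * n) in exact integer arithmetic
    return n - rank


for n in (10, 100, 1_000, 10_000):
    print(f"{n:>6,} samples: {samples_above_p99(n)} above the p99 value")
```

With 10 samples, nothing lies above the p99, so it is simply the maximum. With 1,000 samples the estimate is backed by 10 slower observations, and with 10,000 samples by 100.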

In fact, p99 metrics are less reliable for low-traffic services, as one slow request in a small sample skews the percentile. When ‘N’ is small, it might reflect a fluke rather than a persistent issue. On the other hand, if your service gets only 100 requests a day, then that one slowest request is actually 1% of your daily traffic, so you might still care about it. Was it an outlier worth investigating, or just a random blip?

Interpreting p99 in context

When you see a p99 latency number, consider it alongside other metrics. For a full view of performance, it helps to compare p50 to p99. If your median is 10 ms but p99 is 500 ms, that’s a huge spread, meaning most requests are fast but the tail is very slow, which might indicate specific problems, such as a missing database index for certain queries that run occasionally. If p50 and p99 are relatively close, it means the system is consistent, which is good. Comparing p99 over time reveals regressions: If a new code release causes the p99 to jump, something introduced a tail-end slowdown.

Percentile-based alerts are often used in production because they are less noisy than max latency alerts but more sensitive than average latency. For example, an alert might trigger if p99 latency goes above 300 ms for more than a few minutes, indicating a serious degradation for the slowest requests. This avoids false alarms from just one or two extreme outliers, while still catching trends where the tail is growing worse.
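The “above 300 ms for more than a few minutes” rule can be sketched as a check for consecutive breaching windows. This Python version assumes you already have one p99 value per minute and treats three consecutive minutes as “a few”; both the threshold and the window count are placeholders to tune. Alerting systems usually express the same idea declaratively, for instance with the `for:` duration in a Prometheus alerting rule.

```python
def should_alert(p99_per_minute, threshold_ms=300.0, sustained_minutes=3):
    """Fire only when p99 exceeds threshold_ms for `sustained_minutes` consecutive minutes."""
    streak = 0
    for value in p99_per_minute:
        streak = streak + 1 if value > threshold_ms else 0
        if streak >= sustained_minutes:
            return True
    return False


print(should_alert([120, 950, 140, 130]))       # False: one isolated spike
print(should_alert([120, 320, 410, 380, 150]))  # True: three minutes above 300 ms
```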

Another practice is correlating p99 latency with business metrics. If rising p99 correlates with a drop in user engagement or increased error rates, it’s a strong signal that tail latency is hurting the business. Teams often set explicit service level objectives such as “99th percentile checkout latency < 500 ms” and track progress against that, because it helps most customers have a smooth checkout process; those who experience multi-second waits might abandon their cart.

When interpreting p99, also consider the time window of measurement. A p99 latency reported over a one-minute window versus a one-hour window might differ. Over a longer window, you might accumulate more outliers if the system had a few glitchy moments in that hour. Some monitoring systems roll up percentiles, which can be tricky because percentiles don’t aggregate: the average of per-minute p99 values is generally not the p99 of the combined data.
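A small, deterministic Python example with made-up numbers shows how averaging percentiles goes wrong. Two one-minute windows of 100 requests each are summarized separately, then the per-window p99 values are averaged and compared against the p99 of the merged data.

```python
def p99(values):
    """Nearest-rank p99, using integer arithmetic for ceil(0.99 * n)."""
    ordered = sorted(values)
    rank = -(-99 * len(ordered) // 100)
    return ordered[rank - 1]


# Hypothetical one-minute windows of 100 requests each (latencies in ms).
minute_1 = [10] * 100                 # a quiet minute
minute_2 = [10] * 90 + [1_000] * 10   # a minute with a burst of slow requests

averaged = (p99(minute_1) + p99(minute_2)) / 2
merged = p99(minute_1 + minute_2)
print(f"average of per-minute p99s: {averaged:.0f} ms")
print(f"p99 of the merged data:     {merged} ms")
```

Averaging reports 505 ms, but the true p99 across both minutes is 1,000 ms. The safer approach is to merge the raw samples, or to sum histogram bucket counts across windows, and compute the percentile from the merged data.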

It’s often recommended to look at raw histograms or long-tail graphs if possible. For instance, you might look at a histogram of response times and observe the distribution shape. Perhaps 99.5% are under 50 ms, then a thin tail out to 1 s. This gives an intuitive picture of how frequent and severe the slow outliers are.

A concrete example from an access log analysis: An engineer plotted latencies of ~135,000 web requests over 10 minutes. The average latency was under 50 ms, suggesting performance was fine, but in the raw data, some requests took up to 2,500 ms. The average hid those occasional slow requests. When the engineer looked at the 99th percentile latency for that period, it showed spikes close to 1 s. In other words, during those spikes about 1% of requests took roughly 1 s or longer, even though the average stayed under 50 ms. That p99 graph was far more indicative of the user experience, because it revealed that many users likely encountered a ~1 s delay at least once. This example illustrates why relying on averages can be dangerous, and how p99 provides a more transparent view of performance under load.

Finally, improving p99 latency requires a holistic approach. It’s not one number to tweak, but a reflection of many underlying factors. So measuring it is just the start. When p99 is high, you need to dig into logs, traces, and system metrics to isolate why. Is it one specific query pattern that’s slow? Is only one server having issues? A certain time of day when batch jobs run? Often, the next step is to break down p99 latency by category, such as p99 per endpoint or per database query type, to find the culprit. With good monitoring and tracing, you can pinpoint that “1% worst-case” scenario and address it.
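Breaking p99 down by category can be as simple as grouping samples before computing the percentile. In this Python sketch, the endpoint names and latencies are hypothetical, and the nearest-rank definition is assumed.

```python
from collections import defaultdict


def p99_by_key(records):
    """Group (key, latency_ms) pairs and return the nearest-rank p99 per key."""
    groups = defaultdict(list)
    for key, latency_ms in records:
        groups[key].append(latency_ms)
    result = {}
    for key, values in groups.items():
        values.sort()
        rank = -(-99 * len(values) // 100)  # ceil(0.99 * n)
        result[key] = values[rank - 1]
    return result


# Hypothetical request log: (endpoint, latency in ms).
records = (
    [("/search", 20.0)] * 100
    + [("/checkout", 30.0)] * 95
    + [("/checkout", 900.0)] * 5
)
for endpoint, value in sorted(p99_by_key(records).items(), key=lambda kv: -kv[1]):
    print(f"{endpoint:<10} p99 = {value:.0f} ms")
```

Here the per-endpoint view points straight at `/checkout`, whose slowest 5% of requests drive the tail, while `/search` is consistently fast.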

Strategies to improve p99 latency

Bringing down p99 latency often requires more effort and different techniques than improving average latency. By definition, we’re targeting the outliers, or the slowest 1%, which means we have to eliminate bottlenecks and handle rare conditions better. Here are several strategies (from system design to coding practices) to reduce tail latency:

Optimize the critical path: Look at what happens for those slowest requests and improve that path. This could mean adding an index to speed up a database query that occasionally does a full table scan, or refactoring an algorithm that has worst-case performance issues. Often, p99 requests are hitting a slow code path that isn’t taken for most requests. Profiling and tracing tools help identify where those extra milliseconds are going. Once identified, you might need to rewrite that part for efficiency or handle it asynchronously. For example, if 1% of API calls are slow because they trigger an expensive recalculation, consider doing that calculation offline or caching the results so the online request doesn’t pay the full price next time.

Horizontal scaling and load distribution: One fundamental way to reduce tail latency is to ensure no node gets overwhelmed. By scaling out (adding more servers) and balancing load evenly, each machine handles fewer requests and is less likely to experience queue build-ups. A scale-out architecture helps keep latency low under high throughput by adding more capacity. The benefit is twofold: lower average latency and a tighter tail, because it avoids overload situations. If certain partitions of data were busy, distributing them helps avoid one node becoming the slow point.

In a database context, sharding or using a distributed database that automatically balances data can help spread out the work, preventing one slow shard from dragging down the p99. It’s important, however, to also have intelligent load balancing. For instance, if one node in a cluster is responding more slowly, a smart client might route new requests to less busy nodes in the meantime. Some systems use queue length or latency feedback to route traffic away from a node that’s at capacity, which protects tail latency across the cluster.
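One simple form of latency feedback is to track an exponentially weighted moving average (EWMA) of each node’s response time and route new requests to the node with the lowest average. The Python sketch below is hypothetical: the class name, smoothing factor, and starting estimate are all assumptions. Production balancers usually also weigh outstanding requests and add some randomness, because always picking the single lowest-latency node can herd traffic onto it, and a node that is never picked never gets a fresh measurement.

```python
class LatencyAwareRouter:
    """Hypothetical router: send each request to the node with the lowest EWMA latency."""

    def __init__(self, nodes, alpha=0.3, initial_ms=10.0):
        self.alpha = alpha  # weight given to the newest observation
        self.ewma = {node: initial_ms for node in nodes}

    def record(self, node, latency_ms):
        """Fold a new latency observation into the node's moving average."""
        old = self.ewma[node]
        self.ewma[node] = self.alpha * latency_ms + (1 - self.alpha) * old

    def choose(self):
        """Pick the node whose recent responses have been fastest."""
        return min(self.ewma, key=self.ewma.get)


router = LatencyAwareRouter(["node-a", "node-b", "node-c"])
router.record("node-a", 250.0)  # node-a stalls, e.g. during a GC pause
router.record("node-b", 8.0)
router.record("node-c", 12.0)
print(router.choose())  # node-b
```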

Optimize your network and placement: Because network latency may be a factor, reduce the distance and hops between your services and users. Techniques such as deploying content closer to users via content delivery networks (CDNs) or edge computing reduce variability for geographically distributed users. For back-end services, if you have cross-region calls, consider replicating data so each region handles requests locally rather than incurring cross-region latency for a small fraction of requests.

In one AdTech example, engineers determined that even after code optimizations, the main reason their service missed its 10 ms target was the physical distance between the service and the ad exchange clients. Their solution was to deploy an “edge” service in the same cities as the clients, reducing long network hops. The result was p99 latencies around 6 ms at very high request rates, and ~3 ms for the service’s own processing. The lesson is: Bringing data and computation closer to where it’s needed reduces the chance of slow network-related outliers. Likewise, keep your network infrastructure, such as load balancers and routers, tuned. Enabling keep-alives, using HTTP/2 or gRPC to multiplex and reduce connection overhead, and avoiding unnecessary round-trips can all reduce tail latency.

Reduce data transfer volumes: Similarly, transmit less data if possible, because large payloads introduce variability; a bigger response might sometimes hit a slow path in the kernel or congest the network. Techniques such as compressing responses, using binary protocols instead of verbose text, and paginating or streaming large results so clients don’t time out waiting help minimize even worst-case responses.

For instance, if 1% of requests were slow because they fetched an enormous dataset, you might impose limits or send data in chunks. That way, one heavy request doesn’t clog the pipeline, hurting both its own latency and possibly that of its neighbors.

Caching and memory optimization: Caching is a double-edged sword for p99, but used wisely, it reduces tail latency for read-heavy workloads. The key is to maximize cache hit rates on the hottest data. If most requests can be served from an in-memory cache, then only a few go to the slower backend, potentially improving the tail if the cache is effective.

However, you need a high hit rate to improve p99 for multi-call scenarios. Ensure your cache size (“working set fits in memory”) is sufficient so requests rarely need to be retrieved from slow storage. Also, optimize the cache itself for speed. For example, use in-process memory caches or very low latency dedicated cache servers. For write-heavy or dynamic workloads, caching might not help tail latency and could even hurt consistency, so it’s not a universal fix.

Another aspect is memory tuning: make sure your servers have enough RAM to avoid swapping, because disk thrashing affects tail latency. Also, using memory wisely to buffer and batch work helps avoid spikes. For example, a messaging system might buffer a bit more in memory to avoid needing access to disk when there’s a sudden surge.

Addressing garbage collection and virtual machine pauses: If garbage collection is an issue, consider strategies like using alternative collectors, such as ZGC or Shenandoah for Java, which aim to reduce max pause times, tuning heap sizes, or partitioning workloads to smaller heaps. Some teams run multiple smaller Java virtual machines behind a load balancer rather than one huge Java virtual machine, because each instance will collect at different times, so not all requests stall at once.

In extreme cases, rewrite critical components in a language that doesn’t use garbage collection, or use off-heap memory to avoid garbage collection effects. The goal is to eliminate long stop-the-world pauses that create latency outliers. If the runtime allows it, setting real-time priorities or isolating certain threads also helps by keeping logging or background tasks from preempting the request-handling thread.

Concurrency and timeouts: Thread pool tuning affects tail latency. For example, if you have a fixed thread pool and it’s exhausted, new requests queue up and incur latency. Using an async/reactive model or increasing the thread pool, with care to not overload the CPU, keeps things moving. On the flip side, having too many threads causes context-switch overhead that increases variability. It’s a balance.

Timeouts and retries are also tools: You might decide that if a request hasn’t succeeded in X seconds, it’s better to fail fast and possibly retry or return an error than to let a few requests hang long periods. This doesn’t improve the actual latency of that operation, because it failed, but it makes client-perceived latency look better by cutting off extreme waits.
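A fail-fast timeout with a bounded retry might look like the following Python `asyncio` sketch. The helper name and the fake backend are hypothetical; `asyncio.wait_for` cancels an attempt once its timeout expires. Capping the number of attempts matters, because unlimited retries add exactly the kind of extra traffic that can worsen congestion.

```python
import asyncio


async def call_with_deadline(make_call, timeout_s, attempts=2):
    """Run make_call() up to `attempts` times, abandoning any attempt slower than timeout_s."""
    for attempt in range(1, attempts + 1):
        try:
            return await asyncio.wait_for(make_call(), timeout=timeout_s)
        except asyncio.TimeoutError:
            if attempt == attempts:
                raise  # out of attempts: surface the failure instead of waiting longer


async def demo():
    delays = iter([5.0, 0.01])  # hypothetical: first attempt hangs, the retry is fast

    async def flaky_backend():
        await asyncio.sleep(next(delays))
        return "ok"

    return await call_with_deadline(flaky_backend, timeout_s=0.2)


print(asyncio.run(demo()))  # ok
```

The caller waits about 0.2 s instead of 5 s; the hung attempt is cut off rather than made faster.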

Some advanced systems do hedging: If a request is taking too long, they send a duplicate request to another server and use whichever returns first, shielding the client from one slow replica. Google has used this to reduce tail latency in big systems, trading extra work for lower latency. Hedging increases the load slightly and could make problems worse if not limited. But it’s a powerful approach in clustered environments, especially read-heavy ones: By racing two requests, the odds that both hit a slow condition are low, so tail latency drops.
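Hedging can be sketched with `asyncio`: start the request on one replica, and only if it hasn’t answered within a short delay, send a duplicate to a second replica and keep whichever answer arrives first. The replica names and latencies here are hypothetical. Google’s “The Tail at Scale” paper suggests waiting until the first request has been outstanding longer than roughly the 95th-percentile expected latency before hedging, which keeps the extra load small.

```python
import asyncio


async def hedged(call, replicas, hedge_after_s):
    """Send to replicas[0]; if no reply within hedge_after_s, also send to replicas[1].
    Return whichever finishes first and cancel the other."""
    first = asyncio.create_task(call(replicas[0]))
    done, _ = await asyncio.wait({first}, timeout=hedge_after_s)
    if done:
        return first.result()  # fast path: no duplicate request needed
    second = asyncio.create_task(call(replicas[1]))
    done, pending = await asyncio.wait({first, second}, return_when=asyncio.FIRST_COMPLETED)
    for task in pending:
        task.cancel()  # stop waiting on the straggler
    return done.pop().result()


LATENCY_S = {"replica-1": 2.0, "replica-2": 0.02}  # hypothetical: replica-1 is stalled


async def fake_read(replica):
    await asyncio.sleep(LATENCY_S[replica])
    return f"value from {replica}"


async def main():
    return await hedged(fake_read, ["replica-1", "replica-2"], hedge_after_s=0.05)


print(asyncio.run(main()))  # value from replica-2
```

In this run, the stalled `replica-1` would take 2 s, but the hedge sent at 50 ms answers after about 70 ms in total. A production version would also need to handle the case where the first reply to arrive is an error.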


Prioritization and isolation: If you identify certain latency-sensitive requests, such as user-facing queries versus batch jobs, give them priority on resources. Rate-limit or isolate background tasks so they don’t contend with interactive traffic.

For example, run batch jobs during off-peak hours, or run them with low priority using the Unix “nice” command. Isolation can also be at the infrastructure level: Use separate disks or even separate machines for workloads that could interfere. By preventing less important work from causing delays in critical requests, you protect the tail for those critical ones.
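Within a single process, the same prioritization can be sketched as a priority queue that always dispatches interactive work before batch work. This Python example is illustrative; the class and job names are hypothetical. Strict priority can starve batch jobs under sustained load, so real schedulers often add aging or reserve some capacity for low-priority work.

```python
import heapq
import itertools

INTERACTIVE, BATCH = 0, 1  # lower value is dispatched first


class PriorityDispatcher:
    """Hypothetical dispatcher: interactive jobs always go ahead of batch jobs."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # FIFO order within the same priority

    def submit(self, priority, job):
        heapq.heappush(self._heap, (priority, next(self._seq), job))

    def next_job(self):
        return heapq.heappop(self._heap)[2] if self._heap else None


dispatcher = PriorityDispatcher()
dispatcher.submit(BATCH, "nightly-report")
dispatcher.submit(INTERACTIVE, "user-search")
dispatcher.submit(BATCH, "reindex")
dispatcher.submit(INTERACTIVE, "checkout")

order = []
while (job := dispatcher.next_job()) is not None:
    order.append(job)
print(order)  # ['user-search', 'checkout', 'nightly-report', 'reindex']
```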

One real-world example: Some databases have an “online compaction” or maintenance process that hurts latency; by controlling when and how that runs, or offloading it, you avoid p99 spikes.

Monitoring and adaptive strategies: After applying improvements, monitor p99, p99.9, and max in production. When you see a spike, investigate and learn from it. You may find new causes of tail latency as traffic patterns change, such as a new user behavior that triggers a slow path. Adaptive systems could auto-scale when latency climbs or shed load.

For instance, if p99 starts rising, an auto-scaler might add another instance proactively to reduce load-induced tail. Some systems even implement queue time monitoring; if a request waits too long in the queue, they time it out or spawn more handler threads. The philosophy is to be proactive about tail management. Expect that things will occasionally get slow, and have mechanisms to mitigate it when it starts to happen, and before most users notice.
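Queue time monitoring can be sketched as a queue that sheds requests whose wait already exceeds a budget, so stale work fails fast instead of being served late. This Python version is hypothetical: the 50 ms budget is an assumption, and the injectable clock exists only so the example is deterministic. In a real system, a shed request would get an explicit error or overload response rather than being silently dropped.

```python
import time
from collections import deque


class DeadlineQueue:
    """Hypothetical queue that drops requests which waited longer than max_wait_s."""

    def __init__(self, max_wait_s, clock=time.monotonic):
        self.max_wait_s = max_wait_s
        self.clock = clock
        self._items = deque()
        self.shed = 0  # how many requests were dropped for waiting too long

    def put(self, request):
        self._items.append((self.clock(), request))

    def get(self):
        """Return the oldest request still within budget, shedding stale ones."""
        while self._items:
            enqueued_at, request = self._items.popleft()
            if self.clock() - enqueued_at <= self.max_wait_s:
                return request
            self.shed += 1  # too stale: fail it fast instead of serving it late
        return None


now = [0.0]  # fake clock for a deterministic example
q = DeadlineQueue(max_wait_s=0.05, clock=lambda: now[0])
q.put("req-1")
q.put("req-2")
now[0] = 0.10  # a stall: both requests have now waited 100 ms
q.put("req-3")
print(q.get(), q.shed)  # req-3 2
```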

Use cases where p99 latency is critical

Virtually any performance-sensitive system benefits from low p99 latency, but in some domains it’s more important. Let’s look at a few scenarios:

Real-time advertising (AdTech)

Online ad auctions are time-sensitive. When a user visits a webpage, an auction, called real-time bidding, might be held among advertisers to decide which ad to serve. The entire process, from bid request to auction to ad serving, often must complete in under 100 milliseconds, and components such as demand-side platforms and data providers might only get, say, 10–50 ms within that. In this context, p99 latency determines how many bids are missed. If your ad bidding platform has a p99 of 120 ms, then at least 1% of your responses miss the 100 ms window, and those bids don’t count, meaning you lost money.

Therefore, AdTech companies push for extremely low tail latencies. One example is a Walmart ad platform job listing calling for a system that handles more than 100k queries per second while consistently keeping p99 under 100 ms. In AdTech, every millisecond counts, and a high p99 means lost revenue or a competitive disadvantage because bids arrive too late. So these systems use in-memory databases, precomputed features, and edge deployments to keep tail latency low.

Financial trading systems

In electronic trading and high-frequency trading, latency is measured in microseconds, and p99 is often the benchmark. An average latency of 50 microseconds is little comfort to traders if the slowest orders take 500 microseconds; every order needs to reach the exchange as fast as possible, because the slow one might be the one that misses a market opportunity. Tolerance for outliers is low.

An AWS case study by a trading technology firm noted that, in its experience, a 99th percentile under 500 microseconds is considered acceptable for many asset classes, meaning 99% of trades complete well under a millisecond. The firm achieved a p99 of around 62 microseconds on cloud infrastructure with careful tuning. The gap between its median (~50 microseconds) and its p99 (~62 microseconds) was only about 12 microseconds, reflecting a tight distribution after optimization. Reaching that consistency required steps such as disabling hyper-threading, pinning threads to cores, using busy-wait polling, and generally removing operating system noise.
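Verifying a tight distribution starts with measuring it. The sketch below is a hypothetical harness, not anything from the case study. It times a Python callable many times and reports p50, p99, and the gap between them; with the case study’s figures that gap would be about 12 microseconds. In-process Python timing includes interpreter overhead, so treat it as a way to compare before and after a tuning change, not as a trading-grade measurement.

```python
import statistics
import time


def measure_us(op, iterations: int = 10_000) -> dict:
    """Time `op` repeatedly and return p50, p99, and their spread in microseconds."""
    samples = []
    for _ in range(iterations):
        start = time.perf_counter_ns()
        op()
        samples.append((time.perf_counter_ns() - start) / 1_000)  # ns -> µs
    # 99 cut points; index 49 is the 50th percentile, index 98 the 99th.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    p50, p99 = cuts[49], cuts[98]
    return {"p50_us": p50, "p99_us": p99, "spread_us": p99 - p50}


if __name__ == "__main__":
    print(measure_us(lambda: sum(range(100))))
```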

In finance, if one out of 100 transactions were 10× slower, that could mean a lost arbitrage opportunity or an order that fails to execute in time. So firms engineer for ultra-low tail latency using techniques such as kernel bypass, real-time OS patches, and colocating servers as close as possible to the exchange to avoid network unpredictability. The p99 (or often p99.9) serves as the metric for “worst-case latency under normal operation,” and they try to make it as low as technology allows, sometimes at significant cost.

As a result, financial systems often avoid garbage-collected languages, keep system load very low to preserve headroom, and synchronize to hardware clocks to avoid jitter. A statistic such as one trading platform reporting 99% of latencies below 345 microseconds shows how tail-focused the design is.

E-commerce and web applications

Online retail, search engines, and social media have found that slow tail responses hurt user engagement and sales. Amazon found that every 100 ms of added latency cost it 1% in sales. That means not just the average but nearly every interaction needs to be snappy; if 1% of page loads or add-to-cart actions are a second slower, that means lost money.

Web companies often track the “percentage of requests under X seconds” as a key performance indicator. For instance, an SLA might be “99% of page loads under 2s.” Because a page load often includes multiple calls to the products API, the recommendations API, and so on, each of those services internally likely targets p99 in the hundreds of milliseconds or less, so the total stays within seconds.
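Fan-out is why each backend’s p99 matters so much. Assuming each call independently has a 1% chance of landing in its slowest tail (a simplification, since real calls are often correlated), the chance that a page hits at least one slow call is 1 - 0.99^N for N calls:

```python
def prob_any_slow(n_calls: int, p_slow: float = 0.01) -> float:
    """Chance that at least one of n independent calls lands in its slowest p_slow fraction."""
    return 1 - (1 - p_slow) ** n_calls


for n in (1, 5, 10, 100):
    print(n, round(prob_any_slow(n), 3))
# 1 0.01
# 5 0.049
# 10 0.096
# 100 0.634
```

With 100 backend calls per page, a “rare” 1% tail shows up in roughly 63% of page loads under this independence assumption.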

Google likewise found that an extra 500 ms on search results led to a 20% traffic drop. So for search, it aims for low tail latency; even if the average is, say, 100 ms, it might strive for p99 not much higher, to avoid anyone waiting 600 ms or more for a query.

Web UX research shows that humans perceive delays beyond about 100–200 ms in interface reactions, and anything beyond 1–2 seconds starts to feel broken. So companies like Google, Facebook, and Amazon invest in CDNs, edge caching, and highly optimized backends so that almost every request completes quickly. They also use asynchronous loading to hide latency and graceful degradation to limit the impact of slow components.

For example, if a secondary API call is slow at the tail, show the page without that component rather than delay the whole page. Ultimately, though, ensuring that 99%+ of interactions meet the desired speed is important for user retention. It’s often in that last 1% that major issues, such as timeouts or frustrated repeated clicking, occur.
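Graceful degradation can be sketched with a time budget on the secondary call, assuming an asyncio-based page handler. `fetch_recommendations` is a hypothetical service call simulated with a sleep, and the 100 ms budget is an arbitrary example. If the call overruns its budget, `asyncio.wait_for` cancels it and the page ships without that component.

```python
import asyncio


async def fetch_recommendations(delay_s: float) -> list[str]:
    # Hypothetical secondary service; the sleep stands in for a slow network call.
    await asyncio.sleep(delay_s)
    return ["item-1", "item-2"]


async def render_page(reco_delay_s: float, budget_s: float = 0.1) -> dict:
    page = {"product": "core content"}  # primary content is always rendered
    try:
        # Give the secondary call a fixed time budget; wait_for cancels it on timeout.
        page["recommendations"] = await asyncio.wait_for(
            fetch_recommendations(reco_delay_s), timeout=budget_s
        )
    except asyncio.TimeoutError:
        # Degrade gracefully: ship the page without this component instead of waiting.
        page["recommendations"] = None
    return page


if __name__ == "__main__":
    print(asyncio.run(render_page(reco_delay_s=0.5)))  # recommendations omitted
    print(asyncio.run(render_page(reco_delay_s=0.01)))  # recommendations included
```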

For instance, if an online checkout has a long-tail latency where 1% of users wait 10 seconds to process payment, many of those users will drop off and not complete the purchase. So e-commerce sites work to eliminate such cases, perhaps by optimizing the payment gateway integration or providing fallbacks.

Large-scale SaaS and APIs

Any service that provides an API, especially one used in real-time user contexts, has p99 latency as a key metric. Take a mapping API or a payment API. Other applications call these and often block the user’s action until a response comes. If 1% of those calls are slow, it affects the downstream app’s performance. So SaaS providers often advertise their p99 latencies and design for consistency. For instance, a database-as-a-service might claim p99 reads under 5 ms for a certain workload to assure customers of predictable performance. Internally, that means tightly managing factors such as multitenancy and using fast storage.

Another example is multiplayer online games. If most players have a 50 ms response time but some have 300 ms (p99 scenario), those with the spike will experience lag and a poor gaming experience. Therefore, game platforms measure latency distributions and strive to keep even the slowest frames or messages within a tight envelope, using techniques such as interpolation or dropping frames rather than having one frame be late.

Critical infrastructure (telecom, IoT)

In telecommunications, services such as call routing or subscriber authentication often require low tail latency. When you make a mobile phone call, the network’s databases need to set up the call within a short time. If 1% of those lookups are slow, that could mean many dropped or failed call setups. Telcos often use in-memory data grids or high-performance data stores so virtually every query is fast, and they may have strict SLOs such as “<50 ms 99.9% of the time.”

Similarly, in Internet of Things (IoT) or sensor networks, if you’re collecting real-time data from thousands of sensors and reacting in a smart factory or a self-driving car, you care that the slowest readings don’t come too late. A control system might require that 99.9% of sensor readings arrive within, e.g., 100 ms; any slower could cause safety mechanisms to trigger or degrade performance.
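SLOs phrased as “X% within Y ms” can be checked directly against collected latencies. This minimal sketch counts requests at or under the threshold; the 10,000 readings are hypothetical, and an SLO written as a strict “<” would need `<` instead of `<=`.

```python
def slo_compliance(latencies_ms, threshold_ms) -> float:
    """Fraction of requests that completed within threshold_ms."""
    if not latencies_ms:
        raise ValueError("no samples")
    within = sum(1 for x in latencies_ms if x <= threshold_ms)
    return within / len(latencies_ms)


def meets_slo(latencies_ms, threshold_ms, target: float = 0.999) -> bool:
    """True if at least `target` of requests finished within the threshold."""
    return slo_compliance(latencies_ms, threshold_ms) >= target


# Hypothetical sample: 10,000 sensor readings, 8 of which arrived late.
readings = [20.0] * 9_992 + [180.0] * 8
print(slo_compliance(readings, 100))  # 0.9992
print(meets_slo(readings, 100))  # True
```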

Each of these use cases underscores a common theme: the need for consistent, reliable speed. The exact p99 target ranges from milliseconds for web applications to microseconds for trading, but the mindset is the same: Don’t just optimize the middle of the distribution; optimize the worst-case experiences that are still likely to happen. By doing so, these industries keep service quality high, whether that means maximizing revenue per user because no one walks away due to slowness, winning auctions, or executing trades at competitive speeds.

Next steps

Aerospike is built to keep that p99 tail tight. Aerospike’s real-time data platform is engineered for predictably low latency at scale, at millions of transactions per second with consistent, low-millisecond p99s, even when workloads are spiky and data sizes are large. Its patented Hybrid Memory Architecture, which keeps indexes in memory and data on fast storage, together with its log-structured design and C-based core, reduces jitter from garbage collection pauses, compaction, and I/O outliers. The result: fewer stragglers in multi-service calls, more headroom under load, and SLOs that hold when it matters most.

Ready to turn “p99” from a risk into a competitive advantage? Explore Aerospike benchmarks or talk with our engineers about your latency targets. Get a demo or start a free trial to see how Aerospike keeps your 99th percentile fast at any scale.
