The foundation for real-time AI: Inside Aerospike’s high-performance data infrastructure

Learn how low latency, millions of TPS, and consistent performance enable predictive, generative, and agentic AI. Results from PayPal and Barclays.

Enterprises are intrigued by the promise of AI, but stymied by the latency. Models predict, generate, and reason, but only if the data keeps up. Every millisecond has a business consequence. In those milliseconds, a bank decides whether to approve a transaction, and a retailer chooses which product to recommend.

That was the takeaway when Aerospike Chief Evangelist Sesh Seshadri delivered his presentation, “Building High Performance Data Infrastructure for AI,” at Align AI in San Francisco. Sesh showed what happens when inference pipelines meet conventional database speed limits, and how rethinking the data layer improves real-time decision quality while keeping the latency budget the same. Predictive AI, generative AI, and now agentic AI each place demands on the database layer, forcing enterprises to rethink what performance really means, he said. Supporting AI requires sub-millisecond latency, millions of transactions per second (TPS), and consistency across distributed environments.

With the right infrastructure, these are the kinds of results to expect:

PayPal processes and analyzes emerging fraud patterns in under 200 milliseconds, supporting 8 million TPS.

AppLovin serves 70 billion ad requests daily across 3 billion devices, making real-time decisions under 1 millisecond.

Wayfair replaced 60 Cassandra servers with seven Aerospike nodes, with more than 1 million TPS at less than 1 millisecond latency.

Barclays consolidated multiple platforms into a real-time fraud detection system, managing more than 30 TB of data and more than 10 million daily transactions with four times the throughput and an 80% reduction in latency compared with its prior system.

Each of these deployments demonstrates that AI performance and efficiency start at the database layer.

The evolution of AI: Predictive, generative, and agentic

The same story is playing out across the industry. As AI adoption increases, workloads are evolving, and so are the requirements of the data systems to support them.

“AI is rapidly changing… predictive AI, generative AI, and agentic AI,” Sesh said, describing that evolution as three phases. Each represents a new way of thinking about intelligence, and each places new demands on the infrastructure that powers it.

Predictive AI focuses on anticipating what will happen next, relying on structured inputs, deterministic models, and speed.

Generative AI combines foundation models with real-time context to create text, code, and recommendations on the fly.

Agentic AI takes both ideas further: AI systems that plan, decide, and act autonomously, orchestrating data and micro-datasets in real time.

“Agentic AI takes predictive and genAI to the next level,” said Sesh. “How do you use those two for goal-oriented purposes?”

That evolution sets the stage for everything that follows: architectural patterns, performance benchmarks, and customer innovations, which show what happens when Aerospike becomes the foundation for all three.

Predictive AI: Powering real-time inference at enterprise scale

Predictive AI remains the foundation of most production systems today. It’s the layer of intelligence that decides what happens next: whether a transaction is legitimate, which product appears first, or which user receives a personalized offer. Those decisions depend on speed, reliability, and scale.

“Aerospike is a very high-performance database supporting latencies of sub-millisecond, throughputs of millions of transactions per second at a very high reliability,” said Sesh. “Our customers jokingly say, ‘We just go to sleep once we install Aerospike.’”

At Myntra, that reliability powers a real-time recommendation engine serving millions of users. Its architecture reflects a pattern now common in predictive AI pipelines: Operational and clickstream data flow into a platform that combines streaming and batch processing. From there, features are generated and served to an online feature store where Aerospike delivers real-time inference for live applications.
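The serving side of that pattern can be sketched in a few lines. This is a minimal illustration, not Myntra’s or Aerospike’s implementation: a plain dictionary stands in for the online feature store, and the names `OnlineFeatureStore`, `put_features`, and `get_features`, along with the feature names and values, are hypothetical.

```python
from collections import defaultdict


class OnlineFeatureStore:
    """Hypothetical in-memory stand-in for an online feature store."""

    def __init__(self):
        self._rows = defaultdict(dict)  # entity id -> {feature name: value}

    def put_features(self, entity_id, features):
        # Batch and streaming writers both merge values into the same row.
        self._rows[entity_id].update(features)

    def get_features(self, entity_id, names):
        # Single-key read at inference time; missing features come back as None.
        row = self._rows.get(entity_id, {})
        return {name: row.get(name) for name in names}


store = OnlineFeatureStore()
# Batch job: slow-moving features computed offline.
store.put_features("user:42", {"avg_order_value": 37.5, "orders_90d": 4})
# Streaming job: a fresh feature derived from clickstream events.
store.put_features("user:42", {"clicks_last_5m": 9})

features = store.get_features(
    "user:42", ["avg_order_value", "clicks_last_5m", "returns_90d"]
)
print(features)
```

The point of the sketch is the access pattern: many writers update a row keyed by entity, and the model reads the whole feature vector with one key lookup at request time.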

As models grow more complex, the inference process itself becomes multi-step. “In the e-commerce setting, you might say, ‘Hey, I got a query. What is this query? What category of products is it even referring to?’” Sesh said. “You might generate multiple signals or features, and then you feed that to a final, later model that makes a decision as to what products to recommend or give back.”

This stage-by-stage approach improves both accuracy and performance. Early models compute inference-time features quickly, narrowing the field for deeper analysis. “The other sort of common paradigm for multi-step is the amount of data you expose to a model,” Sesh said. “You expose a lot of data initially, and then decrease it over time because the initial models are what I call approximate, cheaper models. So you filter very quickly using some filters and then do a more detailed interview.”

The benefit is simple: better decisions within the same time. “Maybe instead of a hundred documents after the retrieval stage, I have 500 documents from the retrieval stage, and I want to rerank 500 documents rather than 100 documents,” said Sesh. “Obviously, that’s a better result. You’re going to make much better decisions for the same time budget.”
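The filter-then-rerank idea Sesh describes can be illustrated with a small sketch. Everything here is an assumption made for illustration: the two scoring functions are toy stand-ins for a cheap approximate model and a costlier detailed one, and the `candidates` parameter plays the role of the 100-versus-500 document count in the quote.

```python
def cheap_score(query_terms, doc):
    # Approximate first-stage score: how many query terms appear in the document.
    words = set(doc.lower().split())
    return sum(1 for term in query_terms if term in words)


def detailed_score(query_terms, doc):
    # Stand-in for a costlier model: matching term count normalized by length.
    words = doc.lower().split()
    if not words:
        return 0.0
    return sum(words.count(term) for term in query_terms) / len(words)


def two_stage_rank(query, docs, candidates, top_k):
    terms = query.lower().split()
    # Stage 1: cheap filter over everything, keeping `candidates` documents.
    shortlist = sorted(docs, key=lambda d: cheap_score(terms, d), reverse=True)[:candidates]
    # Stage 2: detailed scoring over the shortlist only.
    return sorted(shortlist, key=lambda d: detailed_score(terms, d), reverse=True)[:top_k]


docs = [
    "red running shoes",
    "blue running shoes for men",
    "red dress for summer",
    "kitchen knife set",
]
result = two_stage_rank("red running shoes", docs, candidates=3, top_k=2)
print(result)
```

If data access for each candidate is faster, `candidates` can grow while the total time stays fixed, which is the tradeoff the quote describes.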

That’s why Aerospike’s architecture is central to enterprise AI workloads. By delivering predictable performance at extreme scale, organizations increase model sophistication without sacrificing speed.

“Aerospike on a technology basis allows us to do more complex risk calculations in less time,” said Nick Blievers, senior director of engineering at LexisNexis. “A more thorough risk calculation allows better accuracy for our customers, to avoid friction in their transactions and ultimately avoid losing money in fraud.”

Predictive AI may be the oldest of the three forms, but it’s also the most unforgiving. Every millisecond matters, and Aerospike doesn’t waste them.

Generative AI: Grounding large language models with real-time context

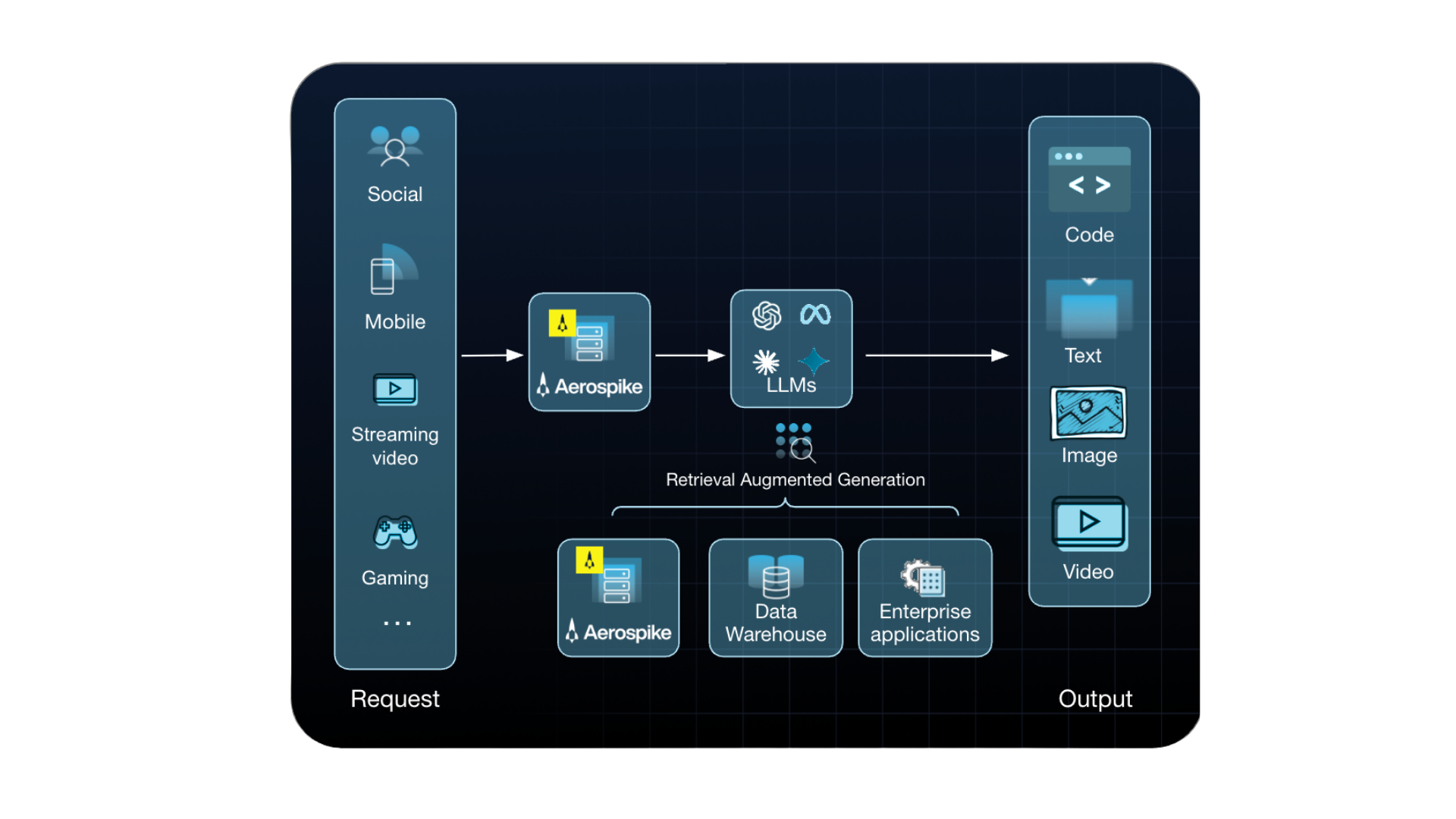

If predictive AI is about anticipating what happens next, generative AI is about creating something new. It’s the engine behind conversational systems, creative assistance, and the growing number of enterprise copilots redefining customer and employee experiences. But even the most advanced models can’t generate accurate responses without context, and that’s where data infrastructure becomes a bottleneck.

In retrieval-augmented generation (RAG), the challenge is to find the right set of documents, explained Sesh. “So it’s absolutely the same pipeline. This could have been search, could have been RAG,” he said. “RAG is nothing but a search in the context of finding the right set of documents to feed to an LLM. For the very same reasons, we are an awesome feature store for recommendations. We are an awesome feature store for search, and we are an awesome feature store for RAG.”

RAG requires low-latency access to high-quality data, whether that’s customer histories, transaction logs, product catalogs, or policy information. When the feature store delivers that data in milliseconds, large language models (LLMs) produce relevant, grounded, and compliant responses.
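A rough sketch of the retrieval step makes the “RAG is search” point concrete. The assumptions are stated plainly: word overlap stands in for whatever similarity search a real pipeline uses, the documents are made up, and `retrieve` and `build_prompt` are hypothetical helper names. No LLM is called; the sketch stops at the prompt that would be sent.

```python
def retrieve(query, documents, k):
    # Rank documents by word overlap with the query (stand-in for real search).
    query_words = set(query.lower().split())
    scored = [
        (len(query_words & set(text.lower().split())), doc_id, text)
        for doc_id, text in documents.items()
    ]
    # Highest overlap first; ties broken by document id for determinism.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]


def build_prompt(query, passages):
    # Ground the model by placing the retrieved passages ahead of the question.
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


documents = {
    "policy-1": "Returns are accepted within 30 days of delivery",
    "policy-2": "Standard shipping takes three to five business days",
    "catalog-9": "Trail running shoes with waterproof lining",
}
question = "how many days for returns"
passages = retrieve(question, documents, k=2)
prompt = build_prompt(question, passages)
print(prompt)
```

The retrieval call sits on the critical path of every response, so its latency adds directly to the time the user waits for the model’s answer.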

At Myntra, this architecture comes to life through Maya, the company’s generative AI shopping assistant. Running on Aerospike, Maya combines real-time product data with user behavior to personalize every conversation and guide customers toward faster purchase decisions.

The same principles driving recommendation engines apply to generative systems, Sesh said. In both cases, the goal is to make real-time decisions based on the most relevant context possible. The faster the system retrieves, filters, and delivers that data, the smoother the experience feels.

In these systems, accuracy depends on retrieving the right data at the right moment. RAG pipelines rely on infrastructure that finds relevant context quickly enough to keep LLMs responsive and accurate.

Agentic AI: Enabling goal-oriented intelligence at scale

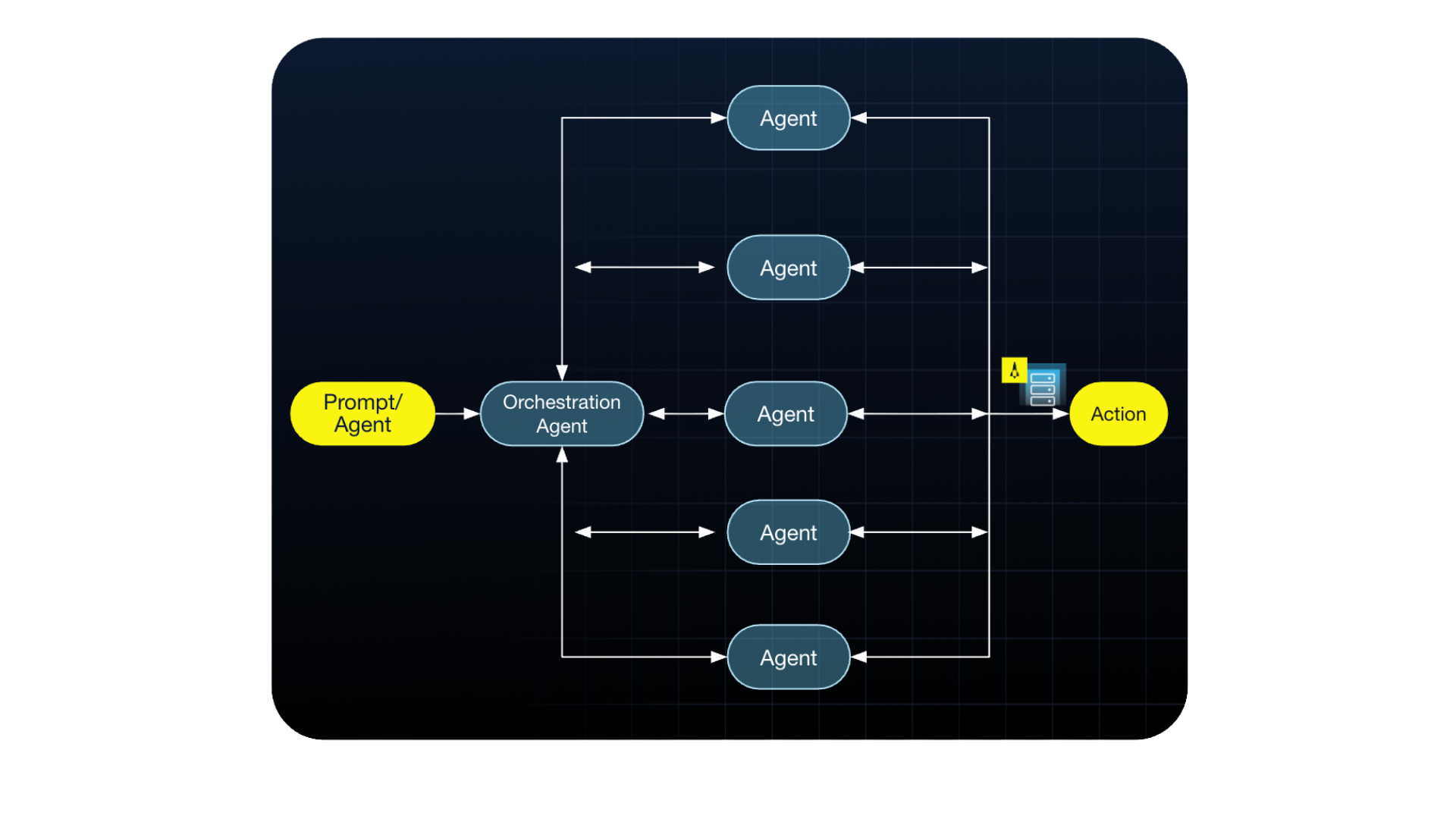

If predictive AI forecasts what will happen and generative AI creates new artifacts, agentic AI closes the loop. These systems don’t just respond; they plan, act, and adapt in pursuit of a goal. They represent the next evolution of intelligence, where automation meets reasoning in real time.

“Agentic AI takes predictive and genAI to the next level,” said Sesh. “It mimics what a human would do, and usually has multiple steps.”

Agentic systems combine LLMs, reinforcement learning, and decision frameworks to operate autonomously. They pull data from multiple sources, such as social media, mobile devices, data warehouses, observability platforms, and databases, and coordinate dozens of micro-interactions across them.

Sesh described these workloads as being defined by micro-datasets: small, fast, session-specific data objects that are constantly created, queried, updated, and retired, sometimes within seconds. “There are millions of agents that are probably working at the same time across one to millions of users,” he said. “These have finite life, and these are all things for which Aerospike is ideal.”

But autonomy introduces its own challenges. Guardrails must bound each agent’s decisions for accuracy, fairness, and compliance, and the system must be able to evaluate and correct actions in flight. These systems depend on validation loops to assess whether outputs align with desired outcomes.

Managing ephemeral data and continuous validation requires infrastructure that writes, reads, and expires large numbers of records with predictable latency. Aerospike’s patented Hybrid Memory Architecture, delivering in-memory performance with disk-level cost efficiency, was designed for that pattern.
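The write, read, and expire lifecycle of a micro-dataset can be sketched as follows. This is a toy under stated assumptions: it does not use the Aerospike client, the `ExpiringStore` class and the key and value shown are hypothetical, and expiry is checked lazily on read rather than by a background process. A fake clock keeps the example deterministic.

```python
import time


class ExpiringStore:
    """Hypothetical in-memory store where every record carries a time to live."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._records = {}  # key -> (expires_at, value)

    def put(self, key, value, ttl_seconds):
        # Each write stamps the record with its own expiry time.
        self._records[key] = (self._clock() + ttl_seconds, value)

    def get(self, key):
        entry = self._records.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Lazily retire the record on the first read after expiry.
            del self._records[key]
            return None
        return value


# Fake clock: a one-element list the example advances by hand.
now = [0.0]
store = ExpiringStore(clock=lambda: now[0])
store.put("agent:7:session", {"step": 1, "goal": "rebook flight"}, ttl_seconds=30)
before = store.get("agent:7:session")  # still alive at t = 0
now[0] = 31.0
after = store.get("agent:7:session")  # expired at t = 31
print(before, after)
```

With millions of short-lived agent sessions, records like this are created and retired continuously, which is why predictable latency on writes and expirations matters as much as on reads.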

As agentic AI matures, the database becomes the coordination and validation layer that helps intelligent systems reason, respond, and act responsibly.

Customer innovation: AI infrastructure in production

Throughout his session, Sesh returned to one consistent point: the proof of performance is in production. Enterprises using Aerospike for AI aren’t running prototypes. They’re operating real systems that make real decisions in real time.

At Barclays, accuracy and speed were non-negotiable. “Barclays is using us for fraud detection,” said Sesh. “They had an older system that was making a lot of inaccurate decisions. They had both false positives and false negatives, and they could not tighten it. They tightened it too much. They got too much of one versus the other.”

The solution was a simplified, single-platform architecture capable of real-time response. “Finally, we replaced a lot of moving parts with a single platform, simplified architecture,” Sesh said. “We were able to handle everything in real time and respond within the time budget.”

That change eliminated the tradeoff between accuracy and responsiveness. “With Aerospike, we were able to dramatically reduce stand-in processing, data consistency issues, as well as false positives and false negatives for future transactions,” said Dheeraj Mudgil, Barclays’ vice president and enterprise fraud architect.

At Wayfair, the focus was efficiency. “We went from 60 to 7 servers, and repeatedly we deliver way higher throughput,” said Sesh. The reduction in server count, combined with lower latency, demonstrated how improved data access translates into infrastructure efficiency.

At PayPal, Aerospike supports a multi-model fraud detection framework that combines segmentation, ensemble learning, and real-time decisions. Sesh said these multiple models work together to analyze transactions without exceeding time limits.

Each example illustrates the same principle: Real-time intelligence depends on databases that maintain sub-millisecond performance under heavy transactional load. Architectures differ, but the outcome is consistent: AI that performs at production scale.

Designing databases for AI: Lessons in performance and consistency

The production examples of Barclays, Wayfair, and PayPal point to a clear conclusion: building infrastructure for AI means rethinking how databases are designed. Throughput, latency, and consistency aren’t abstract metrics. They determine the quality of inference, accuracy, and user experience. Sesh explained that these constraints become most visible when AI systems proceed from experimentation to production.

“Those are true whether you’re powering AI use cases or not, but definitely true when you’re powering AI use cases,” Sesh said. “What’s your scale throughput requirement? What is your sensitivity towards latency? Do you want strong consistency? Are you sure you want strong consistency? Do you want eventual consistency? Do you want to consider three-year TCO?”

Each of these tradeoffs affects how AI performs in production. A real-time recommendation system, a retrieval pipeline, and a fraud detection model all depend on the same underlying database properties: predictable performance, consistent behavior, and efficient cost scaling.

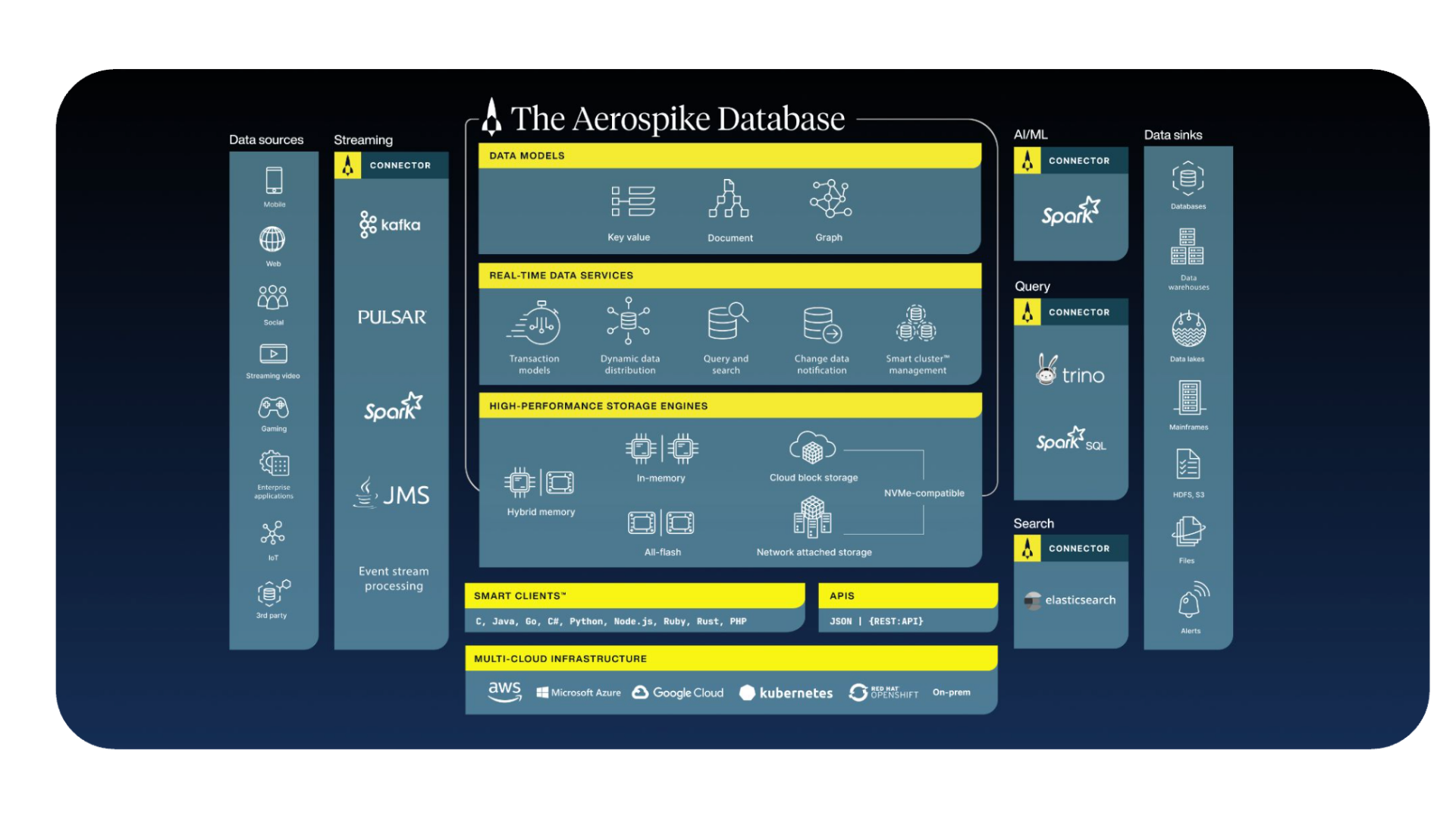

Aerospike’s Hybrid Memory Architecture addresses these performance and cost tradeoffs. “The Hybrid Memory Architecture gives you the read performance as though the data were in memory while storing the data on disk,” Sesh said. “Because it gives you the performance of a database that is in memory, it’s way better than the performance of a database that stores purely on disk.”

This architecture balances memory-speed access with disk-level efficiency, so systems maintain predictable performance as data volumes grow.
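One common way to get memory-like reads over disk-resident data is to keep a compact index in memory and the record data on persistent storage. The sketch below assumes that split purely for illustration; it is a toy, not Aerospike’s implementation, and the `IndexInMemoryStore` class and sample records are hypothetical.

```python
import os
import tempfile


class IndexInMemoryStore:
    """Toy store: the key index lives in a dict, the values live in a file."""

    def __init__(self, path):
        self._file = open(path, "w+b")
        self._index = {}  # key -> (offset, length) of the latest value

    def put(self, key, value: bytes):
        # Append the value to the data file and record where it landed.
        self._file.seek(0, os.SEEK_END)
        offset = self._file.tell()
        self._file.write(value)
        self._file.flush()
        self._index[key] = (offset, len(value))

    def get(self, key):
        location = self._index.get(key)
        if location is None:
            return None  # a miss is answered from memory, with no disk access
        offset, length = location
        # One seek plus one read: the index lookup itself never touches the disk.
        self._file.seek(offset)
        return self._file.read(length)

    def close(self):
        self._file.close()


with tempfile.TemporaryDirectory() as tmp:
    store = IndexInMemoryStore(os.path.join(tmp, "data.bin"))
    store.put("txn:1", b"amount=120;country=GB")
    store.put("txn:2", b"amount=15;country=US")
    store.put("txn:1", b"amount=125;country=GB")  # update appends; index moves
    first = store.get("txn:1")
    missing = store.get("txn:3")
    store.close()
print(first, missing)
```

Because only the small index has to fit in memory, the data set can grow to disk capacity while each read still costs a single storage access.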

For teams designing infrastructure today, Sesh’s advice was clear: performance, cost, and reliability are not competing objectives; they are design inputs.

Built for the AI of today and tomorrow

As the webinar drew to a close, Sesh returned to the theme that connected every example and architecture discussed: performance and efficiency aren’t optional; they are what make AI practical at scale.

“For AI, performance is super critical,” Sesh said. “The cost and the efficiency at which you can do it are very critical.”

From predictive models that detect fraud, to generative assistants that personalize experiences, to agentic systems that reason and act autonomously, the same foundation enables them all: fast access to the right data, at the right time, with predictable reliability.

Aerospike’s design philosophy reflects that reality. The database was built to handle the transactional intensity and architectural complexity of today’s AI without trading speed for scale.

As enterprises move from experimentation to production, their infrastructure decisions determine how far their AI can go. The lesson from the webinar was clear: the future of intelligence belongs to systems that think in real time, and Aerospike already powers that reality today.