From prediction to autonomy: AI’s evolution delivers new data demands

Explore the shift from predictive to agentic AI and what it means for enterprise data infrastructure, retrieval systems, and scalability.

(A version of this article was previously published on Forbes.com.)

Enterprise AI has evolved rapidly: from predictive models answering targeted questions, to generative models producing content on demand, and now to agentic AI systems that pursue goals autonomously through multi-step reasoning. These shifts have come fast, sometimes within months, and each new wave brings both new capabilities and new demands on data infrastructure.

To navigate this, enterprise leaders can think in three phases: predictive AI, generative AI (GenAI), and agentic AI. Each uses data differently, places different pressure on infrastructure, and demands a different approach to scale.

Predictive AI: Local, targeted, transactional

The earliest enterprise AI systems were predictive. These tools were trained to evaluate specific scenarios—flagging fraudulent transactions, identifying potential customer churn, or serving a user the most relevant ad.

These systems operated within narrow, predefined scopes and were designed to make one-off decisions based on structured inputs: a snapshot of data from a single moment or transaction. As a result, the primary infrastructure demands were straightforward: ensure high availability and low-latency response. Speed and reliability mattered more than flexibility or adaptability.

To see how predictive AI delivers value in practice, consider how two Aerospike customers, PayPal and Wayfair, apply it at enterprise scale.

PayPal

PayPal is the world’s largest online money transfer, billing, and payments system. It owns the PayPal, Venmo, iZettle, Xoom, Braintree, and Paydiant brands. By leveraging technology to make financial services and commerce more convenient, affordable, and secure, the PayPal platform empowers over 325 million consumers and merchants in over 200 markets to join and thrive in the global economy.

PayPal relies on Aerospike’s patented Hybrid Memory Architecture to power predictive fraud detection across its global payment network. With Aerospike at the core, PayPal executes fraud checks on more than 8 million transactions per second, hitting 99.95% SLA compliance and reducing missed fraudulent transactions by 30x. Beyond accuracy, the move also created massive efficiency gains: a server footprint more than 8x smaller (from 1,024 servers down to 120), hardware costs more than 3x lower ($12.5M down to $3.5M), and a 5x boost in throughput, all while maintaining sub-millisecond decisioning.

“Prior to Aerospike, we were using another in-memory database and running into challenges in terms of the cost of scaling. We needed to seamlessly leverage both the memory and disk in such a way that it can guarantee a consistent performance. We moved to Aerospike for its Hybrid Memory Architecture to leverage next generation memory and SSDs to their fullest advantage.” — Sai Devabhaktuni, Senior Director of Engineering, PayPal

Wayfair

Wayfair is one of the world’s largest online destinations for home goods, offering over 14 million products from more than 11,000 suppliers. With a mission to help everyone, anywhere, create their feeling of home, Wayfair leverages technology to provide personalized shopping experiences. Innovations like Muse, an AI-powered visual discovery tool, exemplify Wayfair's commitment to transforming how customers discover, personalize, and shop for their dream spaces.

Wayfair brings predictive AI into the customer experience by using Aerospike to deliver real-time personalization at massive scale. The company replaced Cassandra and Redis with Aerospike, shrinking a 60-node cluster down to just 7 servers, an 88% reduction in infrastructure footprint. With sub-millisecond P99.9 latency and the ability to sustain over 1 million transactions per second during peak events like Way Day, Aerospike enables Wayfair to increase cart sizes, reduce abandonment, and keep the shopping journey smooth even under heavy demand.

“Wayfair leverages Aerospike for customer scoring and segmentation, tracking events online, ‘listening’ to customer activity for marketing decisions, onsite advertising, and recommendation engines...Aerospike is pretty much the heart of everything we do in AdTech. A lot relies on it.” — Ken Bakunas, NoSQL Data Architect, Wayfair

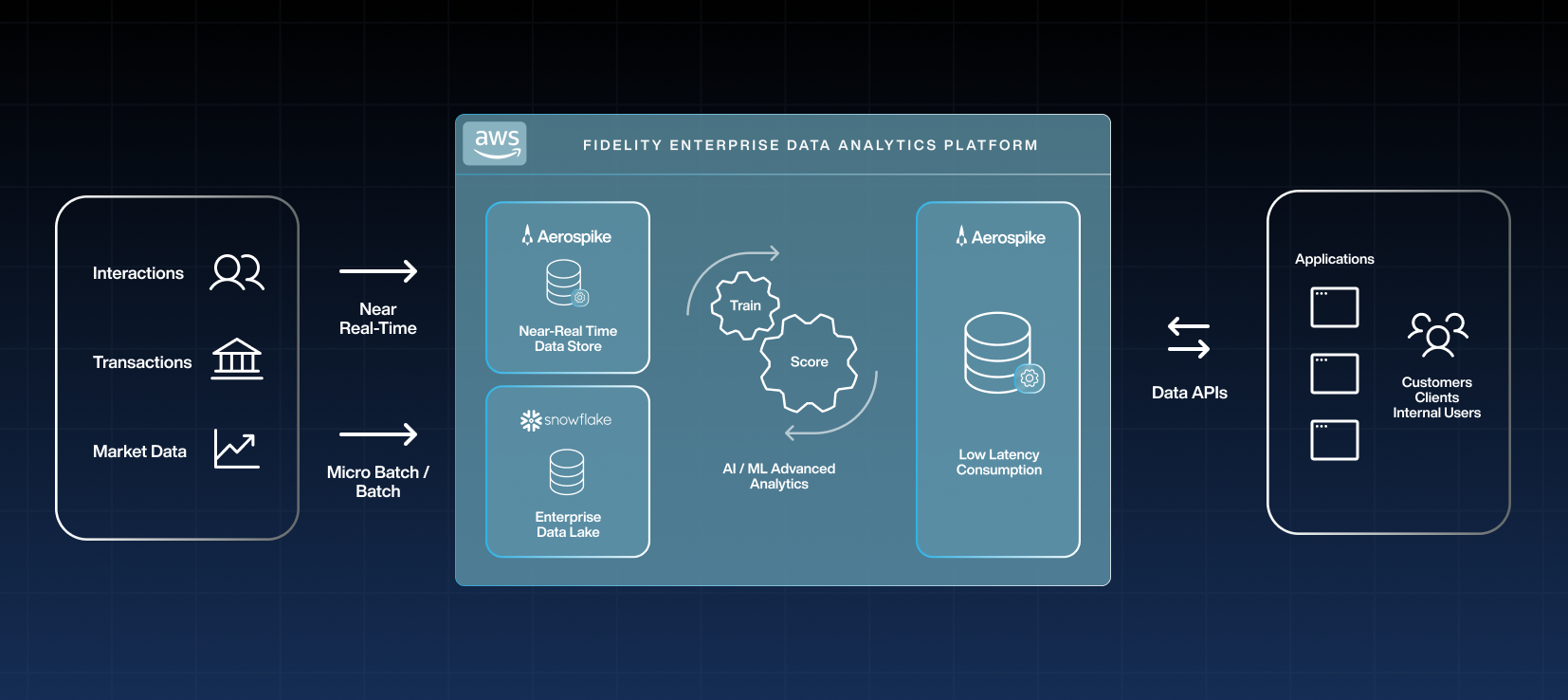

GenAI: Blending pre-training with context

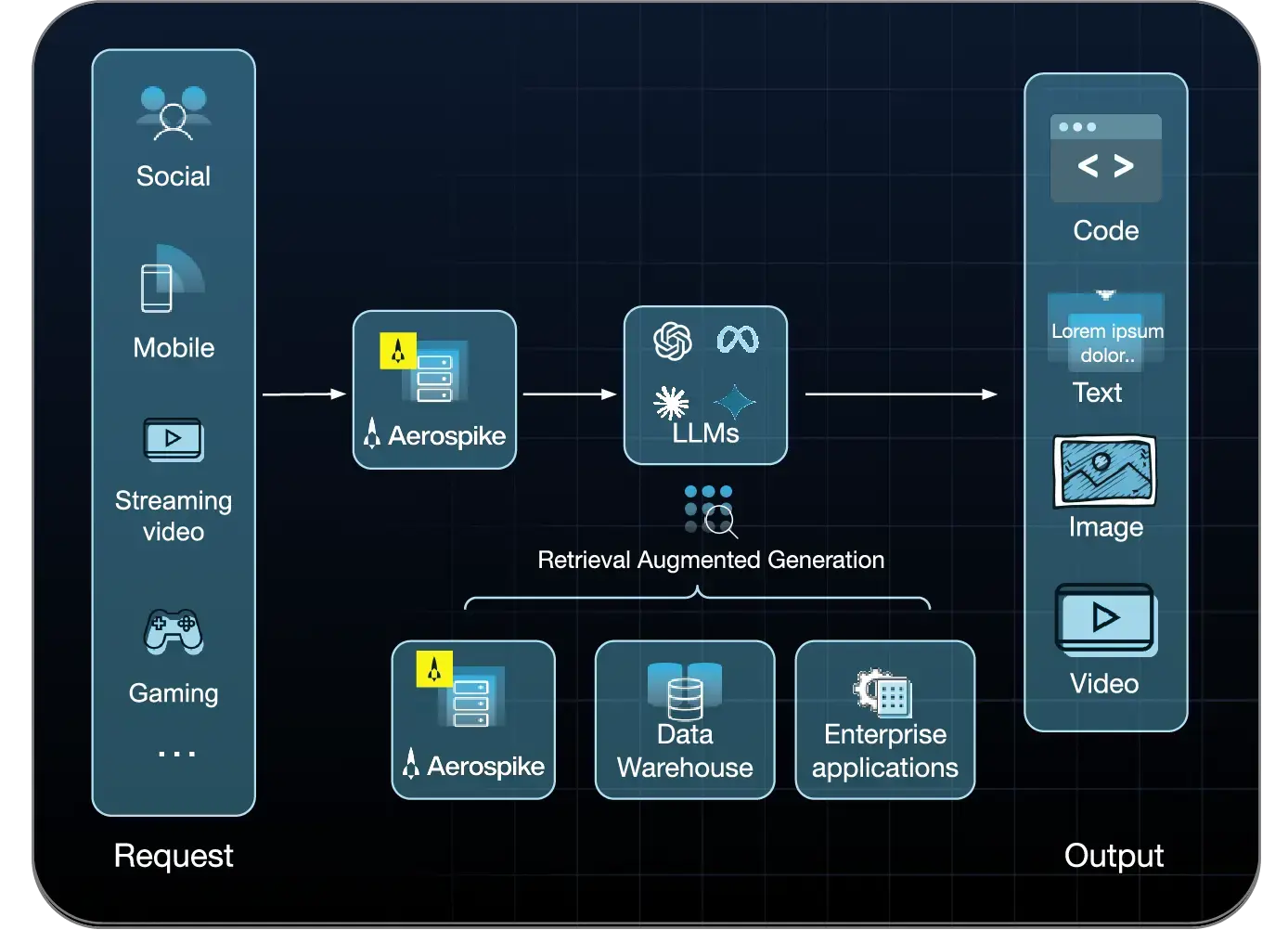

Then came GenAI. These models generate text, code, images, and summaries. They deliver open-ended outputs rather than simple binary decisions. While GenAI models can generate content based on their pre-training alone—without external context—most enterprise applications combine this knowledge with real-time business context to ensure relevance and accuracy.

This led to the rise of retrieval-augmented generation (RAG): systems that prompt a model with user input and relevant documents. Enterprises control what data the model sees, enabling curated, context-aware responses.

Unlike predictive AI, which typically relies on targeted lookups, RAG-based systems require infrastructure capable of fuzzy semantic search—retrieving information based on meaning, not just keywords—across broad, diverse data sources.
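To make the retrieval step concrete, here is a minimal sketch of semantic lookup feeding a prompt. It is illustrative only: the documents, their three-dimensional vectors, and the function names `retrieve` and `build_prompt` are all assumptions made for this example. A production system would obtain embeddings from a model and search them in a vector index rather than a Python dictionary.

```python
import math

# Hypothetical documents with hand-written 3-dimensional "embeddings".
# Real embeddings come from a model and have hundreds of dimensions.
DOCS = {
    "return-policy": ("Items can be returned within 30 days.", [0.9, 0.1, 0.0]),
    "shipping": ("Standard shipping takes 3 to 5 business days.", [0.1, 0.9, 0.1]),
    "sizing": ("Our jackets run one size small.", [0.0, 0.2, 0.9]),
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, k=2):
    """Return the k documents whose vectors are closest in meaning to the query."""
    ranked = sorted(
        DOCS.items(),
        key=lambda item: cosine(query_vec, item[1][1]),
        reverse=True,
    )
    return [(doc_id, text) for doc_id, (text, _) in ranked[:k]]


def build_prompt(question, query_vec, k=2):
    """Combine the user's question with the retrieved context."""
    context = "\n".join(f"- {text}" for _, text in retrieve(query_vec, k))
    return f"Context:\n{context}\n\nQuestion: {question}"
```

The enterprise decides what goes into `DOCS`, which is the control point the RAG pattern provides: the model only sees the context that retrieval hands it.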

Consider Myntra, one of India’s leading fashion retailers, to see how enterprises are applying this in practice.

Myntra

Myntra is one of India’s leading online fashion retailers, offering millions of styles across categories and serving a diverse customer base spanning regions, demographics, and preferences. With a mission to democratize fashion and support self-expression at scale, Myntra leverages technology to make personalized shopping experiences available to millions of users daily.

Myntra uses Aerospike to support its generative AI shopping assistant, Maya. Maya offers a conversational interface where customers can move from discovery to purchase entirely within chat, powered by generative AI models and contextual product data. Aerospike delivers real-time lookups and checkpoints for conversational memory, ensuring each interaction feels seamless and personal.

Beyond Maya, Aerospike supports Myntra’s broader personalization strategy, enabling search, recommendations, and size-and-fit suggestions in under 50 milliseconds across millions of users and billions of real-time events. This reduces returns, improves satisfaction, and helps deliver individualized experiences at scale.

“We are one of the first fashion e-commerce companies to launch conversation shopping assistant. We wanted to actually take our customers from discovery to identifying the right products they want to buy through the whole sale experience...Maya is one of the use cases where GenAI is leveraged. And…we are also using Aerospike to check point the entire conversation. And it's perfect for us so far.” — Narayana Pattipati, Principal Architect, Myntra

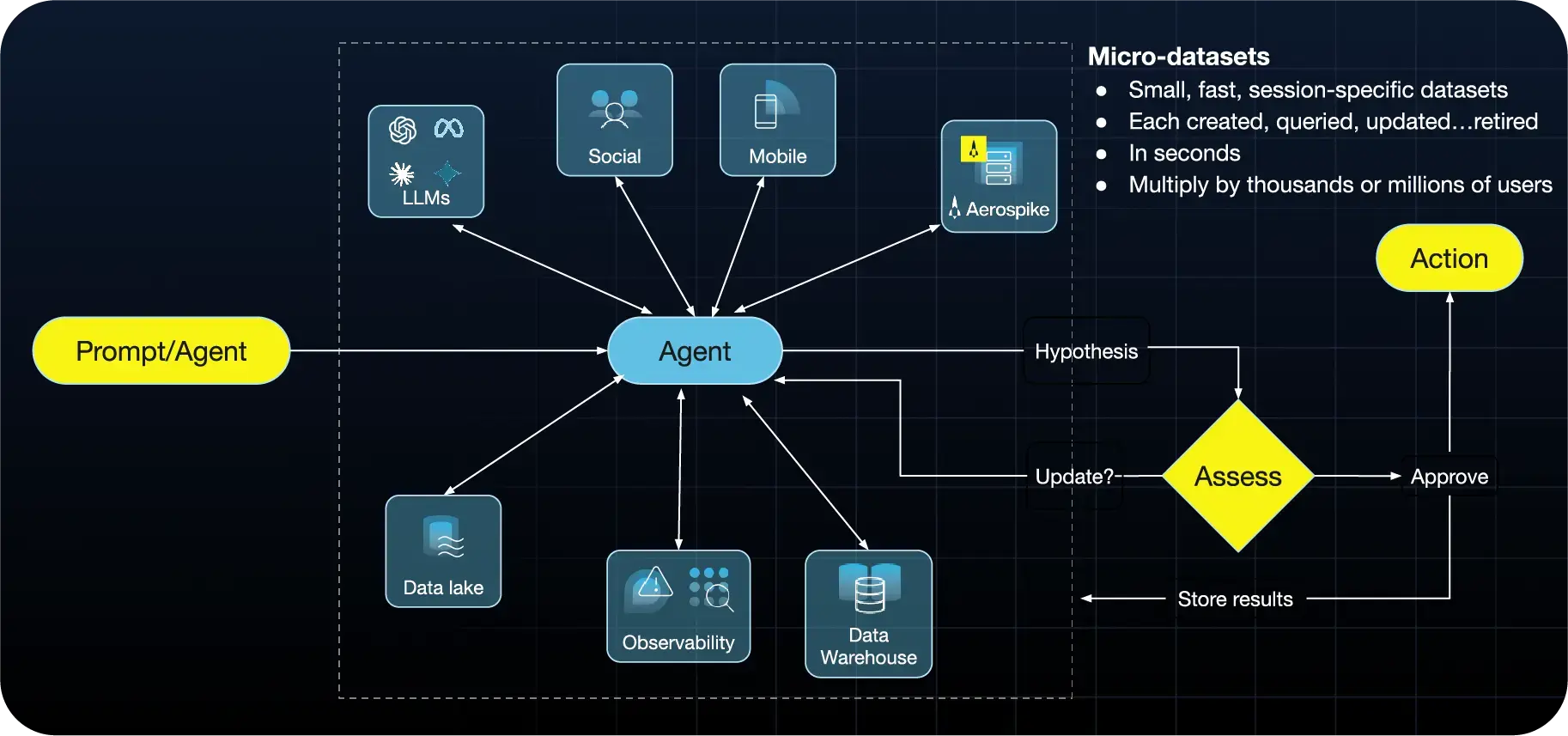

Agentic AI: Autonomous, multi-step, demanding

Agentic AI is in a different class entirely. Rather than responding to static prompts, these systems are given goals. They decide which steps to take, what data to retrieve, how to organize intermediate results, and how to refine their actions based on evolving input.

This initiates a continuous, adaptive cycle: retrieving data, reasoning over it, taking actions, storing partial outcomes, and adjusting future steps. From a systems perspective, the demands are substantial.
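The cycle described above can be sketched in a few lines. This is a toy under stated assumptions: the `SOURCES` registry, the item names, and the hard-coded rule that stands in for model reasoning are all hypothetical. A real agent would delegate the "reason" step to a model and call live APIs.

```python
from collections import deque

# Hypothetical data sources; in practice these would be APIs, databases, or streams.
SOURCES = {
    "inventory": lambda item: {"item": item, "in_stock": 0},
    "alternatives": lambda item: {"item": item, "alternatives": ["blue-chair"]},
}


def run_agent(goal_item, max_steps=10):
    """Retrieve, store, and re-plan until no steps remain or the step budget runs out."""
    scratch = {}                                 # partial outcomes for this session
    pending = deque([("inventory", goal_item)])  # the plan, revised as results arrive
    trace = []                                   # order in which sources were called
    while pending and len(trace) < max_steps:
        source, arg = pending.popleft()
        result = SOURCES[source](arg)            # retrieve
        scratch[(source, arg)] = result          # store the partial outcome
        trace.append(source)
        # Reason and adjust: a hard-coded rule stands in for model reasoning here.
        if source == "inventory" and result["in_stock"] == 0:
            pending.append(("alternatives", arg))
    return trace, scratch
```

Even in this toy, one request fans out into multiple reads and writes, and the second step exists only because of what the first one returned. That data dependency is what makes per-session load hard to predict up front.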

Agentic AI stresses the entire data stack. To meet these demands, enterprise systems must perform the following functions efficiently and at scale:

• Retrieve and integrate data from multiple sources during a single session.

• Cache intermediate results in short-lived, session-specific memory.

• Ensure real-time data freshness rather than relying on static documents.

• Handle increased per-session load due to iterative, multi-step processes.

User prompts may seem simple, but agentic systems often trigger dozens of internal operations—querying APIs, testing hypotheses, caching temporary results, and refining actions based on feedback. That memory must be created and accessed rapidly, and retired just as quickly.

Multiplied across thousands of users, this creates a "micro database" effect: fast-expiring, high-churn, session-specific datasets. Traditional infrastructure isn't designed for this scale or volatility.
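One way to picture a "micro database" is a per-session store whose entries expire on their own. The sketch below is an in-process illustration, not a recommendation for production: the class name `SessionScratchpad` and its methods are hypothetical, and a real deployment would rely on a shared, low-latency data store with native record expiration.

```python
import time


class SessionScratchpad:
    """Per-session key-value memory whose entries expire after a fixed TTL."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock   # injectable so expiry can be tested without sleeping
        self._data = {}       # session_id -> {key: (value, expires_at)}

    def put(self, session_id, key, value):
        expires_at = self._clock() + self._ttl
        self._data.setdefault(session_id, {})[key] = (value, expires_at)

    def get(self, session_id, key, default=None):
        entry = self._data.get(session_id, {}).get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Expired: retire the entry on read.
            del self._data[session_id][key]
            return default
        return value

    def end_session(self, session_id):
        """Drop everything the session wrote in one step."""
        self._data.pop(session_id, None)
```

The two retirement paths matter equally: entries age out individually through the TTL, and a whole session can be cleared at once when the agent finishes.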

What enterprises need to do

To support agentic AI, organizations must build data infrastructures that prioritize flexibility, responsiveness, and trust. Enterprise leaders should take the following steps to ensure readiness:

• Make data discoverable. Agentic AI can’t use what it can’t find. Invest in metadata, semantic layers, and indexing systems that describe what your data is, how to access it, and how it can be used. Think of this as creating a map for autonomous AI to follow.

• Expose the right hooks. Discovery alone isn’t enough. Systems need accessible APIs or endpoints—structured, unstructured, and streaming signals—that agents can query. Adopt emerging standards like Anthropic’s Model Context Protocol to enable consistent discovery and interaction.

• Support session-scoped caching. Agentic AI systems need working memory. Architect systems to support short-term, per-session data stores—“scratch pads”—that are updated, queried, and cleared efficiently as needed. These micro-databases may only last minutes, but must be fast and reliable.

• Monitor and manage freshness. Stale memory leads to bad decisions. Build in automated mechanisms that flag outdated data and refresh the cache. Your agentic AI needs to know when its working memory is no longer accurate.

• Plan for redundancy and reuse. Develop mechanisms for sharing intermediate results across sessions when appropriate. This can reduce load and increase consistency.

• Anticipate and design for trust failures. Deploy observability tools, audit logs, and guardrails to detect and contain them.
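As an illustration of the freshness step in the list above, the sketch below tags each cached value with the version of the source record it came from and reloads when the versions diverge. Everything here is an assumption made for the example: the `FreshnessAwareCache` class, the `load` and `current_version` callbacks, and the idea that the source exposes a cheap version number. Systems without one might use timestamps or change notifications instead.

```python
from dataclasses import dataclass


@dataclass
class CachedValue:
    value: object
    source_version: int   # version of the source record when this was cached


class FreshnessAwareCache:
    """Working memory that reloads an entry when its source has moved on."""

    def __init__(self, load, current_version):
        self._load = load                        # key -> (value, version)
        self._current_version = current_version  # key -> latest source version
        self._entries = {}
        self.refreshes = 0                       # simple signal for observability

    def is_stale(self, key):
        entry = self._entries.get(key)
        return entry is None or entry.source_version != self._current_version(key)

    def get(self, key):
        if self.is_stale(key):
            value, version = self._load(key)
            self._entries[key] = CachedValue(value, version)
            self.refreshes += 1
        return self._entries[key].value
```

The `refreshes` counter is a small nod to the observability point: a sudden jump in refresh rate is itself a signal worth logging.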

This isn’t an argument against agentic AI. It’s a call for architectural maturity. With the right observability, guardrails, and real-time infrastructure, we can build powerful and trustworthy systems.

Smarter systems, not just smarter models

The shift from prediction to generation to autonomy isn’t just about model capability—it’s redefining how enterprise systems must work. Agentic AI introduces higher stakes, more complexity, and greater infrastructure dependency than any AI stage before it.

Leading organizations will meet this moment not just by deploying models, but by designing data systems that give AI the access, context, memory, and control it needs to operate securely and effectively at scale.
