Overcoming the traditional memory vs. storage compromise

Explore how Aerospike's Hybrid Memory Architecture technology revolutionizes database performance with optimal solutions for real-time, high-throughput needs.

For decades, the database industry has grappled with a seemingly inevitable trade-off: should performance be prioritized through the speed of memory (RAM), or should cost and scalability take precedence with the use of storage solutions like spinning disks or solid-state drives (SSDs)? This challenge has traditionally constrained the scope and efficiency of real-time applications using scalable data management systems.

Traditionally, NoSQL database solutions have leaned heavily on in-memory technologies to meet high performance, low latency, and high throughput demands, which are essential for consumer applications such as fraud prevention and personalized recommendation engines. This approach has often led to the perception that peak NoSQL performance is synonymous with purely in-memory systems.

This article spotlights Aerospike’s Hybrid Memory Architecture™ (HMA), a patented solution that transcends these traditional compromises by offering the speed of memory with the economic and scalability advantages of SSDs. HMA achieves this by combining in-memory indexing with direct, efficient operations on SSD storage, allowing for rapid data processing at significantly reduced costs. This architecture supports vast, high-performance workloads at a cost that, at large scale, can be up to 80% lower than that of comparable in-memory systems. This blend of speed and affordability is transforming expectations for database performance and scalability.

Is more RAM always better? Speed and caching myths

Since memory is inherently faster than storage, it seems natural to assume that an in-memory solution is the best path to top system performance and that a caching solution backed by a large amount of memory is second best. Let’s take a look at how well both of those ideas perform in practice.

Microsecond latency: The potential of SSDs

Traditional spinning disks, unlike SSDs, could never support the predictable performance required for real-time applications, primarily due to their mechanical nature. First, they cannot perform direct reads efficiently because the physical movement of the read/write heads and the rotational latency of the disk introduce significant latency. Second, they are incapable of handling parallel and random read access effectively, as the mechanical head can only be in one position at a time, limiting the speed and number of operations that can be processed simultaneously.

SSDs overcome these disadvantages by allowing random access reads in parallel. Thus, they present a practical alternative to RAM for building real-time applications. They excel in random access performance and parallel data processing, enabling direct read access to data at very high throughput.

While data access times for RAM are measured in nanoseconds, SSD access times are notably higher, clocking in at microseconds. However, this difference matters little for real-time applications, which typically require sub-millisecond read times: each SSD operation adds microseconds, not milliseconds, so it does not significantly affect overall application performance. With effective parallelism, SSD reads are served concurrently, so these per-operation differences do not compound even at millions of operations per second. Instead, other factors beyond raw data access times, such as networking delays, typically play a much larger role in overall system latency.
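To make the latency argument concrete, here is a minimal back-of-the-envelope sketch. Every number in it is an illustrative assumption rather than a measurement, and the helper names are hypothetical. It adds an assumed network round trip to an assumed storage access time, then uses Little's law (concurrency = throughput x latency) to estimate how many reads must be in flight at once.

```python
# Illustrative assumptions only; these are not measured values.
RAM_ACCESS_US = 0.1       # roughly 100 nanoseconds
SSD_READ_US = 100.0       # assumed SSD random-read latency
NETWORK_RTT_US = 500.0    # assumed client-to-server round trip
BUDGET_US = 1000.0        # the sub-millisecond target from the text


def end_to_end_us(storage_us, network_us=NETWORK_RTT_US):
    """Total request latency under the simple model: network + storage."""
    return network_us + storage_us


def in_flight_requests(ops_per_second, latency_us):
    """Little's law: average concurrency = throughput x latency."""
    return ops_per_second * latency_us / 1_000_000


ram_total = end_to_end_us(RAM_ACCESS_US)
ssd_total = end_to_end_us(SSD_READ_US)
parallel_reads = in_flight_requests(1_000_000, SSD_READ_US)

print(f"RAM-backed request: {ram_total:.1f} us")
print(f"SSD-backed request: {ssd_total:.1f} us")
print(f"Reads in flight at 1M ops/s: {parallel_reads:.0f}")
```

Under these assumptions both paths stay inside the sub-millisecond budget, and the network term dominates either way. The concurrency figure shows why parallel access matters: sustaining the assumed throughput requires many reads in flight at the same time rather than a faster single read.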

Caching is the best of both worlds, right?

A similar misconception is that a caching architecture is the next best thing to a fully resident in-memory database, bringing the lower latency of RAM for highly accessed data with the lower cost of storage for the rest. Caching is the process of storing selected data from a larger, slower datastore in a faster storage medium for quicker access.

To be clear, Aerospike’s HMA is not a caching architecture. In an HMA deployment, all the data is stored on SSDs, while only the indexes reside in RAM.

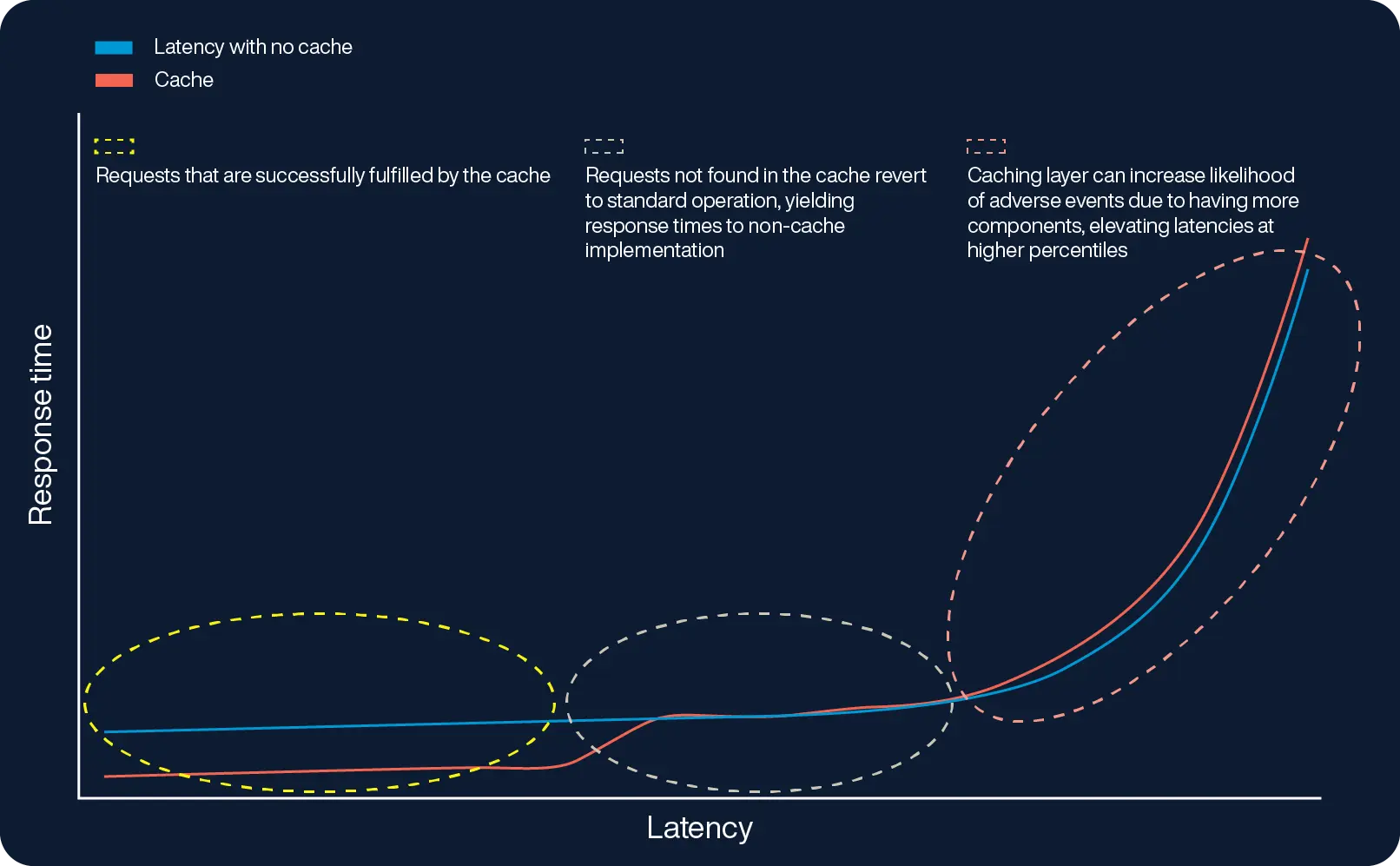

Caching’s benefits can be narrow, and caching can actually introduce further latency, particularly for use cases that demand low latency at high throughput. In these environments, it’s typically the “tail latencies” that are problematic: the slowest 5%, 1%, or 0.1% of database transactions, which have disproportionately slow response times. At thousands or millions of transactions per second (TPS), these P95, P99, and P99.9 outliers occur often enough to add up to a poor customer experience.

A cache improves latency for the transactions that are already the fastest. However, it does not reduce the slow outliers in the tail, and it introduces additional complexity that can make those outliers more frequent and slower. Furthermore, a failure of an in-memory cache can result in stale data being served to the application or, worse still, no data being available until the cache is repopulated from the data in persistent storage.

For further details on caching misconceptions, read this blog.

The only way to shrink the tail latency (orange circle) is with an exceedingly high cache hit rate, where nearly every request is found in the cache memory. As the article shows, even a 99.9% cache hit rate will still have significant latency spikes. The only ways to approach such a high cache hit rate are to have portions of your data that are never accessed or to store the entire database in memory.
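The relationship between hit rate and tail latency can be sketched with a deliberately simple two-valued model, in which a request is either a fast hit or a slow miss. The latency values and the number of lookups per application request are hypothetical inputs chosen for illustration.

```python
def percentile_latency_us(hit_rate, percentile, hit_us, miss_us):
    """Latency at a given percentile when every request is a hit or a miss.

    Requests are sorted fastest first, so the hits occupy the percentiles
    up to hit_rate and the misses occupy everything above it.
    """
    return hit_us if percentile <= hit_rate else miss_us


def fraction_with_a_miss(hit_rate, lookups_per_request):
    """Share of application requests that see at least one cache miss,
    assuming independent lookups (a simplifying assumption)."""
    return 1.0 - hit_rate ** lookups_per_request


# Hypothetical latencies: 50 us for a cache hit, 2000 us for a miss.
p95 = percentile_latency_us(0.99, 0.95, hit_us=50, miss_us=2000)
p999 = percentile_latency_us(0.99, 0.999, hit_us=50, miss_us=2000)
fanout = fraction_with_a_miss(0.999, lookups_per_request=100)

print(f"P95 at a 99% hit rate:   {p95} us")
print(f"P99.9 at a 99% hit rate: {p999} us")
print(f"Requests touching a miss at 99.9% hit rate, 100 lookups: {fanout:.1%}")
```

In this model the cache leaves P99.9 at full miss latency unless the hit rate itself exceeds 99.9%. When one application request fans out into many lookups, even a 99.9% hit rate exposes a noticeable share of requests to at least one slow miss.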

Perceived challenges of SSDs for a high-performance database

We’ve explored why neither in-memory setups nor cache-memory configurations are always ideal and why spinning disks cannot meet the demands of high-performance databases. In contrast, SSDs emerge as a superior solution for high-performance database workloads, capable of executing thousands to millions of input/output operations per second (IOPS) with latencies under a millisecond.

However, utilizing SSDs effectively involves overcoming several challenges. Notably:

Challenge 1: Without a specialized file system handler, SSD requests are slowed by multiple steps.

Challenge 2: SSDs face wear-leveling issues because frequent writes can reduce their lifespan, especially under heavy write loads.

Challenge 3: Persistent storage devices like SSDs require periodic defragmentation to optimize performance. This must be carefully managed to prevent it from impacting system efficiency.

C-based architecture enables direct SSD access, speed, and parallelism

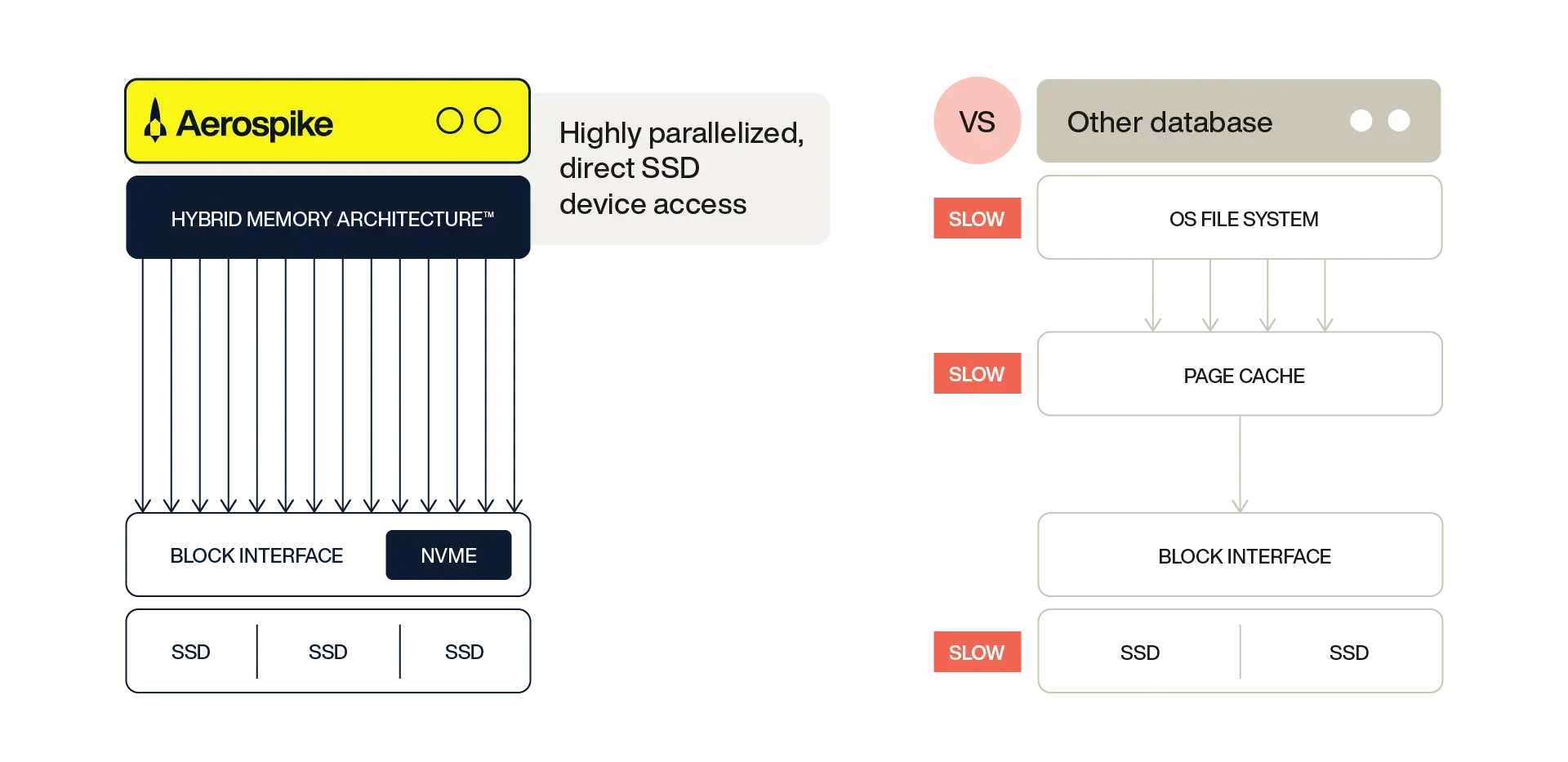

Aerospike introduced a custom file system handler tailored for SSDs in Linux to gain direct access and fully leverage the SSD’s capabilities. This specialized handler is fine-tuned to exploit the SSDs' inherent strengths in random read capabilities and parallelism, delivering extremely high read throughput. The design, facilitated by Aerospike being written in C, lets Aerospike treat the SSDs as raw devices, enhancing both speed and reliability in data handling.

In short, this approach enables Aerospike to talk to the hardware natively rather than through an API layer. It treats the SSDs as a very large parallel memory space, not a file system.

Memory-like performance from disk

The core advantage of Aerospike’s HMA approach is that it provides predictable low latency for read and write operations to persistent storage. Ultimately, these read/write operations are what take time. There is no disk I/O with HMA for index access since indexes are in memory. For a data read, Aerospike executes a single I/O to fetch the data from the SSD if the item is not already cached in memory (e.g., the item could have been recently read or written). Random access carries little read-latency penalty on SSDs, so Aerospike can deliver memory-like performance with sub-millisecond latency for reads and writes.
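The read path described above can be illustrated with a toy model. This is not Aerospike's implementation: the class name `HybridStore` is hypothetical, an ordinary file stands in for a raw SSD, and a Python dict stands in for the in-memory index. The point is only that locating a record costs no storage I/O, and fetching it costs a single positioned read.

```python
import os
import tempfile


class HybridStore:
    """Toy model: index in a dict (RAM), record bytes in a file (storage)."""

    def __init__(self, path):
        self._file = open(path, "w+b")
        self._index = {}      # key -> (offset, length), held in memory
        self.storage_reads = 0

    def put(self, key, value):
        self._file.seek(0, os.SEEK_END)
        offset = self._file.tell()
        self._file.write(value)
        self._file.flush()
        self._index[key] = (offset, len(value))

    def get(self, key):
        entry = self._index.get(key)    # index lookup: memory only
        if entry is None:
            return None                 # a missing key never touches storage
        offset, length = entry
        self._file.seek(offset)
        self.storage_reads += 1         # exactly one read per record fetch
        return self._file.read(length)

    def close(self):
        self._file.close()


with tempfile.TemporaryDirectory() as tmp:
    store = HybridStore(os.path.join(tmp, "device.dat"))
    store.put("user:1", b"alice")
    store.put("user:2", b"bob")
    value = store.get("user:2")
    missing = store.get("user:3")
    reads = store.storage_reads
    store.close()

print(value, missing, reads)
```

A lookup for a key that does not exist is answered from the index alone, which is one reason keeping the full index in memory helps keep latency predictable.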

Some other database systems also attempt to leverage the benefits of SSDs. As Figure 2 shows, though, rather than direct access, they are slowed by multiple steps involved in a buffer pool-based storage architecture, as follows:

1. Make access requests through a slow operating system (OS) file system.
2. Access the page cache.
3. Access the block interface.
4. Access the SSDs through the file system interfaces.

Managing writes to reduce hot spots and extend SSD life

To address SSD wear and extend device lifespan, Aerospike employs a combination of strategies, including copy-on-write and large block writes to a log-structured file. The log-structured file mechanism not only allows for extremely high write throughput but also addresses the wear-leveling issue.

'Copy-on-write' means that instead of modifying data in place on the SSD—that is, on the same block—the system writes changes to a new block and updates the reference in the object’s in-memory index entry, preserving the original until the write is successful. This approach distributes the wear more evenly across the SSD, thus avoiding hot spots and ensuring that all areas of the SSD wear uniformly. It also maintains sub-millisecond read and write latencies, which is crucial for high-performance database operations.
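As a rough illustration of copy-on-write over an append-only log, the sketch below uses a Python list in place of the device and a dict in place of the in-memory index. The class and method names are hypothetical and the model ignores block sizes entirely. It shows only the ordering: the new version is appended first, and the index is repointed afterward.

```python
class CopyOnWriteLog:
    """Toy append-only log with an in-memory index of current versions."""

    def __init__(self):
        self.log = []      # append-only; stands in for blocks on the device
        self.index = {}    # key -> position of the current version

    def write(self, key, value):
        self.log.append((key, value))    # never overwrite in place
        position = len(self.log) - 1
        self.index[key] = position       # repoint only after the append
        return position

    def read(self, key):
        return self.log[self.index[key]][1]

    def stale_slots(self):
        """Positions holding superseded versions, awaiting reclamation."""
        live = set(self.index.values())
        return [i for i in range(len(self.log)) if i not in live]


cow = CopyOnWriteLog()
cow.write("a", "v1")
cow.write("b", "x")
cow.write("a", "v2")    # the update lands in a new slot

print(cow.read("a"))        # current version
print(cow.log[0])           # the old version is still intact
print(cow.stale_slots())    # slots that a later cleanup pass can reclaim
```

Because updates always land at the end of the log, writes to a frequently updated key are spread across the device instead of hammering one location. The cost is the stale slots left behind, which is what makes background space reclamation necessary.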

Seamless defragmentation and SSD optimization

These steps to maximize SSD life, particularly the log-structured file system and copy-on-write mechanisms, necessitate ongoing space reclamation through background defragmentation. This is a common requirement for non-volatile storage like SSDs, unlike volatile memory, where it is less of an issue.

Aerospike has engineered a system in which defragmentation runs seamlessly alongside normal operations. This ensures timely reclamation of blocks during runtime without impacting the database’s write throughput.

In this approach, the system maintains a map that tracks the fill factor of each block (i.e., the fraction of the block filled with valid data). When the fill factor of a block drops below a configurable threshold, indicating underutilized space, the block is flagged for defragmentation.

The defragmentation process efficiently consolidates sparse data into fewer blocks, moving valid data to a write buffer. This buffer temporarily holds data before it is permanently written back to the SSD. Aerospike enhances operational efficiency by using separate write buffer queues: one for regular client requests and another for data undergoing defragmentation.

To keep the system running smoothly and to preserve the longevity of the SSDs, Aerospike manages the rate of defragmentation based on available buffer space, prioritizing empty write blocks. This strategy ensures optimal use of storage capacity and maintains the SSD’s performance over time.
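The fill-factor rule can be sketched as follows. This is a simplified model under stated assumptions: the block size, the threshold, and the names `Block` and `defragment` are all hypothetical, and the separate write buffer queues and rate control described above are not modeled. Blocks below the threshold have their live records repacked into as few new blocks as possible, and the emptied blocks are counted as freed.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 1024         # bytes per write block (illustrative)
DEFRAG_THRESHOLD = 0.5    # hypothetical "too low" fill factor


@dataclass
class Block:
    records: dict = field(default_factory=dict)   # key -> live record bytes

    def fill_factor(self):
        live_bytes = sum(len(v) for v in self.records.values())
        return live_bytes / BLOCK_SIZE


def defragment(blocks, threshold=DEFRAG_THRESHOLD):
    """Repack sparse blocks; return (kept, repacked, net_blocks_freed)."""
    kept = [b for b in blocks if b.fill_factor() >= threshold]
    sparse = [b for b in blocks if b.fill_factor() < threshold]

    repacked, current, used = [], Block(), 0
    for block in sparse:
        for key, value in block.records.items():
            if used + len(value) > BLOCK_SIZE:    # write buffer is full
                repacked.append(current)
                current, used = Block(), 0
            current.records[key] = value
            used += len(value)
    if current.records:
        repacked.append(current)
    return kept, repacked, len(sparse) - len(repacked)


blocks = [
    Block({"a": b"x" * 900}),    # well filled, left alone
    Block({"b": b"x" * 200}),    # sparse
    Block({"c": b"x" * 300}),    # sparse
    Block({"d": b"x" * 100}),    # sparse
]
kept, repacked, freed = defragment(blocks)
print(len(kept), len(repacked), freed)
```

In this example three sparse blocks collapse into one, so two blocks become available for new writes while the well-filled block is never touched.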

Managing dense nodes

Aerospike HMA deployments achieve millions of transactions per second with sub-millisecond latencies, utilizing clusters that are often just a tenth the size of traditional setups that rely solely on in-memory approaches. This efficiency brings significant advantages, including reduced costs, simplified management, and fewer node failures.

However, supporting such a high transaction rate on fewer nodes demands that each node operates with exceptional efficiency. While SSDs provide the necessary storage density, optimizing these dense nodes for high performance involves several critical strategies. These strategies ensure that each node can deliver the required throughput and maintain low latency, effectively addressing the challenges posed by the intensive use of SSDs.

By dividing data across multiple SSDs with a technique called "striping" and using a sophisticated hash function, Aerospike ensures data is spread out evenly across disks and nodes. This method prevents any single SSD from becoming a bottleneck, thereby boosting the overall system's efficiency and performance.
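A minimal sketch of hash-based placement follows. The article does not specify the hash function, so SHA-256 from the Python standard library is used here purely as a stand-in, and `device_for_key` is a hypothetical helper. The idea is that a uniform hash of the key, reduced modulo the number of devices, spreads records evenly regardless of how the keys themselves are distributed.

```python
import hashlib
from collections import Counter


def device_for_key(key, num_devices):
    """Map a key to a device index using a uniform hash (SHA-256 stand-in)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_devices


NUM_DEVICES = 4
counts = Counter(device_for_key(f"user:{i}", NUM_DEVICES) for i in range(10_000))

for device in sorted(counts):
    print(f"device {device}: {counts[device]} records")
```

Sequential keys such as `user:0`, `user:1`, and so on end up scattered across all devices, so no single SSD absorbs a disproportionate share of the reads and writes.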

Different size read and write blocks: To make this even more effective, Aerospike utilizes different size data units for read blocks (RBLOCKS) and write blocks (WBLOCKS). Small read blocks (128 bytes) allow small amounts of data to be organized and addressed individually, making efficient use of SSD space up to 2 TB. Larger write blocks (ranging from 1 to 8 MB) ensure that data reaches the SSD in large writes, which optimizes both the lifespan of the SSDs and the database's performance.
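The arithmetic behind the two block sizes can be worked through in a few lines. This sketch makes two simplifying assumptions that are not stated in the article: each record occupies a whole number of 128-byte read blocks, and there is no per-record or per-block header overhead. It also reads "1 MB" as 1 MiB. The function names are hypothetical.

```python
RBLOCK_BYTES = 128
WBLOCK_BYTES = 1024 * 1024    # 1 MiB, the low end of the range in the text


def rblocks_needed(record_bytes):
    """Read blocks occupied by a record (ceiling division)."""
    return -(-record_bytes // RBLOCK_BYTES)


def stored_bytes(record_bytes):
    """Space the record takes once rounded up to whole read blocks."""
    return rblocks_needed(record_bytes) * RBLOCK_BYTES


def records_per_wblock(record_bytes, wblock_bytes=WBLOCK_BYTES):
    """How many such records fit in one write block."""
    return wblock_bytes // stored_bytes(record_bytes)


size = 300
print(rblocks_needed(size), stored_bytes(size), records_per_wblock(size))
```

Under these assumptions, a 300-byte record wastes at most one partial read block, while thousands of such records are flushed to the SSD together in one large write.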

Hardware optimizations: Enhancing node efficiency

Aerospike has significantly enhanced node performance through several hardware optimizations, each aimed at maximizing the system's operational efficiency:

CPU and NUMA pinning: By dedicating specific processes or threads to particular CPUs or cores and aligning memory access closely with the CPU that needs the data (NUMA pinning), Aerospike reduces latency and maximizes throughput. This method ensures each core is fully utilized, avoiding wasted resources and speeding up data processing.

Network to CPU alignment: Aerospike aligns network processing with CPU activities by using parallel network queues and mapping network requests to specific CPU threads. This alignment optimizes handling network requests, prevents bottlenecks during peak loads, and ensures consistent performance.

Leveraging advanced SSD technology: Incorporating cutting-edge SSD technologies like PCIe and NVMe, Aerospike takes advantage of their faster data access speeds and higher throughput. This adaptation not only boosts the system’s capacity to manage larger volumes of data efficiently but also enhances the overall speed of transactions.
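The CPU pinning idea from the first item above can be tried from user space with the Python standard library on Linux. This is only a sketch of the general technique, not of how Aerospike applies it: the helper names are hypothetical, the scheduler-affinity calls are unavailable on some platforms (hence the guards), and NUMA memory placement is not covered at all.

```python
import os


def pin_to_single_cpu():
    """Pin this process to one allowed CPU; return its id, or None if unsupported."""
    if not hasattr(os, "sched_setaffinity"):
        return None
    allowed = os.sched_getaffinity(0)     # CPUs this process may run on
    target = min(allowed)
    os.sched_setaffinity(0, {target})     # restrict scheduling to one CPU
    return target


def restore_affinity(cpus):
    """Undo the pinning by restoring a previously saved CPU set."""
    if hasattr(os, "sched_setaffinity"):
        os.sched_setaffinity(0, cpus)


if hasattr(os, "sched_getaffinity"):
    original = os.sched_getaffinity(0)
    pinned = pin_to_single_cpu()
    print(f"pinned to CPU {pinned}, was allowed on {sorted(original)}")
    restore_affinity(original)
else:
    print("CPU affinity control is not available on this platform")
```

Keeping a thread on one core avoids migrations between cores and the cache misses that follow them, which is the effect the pinning strategy aims for.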

Beyond theory: Demonstrating real-world impact

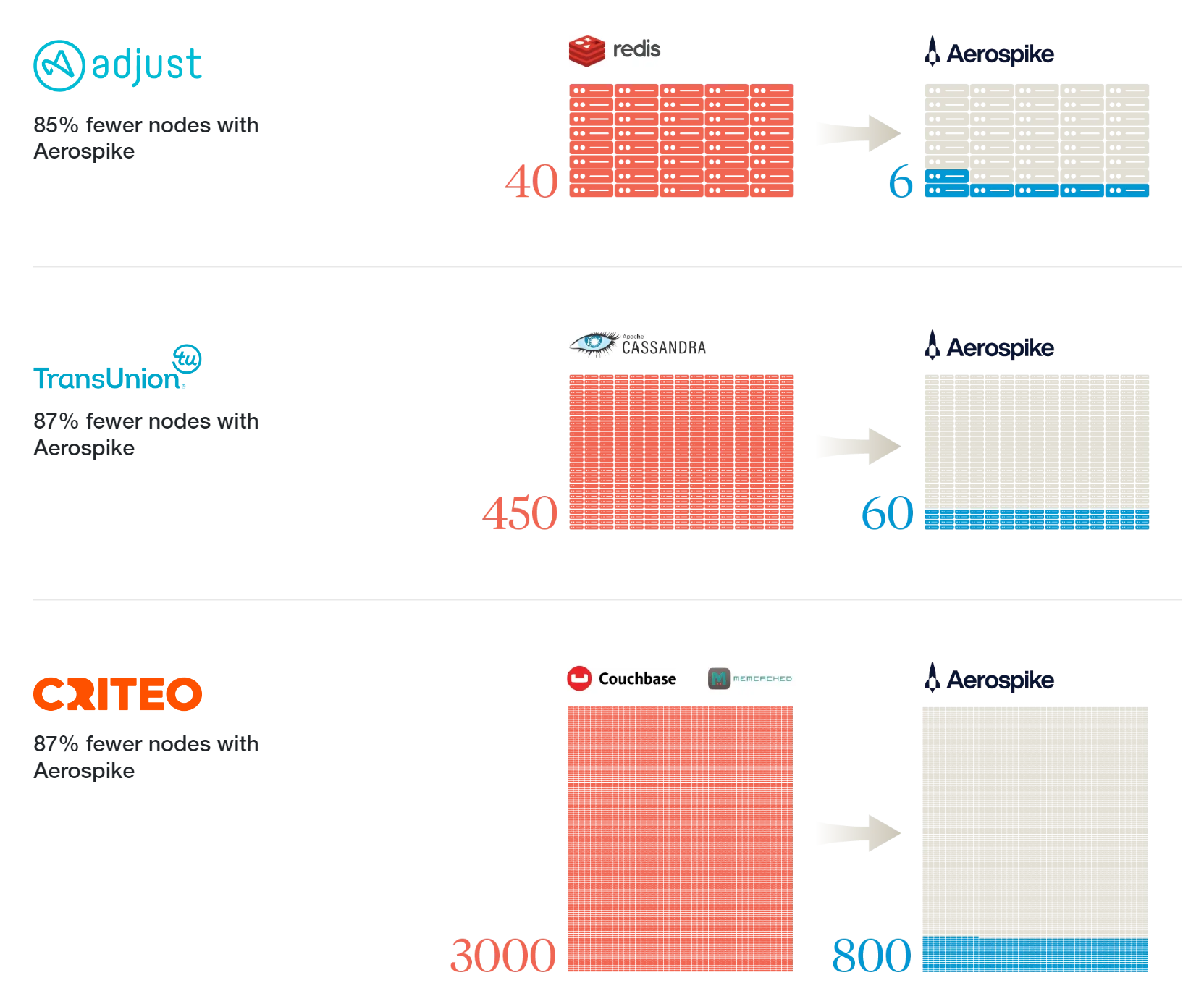

Examining real-world use cases, from smaller clusters to expansive networks, shows the enormous efficiency and performance gains from an HMA approach.

The following examples cover diverse industries and deployment scales.

Adjust, a global app marketing platform, operates in the competitive mobile advertising space where real-time data processing is crucial for delivering accurate analytics and fraud prevention services. Prior to adopting Aerospike, Adjust managed a 40-node cluster environment that struggled to keep pace with the company's rapid growth and the real-time demands of its services. By transitioning to Aerospike's HMA, Adjust was able to consolidate its infrastructure down to just six nodes. This dramatic reduction in node count led to significant cost savings and a smaller carbon footprint, all while delivering the real-time performance critical to Adjust's operations and their clients' success.

TransUnion, a leading global risk and information solutions provider, required a high-performance data platform to manage the complex demands of real-time fraud detection and credit risk assessment. Their legacy system, originally comprising 450 nodes, faced scaling and performance efficiency challenges. After transitioning to Aerospike, TransUnion successfully consolidated its operation to only 60 nodes. This drastic reduction not only resulted in lower operational costs and reduced energy consumption but also enabled TransUnion to achieve unparalleled data processing speeds, enhancing their ability to deliver timely, accurate risk assessments to their customers.

Criteo, a multinational advertising and marketing technology firm, leverages vast amounts of data to deliver personalized online advertisements in real time. Managing this data effectively is critical for Criteo's success, requiring a database solution capable of high-speed processing on a massive scale. Initially operating a 3000-node setup, Criteo reduced its footprint to 800 nodes. This change not only cut the server count by more than 70% but also significantly reduced energy use and associated carbon emissions. Moreover, these efficiency and performance capabilities have allowed Criteo to maintain its edge in delivering real-time, personalized ad content, proving that scalability and environmental sustainability can go hand in hand.

These case studies illustrate the extraordinary, measurable benefits of our HMA technology. Whether for small startups or large enterprises, Aerospike delivers unparalleled efficiency, sustainability, and scalability, redefining what's possible in the realm of data management.

Unlock optimal database performance

Aerospike's HMA offers an optimal solution for real-time, high-throughput database needs by combining indexes in RAM with data on SSDs. This approach leverages the speed of RAM and the scalability and cost-effectiveness of SSDs, effectively overcoming the limitations associated with traditional caching strategies and expensive, all-in-memory systems.

Aerospike’s engineering innovations, such as direct SSD access and sophisticated data management techniques, ensure efficient handling of immense workloads while maintaining predictable performance and durability. By reducing operational costs and complexity, HMA provides a superior choice for modern database applications.

By redefining efficiency and scalability, Aerospike's technological advancements challenge outdated notions about database architecture. In a world where data demands are constantly increasing, Aerospike demonstrates that sustainable growth is possible. Our approach not only meets current high-performance needs but also anticipates future demands, ensuring that Aerospike remains at the forefront of database technology.