What is a log-structured merge tree (LSM tree)?

Explore log-structured merge trees: Write batching, disk hierarchy, compaction trade-offs, and how Aerospike’s Hybrid Memory Architecture solves LSM limits.

A log-structured merge tree, or LSM tree, is a tiered, write-optimized data structure that organizes key-value pairs in multiple components, residing partly in memory and partly on disk. Each component is maintained as an append-only sequence, so new records and updates are written sequentially rather than issuing costly random writes to persistent media.

The smallest, in-memory component, often called the memtable, absorbs incoming updates. When it fills, it is flushed to a sorted string table on disk, creating an immutable Sorted Strings table (SSTable) file. Larger on-disk levels hold progressively bigger runs, produced by repeated merging and compaction. Because the tree never overwrites data in place, the design avoids read-modify-write penalties inherent in traditional B-tree systems and scales gracefully on solid-state or magnetic media.

Trade-offs and weaknesses

The elegance of LSM’s idea of batch writes now, clean up later has a downside: compaction, read amplification, and heavy metadata overhead. Each time a memtable flush lands on disk, it spawns a new SSTable. Eventually, dozens of overlapping tables must be merged, rewritten, and re-indexed; in size-tiered schemes, one record might be rewritten three to ten times before it settles in the coldest level. That rewrite traffic, multiplied across terabytes, inflates write amplification and accelerates the wear on flash drives, while wasting bandwidth.

Even when compaction keeps pace, small random reads still traverse a gauntlet: consult Bloom filters (which themselves live in RAM), open file descriptors, perform scattered NVMe reads, and binary-search multiple blocks, all before confirming a miss or returning a value. If compaction falls behind, the number of candidate files rises, Bloom filters become stale, and the tail-latency curve widens dramatically. Meanwhile, every SSTable requires its own in-memory filter, index block, and file-descriptor slot, so a “write-optimized” system can paradoxically consume gigabytes of DRAM just to stay read-tolerant. Finally, workloads dominated by short-range scans suffer from fractured locality: keys that were once contiguous are now sprinkled across levels, forcing extra seeks compared with a clustered B-tree.

These issues explain why several next-generation engines, most notably Aerospike’s patented Hybrid Memory Architecture (HMA), have taken a different path. By writing unsorted blocks once, keeping the primary index in DRAM, and using a lightweight block-level garbage collector instead of multi-stage compaction, HMA eliminates most write amplification and guarantees at most one disk I/O per lookup. In short, LSM-trees excel where raw ingest rate matters above all, but for applications that also demand strict, predictable read latency and flash endurance, products like Aerospike offer a more efficient alternative.

Ecosystem and examples

LSM-tree storage engines dominate many write-intensive, scale-out platforms. Open-source projects such as Apache Cassandra, HBase, RocksDB, LevelDB, and Accumulo, as well as commercial services that embed them, use the design to maintain high ingest rates for event logging, time-series telemetry, real-time analytics, and large social-graph workloads. Operators can fine-tune dozens of parameters, such as memtable size, level fan-out, compaction style, and Bloom-filter bits, to take advantage of the sequential bandwidth of NVMe or even spinning disks, letting clusters grow to petabyte scale while still delivering millisecond-class reads for most keys.

Yet the same trade-offs that make LSM trees work well with bulk writes become constraints in latency-sensitive or flash-endurance-limited scenarios. Background compaction, multiple Bloom filters, and scattered SSTables add variability to tail latency and inflate the number of total bytes written. For use cases such as real-time bidding, fraud detection, or edge analytics, where sub-millisecond p99s and predictable SSD wear are important, architectures that avoid multi-level compaction can be a better fit. HMA stores the full primary index in DRAM and writes unsorted blocks to SSD, resulting in LSM-like ingest rates while guaranteeing, at most, one disk read per lookup and minimal write amplification.

In practice, the choice boils down to workload priorities: if peak ingest throughput and elastic scale are all you’re interested in, an LSM-based system is an option; if you also want deterministic latency and flash longevity are equally critical, engines such as Aerospike provide a compelling, more predictable and capable alternative.

How do LSM trees work?

An LSM tree turns random writes into orderly, bulk transfers and then tidies up the results in the background. Here’s a more detailed version of the process we described earlier:

Write path. Every mutation is first appended to a write-ahead log for durability. The same record is then placed in the memtable that resides in memory. When the memtable reaches a predefined size threshold, it turns immutable and is written out as a newly generated sorted string table. Successive tables accumulate at level 0 until a background compaction thread merges them into the next level, preserving global key order.

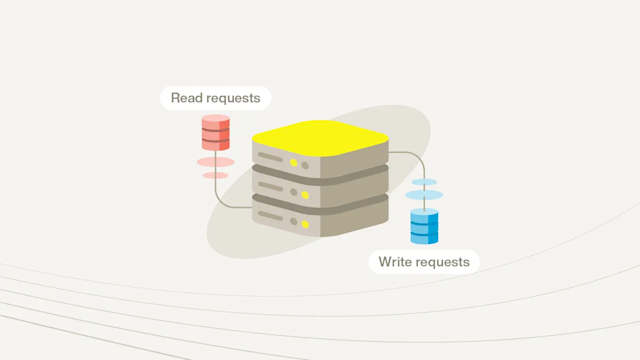

Disk hierarchy. Each level is larger than the one above by a configurable fan-out factor (typically 10). Levels provide non-overlapping key ranges, letting a point look-up touch at most one file per level. This deterministic layout keeps read performance predictable even if total data storage reaches the terabyte range.

Compaction policy. Compaction rewrites overlapping tables into new, bigger tables, removing obsolete key versions and reclaiming space. This process also controls write amplification: excessive or poorly tuned compaction multiplies the amount of data written to disk, while insufficient compaction degrades look-ups because more on-disk fragments must be searched. More recent implementations support leveled, size-tiered, or hybrid strategies, each balancing write amplification against query latency.

LSM trees include a number of concepts. Here are more details.

Write operations

Incoming writes are first appended to an in-memory buffer (often called a memtable) and simultaneously recorded in a write-ahead log for durability. Because the buffer is held entirely in memory, each write is a simple pointer advance and copy, avoiding random I/O on disk. Once the buffer reaches a predefined size, it is frozen, queued for flush, and replaced by a new writable buffer. The frozen buffer is then written to disk in one sequential transfer, which reduces seek overhead and improves throughput on solid-state or spinning media alike. This design turns many small random writes into one large sequential write, improving sustained write throughput while limiting write amplification to later compaction steps.

SSTables

The on-disk representation of a flushed buffer is an SSTable. Each SSTable stores key–value pairs ordered by key, plus an index and metadata footer. Ordering means efficient merging during later compaction rounds and provides fast range access because records are in contiguous regions. SSTables are immutable; updates to a key result in a newer version appearing in a newer file, leaving older versions intact until compaction discards them. A small per-SSTable index kept in memory maps key ranges to file offsets, so only a few bytes of memory are used per table. To avoid opening many files for every point lookup, implementations cache file descriptors and store a Bloom filter for each SSTable. The Bloom filter indicates whether a key is definitely absent from the file, eliminating unnecessary disk reads and improving read performance, especially when the key exists only in newer levels.

Read operations

A point lookup starts at the mutable in-memory buffer, proceeds through any immutable, still-unflushed buffers, and then checks SSTables from the newest level to the oldest. The process consults each level’s Bloom filter before touching the disk; if the filter says “not present,” the reader skips that file entirely. Because recent data reside in higher (newer) levels that contain fewer files, most searches complete after examining a small subset of the hierarchy. For range queries, the ordered nature of SSTables lets the system merge‐iterate over multiple files, returning keys in sorted order while reading each key once. Compaction periodically merges overlapping SSTables across levels, discarding obsolete versions and reorganizing data so that deeper levels remain roughly non-overlapping. This background activity maintains predictable read performance by limiting the number of SSTables that must be consulted.

Point lookup

Point lookup follows a hierarchical path that reduces disk touches. The memtable, an in-memory skip-list, is examined first; if it holds a current value or a tombstone, the search ends. Otherwise, the Bloom filter of every lower-level SSTable is consulted. A negative Bloom filter response means the key does not exist in that file, so no disk I/O occurs. Only filters that return a possible match trigger a read of the corresponding data block on disk. Because SSTables are sorted by key, one binary search inside the block finds the candidate record, which is then checked for version ordering or deletion. Engines often pin the most recently used filters and index blocks in memory to keep the critical path short. This combination of a probabilistic Bloom filter gate and one on-disk data block read yields predictable, low-latency read performance even when hundreds of SSTables accumulate at deep levels.

Range query

Range query traverses the keyspace in order, collecting all keys that fall between the user-supplied start and end boundaries. The engine begins in memory, walking the newest in-memory run first, then proceeds through on-disk levels that hold older data. Because every participating run is already sorted, the iterator can merge them in one pass, producing the next smallest key at each step. When it finds the key’s most recent version, stale copies in lower levels are skipped, which prevents duplicates.

Filtering by Bloom filter is not effective here, because every key in the requested range must be evaluated, so the iterator pays a fixed I/O cost proportional to the number of segment files that overlap the range. This can cause read amplification: If keys are widely scattered across levels, additional data block reads are necessary even though only some of their contents lie in the target interval. Designs such as hierarchical compaction and tiered compaction trade write cost for tighter key clustering, reducing range-query amplification at the expense of needing to write more often.

Beyond the LSM tree

LSM trees showed that buffering writes and flushing them sequentially could improve throughput, yet they still pay a steep tax in compaction complexity and unpredictable read latency. Because Aerospike’s HMA keeps the full primary index in DRAM while writing unsorted data blocks directly to NVMe/SSD, the result is the best of both worlds: one I/O-per-read determinism plus LSM-class ingest rates, but without multi-level compaction or dealing with Bloom filters. That’s why enterprises that have outgrown the latency spikes and write-amplification overhead of traditional LSM engines use Aerospike for real-time bidding, fraud prevention, and edge-scale analytics. If your application requires sub-millisecond decisions, it’s time to move from “write-optimized” to “always-optimized.”

Harness predictable performance at any scale: Switch to Aerospike and leave compaction headaches behind.