How Aerospike delivers near in-memory performance at disk prices

Learn how Aerospike achieves near in-memory performance with disk-based storage through its Hybrid Memory Architecture (HMA). Explore how HMA delivers real-time speed, petabyte scale, predictable latency, and major cost savings.

When Aerospike was founded, we kept running into the same conversation with systems architects. The business wanted fast and accurate decisions regardless of scale. Finance wanted a cost curve that didn’t get steeper every time the dataset grew. The engineering team had to strike a seemingly impossible balance between using memory to improve performance and managing the cost associated with it.

If they pushed everything into memory, they faced an infrastructure bill that grew faster than revenue. If they put a cache in front of the database to speed up access to a portion of the data, they had to accept the added complexity, the inconsistencies, and the unpredictable performance that come with constantly fluctuating cache hit rates.

In-memory performance with disk-based storage

We built Aerospike on a third option: Hybrid Memory Architecture (HMA). From the application's perspective, Aerospike HMA behaves like an in-memory system, even though the record data is stored entirely on disk and is never cached in RAM; only the compact primary index is kept in memory. This makes the entire dataset accessible with near in-memory performance, without requiring the data itself to reside in RAM.

From terabytes to petabytes with linearly scaling performance and cost

The results are noteworthy. Criteo improved performance and cut costs when it consolidated a Couchbase and Memcached stack into Aerospike. Its real-time bidding platform performs 950 billion matches a day, up to 250 million per second at peak, with submillisecond response times. Criteo reduced hardware resource usage by over 75% and saved millions of dollars per year.

HMA also enables Aerospike to excel at scale. Aerospike distributes data evenly and automatically across all nodes in the cluster by hashing each record's key, which spreads records across partitions and nodes effectively at random. This prevents the hot spots and unbalanced nodes that uneven data distribution would otherwise create, both of which become bottlenecks and limit scalability. As a result, growing your dataset from terabytes to petabytes has virtually no impact on performance.
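To make the partitioning idea concrete, here is a minimal Python sketch. It assumes the documented Aerospike scheme of hashing each key into a digest and mapping it to one of 4,096 partitions, but it uses SHA-1 from the standard library as a stand-in for Aerospike's RIPEMD-160 digest, and the round-robin `build_partition_map` is a hypothetical simplification of how partitions are assigned to nodes. `partition_id`, `build_partition_map`, and `records_per_node` are illustrative names, not Aerospike client APIs.

```python
import hashlib
from collections import Counter
from typing import Dict, Iterable, List

N_PARTITIONS = 4096  # Aerospike divides each namespace into 4,096 partitions


def partition_id(key: str) -> int:
    """Hash a key and keep 12 bits of the digest (4096 == 2**12).

    Assumption: SHA-1 stands in for Aerospike's RIPEMD-160 digest, which is
    not guaranteed to be available in Python's hashlib.
    """
    digest = hashlib.sha1(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:2], "little") & (N_PARTITIONS - 1)


def build_partition_map(nodes: List[str]) -> Dict[int, str]:
    """Hypothetical round-robin ownership of partitions by nodes."""
    return {pid: nodes[pid % len(nodes)] for pid in range(N_PARTITIONS)}


def records_per_node(keys: Iterable[str], nodes: List[str]) -> Counter:
    """Count how many keys each node would own."""
    pmap = build_partition_map(nodes)
    return Counter(pmap[partition_id(k)] for k in keys)


nodes = ["node-a", "node-b", "node-c", "node-d"]
counts = records_per_node((f"user:{i}" for i in range(100_000)), nodes)
print(counts)
```

Because the hash scatters keys uniformly, each of the four nodes ends up with roughly a quarter of the 100,000 keys, no matter how the keys themselves are named or how sequential they are.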

A great example is The Trade Desk, one of Aerospike’s earliest customers. They began with a modest dataset when the company was still small. As they grew into one of the largest AdTech platforms in the world, that very same Aerospike cluster scaled seamlessly to store petabytes of data, without sacrificing performance.

How can disk performance rival in-memory speed?

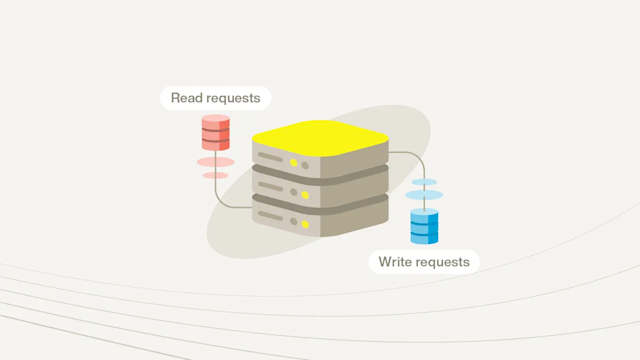

The read path in Aerospike is fully deterministic. Clients are cluster-aware, so each request is sent directly to the node that owns the target record. On that node, the in-memory primary index performs a constant-time lookup that maps the key to an exact physical location on storage. Using that location, the server performs a single read from storage and returns the result.
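The read path can be sketched as a toy model in Python. This is not Aerospike code: `Client`, `Node`, and `digest` are hypothetical names, a `dict` stands in for the primary index, a `bytearray` stands in for the SSD, and SHA-1 stands in for the key digest. It shows the shape of the path: the client computes the owning node locally, the node performs one index lookup, and one slice of the "device" returns the record.

```python
import hashlib
from typing import Dict, List, Optional, Tuple

N_PARTITIONS = 4096


def digest(key: str) -> bytes:
    # Assumption: SHA-1 stands in for Aerospike's RIPEMD-160 key digest.
    return hashlib.sha1(key.encode("utf-8")).digest()


class Node:
    """Toy node: an in-memory index over an append-only byte 'device'."""

    def __init__(self) -> None:
        self.index: Dict[bytes, Tuple[int, int]] = {}  # digest -> (offset, length)
        self.device = bytearray()                       # stands in for a raw SSD

    def put(self, key: str, value: bytes) -> None:
        self.index[digest(key)] = (len(self.device), len(value))
        self.device.extend(value)

    def get(self, key: str) -> Optional[bytes]:
        location = self.index.get(digest(key))  # one in-memory index lookup
        if location is None:
            return None
        offset, length = location
        return bytes(self.device[offset:offset + length])  # one "device" read


class Client:
    """Cluster-aware client: routes each request straight to the owning node."""

    def __init__(self, nodes: List[Node]) -> None:
        # Hypothetical partition map with round-robin ownership.
        self.partition_map = {p: nodes[p % len(nodes)] for p in range(N_PARTITIONS)}

    def _owner(self, key: str) -> Node:
        pid = int.from_bytes(digest(key)[:2], "little") & (N_PARTITIONS - 1)
        return self.partition_map[pid]

    def put(self, key: str, value: bytes) -> None:
        self._owner(key).put(key, value)

    def get(self, key: str) -> Optional[bytes]:
        return self._owner(key).get(key)


cluster = [Node(), Node(), Node()]
client = Client(cluster)
client.put("user:1", b"alice")
client.put("user:2", b"bob")
print(client.get("user:1"))
```

No step in `get` depends on how many records the node holds: routing is a local computation, the lookup is a hash-map access, and the read touches only the bytes of the requested record.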

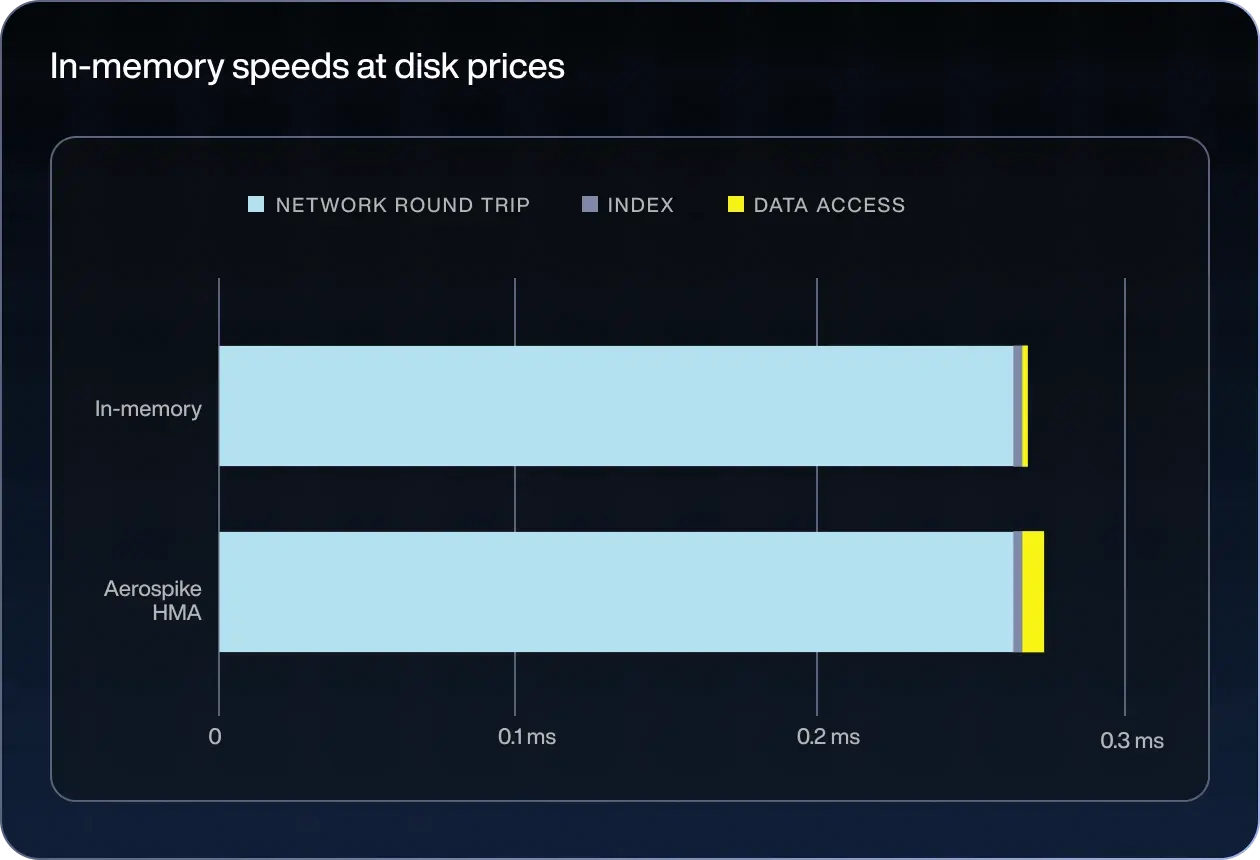

In this read path, there are three major latency sources:

Network round-trip: typically on the order of hundreds of microseconds

Index lookup in memory: typically a few nanoseconds

Data access: typically a few microseconds

Compared to a pure in-memory system, the only meaningful difference is the data-access component: memory access takes nanoseconds, whereas disk access in Aerospike's HMA takes microseconds, thousands of times slower. However, because the network round-trip, at hundreds of microseconds, dominates the end-to-end path, those few extra microseconds add only a small percentage to total latency. As a result, HMA and in-memory systems deliver virtually identical performance for almost all real-world use cases.
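The arithmetic behind this argument can be checked with a back-of-the-envelope budget. The numbers below are assumptions picked from the ranges listed above (a 200 µs network round-trip, 5 ns for the in-memory index lookup and for RAM data access, 5 µs for an SSD read), not measurements. Integer nanoseconds keep the arithmetic exact.

```python
# Back-of-the-envelope read latency budget, in integer nanoseconds.
# All values are illustrative assumptions taken from the ranges in the text.
NETWORK_RTT_NS = 200_000   # "hundreds of microseconds"
INDEX_LOOKUP_NS = 5        # "a few nanoseconds"
RAM_ACCESS_NS = 5          # data access in a pure in-memory system
SSD_ACCESS_NS = 5_000      # "a few microseconds" for HMA data access


def end_to_end_ns(data_access_ns: int) -> int:
    """Network round-trip + index lookup + data access."""
    return NETWORK_RTT_NS + INDEX_LOOKUP_NS + data_access_ns


in_memory_ns = end_to_end_ns(RAM_ACCESS_NS)
hybrid_ns = end_to_end_ns(SSD_ACCESS_NS)
overhead_pct = (hybrid_ns - in_memory_ns) / in_memory_ns * 100

print(f"in-memory: {in_memory_ns / 1000:.3f} us")
print(f"HMA:       {hybrid_ns / 1000:.3f} us")
print(f"data access ratio: {SSD_ACCESS_NS // RAM_ACCESS_NS}x, "
      f"end-to-end overhead: {overhead_pct:.1f}%")
```

Even though the SSD read is 1,000 times slower than the RAM access in this model, it adds only about 2.5% to end-to-end latency, because the network round-trip dominates the total.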

The write path follows the same deterministic pattern. The client sends the request directly to the node responsible for the record, and that node handles replication to its peers. Updating the primary index is a bounded-time, in-memory operation, and the data itself is written to disk with a single access.
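A similarly hedged toy model of the write path follows. `Cluster`, `Node`, and `apply_write` are hypothetical names; the replica placement (the master plus the next nodes in ring order) is an assumption for illustration, not Aerospike's actual partition-assignment algorithm; and each write is a single append to a `bytearray` "device" followed by an in-memory index update. Real Aerospike handles buffering, durability, and replication details that this sketch omits.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

N_PARTITIONS = 4096


def digest(key: str) -> bytes:
    # Assumption: SHA-1 stands in for Aerospike's RIPEMD-160 key digest.
    return hashlib.sha1(key.encode("utf-8")).digest()


@dataclass
class Node:
    name: str
    index: Dict[bytes, Tuple[int, int]] = field(default_factory=dict)  # digest -> (offset, length)
    device: bytearray = field(default_factory=bytearray)  # toy stand-in for an SSD

    def apply_write(self, d: bytes, value: bytes) -> None:
        offset = len(self.device)
        self.device.extend(value)              # one sequential append to storage
        self.index[d] = (offset, len(value))   # bounded-time in-memory index update


class Cluster:
    def __init__(self, nodes: List[Node], replication_factor: int = 2) -> None:
        self.nodes = nodes
        self.replication_factor = replication_factor

    def replicas_for(self, d: bytes) -> List[Node]:
        # Hypothetical placement: the master plus the next nodes in ring order.
        pid = int.from_bytes(d[:2], "little") & (N_PARTITIONS - 1)
        start = pid % len(self.nodes)
        return [self.nodes[(start + i) % len(self.nodes)]
                for i in range(self.replication_factor)]

    def write(self, key: str, value: bytes) -> Node:
        d = digest(key)
        master, *replicas = self.replicas_for(d)
        master.apply_write(d, value)   # the client sends the write only to the master
        for peer in replicas:          # the master replicates to its peers
            peer.apply_write(d, value)
        return master


nodes = [Node("a"), Node("b"), Node("c")]
cluster = Cluster(nodes, replication_factor=2)
master = cluster.write("user:1", b"alice")
```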

Efficient architecture

We engineered the Aerospike primary index to be compact: it holds one small, fixed-size entry per record, so even the index for a very large dataset fits within a reasonable amount of RAM. As a result, the memory footprint of an Aerospike cluster is only a fraction of the total dataset size, which is why Aerospike needs far less memory than pure in-memory systems and delivers substantial cost savings.

This architecture also makes Aerospike more cost-effective than traditional persistent NoSQL databases, whose performance is constrained by less efficient access patterns, such as needing more than one disk operation to serve a single request.

Ultimately, performance gains do not come from “making a computer run faster,” but from reducing CPU cycles and minimizing memory and disk operations. The same architectural choices that enable Aerospike to perform as well as in-memory systems also allow it to use significantly fewer resources than competing technologies. This is reflected in our Cassandra and ScyllaDB benchmarks, where Aerospike delivered substantially better performance while using roughly 33% less hardware.

Are there any trade-offs?

Designing any software system requires balancing competing priorities, and Aerospike is no exception: like every technology, it involves trade-offs. We consider ours reasonable and fully justified by the performance, efficiency, and predictability they make possible, which is why we are always comfortable discussing them openly.

1. Rebuilding the primary index on node restart

The first trade-off stems from keeping the primary index entirely in memory. When a node restarts, it must rebuild this index. Depending on the amount of data on the node, this rebuild may take a few minutes as the system scans the storage layer at startup.
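A cold-start rebuild amounts to a full sequential scan of the storage device. The sketch below assumes a hypothetical on-device layout (a fixed header carrying a 20-byte key digest, a generation counter, and the value length, followed by the value) and again uses SHA-1 as a stand-in digest. In this append-only layout, updates leave older copies of a record behind, so the scan keeps only the highest generation for each digest. Aerospike's real on-disk format and rebuild logic are more involved.

```python
import hashlib
import struct
from typing import Dict, Tuple

# Hypothetical record header: 20-byte digest, 4-byte generation, 2-byte value length.
HEADER = struct.Struct("<20sIH")


def digest(key: str) -> bytes:
    # Assumption: SHA-1 (20 bytes) stands in for Aerospike's RIPEMD-160 digest.
    return hashlib.sha1(key.encode("utf-8")).digest()


def append_record(device: bytearray, key: str, generation: int, value: bytes) -> None:
    """Append-only write: header followed by the value bytes."""
    device.extend(HEADER.pack(digest(key), generation, len(value)))
    device.extend(value)


def rebuild_index(device: bytes) -> Dict[bytes, Tuple[int, int, int]]:
    """Scan the whole device and rebuild digest -> (generation, offset, length)."""
    index: Dict[bytes, Tuple[int, int, int]] = {}
    pos = 0
    while pos + HEADER.size <= len(device):
        d, generation, length = HEADER.unpack_from(device, pos)
        value_offset = pos + HEADER.size
        current = index.get(d)
        # Keep only the newest copy; older generations are stale leftovers.
        if current is None or generation > current[0]:
            index[d] = (generation, value_offset, length)
        pos = value_offset + length
    return index


device = bytearray()
append_record(device, "user:1", 1, b"alice")
append_record(device, "user:2", 1, b"bob")
append_record(device, "user:1", 2, b"alice-v2")  # the update leaves the old copy behind
index = rebuild_index(bytes(device))
```

The scan cost grows with the amount of data on the device, which is why a cold rebuild on a large node can take minutes.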

To mitigate this, we engineered the primary index to live in shared memory, outside the server process itself. If only the server process restarts, as during a rolling restart, the new process simply reattaches to the existing shared-memory index and resumes immediately, avoiding the rebuild and greatly reducing downtime.
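The reattach idea can be illustrated with Python's `multiprocessing.shared_memory` module. This is only an analogy: Aerospike's server is written in C and manages its own shared-memory segments, and the fixed-size entry layout here is hypothetical. `publish_index` places index entries in a named segment; `reattach_index` attaches to that segment by name and reads the entries back without touching storage, as a restarted process would. For testability both steps run in one process. Note that Python's resource tracker may remove segments when the creating interpreter exits, so a real cross-process setup needs more care.

```python
import struct
from multiprocessing import shared_memory
from typing import List, Tuple

COUNT = struct.Struct("<I")       # number of entries
ENTRY = struct.Struct("<20sQI")   # hypothetical entry: digest, device offset, length


def publish_index(entries: List[Tuple[bytes, int, int]]) -> shared_memory.SharedMemory:
    """Original process: copy the index into a named shared-memory segment."""
    shm = shared_memory.SharedMemory(create=True,
                                     size=COUNT.size + ENTRY.size * len(entries))
    COUNT.pack_into(shm.buf, 0, len(entries))
    for i, entry in enumerate(entries):
        ENTRY.pack_into(shm.buf, COUNT.size + i * ENTRY.size, *entry)
    return shm


def reattach_index(name: str) -> List[Tuple[bytes, int, int]]:
    """Restarted process: attach by name and read the index; no storage scan."""
    shm = shared_memory.SharedMemory(name=name)
    try:
        (count,) = COUNT.unpack_from(shm.buf, 0)
        return [ENTRY.unpack_from(shm.buf, COUNT.size + i * ENTRY.size)
                for i in range(count)]
    finally:
        shm.close()


entries = [(b"\x01" * 20, 0, 100), (b"\x02" * 20, 100, 250)]
segment = publish_index(entries)
try:
    restored = reattach_index(segment.name)
finally:
    segment.close()
    segment.unlink()
```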

2. Fixed-size primary index entries

The second trade-off relates to how we keep the index compact. Each primary index entry is a fixed 64 bytes, regardless of the size of the record it points to. This is extremely small and yields substantial memory savings in most scenarios.

With a typical average record size of 1 KB, the index for 1 TB of data requires about 62.5 GB of memory. If the average record size doubles to 2 KB, the index requirement halves to about 31 GB. However, as records get smaller, the index-to-data ratio grows and the memory savings diminish. In the extreme case of 64-byte records, the index is as large as the data itself, so Aerospike's memory footprint approaches that of a pure in-memory system, although Aerospike still provides durability that in-memory systems do not. In such scenarios, we recommend grouping many small objects into larger records.
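The sizing arithmetic is easy to reproduce. The sketch assumes decimal terabytes and gigabytes (10^12 and 10^9 bytes) together with 1 KB = 1,024 bytes, which is the combination that yields the 62.5 GB figure, and it counts index memory for a single copy of the data. The 6,400-byte case is a hypothetical grouping of one hundred 64-byte objects into one record.

```python
INDEX_ENTRY_BYTES = 64  # fixed size of one primary index entry
TB = 10**12             # decimal terabyte
GB = 10**9              # decimal gigabyte


def index_bytes(data_bytes: int, avg_record_bytes: int) -> int:
    """Index memory for one copy of the data: one fixed-size entry per record."""
    records = data_bytes // avg_record_bytes
    return records * INDEX_ENTRY_BYTES


# 1 KB and 2 KB records, tiny 64-byte records, and 100 x 64 B objects grouped
# into a single 6,400-byte record (hypothetical grouping).
for record_size in (1024, 2048, 64, 6400):
    idx = index_bytes(TB, record_size)
    print(f"{record_size:>5} B records: {idx / GB:6.2f} GB of index per TB "
          f"({idx / TB:.2%} of the data)")
```

In this model, grouping brings the index for the 64-byte case down from the full size of the data to 10 GB, or 1% of the data.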

3. Optimal performance requires SSDs as raw block devices

The final notable trade-off relates to storage configuration. Aerospike delivers its best latency when SSDs with NVMe interfaces are attached as raw block devices (i.e., without a file system). As SSDs are now standard across modern server environments, this is less of a practical constraint than it was a decade ago when we built this technology, but it's still worth highlighting.

By bypassing the file system and interacting directly with the device, we eliminate unnecessary overhead and achieve significantly lower latency. If SSDs are unavailable, Aerospike will still perform well, but the performance gap relative to in-memory systems increases. Even so, Aerospike remains an order of magnitude faster than traditional NoSQL databases.

No more trade-offs between performance and price

Aerospike delivers in-memory performance at on-disk cost. A compact in-memory primary index, combined with SSD-based data storage, allows HMA to provide memory-like latency, petabyte-scale capacity, and predictable behavior, while avoiding an all-RAM footprint or a fragile cache layer.

For teams that need real-time access to growing datasets, HMA offers a practical path forward: near in-memory performance, durable on-disk storage, and a cost profile that stays under control.