Artificial Intelligence

Build the highest-performing, most precise, and cost-effective AI

Build AI apps that act on massive amounts of real-time data, creating an effectively infinite context window on less infrastructure.

Accurate predictions, faster

Feed data-hungry AI algorithms by ingesting, persisting, searching, and retrieving massive amounts of data at the industry's lowest latency.

No more limits

Achieve high performance and scale (billions of graph vertices, each with thousands of edges, or large volumes of vectors) while reducing costs by 80%, all at the same time.

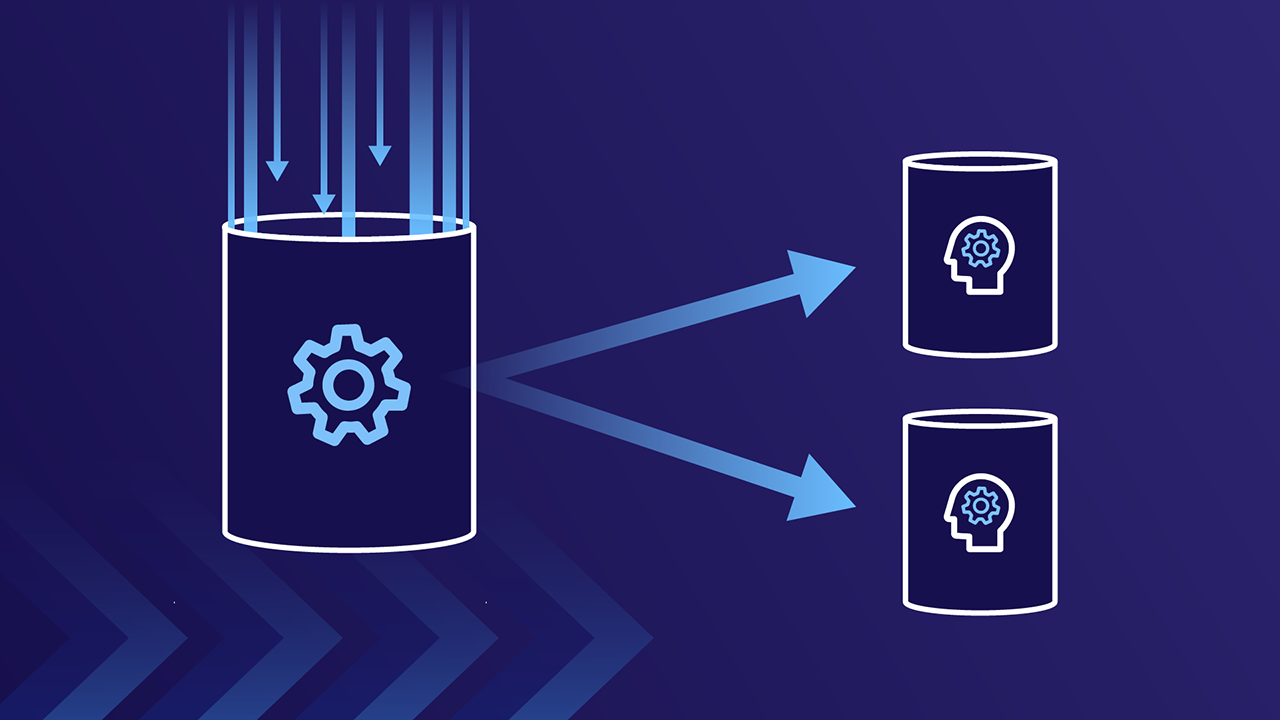

Maximum flexibility

Choose the best representation for your AI features with one database that handles vector search, key-value, and graph workloads for all real-time AI use cases.

Get started with Aerospike

Discover how to leverage Aerospike for your AI application