Optimizing your database infrastructure costs

The success of modern tech products depends on collecting and using big data efficiently and, even more, on the database infrastructure that stores, processes, and analyzes it. Mismanaged infrastructure can quickly become so expensive that it erodes a business’s profitability. In this article, we discuss how to avoid costly mistakes on your path to growth and how to optimize database infrastructure costs, using real-life examples.

Tricky nature of database infrastructure costs

Tech companies frequently underestimate the cost of operations. A misconfigured deployment can produce an unexpectedly large cloud bill, in extreme cases large enough to threaten the company’s solvency.

For instance, one company woke up to a $72,000 overnight bill on the Google Cloud Platform (GCP). While setting up a test project, they misconfigured their deployment of Google Firestore, a NoSQL database. As a result, 116 billion reads and 33 million writes were made to Firestore.
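Per-operation pricing is what makes a runaway workload so expensive: each read costs a tiny fraction of a cent, but billions of them add up. The Python sketch below estimates such a bill. The unit prices are assumptions chosen for illustration (actual Firestore pricing varies by region and has changed over time), so treat the result as an order-of-magnitude check only.

```python
# Rough estimate of a pay-per-operation database bill.
# ASSUMPTION: the unit prices below are illustrative placeholders,
# not current Firestore list prices; check your provider's pricing.
PRICE_PER_100K_READS = 0.06   # USD per 100,000 document reads (assumed)
PRICE_PER_100K_WRITES = 0.18  # USD per 100,000 document writes (assumed)


def operation_cost(reads: int, writes: int) -> float:
    """Return the estimated cost in USD for the given read and write counts."""
    read_cost = reads / 100_000 * PRICE_PER_100K_READS
    write_cost = writes / 100_000 * PRICE_PER_100K_WRITES
    return read_cost + write_cost


if __name__ == "__main__":
    # Operation counts from the incident described above.
    estimate = operation_cost(reads=116_000_000_000, writes=33_000_000)
    print(f"Estimated bill: ${estimate:,.2f}")
```

Under these assumed prices, the reads alone account for about $69,600, which shows how a bill can reach five figures overnight without any single request looking expensive.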

For tech companies, factoring database infrastructure costs into their unit economics is critical: if a company underestimates the cost of its operations, scaling can easily sink the business.
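Unit economics makes this concrete: if infrastructure cost per unit sold pushes the contribution margin below zero, every additional customer deepens the loss. The sketch below uses a hypothetical `UnitEconomics` class and made-up figures (revenue and costs per 1,000 requests served) purely to illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class UnitEconomics:
    """Per-unit figures for a usage-based product (hypothetical values)."""
    revenue_per_unit: float     # e.g. revenue per 1,000 requests served
    infra_cost_per_unit: float  # cloud and database cost attributable to one unit
    other_cost_per_unit: float  # any other variable cost per unit

    def contribution_margin(self) -> float:
        """Revenue left per unit after variable costs."""
        return self.revenue_per_unit - self.infra_cost_per_unit - self.other_cost_per_unit


def monthly_profit(econ: UnitEconomics, units: int, fixed_costs: float) -> float:
    """Profit for a month in which `units` units are sold."""
    return econ.contribution_margin() * units - fixed_costs


if __name__ == "__main__":
    healthy = UnitEconomics(revenue_per_unit=1.00, infra_cost_per_unit=0.20, other_cost_per_unit=0.30)
    # Same product, but infrastructure cost per unit has ballooned.
    bloated = UnitEconomics(revenue_per_unit=1.00, infra_cost_per_unit=0.80, other_cost_per_unit=0.30)
    for units in (100_000, 1_000_000, 10_000_000):
        print(
            f"{units:>10,} units: "
            f"healthy {monthly_profit(healthy, units, 50_000):>12,.0f}, "
            f"bloated {monthly_profit(bloated, units, 50_000):>12,.0f}"
        )
```

With a negative contribution margin, the bloated scenario loses more money the more it sells, which is how scaling can sink a business that ignored its infrastructure costs.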

Database infrastructure cost optimization

To understand why infrastructure costs are so often mismanaged, let’s review a case from Xenoss’ practice. It illustrates how poor architectural decisions by product managers can hurt a business, and how such a platform can still be salvaged.

An ad tech company facing surging operating costs turned to Xenoss for an urgent infrastructure revamp. The company had built its product from scratch for the buy side: a demand-side platform (DSP) for trading ads.

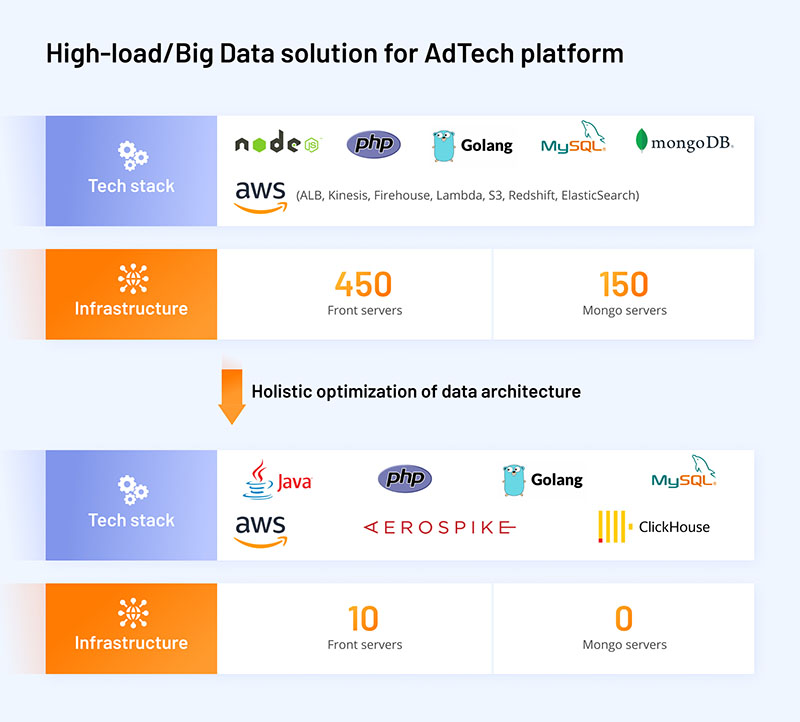

The product team chose a classic tech stack (Node.js, PHP, Go, MongoDB, and MySQL) and Amazon’s load balancers. After three years of development, they had six load balancers distributing traffic across 450 front-end servers, backed by 150 MongoDB servers.

The company managed to keep its development team relatively small. However, its AWS costs started surging almost immediately, growing by 27% each month.
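A 27% monthly increase compounds quickly. The Python sketch below uses hypothetical helper names to project a bill under a constant monthly growth rate. It illustrates the compounding arithmetic only and does not model the client’s actual billing history.

```python
import math


def project_bill(start: float, monthly_growth: float, months: int) -> list[float]:
    """Return the bill for each of `months` months, compounding `monthly_growth`."""
    bills = []
    bill = start
    for _ in range(months):
        bills.append(bill)
        bill *= 1 + monthly_growth
    return bills


def growth_multiplier(monthly_growth: float, months: int) -> float:
    """How many times larger the bill is after `months` months of growth."""
    return (1 + monthly_growth) ** months


def months_until(multiple: float, monthly_growth: float) -> int:
    """Smallest whole number of months for the bill to grow by `multiple`."""
    return math.ceil(math.log(multiple) / math.log1p(monthly_growth))


if __name__ == "__main__":
    # Hypothetical starting bill of $10,000/month, growing 27% per month.
    for month, bill in enumerate(project_bill(10_000, 0.27, 12), start=1):
        print(f"month {month:2d}: ${bill:,.0f}")
    print(f"12-month multiplier: {growth_multiplier(0.27, 12):.1f}x")
    print(f"months to double: {months_until(2, 0.27)}")
```

At a constant 27% per month, a bill doubles in three months and grows roughly 17.6-fold over twelve, a pace few businesses can match with revenue.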

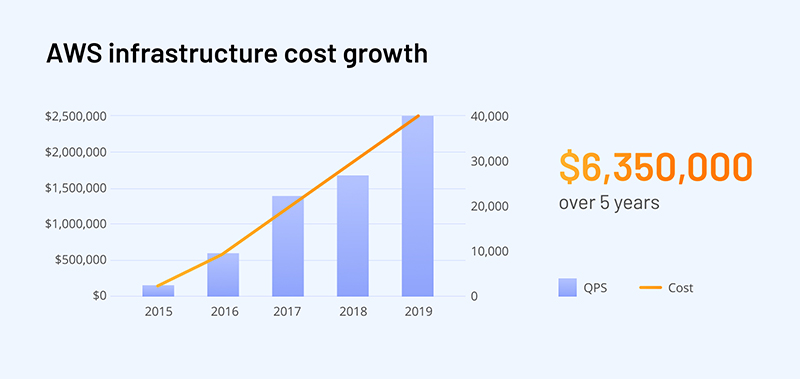

Figure 1. A DSP’s rising cloud bill from Amazon

Right after the launch in 2015, the infrastructure bill was negligible, but by 2016 it had grown to half a million dollars a year, and by 2019 it was close to $2.5 million a year. Overall, the company spent over $6 million on database infrastructure over five years.

That money could have gone to business development, marketing, and other strategic initiatives that generate growth. Instead, it went to AWS. A large bank would hardly notice such expenses, but for a growing startup, they are hard to justify to the board.

Those infrastructure expenses could have funded seed rounds for several startups. Had investors known in 2015 what the cloud bill would be a few years later, they would have seriously reconsidered investing in the company.

Tech solution: What did we do?

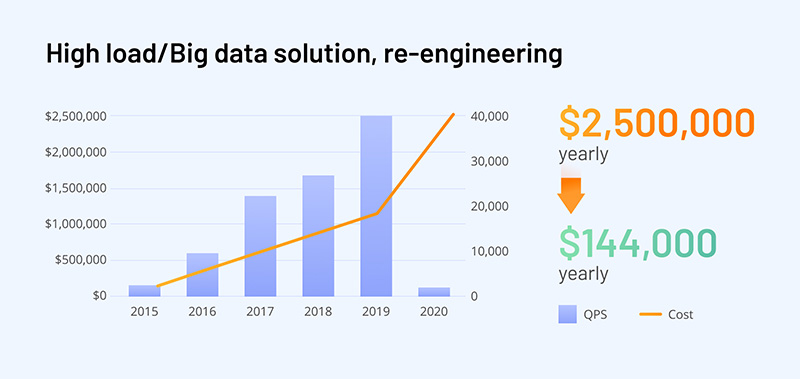

By the time the new approach was introduced, the client had already wasted close to $5.5 million on infrastructure mismanagement, so a fast change was critical. After analyzing the system’s bottlenecks and carrying out a complete revamp, we cut yearly database infrastructure costs from $2,500,000 to $144,000. Getting there took several steps, including redesigning the cloud architecture, the real-time infrastructure, and the data pipelines. Below, we dive into the details to illustrate one approach you can take to similar problems.

Figure 2. Re-engineering database architecture

Cloud architecture revamp

When looking for ways to cut costs in an architecture, you have to consider your use of shared-economy (pay-per-use, multi-tenant) services. For instance, a modest number of AWS Lambda requests can be cost-effective, but those costs can rise tenfold once you operate a high-load solution. At that point, you end up paying more for shared hardware than you would to operate equivalent hardware yourself.
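The point at which pay-per-use stops paying off can be estimated with a simple cost model. The Python sketch below compares a service billed per request with a fleet of self-managed servers. All prices and capacities are hypothetical placeholders rather than AWS list prices, and the model leaves out the engineering effort of operating your own hardware.

```python
import math


def pay_per_use_cost(requests: int, price_per_million: float) -> float:
    """Monthly cost of a managed service billed per request."""
    return requests / 1_000_000 * price_per_million


def self_managed_cost(requests: int, capacity_per_server: int, server_cost: float) -> float:
    """Monthly cost of running enough servers to handle `requests` yourself."""
    servers = max(1, math.ceil(requests / capacity_per_server))
    return servers * server_cost


def break_even_requests(price_per_million: float, server_cost: float) -> float:
    """Request volume at which pay-per-use spending equals one server's cost.

    Only meaningful while a single server can absorb that volume.
    """
    return server_cost / price_per_million * 1_000_000


if __name__ == "__main__":
    # Hypothetical figures, not actual AWS prices.
    PRICE_PER_MILLION = 5.0   # USD per million requests, all-in
    SERVER_COST = 300.0       # USD per server per month
    CAPACITY = 500_000_000    # requests one server handles per month

    print(f"break-even: {break_even_requests(PRICE_PER_MILLION, SERVER_COST):,.0f} requests/month")
    for requests in (10_000_000, 100_000_000, 1_000_000_000, 10_000_000_000):
        managed = pay_per_use_cost(requests, PRICE_PER_MILLION)
        own = self_managed_cost(requests, CAPACITY, SERVER_COST)
        print(f"{requests:>14,}: managed ${managed:>9,.0f} vs self-managed ${own:>7,.0f}")
```

The crossover reflects the pattern described above: at low volume the managed service is cheaper, while at high volume the per-request premium dominates the bill.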

To provision resources efficiently for the client, we replaced pricey managed cloud services with more cost-effective self-managed tools. This meant eliminating shared-economy services, dropping billable data-transfer services (Kinesis, Firehose), and improving load balancing.

Re-engineering architecture for real-time

Xenoss deployed an on-premises system and re-engineered the architecture so that it no longer relies on Amazon APIs. The team also adapted the system for real-time processing, replacing Node.js with Java: with thousands of queries per second and fluctuating traffic patterns, Java offers a much more resilient environment.

Our architects also replaced the MongoDB-based data model with Aerospike’s node-local in-memory storage for real-time access. This significantly increased each server’s performance, reducing the number of servers from 450 to 10 while handling twice the traffic. On top of that, we implemented efficient, scalable in-memory search with K-D tree indexing.
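The article does not describe how the K-D indexing was implemented. As a general illustration of the technique, here is a minimal in-memory k-d tree with an axis-aligned range query in Python. The data (city coordinates standing in for geo-targeting points) and all function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

Point = tuple[float, ...]


@dataclass
class Node:
    point: Point
    payload: str
    axis: int                     # coordinate this node splits on
    left: Optional[Node] = None   # points with coordinate <= point[axis]
    right: Optional[Node] = None  # points with coordinate >= point[axis]


def build(items: list[tuple[Point, str]], depth: int = 0) -> Optional[Node]:
    """Build a balanced k-d tree by splitting on the median of each axis in turn."""
    if not items:
        return None
    axis = depth % len(items[0][0])
    ordered = sorted(items, key=lambda item: item[0][axis])
    mid = len(ordered) // 2
    point, payload = ordered[mid]
    return Node(
        point=point,
        payload=payload,
        axis=axis,
        left=build(ordered[:mid], depth + 1),
        right=build(ordered[mid + 1:], depth + 1),
    )


def range_search(node: Optional[Node], low: Point, high: Point,
                 out: Optional[list[str]] = None) -> list[str]:
    """Return payloads of all points inside the box low <= p <= high (inclusive)."""
    if out is None:
        out = []
    if node is None:
        return out
    if all(lo <= c <= hi for lo, c, hi in zip(low, node.point, high)):
        out.append(node.payload)
    split = node.point[node.axis]
    # Prune: only visit subtrees whose half-space can overlap the query box.
    if low[node.axis] <= split:
        range_search(node.left, low, high, out)
    if high[node.axis] >= split:
        range_search(node.right, low, high, out)
    return out


if __name__ == "__main__":
    # Hypothetical geo-targeting points: (latitude, longitude) -> label.
    points = [
        ((40.71, -74.00), "new_york"),
        ((34.05, -118.24), "los_angeles"),
        ((41.88, -87.63), "chicago"),
        ((42.36, -71.06), "boston"),
        ((47.61, -122.33), "seattle"),
    ]
    tree = build(points)
    print(sorted(range_search(tree, (38.0, -90.0), (43.0, -70.0))))
```

Each query descends only into branches whose split planes can intersect the search box, so it typically avoids scanning the whole dataset. A production index would also need to handle inserts, deletes, rebalancing, and concurrent access.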

Re-engineering data pipelines

To eliminate redundant data transfer and access, we re-engineered the data pipeline so that data is written and read only once for intermediate operations. This significantly reduced storage and access costs and opened up more advanced options for data compression and warehousing.
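One way to apply the write-once, read-once rule is to stream raw events through parsing and aggregation in a single pass and persist only the compressed result. The Python sketch below is hypothetical: the event fields (`campaign`, `price`) and the JSON-lines format are assumptions, since the article does not describe the original pipeline’s tooling.

```python
import gzip
import io
import json
from collections import defaultdict
from typing import BinaryIO, Iterable, Iterator


def parse(lines: Iterable[str]) -> Iterator[dict]:
    """Lazily parse JSON-lines records, skipping blank lines."""
    for line in lines:
        line = line.strip()
        if line:
            yield json.loads(line)


def aggregate(events: Iterable[dict]) -> dict[str, dict[str, float]]:
    """Count impressions and sum spend per campaign in a single pass."""
    totals: dict[str, dict[str, float]] = defaultdict(lambda: {"impressions": 0, "spend": 0.0})
    for event in events:
        bucket = totals[event["campaign"]]
        bucket["impressions"] += 1
        bucket["spend"] += event["price"]
    return dict(totals)


def write_compressed(totals: dict[str, dict[str, float]], sink: BinaryIO) -> None:
    """Write the aggregated result once, gzip-compressed, as JSON lines."""
    # Closing the GzipFile flushes it but leaves `sink` open.
    with gzip.GzipFile(fileobj=sink, mode="wb") as gz:
        for campaign, stats in sorted(totals.items()):
            record = {"campaign": campaign, **stats}
            gz.write((json.dumps(record) + "\n").encode("utf-8"))


if __name__ == "__main__":
    raw = io.StringIO(
        '{"campaign": "a", "price": 0.5}\n'
        '{"campaign": "b", "price": 1.0}\n'
        '{"campaign": "a", "price": 0.25}\n'
    )
    buffer = io.BytesIO()
    # Each raw line is read once; only the compressed aggregate is written.
    write_compressed(aggregate(parse(raw)), buffer)
    print(gzip.decompress(buffer.getvalue()).decode("utf-8"))
```

Because `parse` is a generator, raw records are never materialized as an intermediate dataset; the only thing written to storage is the small, compressed aggregate.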

Figure 3. High-load, big data solution for adtech

Without this optimization strategy, the platform would have kept wasting $2.5 million a year on infrastructure while remaining unable to scale or increase the system’s load tolerance. It is also essential to understand how the company ended up with such a costly architecture in the first place; we’ll discuss common architectural mistakes in part 2 of this blog series.

Key takeaways for database infrastructure costs

When designing an architecture, the team should estimate how much database query costs will rise as the business scales and the number of partners, the number of clients, or the traffic grows.
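A simple way to act on this is to model query volume as a function of the growth drivers and price it under several scenarios. The Python sketch below is hypothetical: it assumes each request triggers a fixed number of lookups plus one per integrated partner, and it uses a made-up price per million queries. Substitute your own measured query patterns and your provider’s pricing.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    monthly_requests: int  # incoming requests per month
    partners: int          # number of integrated partners


def queries_per_request(partners: int, base: float = 2.0, per_partner: float = 1.0) -> float:
    """Hypothetical model: fixed lookups plus one lookup per partner."""
    return base + per_partner * partners


def monthly_query_cost(s: Scenario, price_per_million_queries: float) -> float:
    """Monthly database query cost for a scenario under the model above."""
    queries = s.monthly_requests * queries_per_request(s.partners)
    return queries / 1_000_000 * price_per_million_queries


if __name__ == "__main__":
    PRICE = 0.25  # hypothetical USD per million queries
    scenarios = [
        Scenario("today", monthly_requests=100_000_000, partners=5),
        Scenario("10x traffic", monthly_requests=1_000_000_000, partners=5),
        Scenario("10x traffic, 4x partners", monthly_requests=1_000_000_000, partners=20),
    ]
    baseline = monthly_query_cost(scenarios[0], PRICE)
    for s in scenarios:
        cost = monthly_query_cost(s, PRICE)
        print(f"{s.name:<26} ${cost:>9,.2f}  ({cost / baseline:.1f}x today)")
```

In this made-up model, ten times the traffic costs ten times as much, but adding partners at the same time pushes the bill past thirty times today’s, the kind of nonlinearity worth discovering at the design stage rather than on an invoice.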

Even at early stages of development, when a solution has little traffic, the product team cannot ignore the fact that costs will rise with scale.

This article was written by guest author Vova Kyrychenko, CTO & Co-founder of Xenoss, and is part 1 of a 3-part series. Stay tuned for parts 2 and 3, and watch the full Architecting for Scale and Success webinar with Aerospike and Xenoss to hear more about this use case.