Protecting your data with replication factor

Learn about replication factor in distributed systems, its role in data availability and fault tolerance, and best practices to optimize performance and redundancy.

Imagine you have a critical document, such as a passport or birth certificate. If you keep only the original in one place and something happens to it (it gets lost, damaged, or destroyed), you're in trouble. But suppose you make several certified copies and store them in different safe locations: one at home, another in a safety deposit box, and one with a trusted friend. If something happens to one copy, you can still use the others.

The same is true for your company’s data.

What is a replication factor?

Replication factor refers to the number of copies (replicas) of data distributed across multiple nodes in a cluster. A higher replication factor means more copies. That improves data availability and fault tolerance and reduces the risk of data loss.

This way, if one node goes down, the system retrieves the data from one of the other nodes instead.

Replication in data systems

Different replication strategies and default replication factors affect how data is stored and retrieved across nodes, influencing system performance.

In distributed systems, a replication group (or cluster of nodes) works together to keep data available and accurate across the nodes. This matters because if you lose enough nodes, the database can no longer serve the data. How many copies you need depends on the consistency method. Let N be the number of concurrent node outages to tolerate. With Aerospike, the rule is N+1: tolerating one node outage takes two copies of the data, tolerating two concurrent outages takes three copies, and so on. Most other NoSQL databases that offer strong consistency use the quorum method, meaning 2N+1: tolerating one node outage takes three copies, tolerating two concurrent outages takes five copies, and so on.
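The two rules come down to simple arithmetic, sketched below. The function names and scheme labels are hypothetical and used only for illustration. The sketch models the copy counts stated above, not how any particular database decides availability. The quorum check shows why a majority-based system needs 2N+1 copies: after N failures, the N+1 surviving copies must still form a strict majority.

```python
def copies_needed(failures: int, scheme: str) -> int:
    """Copies required to survive `failures` concurrent node outages.

    scheme="n_plus_1" models the N+1 rule; scheme="quorum" models 2N+1.
    Both labels are hypothetical names used only for this illustration.
    """
    if failures < 0:
        raise ValueError("failures must be non-negative")
    if scheme == "n_plus_1":
        return failures + 1
    if scheme == "quorum":
        return 2 * failures + 1
    raise ValueError(f"unknown scheme: {scheme!r}")


def quorum_available(copies: int, failures: int) -> bool:
    """True if a strict majority of `copies` is still reachable after `failures` outages."""
    majority = copies // 2 + 1
    return copies - failures >= majority


if __name__ == "__main__":
    for n in range(1, 4):
        print(f"tolerate {n}: N+1 -> {copies_needed(n, 'n_plus_1')} copies, "
              f"2N+1 -> {copies_needed(n, 'quorum')} copies")
```

Consider a majority-quorum system that holds only two copies. Here `quorum_available(2, 1)` is `False`, because one survivor is not a majority of two. That is why such a system needs a third copy to ride out a single outage.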

The different components of replication factor

There are more aspects of the replication factor in addition to how many copies are made. Here are some of the most common:

Synchronous and asynchronous replication: In synchronous replication, data is written to multiple nodes before a successful response is returned to the application. This ensures that enough replicas are up to date before the operation is marked as complete. This approach can provide strong consistency and reduces the risk of data conflicts, so it is preferred where data accuracy is critical.

On the other hand, asynchronous replication writes data to one node first and propagates the updates to other nodes later. This reduces latency and improves write performance. However, it typically provides only eventual consistency, where replicas are not immediately synchronized. In the worst case, the application can lose data it believes was written successfully. In other words, asynchronous replication can't produce strong consistency. Depending on the use case, this isn't necessarily a problem. Note, however, that although strong consistency requires synchronous replication, synchronous replication alone does not guarantee strong consistency. Learn more about synchronous versus asynchronous replication in real-time DBMSes.
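The toy model below makes the difference concrete. It is a single-process sketch, and its class and method names are hypothetical rather than any database's API. A synchronous write updates every copy before acknowledging. An asynchronous write acknowledges after updating only the master and queues the rest. If the master fails before the queue drains, the acknowledged write is lost. The model ignores timeouts, concurrent writers, and the additional protocol that strong consistency requires.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """One in-memory copy of the data (toy model)."""
    name: str
    data: dict = field(default_factory=dict)
    alive: bool = True


class ToyCluster:
    """Hypothetical master/replica model used only to contrast write modes."""

    def __init__(self, replication_factor: int) -> None:
        self.replicas = [Replica(f"node{i}") for i in range(replication_factor)]
        self.pending: list = []  # async updates not yet sent to the other copies

    def write(self, key: str, value: str, synchronous: bool) -> bool:
        """Apply a write and return True once it is acknowledged to the client."""
        master, others = self.replicas[0], self.replicas[1:]
        master.data[key] = value
        if synchronous:
            # Synchronous: update every other copy before acknowledging.
            for replica in others:
                replica.data[key] = value
        else:
            # Asynchronous: acknowledge now and propagate later.
            self.pending.append((key, value))
        return True

    def propagate(self) -> None:
        """Drain queued async updates to the surviving copies."""
        for key, value in self.pending:
            for replica in self.replicas[1:]:
                if replica.alive:
                    replica.data[key] = value
        self.pending.clear()

    def fail_master(self) -> None:
        """Crash the master; updates still queued on it are lost."""
        self.replicas[0].alive = False
        self.pending.clear()

    def read(self, key: str):
        """Return the value from the first live copy that has it, else None."""
        for replica in self.replicas:
            if replica.alive and key in replica.data:
                return replica.data[key]
        return None


if __name__ == "__main__":
    for mode in (True, False):
        cluster = ToyCluster(replication_factor=2)
        cluster.write("balance", "100", synchronous=mode)
        cluster.fail_master()
        print("synchronous" if mode else "asynchronous", "->", cluster.read("balance"))
```

Both writes are acknowledged. Yet after the master fails, the synchronous run still reads `100`, while the asynchronous run reads `None`.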

Partitioning and data distribution: Data is often divided into smaller pieces called partitions to spread the workload across the cluster. Each partition is replicated according to the system's replication factor, so copies are distributed evenly among the nodes. This balance helps avoid bottlenecks and improves performance, particularly in large clusters.
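Here is a minimal sketch of the idea. It assumes a hash-based mapping from keys to partitions and a simple round-robin placement of each partition's copies. The partition count, the choice of SHA-256, and the function names are illustrative assumptions, not Aerospike's actual partitioning algorithm.

```python
import hashlib
from collections import Counter

N_PARTITIONS = 4096  # illustrative choice; real systems pick their own count


def partition_of(key: str, n_partitions: int = N_PARTITIONS) -> int:
    """Map a key to a partition by hashing it (SHA-256 here, as an assumption)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % n_partitions


def replica_nodes(partition: int, nodes: list[str], replication_factor: int) -> list[str]:
    """Place a partition's copies on `replication_factor` distinct nodes.

    Round-robin placement: copy i goes to node (partition + i) mod len(nodes).
    """
    if replication_factor > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return [nodes[(partition + i) % len(nodes)] for i in range(replication_factor)]


if __name__ == "__main__":
    nodes = ["A", "B", "C", "D"]
    print("user:42 ->", replica_nodes(partition_of("user:42"), nodes, 2))
    load = Counter()
    for p in range(N_PARTITIONS):
        load.update(replica_nodes(p, nodes, 2))
    print(dict(sorted(load.items())))  # copies of partitions held by each node
```

The sketch uses 4,096 partitions, two copies each, and four nodes. Every node ends up holding 2,048 partition copies, so no single node becomes a storage hotspot.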

Dynamic adjustments to meet evolving needs: Depending on the configuration, platforms such as Aerospike let you adjust the replication factor dynamically without extensive downtime, making it easier to meet changing requirements. This helps businesses that need the system to stay available during busy periods, or when applications are redesigned to access edge data more frequently.

Aerospike’s unique consistency algorithm also helps reduce hardware costs. As mentioned above, some distributed systems use quorum-based consensus algorithms, so tolerating a single node failure requires three copies of the data. Each extra copy brings its own hardware: storage headroom for overhead such as fragmentation and compaction, plus the CPUs, NICs, power supplies, rack space, switches, and other hardware needed to manage the additional storage.

Best practices for replication factor

Selecting the optimal replication factor depends on your specific requirements, including availability, performance, and risk tolerance. A higher replication factor improves redundancy, but it uses more storage and more resources to write each copy. The key is to find a balance that maximizes reliability and fault tolerance without overtaxing the cluster's resources.

Dynamic adjustments and monitoring

Review your replication strategy regularly to adapt to evolving needs. In Aerospike’s Available and Partition-tolerant mode (AP mode), for example, you can adjust the replication factor dynamically. This lets you scale efficiently while keeping data available and minimizing disruptions.

Prioritize node distribution across racks

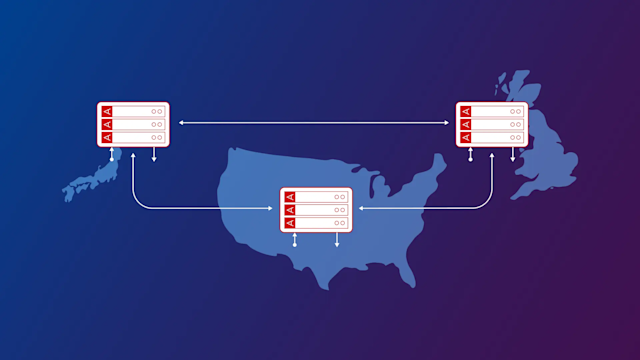

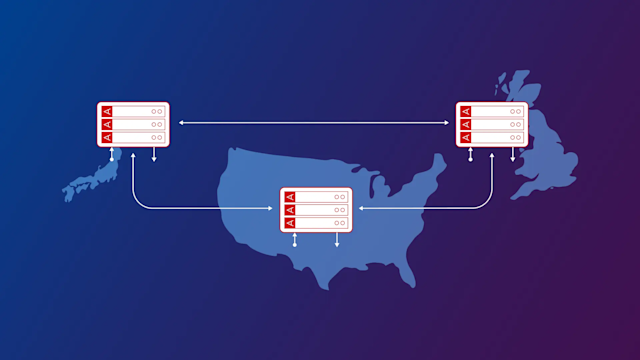

Distributing copies of the data across nodes in different racks or data centers further improves fault tolerance. A typical setup makes the replication factor equal to the number of racks or data centers, so each rack holds a full copy of the data. Your data then remains available even if an entire rack fails. An added benefit is that reads can be served from the local rack or data center, reducing latency.
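The following is a minimal sketch of rack-aware placement. It assumes a hypothetical two-rack layout and a replication factor equal to the number of racks. The function names and the round-robin choice of node within each rack are illustrative, not a specific product's placement algorithm. The sketch checks both benefits described above: every partition survives the loss of a whole rack, and a client in either rack finds a local copy to read.

```python
def node_racks(racks: dict[str, list[str]]) -> dict[str, str]:
    """Invert a rack -> nodes layout into node -> rack."""
    return {node: rack for rack, nodes in racks.items() for node in nodes}


def rack_aware_replicas(partition: int, racks: dict[str, list[str]],
                        replication_factor: int) -> list[str]:
    """Choose one node in each of `replication_factor` different racks."""
    rack_names = sorted(racks)
    if replication_factor > len(rack_names):
        raise ValueError("need at least as many racks as copies")
    chosen = []
    for i in range(replication_factor):
        rack = rack_names[(partition + i) % len(rack_names)]
        members = racks[rack]
        chosen.append(members[partition % len(members)])
    return chosen


def local_replica(partition, racks, replication_factor, client_rack):
    """Return a copy in the client's own rack, or None if that rack has none."""
    rack_of = node_racks(racks)
    for node in rack_aware_replicas(partition, racks, replication_factor):
        if rack_of[node] == client_rack:
            return node
    return None


def survives_rack_loss(partition, racks, replication_factor, failed_rack) -> bool:
    """True if some copy of the partition lives outside the failed rack."""
    rack_of = node_racks(racks)
    return any(rack_of[node] != failed_rack
               for node in rack_aware_replicas(partition, racks, replication_factor))


if __name__ == "__main__":
    layout = {"rack1": ["n1", "n2"], "rack2": ["n3", "n4"]}  # hypothetical layout
    for p in range(4):
        print(p, rack_aware_replicas(p, layout, 2), local_replica(p, layout, 2, "rack2"))
```

With a replication factor of 1 instead, each partition lives in a single rack. Losing that rack makes the partition unavailable, which is the case the rack-per-copy setup avoids.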

Handling data consistency in high availability scenarios

Use synchronous replication methods for scenarios where data accuracy is critical. This approach means data writes are confirmed across all replicas before they’re marked as complete, reducing the risk of inconsistency during failures.

How Aerospike implements replication factor

Replication factor has different meanings for different database vendors. To some, including Aerospike, a replication factor of two means two copies of every piece of the data. To others, it means two spare copies of the data for three total copies. For the purpose of this discussion, this article will use the former definition.

How you implement your replication strategy affects infrastructure costs. Because Aerospike doesn’t depend on quorum consistency, it typically requires a replication factor of only two, compared with the three that most other systems require. The result is fewer resources used and high data availability with fewer nodes. Cassandra often requires extensive hardware investment to handle data redundancy. By comparison, Aerospike’s architecture minimizes the total cost of ownership (TCO), making it a more budget-friendly solution without sacrificing performance or scalability.
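The back-of-the-envelope sketch below shows how the replication factor scales hardware. The data size, per-node capacity, and overhead multiplier are hypothetical inputs. The model ignores everything except storage capacity and only shows the proportional effect of going from two copies to three.

```python
import math


def raw_storage_tb(unique_data_tb: float, replication_factor: int,
                   overhead: float = 1.0) -> float:
    """Raw capacity needed: unique data x number of copies x overhead multiplier.

    `overhead` is a hypothetical factor for headroom such as fragmentation
    and compaction; measure it for your own workload.
    """
    if replication_factor < 1 or overhead < 1.0:
        raise ValueError("replication_factor must be >= 1 and overhead >= 1.0")
    return unique_data_tb * replication_factor * overhead


def nodes_needed(raw_tb: float, usable_tb_per_node: float) -> int:
    """Smallest whole number of nodes whose usable capacity covers raw_tb."""
    return math.ceil(raw_tb / usable_tb_per_node)


if __name__ == "__main__":
    unique_tb, per_node_tb = 10.0, 4.0  # hypothetical figures for illustration
    for rf in (2, 3):
        raw = raw_storage_tb(unique_tb, rf)
        print(f"RF {rf}: {raw:.0f} TB raw, {nodes_needed(raw, per_node_tb)} nodes")
```

With these made-up figures, RF 2 needs 20 TB across 5 nodes, and RF 3 needs 30 TB across 8 nodes. That is 50% more raw storage and three more nodes, before counting the CPUs, NICs, and rack space that come with each node.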

You can read more about this in our e-book, Five places your true database costs are hiding.

Flexibility and scalability of Aerospike’s replication

One advantage of Aerospike’s AP mode is its ability to dynamically adjust the replication factor without extensive downtime. Aerospike's architecture is built to handle these adjustments efficiently, reducing the risk of data loss and ensuring higher fault tolerance.

This flexibility contrasts with Cassandra, where changing the replication factor requires the operator to run a data repair (nodetool repair). Repair is resource-intensive and can affect performance. For more information on how Aerospike's architecture supports this flexibility, see data distribution.

Data durability and consistency

Aerospike also excels in maintaining data durability and consistency. It supports both synchronous and asynchronous data replication, letting you choose the balance between speed and reliability that your use case needs. Where integrity is critical, synchronous replication protects data even when nodes fail.

Take your next step with Aerospike

Understanding replication factor is essential for ensuring data reliability and high availability in distributed databases. Aerospike’s architecture is designed to optimize these aspects, efficiently managing data distribution and durability. Unlike Cassandra, which struggles with performance issues as replication factors increase, Aerospike's consistency and data distribution models mean it scales efficiently without compromising speed or data integrity. With Aerospike, organizations run more efficiently with fewer resources.

Explore our resources on data distribution, durability configurations, and consistency models to learn more about how Aerospike handles replication factors and data consistency.

Ready to see how Aerospike can power your data strategy? Check out our free trial, or contact our team for a personalized demo.
