What is data redundancy?

Understand data redundancy, how planned replication boosts availability and recovery, the risks of inconsistency and cost, and practical steps to implement it safely.

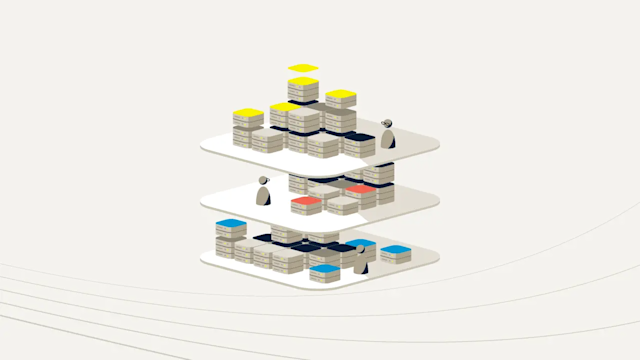

Data redundancy is the condition in which the same logical data is stored in two or more independent physical locations. In other words, identical information resides in multiple files, databases, or systems at the same time. For example, the same customer record might appear in separate departmental databases, or a file might be copied to several servers. This duplication can happen intentionally or unintentionally. When it is intentional, the point is to duplicate important system elements so that when one fails, others take over.

Intentional redundancy is introduced on purpose to improve reliability, data availability, or performance. For instance, replicating data across servers gives users access to information even if one server fails. Keeping multiple synchronized copies is a deliberate design choice in high-availability architectures.

Unintentional redundancy usually stems from poor design, siloed systems, or human error. This happens when the same data gets stored repeatedly by accident, such as in improperly normalized databases or when merging records from different sources. Unintentional duplicates lead to inconsistencies, errors, and wasted storage.

In traditional data management, especially with a relational database, eliminating excessive redundancy is a goal because of the risk of anomalies and discrepancies. However, redundancy is not inherently bad. When managed, redundant copies of data are useful. In short, data redundancy is both a friend and a foe; the difference lies in whether it’s planned and controlled.

The terms redundancy and replication are often used interchangeably, but strictly speaking they differ, and two related terms are worth separating as well:

Redundancy is the property of having multiple independent copies of the same data.

Replication is a mechanism for creating those copies and keeping them in sync.

Backups are point-in-time copies, usually kept offline or immutable.

Duplication is any extra copy, intentional or not.

Benefits of data redundancy

Intentional data redundancy, implemented through techniques such as database replication, mirrored storage, and backups, provides numerous benefits for organizations. By having multiple copies of your data, you add a safety net that protects against failure. Here are the advantages of data redundancy and why it matters for today’s businesses.

Supporting high availability and uptime

One of the primary reasons to maintain redundant data is to provide high availability. In a redundant system, if one server, disk, or data center goes down, another copy of the data is ready to serve requests without interruption. Redundancy eliminates single points of failure by having backups that take over when a failure occurs.

In practice, this means clustering servers or cloud nodes so that when one source of data fails, another copy is available. By replicating data across multiple servers or locations, companies get near-continuous service. Even if one component crashes, users are redirected to a healthy copy of the data with minimal downtime. This failover capability helps meet the “five nines” (99.999%) availability that many enterprises want.

In essence, data redundancy underpins fault-tolerant architectures. It keeps applications running through hardware outages, network issues, or other disruptions by always having another up-to-date copy to rely on.
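To put numbers on the availability argument above, here is a minimal sketch of the arithmetic. It assumes copies fail independently of each other, which real deployments only approximate (shared power, networks, or software bugs can take out several copies at once). The function names are illustrative, not part of any product.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_minutes_per_year(availability: float) -> float:
    """Expected unavailable minutes per year for a given availability (0..1)."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def combined_availability(per_copy: float, copies: int) -> float:
    """Chance that at least one copy is up, ASSUMING independent failures."""
    return 1.0 - (1.0 - per_copy) ** copies


# "Five nines" leaves a budget of roughly five minutes of downtime per year.
five_nines_budget = downtime_minutes_per_year(0.99999)

# Each copy being available 99% of the time is an assumed figure for illustration.
two_copies = combined_availability(0.99, 2)
three_copies = combined_availability(0.99, 3)
```

Under these assumptions, two copies that are each available 99% of the time give about 99.99% combined, and three give about 99.9999%. The independence assumption is the part to scrutinize in practice.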

Faster data access and performance scaling

Copies of data in multiple places also boost performance and scalability. With redundant datasets, read requests get distributed to different nodes, avoiding bottlenecks.

For example, in an active-active cluster, all nodes hold the same data, so they share the query load and respond faster to users. Geographic redundancy (maintaining data copies in various regions) lets users retrieve data from the nearest server, reducing latency. Distributed data replicas lead to lower latencies, faster loading, and faster information retrieval for users.

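Multiple database replicas also handle higher read throughput. As read traffic grows, adding new nodes with copies of the data scales out read capacity almost linearly. Writes still have to reach every copy, so write capacity does not grow the same way.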

In addition, redundant data means quicker access in some scenarios. Employees or applications read a local copy of the information they need instead of everyone contending for one centralized database, which helps maintain performance when the system is busy.

Overall, by spreading read workloads and placing data closer to where it’s needed, redundancy not only prevents downtime but also contributes to a faster, more scalable system that meets performance requirements.
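The read distribution described in this section can be sketched as a simple round-robin router that skips replicas marked unhealthy. This is a toy illustration: the `ReadRouter` class and the replica names are hypothetical, and production load balancers also weigh latency, load, and data freshness.

```python
from itertools import cycle


class ReadRouter:
    """Rotate read requests across replicas, skipping any marked as down."""

    def __init__(self, replicas: list[str]) -> None:
        if not replicas:
            raise ValueError("at least one replica is required")
        self._replicas = list(replicas)
        self._healthy = set(replicas)
        self._ring = cycle(self._replicas)

    def mark_down(self, name: str) -> None:
        self._healthy.discard(name)

    def mark_up(self, name: str) -> None:
        if name in self._replicas:
            self._healthy.add(name)

    def next_replica(self) -> str:
        # Try each replica at most once per call before giving up.
        for _ in range(len(self._replicas)):
            candidate = next(self._ring)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy replicas available")


router = ReadRouter(["replica-a", "replica-b", "replica-c"])
first_four = [router.next_replica() for _ in range(4)]
router.mark_down("replica-b")        # simulate a node failure
after_failure = router.next_replica()  # replica-b is skipped
```

The same skip-on-failure logic is what lets reads continue when one copy goes down, at the cost of more load on the remaining replicas.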

Backup, recovery, and business continuity

Data redundancy is a cornerstone of disaster recovery and business continuity planning. By keeping copies of data, organizations help prevent catastrophic data loss and recover more quickly from incidents. Backups are a basic form of redundancy: data snapshots are stored, often compressed, so they can be restored if the primary data is lost.

In fact, redundant storage often complements regular backups, providing an extra layer of protection. Should a database get corrupted or a data center experience a disaster, a redundant copy, such as an off-site backup or a replica in another region, can be activated to restore service and data. This capability reduces downtime in a crisis.

For example, when Hurricane Sandy struck New York in 2012 and knocked out power and infrastructure, systems with redundant replicas in unaffected regions continued operating without missing a beat. In that case, a company’s secondary data copies allowed it to keep serving customers despite the primary site’s outage. By replicating databases across geographically distributed servers, organizations keep regional disasters from wiping out their data or halting their operations.

In sum, maintaining redundant data, whether through live replication or scheduled backups, is important for quick recovery from failures. It supports business continuity, meaning the business keeps running with minimal interruption even if something goes wrong in one location.

Improved data integrity and reliability

Another benefit of data redundancy is improved data integrity and reliability. Having multiple copies provides a form of cross-verification: One copy can be checked against another for accuracy and completeness. Organizations use redundant data to double-check and confirm that information is correct and intact. If one dataset becomes corrupted or incomplete, a redundant copy serves as the reference to repair the errors. In this way, redundancy acts like an insurance policy for data quality.

For example, financial institutions often keep redundant transaction logs so that if one record is suspect, it is validated against the duplicate in another system. Redundancy helps catch and correct inconsistencies, making the overall data more trustworthy. In a database design geared for high reliability, critical records might be stored twice on different media; the system compares the two to detect any bit rot or corruption. Complete and consistent data is especially important when interacting with customers or complying with regulations. By using redundant copies as a safeguard, companies have greater confidence in their data’s integrity.

In short, intentional redundancy bolsters reliability. It means that even if one instance of the data has an issue, there’s another intact version available to preserve continuity.

Improved data security and loss protection

Maintaining redundant data improves data security and loss protection in several ways. First, it protects against accidental or malicious data loss: If a ransomware attack encrypts or destroys your primary data, an isolated redundant copy saves the day. Storing the same information in multiple places means a breach or corruption in one repository doesn’t necessarily compromise the others.

For instance, an attacker might delete records in a database, but a separate backup copy could be untouched and ready to restore. Redundancy also mitigates the risk of user error. If someone mistakenly deletes or alters data, a redundant version helps recover it.

Additionally, data redundancy aids in meeting compliance and governance requirements. Many regulations mandate protections against data loss; redundant storage is a technique to satisfy those rules. By maintaining multiple, secure copies, potentially with one set offline or in a secure cloud, businesses add layers of defense against losing data integrity. The redundant copies become part of an overall security strategy, so one failure or breach doesn’t result in irreparable damage. In summary, redundancy makes data more secure by providing backups in case of attacks, so information isn’t solely dependent on one vulnerable store.

Regulatory compliance and data localization

In an increasingly regulated data environment, data redundancy assists with compliance and localization needs as well. Global organizations often face laws that require certain data to be stored within specific regions or to keep tamper-proof archives for long periods. By replicating data and maintaining multiple controlled copies, companies more easily segregate and manage data according to regional rules.

For example, an enterprise might keep one redundant database copy in the EU to help meet GDPR obligations around where EU personal data is stored and transferred, while another copy is stored in the U.S. for local access. Advanced replication techniques even allow filtering of certain fields during duplication, meaning one redundant dataset omits or anonymizes personal data to comply with privacy laws. In essence, redundancy paired with smart replication creates a localized data store that meets local regulations without affecting the primary operational data.

Moreover, regulators often demand robust data protection and disaster recovery plans. Demonstrating that you have redundant backups and failover sites helps fulfill those compliance mandates. By maintaining multiple copies of the core database that are filtered and controlled for regional laws, companies follow data sovereignty rules while still enjoying the resilience of redundant storage. This benefit is somewhat specialized, but in certain industries such as finance or healthcare, it’s crucial. Data redundancy not only keeps systems running, but also helps organizations adhere to legal requirements and avoid penalties by keeping data where and how it’s supposed to be.
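The field filtering mentioned above might look something like the following sketch, which drops some fields and hashes others before a record is copied to a regional replica. The field names and the `filter_for_region` function are hypothetical. Note that hashing an identifier is pseudonymization rather than true anonymization, so whether it satisfies a given law is a legal question this code does not answer.

```python
import hashlib

# Hypothetical policy: which fields to omit or pseudonymize in the regional copy.
DROP_FIELDS = {"ssn"}
PSEUDONYMIZE_FIELDS = {"email"}


def filter_for_region(record: dict) -> dict:
    """Return a filtered copy of the record; the source record is not modified."""
    filtered = {}
    for key, value in record.items():
        if key in DROP_FIELDS:
            continue  # never leaves the primary store
        if key in PSEUDONYMIZE_FIELDS:
            # One-way hash: pseudonymization, not full anonymization.
            filtered[key] = hashlib.sha256(str(value).encode("utf-8")).hexdigest()
        else:
            filtered[key] = value
    return filtered


source = {"id": 42, "email": "ana@example.com", "ssn": "000-00-0000", "country": "DE"}
regional_copy = filter_for_region(source)
```

The primary record keeps every field, while the regional copy carries only what the policy allows.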

Challenges and drawbacks of data redundancy

These benefits underscore why today’s data platforms include redundancy. But redundancy introduces some challenges, too. Despite its many advantages, data redundancy comes with tradeoffs organizations must address. Duplicating data inherently consumes more resources and is more complex. If not managed properly, redundancy may even undermine the data it’s meant to safeguard. Here are the drawbacks of data redundancy and why too much of a good thing becomes a problem if left unchecked.

Data inconsistency risks

The biggest problem with redundant data is the risk of inconsistency. This happens when copies of the same data no longer match, such as when one database copy has an updated value while another still has the old value. When the same piece of information exists in multiple places, it’s possible for them to diverge unless all copies are updated in sync. Inconsistent data is dangerous: Reports may conflict, users might get different answers depending on which system they query, and it erodes overall trust in data. This often occurs with unintentional redundancy, such as when two departments separately update a customer’s contact info in their own systems, resulting in discrepant records.

Data inconsistency leads to confusion and mistakes in decision-making, because it’s unclear which copy is correct. It can also violate regulatory requirements if, say, a customer requests their data be updated or deleted, and one of the redundant stores is overlooked. Keeping redundant data sets consistent adds complexity by requiring processes or synchronization mechanisms. If updates aren’t propagated to every copy, redundant architecture becomes a liability rather than a benefit. So you must manage and audit redundant data to prevent the inconsistencies that controlled replication is meant to avoid.

Propagation of data corruption or errors

Redundancy sometimes spreads problems as much as it spreads protection. If not well-designed, an error or corruption in one copy of the data propagates to others. For example, in data storage systems, if corrupted data is replicated before the corruption is detected, the backup copy will be corrupted as well.

Similarly, if a user accidentally enters invalid data and that record is promptly replicated, now all copies share the bad data. Duplicate data fields increase the chance of data corruption, meaning damage or errors in data due to issues in reading/writing or processing. In a redundant setup, a corrupted file on one server might still be unreadable on the mirror server if the corruption isn’t isolated.

There’s also the challenge of stale data; if one copy stops updating, perhaps due to a broken sync process, while others continue to change, that outdated copy becomes unreliable. The next time someone uses it, it may cause errors or incorrect operations.

In short, redundancy multiplies the instances of data, which multiplies the instances of any given flaw unless safeguards are in place. Techniques such as hashing, checksums, or verification between copies help find corruption in one location before it taints all replicas. Without such measures, redundant storage might give a false sense of security: You think you have a backup, but in reality, you have multiple copies of the same bad data. Managing redundancy means also managing the quality of each copy so an issue in one doesn’t infect all others.
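As a minimal sketch of the checksum idea, the following compares each copy against a digest recorded when the data was known to be good. It uses SHA-256 from Python's standard `hashlib`; the copy names and the `find_divergent_copies` helper are illustrative assumptions, and real systems typically checksum per block or per record rather than whole objects.

```python
import hashlib


def checksum(data: bytes) -> str:
    """SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def find_divergent_copies(copies: dict[str, bytes], expected: str) -> list[str]:
    """Names of copies whose digest differs from the known-good digest."""
    return sorted(name for name, data in copies.items() if checksum(data) != expected)


original = b"customer=1001;balance=250.00"
known_good = checksum(original)  # recorded at write time

copies = {
    "primary": original,
    "mirror": original,
    "offsite": b"customer=1001;balance=250.0\x00",  # simulated corruption
}
damaged = find_divergent_copies(copies, known_good)
```

Comparing against a digest taken at write time, rather than only comparing copies to each other, matters: if corruption has already been replicated everywhere, the copies would agree with each other and still be wrong.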

Higher storage and infrastructure costs

By definition, keeping copies of data uses more storage space and hardware resources, which costs money. If you maintain three copies of a database, you’ll need roughly three times the data storage capacity, as well as possibly three times the memory and processing power for servers hosting those copies. Beyond raw storage costs, redundant systems also incur costs in network bandwidth to transmit data to multiple locations, power and cooling for additional hardware, and software licenses or cloud fees for redundant environments. Small companies might struggle with these expenses if they try to replicate everything.

There’s also an efficiency cost: A larger database with redundant entries or extra tables becomes more cumbersome to manage. It may increase database size and complexity, leading to longer backup times and slower query performance.

For example, if a dataset is duplicated within one database or the data is denormalized, the database may become bloated and degrade in speed over time. So there is a cost-benefit trade-off at play: More redundancy is safer, but at a literal cost in dollars and potential performance drag. Companies must weigh how many copies are needed to meet their reliability goals without over-provisioning storage. Today’s cloud storage helps save money, but even cloud costs add up. Ultimately, data redundancy is not free: You’re paying for peace of mind in extra disks, servers, or cloud instances, so it needs to deliver corresponding value.

Maintenance and complexity overhead

Along with cost, operational complexity is another drawback of widespread data redundancy. Maintaining multiple copies of data means more work for IT teams: more systems to administer, monitor, back up, and secure. Every time data is updated or a schema changes, those changes need to be applied across several environments. This increases the administrative burden and the chance of mistakes.

For instance, backing up a redundant cluster is itself complex. Do you back up each replica or just one? How do you ensure all redundant sites are in sync after a recovery? Coordination is key, and that often requires advanced software or diligent procedures. Maintaining multiple copies of data grows expensive and creates server bloat. Beyond that, any data transfer takes time, and replicating data takes processing power, which introduces new performance tuning challenges. In other words, the act of keeping data redundant slows things down if not optimized.

There is also the complexity of conflict resolution in certain redundant setups, such as multi-master databases. If two copies are updated independently, the system must reconcile differences, which adds to system design complexity. All these factors mean that using data redundancy effectively requires management. Companies need to invest in robust replication tools, monitoring systems to ensure all copies are healthy, and processes for regular testing of failovers. Without those, the redundancy layer itself becomes a source of errors or outages, such as if a failover mechanism misbehaves.

Therefore, organizations should be aware that redundancy isn’t a “set and forget” solution – it introduces a layer of complexity that must be actively managed. The goal is to make the system more robust, but that comes at the price of planning and maintenance.
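To make the conflict-resolution point concrete, here is a sketch of one simple strategy, last-write-wins, for reconciling two copies that were updated independently. It is only one of several approaches and is not presented as what any particular database does. It assumes writer clocks are reasonably synchronized, and it silently discards the losing update, which is exactly the kind of trade-off that adds design complexity. The `Versioned` type and `resolve` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Versioned:
    value: str
    timestamp: float  # seconds since epoch, from the writer's clock (assumed in sync)
    node_id: str      # deterministic tie-breaker when timestamps are equal


def resolve(a: Versioned, b: Versioned) -> Versioned:
    """Last-write-wins: keep the newer version; break ties by node_id."""
    return max(a, b, key=lambda v: (v.timestamp, v.node_id))


east = Versioned("alice@old.example", timestamp=1700000000.0, node_id="east")
west = Versioned("alice@new.example", timestamp=1700000005.0, node_id="west")
winner = resolve(east, west)
```

Because the rule is deterministic, every node that sees both versions converges on the same winner regardless of the order in which it received them.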

Implementing data redundancy effectively

To maximize the benefits of data redundancy while minimizing drawbacks, organizations should approach redundancy strategically. It’s not just about making copies of everything everywhere; it’s about planning, the right technologies, and ongoing management. Here are some best practices and considerations for implementing data redundancy in an effective, business-aligned way:

Design for redundancy, but avoid unnecessary duplication

Identify which data is mission-critical and warrants multiple copies. Use proper data modeling, such as database normalization, to eliminate unintentional duplicate data so only intentional redundancy remains. In other words, keep one source of truth for each piece of information and add redundancy on top of that, rather than having ad hoc duplicates floating around.

Eliminate single points of failure with geographic spread

Store your redundant copies in independent locations or systems. For resilience, that means different servers, racks, or even different data centers and regions. A rule of thumb is that if your data doesn’t exist in at least three places, it doesn’t really exist. Critical services survive catastrophes only by deploying on multiple sites hundreds of miles apart. Geographic redundancy, also known as geo-replication, protects you from localized disasters and offers better continuity.

Balance redundancy level with cost and needs

Plan your redundancy strategy based on business requirements and risk tolerance. There are tradeoffs between higher availability and higher costs. For example, keeping two copies might achieve your uptime goals; a third copy might add marginal benefit with more expense. Decide on the replication factor (the number of copies) and the backup frequency by considering how much data loss is acceptable (the recovery point objective, or RPO) and how quickly you need to recover (the recovery time objective, or RTO). Invest in redundancy where it counts most. Use cheaper solutions, such as cold storage backups for less important data, to reduce costs.
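One way to frame this trade-off is to check candidate plans against the RPO and RTO and compare their storage footprint. The sketch below does that with made-up numbers; the `Plan` fields, the plan names, and the figures are all assumptions for illustration, and it treats the interval between backups or replication cycles as the worst-case data loss window.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    name: str
    copies: int                 # total copies kept, including the primary
    sync_interval_min: float    # worst-case window of lost data
    est_recovery_min: float     # estimated time to restore service


def evaluate(plan: Plan, dataset_gb: float, rpo_min: float, rto_min: float) -> dict:
    """Storage footprint plus whether the plan fits the RPO and RTO."""
    return {
        "storage_gb": plan.copies * dataset_gb,
        "meets_rpo": plan.sync_interval_min <= rpo_min,
        "meets_rto": plan.est_recovery_min <= rto_min,
    }


nightly_backup = Plan("nightly backup", copies=2, sync_interval_min=1440, est_recovery_min=240)
live_replicas = Plan("live replicas", copies=3, sync_interval_min=1, est_recovery_min=5)

# Hypothetical targets: lose at most 60 minutes of data, recover within 8 hours.
nightly_result = evaluate(nightly_backup, dataset_gb=500, rpo_min=60, rto_min=480)
replica_result = evaluate(live_replicas, dataset_gb=500, rpo_min=60, rto_min=480)
```

With these numbers the nightly backup is cheaper but misses the RPO, while the live replicas meet both targets at 1.5 times the storage.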

Use reliable replication and sync technologies

Use current data replication tools or database features to keep redundant data synchronized in real time or near-real time. The goal is to automate consistency so users always get up-to-date information from any copy. Depending on your scenario, choose between synchronous replication, which applies each update to the replicas before the write is acknowledged and so gives strong consistency, and asynchronous replication, which acknowledges the write first and lets replicas lag slightly but is more efficient. Many enterprise databases and storage systems have built-in support for replication, snapshots, or mirroring. Use these tools rather than trying to do it yourself.
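The difference between the two modes can be shown with a toy in-memory model. This is purely illustrative and, as the paragraph above says, not something to build yourself for production; the `Primary` and `Replica` classes are hypothetical, and returning from `write` stands in for acknowledging the write to the client.

```python
from collections import deque


class Replica:
    def __init__(self) -> None:
        self.data: dict[str, str] = {}

    def apply(self, key: str, value: str) -> None:
        self.data[key] = value


class Primary:
    def __init__(self, replicas: list[Replica], synchronous: bool) -> None:
        self.data: dict[str, str] = {}
        self.replicas = replicas
        self.synchronous = synchronous
        self.pending: deque[tuple[str, str]] = deque()

    def write(self, key: str, value: str) -> None:
        """Returning from this method represents acknowledging the write."""
        self.data[key] = value
        if self.synchronous:
            # Every replica has the update before the client is told "done".
            for replica in self.replicas:
                replica.apply(key, value)
        else:
            # Acknowledge now and ship the update later.
            self.pending.append((key, value))

    def flush(self) -> None:
        """Deliver queued updates (a background task in a real system)."""
        while self.pending:
            key, value = self.pending.popleft()
            for replica in self.replicas:
                replica.apply(key, value)


sync_replica, async_replica = Replica(), Replica()
Primary([sync_replica], synchronous=True).write("k", "v1")
async_primary = Primary([async_replica], synchronous=False)
async_primary.write("k", "v1")
lagging_view = dict(async_replica.data)  # replica has not seen the write yet
async_primary.flush()
```

In the asynchronous case, a primary failure between `write` and `flush` would lose an acknowledged update, which is the window the RPO has to account for.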

Regularly test backups and failover processes

Redundancy is only as good as your ability to use it in a crisis. Perform regular data backups, even of redundant systems, and test restoring them to verify your backups aren’t corrupted. Likewise, if you have a failover cluster or secondary site, conduct periodic drills to simulate failures and check that automatic failover works as intended. This includes verifying that all your redundant copies actually take over service within the required time. Testing prevents surprises during a real incident and helps fine-tune your procedures.

Monitor and refine your redundancy setup

Treat redundancy as an evolving strategy. Monitor the health and performance of all data copies continuously. Set up alerts for lagging replication, data discrepancies, or any failed backup jobs. Over time, analyze whether certain data might be over-replicated or under-protected and adjust accordingly.

For instance, you might find that a rarely used dataset doesn’t need hourly replication and could be moved to daily backups, while a customer-facing database might need an additional live replica to handle growing traffic. Database management system policies, such as a master data management approach, help ensure that when changes happen, they propagate to all the right places. The key is to actively manage redundancy rather than “set it and forget it,” tuning the level of redundancy to what best supports your business goals.
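An alert for lagging replication can be as simple as comparing each replica's last applied position with the primary's. The sketch below assumes every copy exposes a monotonically increasing sequence number, which is a simplification; the replica names, the threshold, and the `lagging_replicas` function are hypothetical.

```python
def lagging_replicas(primary_seq: int, replica_seqs: dict[str, int], max_lag: int) -> list[str]:
    """Names of replicas more than max_lag updates behind the primary."""
    return sorted(
        name for name, seq in replica_seqs.items()
        if primary_seq - seq > max_lag
    )


# Hypothetical snapshot of replication positions.
positions = {"replica-a": 10_000, "replica-b": 9_990, "replica-c": 8_500}
needs_attention = lagging_replicas(primary_seq=10_000, replica_seqs=positions, max_lag=100)
```

A monitoring job would run a check like this on a schedule and raise an alert when the returned list is not empty.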

By following these practices, organizations harness the power of data redundancy in a cost-effective, controlled manner. Effective redundancy implementation means you get the uptime, speed, and peace of mind benefits without drowning in costs or complexity. It’s about smart redundancy: Making just enough copies, in the right places, updated at the right frequency, to meet your needs for resilience.

Aerospike and data redundancy

Data redundancy is a cornerstone of resilient data architecture. In summary, maintaining redundant copies of data, from duplicate servers in a cluster to backups across the globe, is what helps today’s businesses stay always-on and safeguard their information. By duplicating important data and systems, companies keep any one failure from taking them down. The key is managing redundancy wisely so it’s an asset, not a liability. Done right, data redundancy improves uptime, performance, and trust in your data, all of which are essential for modern digital services.

Aerospike is a real-time database platform known for its high performance and reliability. Under the hood, it uses data redundancy as a design principle. Aerospike’s architecture is built to eliminate single points of failure. It replicates data across multiple nodes in a cluster, using a configurable replication factor, so even if one node fails unexpectedly, the data remains available on other nodes. In fact, Aerospike uses synchronous replication, meaning a piece of data is written to multiple storage nodes before the operation is considered complete. This redundancy strategy means a sudden hardware failure won’t lose any acknowledged writes.

The platform also supports multi-site clustering, which deploys an Aerospike database across geographically dispersed data centers; data is continuously replicated across sites for an extra layer of protection against regional outages. By building these capabilities in, Aerospike delivers the benefits of data redundancy, such as high availability, durability, and fast access, without burdening the user with complexity: The database handles it behind the scenes. In other words, Aerospike uses redundancy to offer a fault-tolerant, lightning-fast data foundation for applications that can’t afford to go down.

If your organization is looking to improve reliability and scale in your data infrastructure, consider how Aerospike’s approach to data redundancy could help. Aerospike’s real-time data platform provides the redundancy, performance, and global replication features needed to keep your services running 24x7 and your data safe, all while managing explosive growth in data volume.