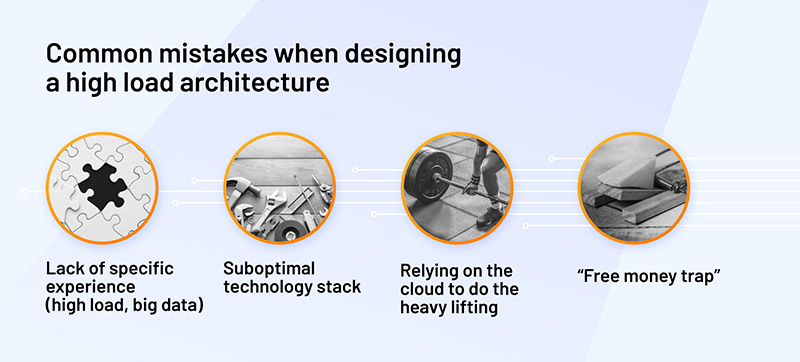

In part one of this blog series, Vova Kyrychenko, CTO & Co-founder of Xenoss, discussed the best ways to make your database infrastructure more cost-efficient. Now, let’s look at the common database architecture mistakes that lead organizations to mismanage their infrastructure costs. We see four errors most often: a lack of high-load/big-data experience, a suboptimal technology stack, relying too heavily on the cloud, and what we call the “free-money trap.”

Lack of high-load/big-data experience

Scalable systems require choosing the right approach to heavy computational tasks and big data early in the development cycle. Engineers without prior high-load experience lack the knowledge needed to design such systems, predict how the database will need to scale, and plan performance optimizations.

Learning new things always involves mistakes, and those mistakes will cost the business. To avoid such costly experiments, many early-stage startups prefer to invest in a managed service that handles most database architecture problems for them. This strategy lowers the initial cost of operation but can hurt the company in the long run.

Suboptimal technology stack

As your data volumes increase, you have to optimize your database architecture to maintain the same product performance. If you stay with a managed service provider, that will likely require a bigger budget.

With managed cloud services, the more data you process, the more machines are plugged into your operation. The load is distributed across a larger number of machines, but you pay for each one you add, with no option to configure or optimize the underlying data infrastructure.

In contrast, self-serve solutions paired with DevOps expertise can yield faster data processing speeds, better database performance, and substantial cost savings compared to managed services.

Relying on the cloud to do the heavy lifting

If you’re designing the database architecture for a relatively low-load project, a managed cloud database can be helpful: it speeds up data discovery and ETL jobs (extracting, transforming, and loading data) at a low cost.

However, once your platform scales and faces heavier data loads, managed services can become a considerable expense, because most providers charge per request. Managed cloud services also lack controls for optimizing how data is queried.
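A minimal sketch can show why per-request billing becomes painful at scale. The Python below compares a bill that grows linearly with request volume against a flat self-managed budget. Every price in it is a hypothetical round number chosen for illustration, not any provider’s actual rate, and treating the self-managed cost as flat is a simplifying assumption.

```python
# Minimal cost-comparison sketch. All prices are HYPOTHETICAL illustration
# values, not real provider pricing.

SECONDS_PER_MONTH = 30 * 24 * 3600  # 2,592,000 seconds in a 30-day month


def managed_monthly_cost(requests_per_second: float,
                         price_per_million: float) -> float:
    """Monthly bill when the provider charges per request."""
    monthly_requests = requests_per_second * SECONDS_PER_MONTH
    return monthly_requests / 1_000_000 * price_per_million


def break_even_rps(self_managed_monthly: float,
                   price_per_million: float) -> float:
    """Request rate at which per-request billing equals a flat monthly cost."""
    cost_per_rps = SECONDS_PER_MONTH / 1_000_000 * price_per_million
    return self_managed_monthly / cost_per_rps


if __name__ == "__main__":
    PRICE_PER_MILLION = 1.00       # hypothetical $ per million requests
    SELF_MANAGED_MONTHLY = 50_000  # hypothetical flat $/month (servers + DevOps)

    for rps in (1_000, 10_000, 100_000):
        bill = managed_monthly_cost(rps, PRICE_PER_MILLION)
        print(f"{rps:>7,} req/s -> ${bill:,.0f}/month managed "
              f"vs ${SELF_MANAGED_MONTHLY:,}/month self-managed")

    rps_even = break_even_rps(SELF_MANAGED_MONTHLY, PRICE_PER_MILLION)
    print(f"Break-even at about {rps_even:,.0f} req/s")
```

With these made-up numbers, going from 1,000 to 100,000 requests per second takes the per-request bill from about $2,600 to about $259,000 a month, while the assumed self-managed budget stays put. In practice, self-managed costs also rise, but in steps as you add servers rather than with every request.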

Popular managed cloud solutions do not support the indexes traditionally used to optimize database performance. For instance, BigQuery doesn’t offer traditional secondary indexes, and Redshift has no indexes at all.

In tech-based businesses, “buying” tech is rarely the best strategy. You buy into an ecosystem that charges you more as you scale, and you miss the opportunity to build in-house expertise and make your platform more flexible.

Free-money trap

Promising startups can get infrastructure credits from cloud providers and, as a result, treat infrastructure costs as if they were free.

If a startup doesn’t invest in optimization and cost-effective infrastructure, then once the cloud credits run out, operational costs can become unbearable and make it hard for the company to stay afloat.
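To make the credit cliff concrete, here is a hypothetical runway calculation: given a credit balance, a starting monthly cloud bill, and an assumed monthly growth rate, it finds how many months the credits fully cover and what the monthly bill has grown to by then. The figures in the example are illustrative assumptions, not data from any real startup.

```python
# Hypothetical sketch: how long do cloud credits last when spend keeps growing?
# The credit amount, starting bill, and growth rate are illustrative assumptions.


def credit_runway(credits: float, monthly_spend: float,
                  monthly_growth: float,
                  max_months: int = 120) -> tuple[int, float]:
    """Return (months fully covered by credits, bill for the first uncovered month).

    `monthly_growth` is a fraction: 0.15 means the bill grows 15% per month.
    """
    balance = credits
    spend = monthly_spend
    for month in range(max_months):
        if spend > balance:
            # Credits can no longer pay a full month: this bill hits real cash.
            return month, spend
        balance -= spend
        spend *= 1 + monthly_growth
    # Credits outlasted the forecast window.
    return max_months, spend


if __name__ == "__main__":
    months, bill = credit_runway(credits=100_000, monthly_spend=5_000,
                                 monthly_growth=0.15)
    print(f"Credits cover {months} months; "
          f"first uncovered bill: ${bill:,.0f}/month")
```

With $100,000 in credits, a $5,000 starting bill, and 15% monthly growth, the credits cover nine full months, and the first bill they can’t cover is roughly $17,600, more than three times the starting spend.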

Cloud services have an attractive “starter” price tag, but they can get expensive once you deal with big data volumes.

Startups should treat infrastructure credits as real money and spend them carefully. When scaling, the product management team should routinely ask: which operational costs depend on the scale of the business? If the business grows 100-fold, are you prepared for operating expenses to grow accordingly?
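One way to answer that question is to tag each cost line with how it scales and project it forward. The sketch below models each line as `cost × growth ** exponent`, where an exponent of 0 means the cost is fixed and 1 means it grows in lockstep with the business. The cost lines, amounts, and exponents are all hypothetical; in particular, the sublinear 0.5 for the self-managed cluster is an illustrative assumption, not a measured figure.

```python
# Hypothetical sketch: project which operating costs scale with the business.
# Cost lines, amounts, and scaling exponents are illustrative assumptions.
from dataclasses import dataclass


@dataclass
class CostLine:
    name: str
    monthly_cost: float    # current monthly cost in dollars
    scale_exponent: float  # 0 = fixed, 1 = grows linearly with business scale


def forecast(lines: list[CostLine], growth_factor: float) -> dict[str, float]:
    """Project each line's monthly cost after the business grows by growth_factor."""
    return {line.name: line.monthly_cost * growth_factor ** line.scale_exponent
            for line in lines}


if __name__ == "__main__":
    lines = [
        CostLine("managed database (per-request billing)", 3_000, 1.0),
        CostLine("self-managed cluster (hardware + DevOps)", 20_000, 0.5),  # assumed sublinear
        CostLine("monitoring and tooling", 1_000, 0.0),
    ]
    projected = forecast(lines, growth_factor=100)
    for name, cost in projected.items():
        print(f"{name}: ${cost:,.0f}/month at 100x")
    today = sum(line.monthly_cost for line in lines)
    print(f"Total: ${sum(projected.values()):,.0f}/month (today: ${today:,.0f})")
```

Under these assumptions, a 100x business grows the per-request line from $3,000 to $300,000 a month, while the self-managed cluster grows from $20,000 to $200,000 and the fixed tooling line doesn’t move. The value of the exercise lies less in the exact numbers than in knowing which bucket each cost falls into before you scale.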

Key Takeaways

If you use a managed database service like BigQuery, your bill can quickly jump to hundreds of thousands or even millions of dollars once your requests grow from thousands per second to hundreds of thousands per second.

If your technology handles high traffic loads or computation-intensive processes, you should think about your database architecture design early on, or you’ll pay the price later. Always try to forecast your spending and prepare for growth.

This article was written by guest author Vova Kyrychenko, CTO & Co-founder of Xenoss, and is part 2 of a 3-part series. Read part 1, “Optimizing your database infrastructure costs,” and watch the full “Architecting for Scale and Success” webinar with Aerospike and Xenoss to hear more about their use case.
