Programmatic advertising data flow: How each platform powers smarter RTB

Learn how optimized data pipelines across ad servers, DMPs, exchanges, and attribution platforms drive precise targeting, faster RTB decisions, and higher ROI for advertisers and publishers.

Developers drive the effectiveness of real-time bidding (RTB) by ensuring data flows seamlessly across every component of the AdTech stack. RTB engines match ads to impressions in milliseconds, but it’s the real-time movement of data through ad servers, exchanges, DMPs, and other platforms that determines how precisely advertisers reach their audiences and how efficiently publishers monetize their inventory. When you architect AdTech systems with optimized data pipelines, you enable rapid decision-making, accurate targeting, and scalable performance, all of which translate directly to measurable results for advertisers and publishers.

Data: The fuel for modern AdTech

RTB has evolved. Advertisers no longer buy impressions at face value; they buy access to audiences, insights, and measurable outcomes. Every millisecond, data moves between platforms, shaping who sees which ad, at what time, and in what context. The faster and more accurately this data flows, the more precise and effective the entire programmatic stack becomes.

Why data flow matters

Think of RTB as a high-speed train. The engine (RTB logic) drives the process, but without the right fuel (data), it can’t reach its destination efficiently. Data powers targeting through complex algorithms that analyze user behavior, demographics, and contextual signals. This intricate process relies heavily on real-time database lookups, machine learning models, and low-latency API interactions. Ensuring this data is available instantaneously necessitates a scalable NoSQL database capable of handling high-throughput transactions. When data flows seamlessly, campaigns become more relevant, efficient, and profitable. When it doesn’t, advertisers waste spend, publishers lose revenue, and users see irrelevant ads. A study by the Association of National Advertisers (ANA) estimates that approximately $22 billion is wasted each year in the open web programmatic market due to inefficiencies and errors.

Fast and accurate data flow enables:

Better targeting leads to better outcomes. Data enrichment from a variety of sources (first-, second-, third-party, and algorithmic) allows for better matching of consumer, product, placement, and context. That produces better outcomes, such as higher return on ad spend (ROAS) for advertisers and higher returns for publishers, who can sell better-matched inventory.

Better margins for value-added supply chains. Better matching allows better bids, creating more margin for sellers. Better outcomes justify the cost of added data from data management platforms (DMPs), customer data platforms (CDPs), and other data vendors. Strong returns also help brand safety, viewability, fraud prevention, and attribution platforms retain and grow their contracts.

More efficient operations. Automating decision-making saves time and frees up publishers, brands, and agencies to focus on core business processes instead of optimizing and buying. Indirectly, this improves future advertising, as brands improve products and publishers improve content.

Continuous improvement and optimization in real time. Brands can reallocate budgets, change their targeting approach, and even use advanced AI to generate entirely new or modified creative automatically and in real time to avoid wasted budget or pauses in delivery.

Better ad experience for users. Research shows that users actually appreciate ads that matter to them and are personalized to their needs. Real-time targeting and optimization allow them to see more relevant ads that load faster, lowering ad fatigue. Publishers can serve more ads and make more money.

The core components: Which does what?

Let’s trace data's journey through the programmatic ecosystem, highlighting why each platform matters and how they all work together.

Ad servers: the delivery engine

Ad servers are the backbone of digital ad delivery. They determine which ads appear to which users, serve the creative, and meticulously track performance metrics such as impressions and clicks. Beyond simple delivery, ad servers provide real-time reporting and analytics, allowing advertisers to monitor campaigns as they happen and optimize on the fly.

Accurate tracking and reporting are critical. Ad servers integrate with other platforms, like DSPs, SSPs, and attribution tools, to ensure that every impression and click is logged and attributed correctly. This integration provides the insight and control needed for real-time campaign adjustments, helping advertisers guide prospects from consideration to conversion. Ad servers typically use key-value stores, such as Aerospike, for quick retrieval of ad creatives.

DMPs and CDPs: The audience architects

DMPs and CDPs collect and organize vast datasets. DMPs primarily aggregate third-party data and build anonymous audience segments for targeting, while CDPs unify first-party, second-party, and third-party data to create rich, persistent user profiles. DMPs typically use batch processing to update audience segments, while CDPs rely on real-time streaming data and event-driven architectures. Both enable more advanced segmentation and personalization across channels and devices.

Advertisers need to move beyond broad awareness and start personalizing messages. DMPs enable precise audience targeting and look-alike modeling, while CDPs help unify user profiles for more relevant, cross-channel engagement. This ensures that the right message reaches the right user at the right time, increasing the likelihood of moving prospects closer to conversion.

Ad exchanges: The marketplace

Ad exchanges are the marketplaces where real-time auctions happen, connecting publishers (sellers) and advertisers (buyers). They facilitate automated, instantaneous transactions between supply-side platforms (SSPs) and demand-side platforms (DSPs), determining which ad is shown to which user in real time. Billions of bid requests flow through exchanges daily, each carrying contextual and audience data that DSPs use to decide whether, and how much, to bid. DSPs submit bid responses in JSON format, adhering to the OpenRTB specification, including bid prices, creative URLs, and targeting parameters.

Advertisers rely on exchanges to access a wide range of placements and audiences, ensuring their consideration-driving messages appear across relevant sites and apps. Exchanges are high-throughput, low-latency systems that provide the scale and diversity of inventory essential for successful campaigns. They must process bid requests in under 100 milliseconds, route data to the right buyers, and enforce rules for transparency and fraud prevention. Building a robust exchange means optimizing for speed, reliability, and security—every millisecond counts.
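To make the bid response concrete, here is a minimal Python sketch of the kind of JSON a DSP might return. The field names (id, cur, seatbid, bid, impid, price, adm, crid, adomain) follow OpenRTB 2.x naming, but the helper name build_bid_response and every value shown are illustrative assumptions, not any platform's actual payload.

```python
import json


def build_bid_response(request_id: str, imp_id: str, price_cpm: float,
                       creative_markup: str, creative_id: str,
                       advertiser_domain: str, seat: str) -> dict:
    """Assemble a minimal OpenRTB 2.x-style bid response as a plain dict."""
    return {
        "id": request_id,              # echoes the bid request ID
        "cur": "USD",                  # assumed bid currency
        "seatbid": [{
            "seat": seat,              # buyer seat placing the bid
            "bid": [{
                "id": f"{request_id}-bid-1",
                "impid": imp_id,       # impression this bid applies to
                "price": round(price_cpm, 4),
                "adm": creative_markup,
                "crid": creative_id,
                "adomain": [advertiser_domain],
            }],
        }],
    }


# Hypothetical values, for illustration only.
response = build_bid_response(
    request_id="req-123", imp_id="1", price_cpm=2.5,
    creative_markup="<img src='https://cdn.example.com/creative.png'>",
    creative_id="creative-789", advertiser_domain="example.com",
    seat="seat-42",
)
payload = json.dumps(response, separators=(",", ":"))  # compact wire format
```

Serializing without extra whitespace keeps the payload small, which matters when the same structure is sent billions of times a day.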

Attribution platforms: The scorekeepers

Attribution platforms track conversions and assign credit to the right touchpoints. They support various models, such as last click, first click, linear, or rule-based, to help marketers understand which interactions drive results. Did a user convert after seeing a display ad, clicking a search result, or opening an email? Accurate attribution lets marketers optimize spend and creative, rewarding the channels that drive results. For developers, integrating attribution data back into the DMP or CDP closes the feedback loop for smarter targeting.

Optimizing spend and creative strategy depends on knowing which channels, creatives, or segments are most effective. Modern attribution platforms use sophisticated models, such as linear, time decay, or algorithmic, to assign value across the user journey. Developers must ensure data flows freely between attribution systems and the rest of the stack, enabling real-time optimization and reporting.
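The difference between these models is easiest to see in code. The sketch below implements last-click, linear, and time-decay credit assignment over a list of touchpoints. The function names, the (channel, timestamp) tuple shape, and the seven-day half-life are assumptions chosen for illustration; production attribution platforms use their own schemas and tuning.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, Tuple

Touchpoint = Tuple[str, datetime]  # (channel, time of interaction)


def last_click(touchpoints: List[Touchpoint]) -> Dict[str, float]:
    """Give all credit to the most recent touchpoint."""
    channel, _ = max(touchpoints, key=lambda tp: tp[1])
    return {channel: 1.0}


def linear(touchpoints: List[Touchpoint]) -> Dict[str, float]:
    """Split credit evenly across every touchpoint."""
    credit: Dict[str, float] = defaultdict(float)
    for channel, _ in touchpoints:
        credit[channel] += 1.0 / len(touchpoints)
    return dict(credit)


def time_decay(touchpoints: List[Touchpoint], conversion_time: datetime,
               half_life_days: float = 7.0) -> Dict[str, float]:
    """Weight each touchpoint by 0.5 ** (age / half-life), then normalize to 1."""
    weights: Dict[str, float] = defaultdict(float)
    for channel, seen_at in touchpoints:
        age_days = (conversion_time - seen_at).total_seconds() / 86400.0
        weights[channel] += 0.5 ** (age_days / half_life_days)
    total = sum(weights.values())
    return {channel: weight / total for channel, weight in weights.items()}


# Hypothetical journey: display ad, then search click, then email on conversion day.
converted_at = datetime(2024, 1, 15)
journey = [
    ("display", datetime(2024, 1, 1)),
    ("search", datetime(2024, 1, 8)),
    ("email", converted_at),
]
credit_by_model = {
    "last_click": last_click(journey),
    "linear": linear(journey),
    "time_decay": time_decay(journey, converted_at),
}
```

With a seven-day half-life, the touchpoints in this journey are 14, 7, and 0 days old, so their raw weights are 0.25, 0.5, and 1 before normalization. Last click would hand the email all of the credit, while time decay still rewards the earlier display and search interactions.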

Data vendors: The signal boosters

Data vendors supply specialized datasets, such as geolocation, contextual page-level insights, purchase intent, and more. These enrichments can be layered onto bid requests to enhance targeting precision. Location data pinpoints users in specific places; contextual data analyzes page content in real time; purchase intent signals reveal who’s ready to buy.

Campaigns benefit from deeper targeting. For example, advertisers can use IP geolocation data provided via REST APIs to reach users near a physical store or contextual signals delivered through real-time webhooks to serve ads on relevant content. This enrichment ensures ads are delivered at the right moment and mindset, increasing engagement and driving users further down the funnel. Integrating third-party data requires careful validation and normalization. Developers must build pipelines that ingest, cleanse, and match external data to internal user profiles, all while respecting privacy and compliance requirements.
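As a rough illustration of that ingest, cleanse, and match step, the following sketch validates hypothetical vendor geolocation records and attaches the valid ones to known user profiles. The record fields (user_id, lat, lon, country) and both function names are assumptions for this example; real vendor feeds define their own schemas.

```python
from typing import Dict, List, Optional


def normalize_geo_record(raw: dict) -> Optional[dict]:
    """Return a cleaned record, or None if the record fails validation."""
    try:
        lat = float(raw["lat"])
        lon = float(raw["lon"])
    except (KeyError, TypeError, ValueError):
        return None  # missing or non-numeric coordinates
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        return None  # out-of-range (or NaN) coordinates
    user_id = str(raw.get("user_id", "")).strip().lower()
    if not user_id:
        return None
    country = str(raw.get("country", "")).strip().upper() or None
    return {"user_id": user_id, "lat": round(lat, 4),
            "lon": round(lon, 4), "country": country}


def enrich_profiles(profiles: Dict[str, dict], vendor_records: List[dict]) -> int:
    """Attach valid geo data to known profiles; return how many records matched."""
    matched = 0
    for raw in vendor_records:
        record = normalize_geo_record(raw)
        if record is None or record["user_id"] not in profiles:
            continue  # drop invalid records and unknown users
        profiles[record["user_id"]]["geo"] = {
            "lat": record["lat"], "lon": record["lon"],
            "country": record["country"],
        }
        matched += 1
    return matched
```

Rejecting bad records at the edge of the pipeline keeps malformed vendor data from ever reaching the profiles that bidding logic reads.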

Viewability and brand safety: The gatekeepers

These solutions verify that ads are seen by real people in safe, brand-appropriate environments. They provide metrics on viewability (how long ads are in view) and flag potentially unsafe or fraudulent placements. These platforms weed out fraud and ensure advertisers pay only for impressions that matter. For publishers, maintaining high viewability scores attracts premium demand.

As budgets tighten and scrutiny grows, ensuring that consideration-driving ads are actually seen, and seen in suitable contexts, becomes a differentiator. High viewability and brand safety not only protect brand reputation but also ensure that advertising investments deliver measurable impact. Developers use JavaScript tags or SDKs to measure visibility against IAB standards, employ machine learning to detect fraudulent activity, and provide real-time measurement and filtering. These integrations help maintain trust across the ecosystem.
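A viewability check can be sketched in a few lines. The example below assumes the widely used display threshold of at least 50% of pixels in view for at least one continuous second, and it assumes a client-side tag that reports periodic (timestamp, visible fraction) samples. The function name and sample format are hypothetical.

```python
from typing import List, Tuple


def is_viewable(samples: List[Tuple[int, float]],
                min_fraction: float = 0.5,
                min_duration_ms: int = 1000) -> bool:
    """Return True if the ad stayed sufficiently in view for one continuous window.

    samples: (timestamp_ms, visible_fraction) pairs sorted by timestamp.
    """
    window_start = None
    for timestamp_ms, visible_fraction in samples:
        if visible_fraction >= min_fraction:
            if window_start is None:
                window_start = timestamp_ms  # a new in-view window begins
            if timestamp_ms - window_start >= min_duration_ms:
                return True
        else:
            window_start = None  # the window is broken, so start over
    return False
```

Resetting the window whenever visibility drops below the threshold is what enforces the "continuous" part of the rule. Two separate half-second glimpses do not add up to a viewable impression.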

Visualizing the data flow: A millisecond-by-millisecond journey

To truly understand the technical demands of RTB, let's trace a typical data flow through the ecosystem. Each step is critical and must execute within strict latency constraints, typically somewhere between a few milliseconds and a few tens of milliseconds.

1. User activity and initial data collection (1-2ms): A user visits a website or mobile app. The publisher's application immediately collects client-side data:

Device: Type (mobile, desktop), operating system, screen size.

Location: Geolocation coordinates (GPS or IP-based) if permission is granted.

Browser: User agent string, supported technologies (HTML5, JavaScript).

Contextual signals: Page URL, content category, keywords extracted from page text.

Example: JavaScript code collects this information and stores it temporarily for subsequent requests.

2. SSP bid request assembly (2-5ms): The SSP aggregates the collected data and constructs a bid request, typically in JSON format, adhering to the OpenRTB specification.

User ID: Anonymous identifier for the user or session.

Device ID: Mobile advertising ID (IDFA for iOS, AAID for Android) if available.

Page URL: URL of the web page or app screen where the ad will be displayed.

Publisher ID: Identifier for the publisher or network.

Example: The SSP makes a call to an internal data service to retrieve publisher-specific data, then formats the complete bid request using the OpenRTB schema.
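A simplified version of this assembly step might look like the sketch below. The object names (imp, site, device, user, tmax) follow OpenRTB 2.x naming, but the function build_bid_request, its parameters, and the sample values are assumptions for illustration. A real request carries many more fields.

```python
import uuid
from typing import Optional


def build_bid_request(user_id: str, page_url: str, publisher_id: str,
                      user_agent: str, device_os: str,
                      ad_width: int, ad_height: int,
                      advertising_id: Optional[str] = None,
                      tmax_ms: int = 100) -> dict:
    """Assemble a minimal OpenRTB 2.x-style bid request as a plain dict."""
    device = {"ua": user_agent, "os": device_os}
    if advertising_id:
        device["ifa"] = advertising_id  # only included when an ID is available
    return {
        "id": str(uuid.uuid4()),        # unique ID for this auction
        "imp": [{"id": "1", "banner": {"w": ad_width, "h": ad_height}}],
        "site": {"page": page_url, "publisher": {"id": publisher_id}},
        "device": device,
        "user": {"id": user_id},
        "tmax": tmax_ms,                # time bidders have to respond, in ms
    }


# Hypothetical page view on a desktop browser with no advertising ID.
request = build_bid_request(
    user_id="anon-5f3a", page_url="https://news.example.com/travel",
    publisher_id="pub-42", user_agent="Mozilla/5.0", device_os="Windows",
    ad_width=300, ad_height=250,
)
```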

3. Ad exchange routing and distribution (3-7ms): The ad exchange receives the bid request and performs quick routing logic:

Recipient DSP selection: Identifies DSPs based on targeting preferences and campaign setups.

Request forwarding: Sends the bid request (via HTTP or gRPC) to the selected DSP endpoints.

Example: The exchange uses a lookup table in a highly optimized database to determine which DSPs have campaigns targeting the current user profile. This lookup must be completed within a very short time frame.

4. DSP user profile retrieval and enrichment (5-15ms): The DSP receives the request and looks up the user profile.

DMP/CDP query: Queries a DMP or CDP using the User ID.

Profile enrichment: Retrieves audience segments, behavioral data, and potentially third-party enrichments.

Example: The DSP sends an asynchronous request to its internal cache or database (like Aerospike) to fetch the user profile based on the User ID. If it’s a cache miss, it queries the primary data store.
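The cache-then-primary lookup described in this step is commonly called the cache-aside pattern. The sketch below uses plain dictionaries as stand-ins for both tiers so it stays self-contained. The class name ProfileCache and its hit and miss counters are assumptions, and a production system would add expiry, eviction, and asynchronous I/O.

```python
from typing import Dict, Optional


class ProfileCache:
    """Cache-aside reader: check the fast tier first, then fall back to the primary store."""

    def __init__(self, primary_store: Dict[str, dict]) -> None:
        self._cache: Dict[str, dict] = {}
        self._primary = primary_store   # stand-in for the primary data store
        self.hits = 0
        self.misses = 0

    def get_profile(self, user_id: str) -> Optional[dict]:
        profile = self._cache.get(user_id)
        if profile is not None:
            self.hits += 1
            return profile
        self.misses += 1
        profile = self._primary.get(user_id)  # slower lookup on a cache miss
        if profile is not None:
            self._cache[user_id] = profile    # populate the cache for next time
        return profile
```

Tracking the hit and miss counts makes it easy to monitor the hit ratio, which largely determines whether this step stays inside its latency budget.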

5. DSP bid evaluation and decision (10-25ms): The DSP’s core logic evaluates the bid opportunity.

Targeting check: Matches the user profile against campaign targeting criteria (demographics, interests, etc.).

Budget and pacing: Checks available budget and campaign pacing rules.

Bid price calculation: Determines the bid price based on RTB algorithms and strategies.

Creative selection: Chooses the most relevant ad creative.

Example: The DSP uses in-memory data structures and complex algorithms to score the bid opportunity. It may call an ML inference API to predict conversion probability and use that as a factor in the bid price.
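One simple way to combine a predicted conversion probability with budget pacing is expected-value bidding, sketched below. This is an illustrative approach, not a description of any specific DSP's algorithm. The function names, the even-pacing rule, and all numbers are assumptions.

```python
def pacing_multiplier(budget: float, spent: float, elapsed_fraction: float) -> float:
    """Scale bids down when spend runs ahead of an even-pacing schedule."""
    if spent >= budget:
        return 0.0                       # budget exhausted, so stop bidding
    target_spend = budget * elapsed_fraction
    if spent <= target_spend:
        return 1.0                       # on or behind schedule, so bid in full
    return target_spend / spent          # ahead of schedule, so throttle


def compute_bid_cpm(p_conversion: float, conversion_value: float,
                    max_bid_cpm: float, multiplier: float) -> float:
    """Bid the expected value of 1,000 impressions, throttled and capped."""
    expected_value_cpm = p_conversion * conversion_value * 1000.0
    return round(min(expected_value_cpm * multiplier, max_bid_cpm), 4)


# Hypothetical campaign: $1,000 budget, $600 spent halfway through the flight.
multiplier = pacing_multiplier(budget=1000.0, spent=600.0, elapsed_fraction=0.5)
bid = compute_bid_cpm(p_conversion=0.002, conversion_value=5.0,
                      max_bid_cpm=20.0, multiplier=multiplier)
```

Here the unthrottled expected value is a $10 CPM (0.002 × $5 × 1,000), and the pacing multiplier of 500/600 reduces the bid because the campaign is spending faster than planned.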

6. Exchange winner selection and notification (3-7ms): The ad exchange receives bids from multiple DSPs and selects the winner.

Auction logic: Runs a first-price or second-price auction (depending on the exchange’s model).

Winner notification: Sends a notification to the winning DSP and SSP.

Example: The exchange runs the auction logic and sends a notification to the winning DSP and SSP using HTTP or gRPC.
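The two auction models differ only in the clearing price. The sketch below assumes a single impression, a price floor, and a one-cent increment over the runner-up in the second-price case. The function name run_auction and those parameters are illustrative, and exchanges apply their own rules for ties and floors.

```python
from typing import Dict, Optional, Tuple


def run_auction(bids: Dict[str, float], auction_type: str = "first_price",
                floor: float = 0.0, increment: float = 0.01
                ) -> Optional[Tuple[str, float]]:
    """Return (winning bidder, clearing price), or None if no bid meets the floor."""
    eligible = sorted(
        ((price, bidder) for bidder, price in bids.items() if price >= floor),
        reverse=True,
    )
    if not eligible:
        return None
    top_price, winner = eligible[0]
    if auction_type == "first_price":
        clearing_price = top_price       # the winner pays its own bid
    elif auction_type == "second_price":
        runner_up = eligible[1][0] if len(eligible) > 1 else floor
        # The winner pays just above the next-highest bid, never more than its own bid.
        clearing_price = min(top_price, runner_up + increment)
    else:
        raise ValueError(f"unknown auction type: {auction_type}")
    return winner, round(clearing_price, 4)


# Hypothetical CPM bids from three DSPs.
bids = {"dsp_a": 4.20, "dsp_b": 3.10, "dsp_c": 2.75}
first = run_auction(bids, "first_price")
second = run_auction(bids, "second_price")
```

The same bidder wins in both cases, but under second-price rules it pays just above the runner-up's bid rather than its own.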

7. Ad server delivery and logging (5-15ms): The winning bidder’s ad server delivers the ad creative to the user's device.

Creative retrieval: Retrieves the ad creative from storage (CDN, local storage).

Rendering: Sends the creative data to the user’s browser for rendering.

Impression logging: Logs an impression event in a data warehouse or stream (Kafka, AWS Kinesis).

Example: The ad server responds to the user’s browser with an HTTP 200 OK, including the URL to the ad creative, and logs an impression event to an analytics service.

8. Attribution and viewability monitoring (ongoing): Attribution platforms and viewability vendors monitor the ad interaction.

Tracking pixels/tags: Client-side JavaScript tags or tracking pixels are loaded with the ad.

Event tracking: These tags send data back to the attribution and viewability platforms (e.g., "ad seen," "click," "conversion").

Example: A tracking pixel sends a GET request back to an attribution service when the ad is loaded, recording an impression. A conversion pixel fires when the user completes a specific action on the advertiser’s site.

9. Data feedback loop to DMP/CDP (batch/near real time): Performance data and user interaction data are fed back into the DMP/CDP for future optimization.

Data aggregation: Aggregating and processing the data from the trackers.

Profile updating: Updating user profiles in the DMP/CDP with new behavioral and conversion data.

Example: Attribution data is processed in near real-time (e.g., every minute) and used to update user profiles in the DMP/CDP via APIs. This data might then be used to adjust future bidding strategies.

This entire sequence completes in under 300 milliseconds, with a goal of 100 milliseconds or less. Each step must be highly optimized for low latency and high throughput.
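A quick way to sanity-check a latency budget is to add up the per-step ranges. The sketch below uses the ranges quoted for steps 1 through 7 above. It assumes the steps run strictly in sequence and ignores network transit between systems, which can consume much of the remaining headroom. The names are illustrative.

```python
from typing import Dict, Tuple

# (best case, worst case) in milliseconds, taken from steps 1 through 7 above.
STEP_BUDGETS_MS: Dict[str, Tuple[int, int]] = {
    "user activity and data collection": (1, 2),
    "SSP bid request assembly": (2, 5),
    "exchange routing and distribution": (3, 7),
    "DSP profile retrieval and enrichment": (5, 15),
    "DSP bid evaluation and decision": (10, 25),
    "exchange winner selection": (3, 7),
    "ad server delivery and logging": (5, 15),
}


def total_budget_ms(budgets: Dict[str, Tuple[int, int]]) -> Tuple[int, int]:
    """Sum best-case and worst-case latencies across sequential steps."""
    best = sum(low for low, _ in budgets.values())
    worst = sum(high for _, high in budgets.values())
    return best, worst


def exceeds_limit(budgets: Dict[str, Tuple[int, int]], limit_ms: int = 100) -> bool:
    """True if the worst-case total would miss the overall latency target."""
    return total_budget_ms(budgets)[1] > limit_ms


best_case_ms, worst_case_ms = total_budget_ms(STEP_BUDGETS_MS)
```

With these figures, processing alone takes between 29 and 76 milliseconds, so whatever is left of a 100-millisecond target has to cover every network hop.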

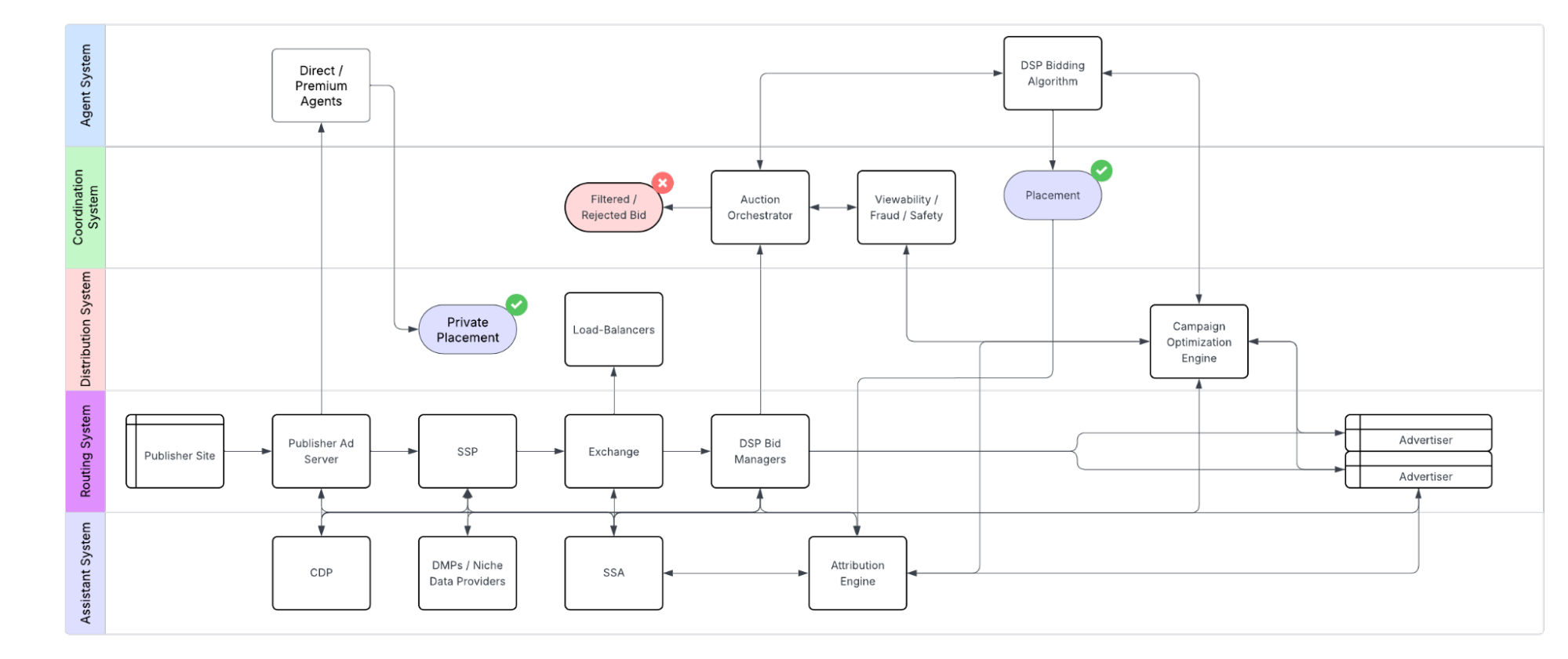

Using these steps and the components enumerated above, we can see a general systems workflow emerging:

The processes are complex and require perfect coordination, which is made more difficult by the millisecond timeframes in which auctions are completed.

Each transfer between nodes is accompanied by database transactions as data flows from one control point to the next, and these transactions can very often be the bottlenecks that break or limit an individual ad workflow. Maintaining low-latency transactions at incredibly high volumes and across a variety of data types and database formats is the key to building a better data-driven RTB platform, and the only way to get there is through careful planning.

What smooth data flow delivers

When data flows smoothly, advertisers achieve:

Precise targeting: Combine CRM, location, and contextual data to reach the right user at the right moment.

Operational efficiency: Automated, data-driven decisioning slashes manual work and speeds up optimizations.

Real-time optimization: See which segments and creatives convert best, then adjust bids instantly.

Better user experience: Relevant ads reduce fatigue and help publishers monetize without annoying their audience.

For developers, optimizing data flow means building resilient, scalable pipelines that support low-latency decision-making and real-time feedback loops. It’s not just about speed; it’s also about accuracy, reliability, and compliance.

Data flow pitfalls and how to prevent them

When data flow issues occur, the advertising ecosystem keeps running without pause for troubleshooting. If one component lags or fails, the system either routes around it, removing it from the loop, or, in the worst case, lets the faulty component trigger a cascade of poor decisions, missed opportunities, and wasted budget. When critical routing systems fail, the impact quickly multiplies across hundreds of customers and thousands of transactions.

Developers must address the root causes of these disruptions by building a true end-to-end data platform rather than simply connecting their programmatic stack to a database. That means using a microservices architecture, containerization, and orchestration with Kubernetes to create decoupled, scalable components.

The most significant challenges include:

Data silos

In every ad auction, dozens of data sources contribute hundreds of signals, such as consumer traits, brand data, and platform metrics, each layered with metadata like safety scores and performance indicators. This flood of information introduces three core challenges: it slows auction processing as components queue for input, it introduces contradictions or duplicates that confuse decision-making, and it increases the risk of missing key signals, leading to poor outcomes like repetitive or irrelevant ad delivery.

To address this, supply-side actors (SSAs) began centralizing signal ingestion and evaluation into unified sources of truth. While this reduces redundancy and accelerates decisions, it creates new scaling problems, especially when handling complex, high-volume relationship data like identity graphs. Identity resolution demands merging thousands of data points and real-time signals across systems that often lack shared identifiers. Graph databases can model this complexity, but they struggle with performance at scale. For example, querying large identity graphs to resolve a user with multiple identifiers within the 100-millisecond RTB window requires highly optimized graph traversals and potentially distributed graph processing.
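One way to keep identity lookups inside the RTB window is to resolve links ahead of time so that bid-time resolution becomes a near-constant-time lookup rather than a graph traversal. The sketch below uses a union-find (disjoint-set) structure for that purpose. This is a swapped-in illustration, not how any particular graph database works, and the class name and identifier formats are assumptions.

```python
from typing import Dict


class IdentityGraph:
    """Union-find over identifiers: linked identifiers share one canonical root."""

    def __init__(self) -> None:
        self._parent: Dict[str, str] = {}

    def canonical_id(self, identifier: str) -> str:
        """Return the root identifier for the group this identifier belongs to."""
        self._parent.setdefault(identifier, identifier)
        root = identifier
        while self._parent[root] != root:
            root = self._parent[root]
        # Path compression: point every node on the path directly at the root.
        while self._parent[identifier] != root:
            next_id = self._parent[identifier]
            self._parent[identifier] = root
            identifier = next_id
        return root

    def link(self, id_a: str, id_b: str) -> None:
        """Record that two identifiers belong to the same user."""
        root_a, root_b = self.canonical_id(id_a), self.canonical_id(id_b)
        if root_a != root_b:
            self._parent[root_b] = root_a

    def same_user(self, id_a: str, id_b: str) -> bool:
        return self.canonical_id(id_a) == self.canonical_id(id_b)


# Hypothetical identifiers observed for one person across devices.
graph = IdentityGraph()
graph.link("cookie:abc", "email_hash:9f2")
graph.link("email_hash:9f2", "idfa:1234")
```

The trade-off is that union-find only records that identifiers belong together. It cannot undo a link or store edge metadata such as match confidence, which is where a full graph model is still needed.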

To fix fragmentation across platforms (for example, a DMP that holds rich behavioral data but doesn’t sync with an ad server or DSP), developers need to integrate systems through APIs, data lakes, or unified data layers. One common approach is using RESTful APIs that adhere to the OpenRTB specification, which defines the standard data formats and protocols for RTB. This includes the structure of bid requests and responses, along with supported attributes like user IDs, device information, and contextual data.

Data lakes built on technologies like Apache Hadoop or Amazon S3 can store vast amounts of raw data for batch processing and analysis. Unified data layers can be implemented using message queues like Apache Kafka or RabbitMQ to facilitate real-time data streaming and synchronization between systems. However, neither approach on its own delivers millisecond lookups at scale. Multi-model data platforms, such as Aerospike, help here by ingesting structured and unstructured data into a single datastore. For example, you could store user profiles as key-value pairs in Aerospike, while storing clickstream data as JSON documents in the same database. This architecture enables fast cross-referencing, context-aware queries, and more reliable personalization and targeting without bottlenecks or blind spots.

Privacy and compliance

As privacy regulations like GDPR and CCPA evolve, developers can no longer treat compliance as an afterthought. These frameworks demand precise control over how user data is collected, processed, and shared. And with programmatic RTB systems moving data at millisecond speeds across global infrastructure, the risks have never been greater.

You must implement robust consent management, which often involves using Consent Management Platforms (CMPs) that adhere to the IAB TCF (Transparency & Consent Framework). These CMPs use JavaScript APIs to capture and manage user consent preferences. Ensure data anonymization where possible using techniques like data masking or tokenization, and tightly control data flows to only authorized systems, possibly leveraging OAuth 2.0 for API authentication and authorization. Use AES-256 encryption for data in storage, and TLS 1.3 for API communication.
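As a small illustration of tokenization and masking, the sketch below replaces an identifier with a keyed hash and partially masks an email address for display. It uses only Python's standard hmac and hashlib modules. The function names are assumptions, and the secret key is assumed to come from a secrets manager rather than source code. Note that keyed hashing is pseudonymization rather than full anonymization, because anyone holding the key can recompute the token.

```python
import hashlib
import hmac


def tokenize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a stable keyed hash (HMAC-SHA256, hex encoded)."""
    normalized = identifier.strip().lower()  # same input always yields the same token
    return hmac.new(secret_key, normalized.encode("utf-8"),
                    hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        return "***"
    return f"{local[0]}***@{domain}"
```

Because the token is deterministic for a given key, systems can still join records on it without ever seeing the raw identifier.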

But that’s just the beginning. Regulatory oversight is rapidly expanding and diverging. Different regions enforce different rules, and the mere act of routing data through a particular server can trigger jurisdictional liability.

To keep up, developers must design systems that dynamically adapt to where and how data is being used, without sacrificing performance. That means:

1. Modifying records or query responses on the fly to show or hide sensitive data depending on the applicable regulations without duplicating records, altering the source data, or relying on manual intervention. This might involve building dynamic SQL queries or using serverless functions at the API gateway layer to filter or redact data fields based on the user’s geographic location or consent status.

2. Rerouting data transfers intelligently, bypassing problem jurisdictions while maintaining low latency. This can be achieved using techniques like DNS-based routing, service meshes such as Istio, or database-level replication tools like Aerospike Cross Datacenter Replication (XDR). While Istio enables traffic management and policy enforcement based on routing rules, such as directing requests from users in the EU to servers located within the EU for GDPR compliance, XDR complements this by replicating data across geographically distributed clusters. With XDR, you can ensure that user data remains within specific regional boundaries, supporting data residency requirements and compliance efforts like GDPR. Together, Istio and XDR provide a robust solution for both real-time request routing and cross-regional data governance.

3. Replicating data across regions in a way that complies with local laws, without bloating infrastructure or sacrificing consistency. This might involve setting up multi-region deployments of your database using technologies like Aerospike's Cross Datacenter Replication. This ensures data is physically stored within the relevant region while maintaining eventual consistency, or strong consistency depending on configuration, across all replicas.
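The first technique in the list above, filtering query responses on the fly, can be sketched as a pure function that builds a redacted view while leaving the stored record untouched. The region codes, field names, and policy table below are hypothetical placeholders for illustration, not a statement of what any regulation actually requires.

```python
import copy
from typing import Dict, Set

# Hypothetical policy: fields to hide per region when the user has not consented.
REDACTION_POLICY: Dict[str, Set[str]] = {
    "EU": {"precise_geo", "email_hash"},
    "US-CA": {"email_hash"},
}


def redact_for_region(record: dict, region: str, has_consent: bool) -> dict:
    """Return a view of the record that is safe to serve in the given region.

    The source record is never modified; callers always receive a copy.
    """
    if has_consent:
        return copy.deepcopy(record)
    hidden_fields = REDACTION_POLICY.get(region, set())
    return {field: copy.deepcopy(value)
            for field, value in record.items()
            if field not in hidden_fields}


# Hypothetical master record held in the central store.
master_record = {
    "user_id": "u1",
    "segments": ["travel"],
    "precise_geo": {"lat": 48.85, "lon": 2.35},
    "email_hash": "ab12",
}
eu_view = redact_for_region(master_record, "EU", has_consent=False)
```

Because the function returns copies, the same master record can serve every region without duplication, and a policy change takes effect on the next read.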

These features might sound familiar. Many data platforms claim to support them, but few can actually deliver at the scale and speed that RTB demands.

Some solutions rely on caching, which is fast but often lacks persistence and bi-directional syncing. Keeping the cache coherent and consistent with the primary datastore also requires careful implementation. Others go with full replication, which slows down updates and creates cold storage bottlenecks that kill real-time responsiveness.

What you need is a smart edge data architecture that transforms and filters records into jurisdiction-compliant forms before sending them to the service most likely to use them, while keeping the master record complete and unaltered in a central store.

The most scalable pattern emerging in the industry combines three principles:

Store data in the jurisdiction where it was generated to simplify compliance.

Maintain a central data store for holistic customer views and reporting.

Apply real-time, jurisdiction-specific data transformations at the edge to enable fast, compliant access across systems.

This privacy-first, compliance-aware architecture lets developers focus on delivering fast, relevant ads without exposing their platforms to unnecessary regulatory risk.

Attribution gaps

Attribution makes or breaks programmatic advertising. If the system can’t show outcomes tied to spend, the rest doesn’t matter. Advertisers won’t keep funding campaigns without proof they deliver.

Last-click attribution misses most of the customer journey. Top-funnel engagement gets ignored. To fix this, developers need to implement multi-touch attribution. Every touchpoint matters. Track them across channels and devices. Push attribution data back into DMPs and CDPs. Make sure signals reflect real performance.

The hard part is timing. Auctions run in milliseconds, yet attribution updates land in slow batches every 10 or 30 minutes. That disconnect breaks real-time decisioning. Dynamic bidding and personalized creatives lose their edge when based on stale or missing data.

It gets worse when systems don’t agree on how attribution works. Middleware sticks to simple last-click rules. Brands want full-funnel visibility. Identity gets fragmented. Compliance rules block data sharing. Platforms protect proprietary data. Architecture decisions go in different directions. These issues pile up.

This is probably the most challenging problem to solve, as it's primarily not a technical issue. You can get a jump on it by choosing the right infrastructure. Use a data platform that ingests auction and attribution data at high volume. Sync updates in near real time. Scale as signals multiply. Eliminate the lag between event and insight.

SSAs and DSPs are already folding attribution into their bidding and reporting flows. They want tighter control. A fast, flexible data layer makes it possible. Attribution results surface faster, and outcomes get connected to actions.

Follow a few core rules. Close the gap between auction and attribution timing. Build attribution directly into your data flow. Use infrastructure that handles velocity and scale.

Attribution isn’t a reporting tool. It’s part of the product. Build it like one.

Real-world example: The Trade Desk’s programmatic stack turbocharged by Aerospike

The Trade Desk, a leading global advertising technology company, leverages Aerospike's real-time data platform as a critical component of its programmatic advertising infrastructure. Aerospike enables The Trade Desk to orchestrate high-velocity data flow across the entire AdTech ecosystem, seamlessly connecting ad servers, DMPs, exchanges, attribution platforms, data vendors, and viewability solutions.

By integrating Aerospike, The Trade Desk achieves sub-millisecond latency at massive scale, processing billions of transactions daily while maintaining precise data consistency and availability. Let's delve into a real-world campaign scenario to see Aerospike's role in action:

Ad requests and initial processing (10-150ms): When a user loads a publisher’s page, an ad request is typically initiated by a header bidding wrapper (like Prebid.js) or directly by the publisher’s ad server. The wrapper gathers user and contextual signals, such as device type, precise geolocation (if permitted), and browser details, and formats them as a JSON object adhering to OpenRTB standards, ready for RTB.

DMP/CDP user profile retrieval (5-10ms): The Trade Desk's platform immediately queries its DMP, powered by Aerospike, using the user ID. This lookup, which involves querying terabytes of data, must be completed within milliseconds. Aerospike's Hybrid Memory Architecture (HMA), utilizing both DRAM and fast storage such as SSD, enables The Trade Desk to retrieve and analyze detailed user profiles at extraordinary speed. First-party and third-party data (e.g., frequent traveler status, loyalty membership, purchase history) are seamlessly merged, allowing for highly granular audience segmentation in real-time. Aerospike's ability to store and retrieve complex data structures, including JSON documents, allows flexible, efficient storage of user data.

Ad exchange bid evaluation (3-7ms): The Trade Desk receives bid requests from multiple ad exchanges, and Aerospike powers the real-time bid evaluation and decisioning logic. The system analyzes available inventory against targeting criteria, budget constraints, and predicted conversion rates using sophisticated bidding algorithms. These algorithms access dynamic, up-to-the-minute user profiles in Aerospike, ensuring each bid decision is based on the latest data.

Attribution and performance updates (near real time): As users interact with ads (impressions, clicks, conversions), these events are captured and streamed into The Trade Desk's platform. Attribution data, including conversion paths and touchpoints, is rapidly updated in Aerospike. Aerospike’s high-throughput write capabilities ensure that these updates happen in near real-time, allowing for dynamic adjustments to bidding strategies. User-defined functions (UDFs) and expressions within Aerospike can be used to perform real-time data transformations and computations for advanced attribution models.

Data vendor enrichment (on-demand): Third-party data vendors provide additional enrichment, such as precise geolocation or page-level contextual insights, via REST APIs or real-time webhooks. This data is rapidly ingested into Aerospike, where it is correlated with user profiles. Aerospike's ability to handle high-volume data ingestion ensures that The Trade Desk can leverage real-time contextual information for highly targeted bids.

Viewability and brand safety integration (continuous monitoring): Viewability and brand safety metrics, obtained through JavaScript tags and SDKs, are continuously monitored and fed back into Aerospike. This real-time feedback loop allows The Trade Desk to dynamically adjust bidding and targeting strategies to ensure compliance and prevent fraud. Aerospike’s data retention policies can be used to manage the historical viewability and brand safety data for auditing purposes.

Quantifiable impact of Aerospike: By leveraging Aerospike, The Trade Desk experiences significant performance gains:

Latency reduction: Achieves consistent sub-millisecond data access, critical for the 100ms RTB window.

Throughput increase: Handles billions of transactions per day, ensuring scalability and resilience.

Data consistency: Maintains strong data consistency, or eventual consistency when necessary, across distributed nodes.

Real-time optimization: Enables real-time data updates for immediate campaign adjustments and improved ROI.

Aerospike's high-speed, scalable, and consistent data platform enables The Trade Desk to deliver precisely targeted, relevant ads at massive scale, optimizing campaigns in real time, breaking down data silos, and responding instantly to market shifts. This solidifies The Trade Desk’s position as a leader in the programmatic advertising space by delivering exceptional results for advertisers while ensuring a premium user experience.

How Aerospike accelerates the flow

Aerospike’s real-time database anchors high-performance AdTech stacks. It ingests and analyzes data from multiple sources in mere milliseconds, scales globally without bottlenecks, and unifies user profiles for precise targeting and attribution. Developers shatter data silos, streamline compliance, and deliver actionable insights at the very pace RTB demands.

In the hyper-competitive RTB arena, every millisecond counts. Aerospike consistently achieves sub-millisecond latency to provide a crucial edge within the strict 100ms RTB window. Its HMA blends DRAM for blazing-fast read/write operations with cost-effective flash storage, eradicating I/O bottlenecks. Optimized data structures and indexing ensure rapid data retrieval and queries. Unlike caching-reliant systems with erratic performance, Aerospike guarantees predictable, sub-millisecond response times under any load.

AdTech requires infrastructure that withstands explosive growth. Aerospike delivers massive horizontal scalability, allowing users to effortlessly scale out by adding nodes. This enables processing millions of transactions per second with linear scaling, ensuring systems handle the torrent of bid requests, user profile lookups, and attribution updates in real time. Aerospike’s distributed design supports multi-region deployments, maintaining low latency and complying with regional data residency demands for global reach. Cross Datacenter Replication (XDR) provides robust disaster recovery and data synchronization across geographies. Dynamic data rebalancing automatically distributes data across clusters, minimizing overhead and assuring constant availability.

Data silos cripple performance and insights. Aerospike unifies data, seamlessly integrating diverse types, such as structured (key-value pairs, numerical data), semi-structured (JSON), and unstructured (BLOBs). This fusion enables comprehensive 360-degree user profiles for accurate targeting, personalized ads, and precise attribution. Powerful querying, including secondary indexes and User-Defined Functions (UDFs), allows for complex data analysis and real-time insights.

Meeting stringent privacy regulations demands robust tools. Aerospike provides advanced compliance and data governance, simplifying GDPR, CCPA, and other rule adherence. Fine-grained access control policies define user roles and permissions, restricting data access. Data retention policies automate expiration, minimizing storage costs and risk. Data masking and encryption protect sensitive user data at rest and in transit.

This high-performance, scalable, and compliant platform empowers developers to create next-generation AdTech systems. Users gain enhanced real-time decisioning where accurate, current data leads to instantaneous bidding and personalized content. Powerful queries support advanced analytics, delivering vital insights into campaign performance and user behavior. Advertisers achieve higher ROI via improved targeting and reduced waste. Publishers maximize revenue with relevant ads. Users experience personalized, less intrusive, and more valuable ad experiences. Aerospike propels AdTech platforms to new heights of global performance and scalability.

Build for data flow and excel in RTB

Getting data to flow smoothly across the advertising system is key to success. Developers who do this right help advertisers target the right people, let websites make more money, and show users ads they actually like. Building these strong data systems isn’t just tech work; it’s a smart business move. When developers connect all the pieces, use data in real-time, and track what works, they make the whole ad system work better for everyone. Ultimately, those who make sure data flows quickly and accurately in online advertising are the ones who win.