Understanding database sharding for scalable systems

Learn how database sharding enables horizontal scaling across multiple nodes. Understand shard keys, common strategies, tradeoffs, and how Aerospike applies these concepts.

As applications and data grow, traditional single-server databases become a bottleneck. Database sharding is a technique for scaling your database horizontally by splitting data across multiple servers, helping the system handle large volumes and high traffic. But what is sharding, and how does it work? And how does Aerospike help?

What is database sharding?

Database sharding is the process of storing a large database on multiple machines instead of one server. It works by splitting a large dataset into smaller chunks, called “shards,” and distributing those shards across separate database nodes. Each shard holds a portion of the data, such as a subset of rows in a table, but uses the same schema as the original database. By dividing the data this way, all shards collectively represent the entire dataset, and the workload is shared among multiple servers.

It’s useful to distinguish between horizontal and vertical partitioning in this context. Sharding usually refers to horizontal partitioning, where each shard contains a subset of the rows of a table, using the same columns and different rows. There is also vertical partitioning, where you split a database by columns and each shard holds a different subset of columns for all rows, but this is less commonly what people mean by “sharding.” In practice, sharding implies horizontal distribution across multiple servers (a form of “shared-nothing” partitioning) to overcome the limits of a single machine.

In summary, sharding converts one monolithic database into several smaller database shards on separate nodes. These shards work together to serve the application’s data needs in parallel. By doing so, a sharded database stores more data and handles higher throughput than any one node could.

Why is database sharding important?

As an application’s user base and data volume grow, the database becomes a performance and capacity bottleneck. Beyond a certain point, adding more CPU or RAM to one machine, which is called vertical scaling, yields diminishing returns or may hit physical limits. Sharding scales out horizontally: Instead of one powerful server, you use many commodity servers to share the load. This offers several important benefits:

Scalability and capacity

Sharding lets a database scale beyond the limits of one machine. Because data is split across shards, you can add more shards on additional servers to accommodate growth. There is effectively no hard ceiling on data size because each new shard brings additional storage and processing power. Horizontal scaling is more flexible than vertical scaling, which is limited by the maximum hardware specs of one server. When the database supports online rebalancing, organizations can keep scaling out by adding nodes at runtime without downtime, so the database handles ever-increasing workloads.

Improved performance and throughput

Because each shard contains only part of the total data, queries are processed faster on each individual shard. Response times improve when the database needs to search only through a smaller shard rather than a huge monolithic dataset. In a sharded architecture, many shards handle queries in parallel, multiplying the overall throughput. Massively parallel processing means an application serves more users and transactions with low latency, as each node works on its portion of the data simultaneously. In short, sharding keeps performance high even as data grows, by avoiding the slowdown that one large database would encounter.

High availability and fault tolerance

Sharding also improves availability and fault tolerance. In a traditional monolithic database, if that one server goes down, the entire application is offline. In a sharded database, failure of one shard doesn’t bring down the whole system. Other shards continue operating, so only the data on the failed node is temporarily inaccessible. Moreover, sharding is often combined with replication for each shard, meaning each piece of data has copies on multiple nodes. If one shard’s node fails, a replica takes over, avoiding downtime. This distributed design reduces the risk of a total service outage and makes the database more resilient.

How database sharding works

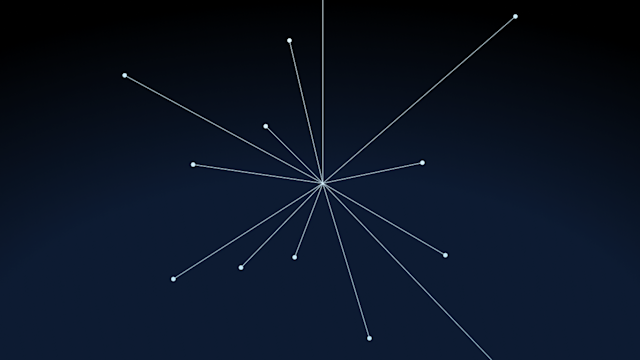

When you shard a database, you are fundamentally doing two things: partitioning the data using a shard key, and distributing those partitions, or shards, across separate nodes. The architecture that enables this is usually a shared-nothing cluster of database nodes, meaning each node runs independently with its own memory and disk and does not share state with others. Here’s how it works in practice:

Shard keys and data partitioning

To split a dataset into shards, the database designer needs to decide which rows go to which shard. This is determined by a shard key, also known as a partition key. A shard key is one or more fields or columns of each record that the system uses to assign it to a shard.

For example, you might choose “customer_id” as the shard key for a user database: User records could be divided such that customers with IDs in certain ranges or with certain hash values reside in different shards.

When a new record is written, the system looks at the shard key and applies a rule or formula, depending on the sharding method, to determine which shard or node should store that data. Likewise, when reading/querying, the system uses the shard key in the query to know which shard to fetch the data from.

Choosing an appropriate shard key is critical because it influences how evenly data is distributed and how efficiently queries are routed. A good shard key spreads data roughly uniformly across shards, preventing any one shard from becoming a hotspot. It also aligns with common query patterns to reduce cross-shard operations.

Shared-nothing architecture and routing

Most sharded databases use a shared-nothing architecture, so each shard is a fully independent database node responsible for its own subset of data. The shards do not share memory or storage, and each runs on its own hardware. This architecture means adding more shards adds more capacity almost linearly because there is little coordination overhead on each transaction beyond locating the right shard.

Because data is partitioned, there needs to be a way to route queries to the correct shard. There are two general approaches: Some database systems have automatic sharding built in, while others require the application or a middleware layer to handle the routing. In an automatic or “transparent” sharding setup, you interact with the database cluster as a whole; the system itself figures out where each piece of data resides, often via a distributed hash table or metadata map, and directs read/write requests to the right node. Many NoSQL databases today, as well as some NewSQL relational systems, do this for you behind the scenes.

If the database does not support sharding natively, developers might implement sharding in application code or use an external proxy/router that knows the sharding scheme. Either way, the goal is that the sharding is mostly transparent to the end user or client: you issue a query, and the infrastructure sends it to the correct shard and then combines results if necessary.

In a well-designed sharded system, only the shards that contain relevant data execute a given query, and they do so in parallel. This parallelism and distribution are what give sharding its scaling power. However, it also means extra complexity in query routing and result merging for operations that span multiple shards.
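The routing behavior described above can be sketched in a few lines. This is a minimal illustration, not any particular database's router: the in-memory `shards` list, the CRC32-based `shard_for` function, and the `find_by_country` query are all hypothetical names chosen for the example. A lookup by shard key touches one shard, while a filter on another field fans out to every shard and merges the partial results.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

# Hypothetical in-memory "shards": each is a dict of customer_id -> record.
NUM_SHARDS = 3
shards = [dict() for _ in range(NUM_SHARDS)]

def shard_for(customer_id: str) -> int:
    """Map a shard key to a shard index with a stable hash."""
    return zlib.crc32(customer_id.encode("utf-8")) % NUM_SHARDS

def put(customer_id: str, record: dict) -> None:
    shards[shard_for(customer_id)][customer_id] = record

def get(customer_id: str):
    # Point lookup: the shard key is known, so only one shard is contacted.
    return shards[shard_for(customer_id)].get(customer_id)

def find_by_country(country: str) -> list:
    # The filter is not on the shard key, so every shard is queried in
    # parallel (scatter) and the partial results are merged (gather).
    def scan(shard: dict) -> list:
        return [r for r in shard.values() if r["country"] == country]

    with ThreadPoolExecutor(max_workers=NUM_SHARDS) as pool:
        partials = list(pool.map(scan, shards))
    merged = [record for part in partials for record in part]
    return sorted(merged, key=lambda r: r["id"])
```

The merge step is where the extra complexity shows up: sorting, limits, and aggregates all have to be reapplied after the per-shard results come back.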

Common sharding strategies

How exactly data is divided among shards depends on the sharding strategy or scheme. The strategy defines the rule that maps each data item to a shard based on its shard key. Choosing the right sharding method affects both performance and data balance. Here are some common sharding strategies:

Range-based sharding

Range sharding splits data based on contiguous ranges of the shard key’s values.

For example, if sharding by an integer ID, shard 1 might store IDs 1-1,000,000, shard 2 stores IDs 1,000,001-2,000,000, and so on. Or if sharding by alphabetic name, you might put A–I in one shard, J–S in the second, and T–Z in the third. It’s a straightforward approach because it groups similar values together and is intuitive, especially for values like date ranges or numeric ranges.

Pros: Range sharding is simple to implement and understand. It is efficient for range-based queries because all data in a range is on the same shard. For instance, a query for “customers with names starting A–I” contacts only one shard.

Cons: A big downside is the potential for uneven data distribution. If the data or access patterns aren’t uniform, one shard becomes a hotspot. In our name-based example, perhaps a disproportionate number of customers have last names beginning with “A” or “B,” overloading that shard with more data and traffic than others. This skew undermines the benefits of sharding, as one “large” shard becomes a new bottleneck. Mitigating this requires periodically rebalancing the ranges or splitting hot ranges into multiple shards, which is complex.
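As a rough sketch of range routing, the lookup below uses the integer ID ranges from the example above, extended with an assumed third shard for IDs up to 3,000,000. The names `UPPER_BOUNDS`, `shard_for_id`, and `shards_for_range` are illustrative only.

```python
import bisect

# Inclusive upper bound of each shard's ID range. Shards 1 and 2 follow the
# example in the text; shard 3 is an assumed continuation of the pattern.
UPPER_BOUNDS = [1_000_000, 2_000_000, 3_000_000]

def shard_for_id(record_id: int) -> int:
    """Return the 1-based shard number whose range contains record_id."""
    if record_id < 1 or record_id > UPPER_BOUNDS[-1]:
        raise ValueError(f"ID {record_id} is outside every shard's range")
    return bisect.bisect_left(UPPER_BOUNDS, record_id) + 1

def shards_for_range(low_id: int, high_id: int) -> list:
    """Return every shard a range query over [low_id, high_id] must contact."""
    return list(range(shard_for_id(low_id), shard_for_id(high_id) + 1))
```

A range query that falls inside one shard's boundaries stays on that shard, and one that straddles a boundary touches only the neighboring shards, which is the locality benefit described under the pros.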

Hash-based sharding

Hash sharding assigns data to shards based on a hash function applied to the shard key. Instead of a human-meaningful range, the shard key, such as a user ID, is run through a hash algorithm that produces a hash value. That value is used to pick a shard. For example, if you have four shards, you might take hash(key) mod 4 to decide the shard number. This tends to spread data evenly in a pseudo-random way.

Pros: Hash-based sharding is excellent for achieving balanced distribution. A good hash function sends entries to shards more uniformly, avoiding the hot-spot issue that range sharding might cause. It’s great when your goal is to share the load evenly and you don’t have a particular access pattern to optimize beyond general uniformity.

Cons: The drawback is that hash sharding destroys the natural ordering of data. Queries that need an ordered range of records, such as all IDs between 1000 and 2000, aren’t satisfied by looking at one shard; those records will be spread out. Hash sharding also makes adding or removing shards tricky: If you increase the number of shards, the hash formula changes, meaning you might have to relocate a lot of data to new shard assignments, unless you use consistent hashing techniques. Rehashing and redistributing data on cluster changes causes operational problems. In short, hash sharding improves balance at the cost of flexibility in querying and resharding.
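The resharding cost of plain modulo hashing is easy to underestimate. The sketch below assumes SHA-256 as the hash function and synthetic keys named `user-0` through `user-9999`; both are arbitrary choices for illustration. It counts how evenly the keys spread over four shards and how many keys would change shards if a fifth were added.

```python
import hashlib

def shard_for(key: str, num_shards: int) -> int:
    """hash(key) mod N, using a hash that is stable across processes."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

keys = [f"user-{i}" for i in range(10_000)]

# Distribution across four shards.
counts = [0, 0, 0, 0]
for key in keys:
    counts[shard_for(key, 4)] += 1

# Keys whose shard assignment changes when going from four shards to five.
moved = sum(1 for key in keys if shard_for(key, 4) != shard_for(key, 5))

print("keys per shard:", counts)
print("fraction moved:", moved / len(keys))
```

A key keeps its shard only when its hash gives the same remainder modulo 4 and modulo 5, which holds for 4 of every 20 consecutive hash values. Roughly 80% of the keys therefore move, even though the new shard should ideally receive only about 20% of them.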

Directory or lookup table sharding

Directory-based sharding uses an explicit lookup table or mapping to assign each data item to a shard. In this approach, there might be a separate service or table that maps a key or a category to a specific shard number.

For example, you maintain a table that says “Customers from USA -> Shard 1, Customers from Europe -> Shard 2, Customers from Asia -> Shard 3,” or even an arbitrary mapping such as “UserIDs 1,5,9 -> Shard A; UserIDs 2,6,10 -> Shard B; etc.”

Pros: This method is flexible. You can define any rule you want in the lookup table; it’s not constrained to simple ranges or a hash function. You can even move entries around by updating the mapping. Directory sharding is useful if your sharding needs are complex or if you need to handle exceptions, such as sending certain VIP customers to a specific shard. It’s also conceptually easy to understand: just a mapping of keys to shard locations.

Cons: Relying on a central lookup table is a weakness. If the directory becomes a single point of failure or if it’s not efficiently managed, the system is compromised. The directory must be kept consistent and accessible. Additionally, maintaining and querying this lookup adds an extra layer of indirection that adds latency. Directory sharding is powerful but introduces an additional component that must be reliable and fast.
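A directory can be as simple as two lookup tables. The sketch below is a hypothetical in-process version (the `ShardDirectory` class and its method names are invented for illustration); in a real deployment the directory would live in a replicated service or table, precisely because of the single-point-of-failure concern above.

```python
class ShardDirectory:
    """Explicit mapping from customers to shards, with per-customer overrides."""

    def __init__(self, default_shard: str):
        self._by_region = {}   # region -> shard name
        self._overrides = {}   # customer_id -> shard name (e.g. VIP customers)
        self._default = default_shard

    def assign_region(self, region: str, shard: str) -> None:
        self._by_region[region] = shard

    def pin_customer(self, customer_id: str, shard: str) -> None:
        self._overrides[customer_id] = shard

    def lookup(self, customer_id: str, region: str) -> str:
        # Overrides win over the regional rule; unknown regions fall back
        # to the default shard.
        if customer_id in self._overrides:
            return self._overrides[customer_id]
        return self._by_region.get(region, self._default)


directory = ShardDirectory(default_shard="shard-1")
directory.assign_region("USA", "shard-1")
directory.assign_region("Europe", "shard-2")
directory.assign_region("Asia", "shard-3")
directory.pin_customer("vip-7", "shard-vip")
```

Updating the mapping is a one-line change, but the routing change is only half the job: the customer's existing data still has to be copied to the new shard.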

Geographical (geo-based) sharding

Geo-sharding is essentially sharding by location or region. In this strategy, data is partitioned based on a geographical attribute, such as the user’s region or data center. For instance, you might keep European customers’ data in an EU-based shard and American customers’ data in a US-based shard. This is common in applications that serve a globally distributed user base or need to comply with data locality regulations.

Pros: The main benefits are lower latency and better performance for users when they are served by a nearby data store. If a user in Asia queries data, and the shard containing their data is also in Asia, the network latency is lower compared with reaching a database across the world. Geo-sharding also makes it easier to meet regulatory requirements by keeping data within certain jurisdictions, such as EU user data on EU servers.

Cons: Like range sharding, geo-based shards may become uneven. If one region has most of the users or traffic, its shard will be larger or busier than others. You might also run into cases where the distribution of activity isn’t proportional to the distribution of users, such as if one region’s users are more active. Additionally, if a user base shifts or grows unpredictably in one region, rebalancing might be needed. In sum, geo-sharding is great for localization but needs capacity planning to avoid hotspot regions.

Choosing from these strategies depends on the application’s needs and data characteristics. Sometimes, hybrid approaches are used, such as first sharding by region and then by hash within each region. The key is to choose a scheme that keeps data evenly distributed and queries efficient for your particular workload.
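The hybrid approach mentioned above might look like the following sketch. The region codes, shard names, and shard counts in `REGION_SHARDS` are assumptions made up for the example.

```python
import zlib

# Hypothetical layout: each region owns its own group of shards.
REGION_SHARDS = {
    "eu": ["eu-0", "eu-1"],
    "us": ["us-0", "us-1", "us-2"],
}

def shard_for(region: str, user_id: str) -> str:
    """Pick the region's shard group first, then hash within that group."""
    try:
        group = REGION_SHARDS[region]
    except KeyError:
        raise ValueError(f"no shards configured for region {region!r}") from None
    return group[zlib.crc32(user_id.encode("utf-8")) % len(group)]
```

The first step keeps a user's data in the intended jurisdiction, and the second spreads that region's users across its shards so a busy region can be given more shards than a quiet one.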

Challenges of database sharding

Sharding improves database scalability and performance, but it also comes with challenges and tradeoffs. It’s not a silver bullet; essentially, you are swapping the limitations of one big system for the complexity of coordinating many smaller ones. Anyone considering sharding should be aware of the following challenges.

Uneven data distribution and hotspots

One fundamental challenge is spreading data and workload evenly across shards. If the sharding key or strategy is not well-chosen, you end up with hotspots: shards that carry a disproportionate amount of data or traffic.

For example, consider an alphabetic range sharding: One shard might handle all users with last names starting A–I, but if most of your users have last names in that range, that shard becomes overloaded while others are underutilized. This reintroduces a bottleneck, undermining the whole point of sharding. Even with a hashing strategy, certain usage patterns, such as a handful of very popular keys, might lead to uneven load on shards. To address this, design your shard key carefully, and monitor and rebalance shards periodically.

In practice, solving hotspot issues might involve resharding by splitting a hot shard into two, which can be complicated to do without downtime.
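One simple way to monitor for skew is to compare the busiest shard with the average. The sketch below uses the alphabetic ranges from the earlier example (A–I, J–S, T–Z) and a made-up list of ten last names; the `skew_report` helper and the `max_over_mean` metric are illustrative choices, not a standard.

```python
from collections import Counter

def range_shard(last_name: str) -> int:
    """Alphabetic range sharding: A-I -> 0, J-S -> 1, T-Z -> 2."""
    first = last_name[0].upper()
    if first <= "I":
        return 0
    if first <= "S":
        return 1
    return 2

def skew_report(shard_assignments, num_shards: int) -> dict:
    """Summarize how unevenly records are spread across shards."""
    counts = Counter(shard_assignments)
    per_shard = [counts.get(i, 0) for i in range(num_shards)]
    mean = sum(per_shard) / num_shards
    # 1.0 means perfectly even; 2.0 means the busiest shard holds twice
    # its fair share.
    ratio = max(per_shard) / mean if mean else 0.0
    return {"per_shard": per_shard, "max_over_mean": ratio}

# Made-up sample data for illustration.
names = ["Adams", "Baker", "Brown", "Clark", "Davis",
         "Evans", "Garcia", "Jones", "Smith", "Young"]
report = skew_report([range_shard(n) for n in names], num_shards=3)
print(report)  # per_shard is [7, 2, 1]
```

The same ratio can be computed over request counts instead of record counts, which catches the case where data is balanced but traffic is not.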

Increased operational and application complexity

Running one database is hard enough; running many of them as shards is harder. Sharding introduces complexity in maintenance, monitoring, and administration. You now have multiple databases to back up, index, tune, and update. From the application development side, if the sharding is not transparent, developers have to incorporate sharding logic in the code, such as directing reads/writes to the correct shard and aggregating results from multiple shards. Even with transparent sharding, understanding the data distribution is important for writing efficient queries. There are also more things that can break, such as network links between shards and consistency between distributed pieces of data.

Overall, the environment is more complex than a single-node setup. Analytics and reporting become more difficult, too, because you might need to gather data from all shards and combine it, which is slower and requires additional engineering. All these factors mean higher operational overhead and skill required to manage a sharded database system.

Rebalancing and resharding complexity

Sharding is not a one-and-done setup. Your sharding plan might need to evolve as your data grows. A classic challenge is resharding, such as adding or removing shards, or changing the sharding key. When you add a new shard, perhaps to get more capacity, you have to move some data from existing shards to the new shard. This data migration and rebalancing process is complex and time-consuming if the database doesn’t do it automatically.

In many setups, adding a shard might involve taking some of the system offline, or temporarily reducing performance while data is shuffled around. If you initially shard by a certain key and later realize that wasn’t optimal, re-partitioning the whole dataset by a new key is a major effort. Manual sharding schemes are especially brittle: changing the hashing algorithm or range boundaries invalidates caches and requires data redistribution. Resharding with minimal downtime requires planning and tools. Some of today’s systems use techniques such as consistent hashing to ease adding and removing nodes, but there’s still overhead in moving data.
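To make the consistent hashing idea concrete, here is a minimal hash ring with virtual nodes. It is a teaching sketch under assumed parameters (SHA-256 positions, 100 virtual nodes per node, nodes named `node-0` to `node-4`), not the scheme any specific database uses.

```python
import bisect
import hashlib

def _position(value: str) -> int:
    """Place a string on the ring as a 64-bit integer."""
    digest = hashlib.sha256(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")

class HashRing:
    def __init__(self, nodes, vnodes: int = 100):
        self._vnodes = vnodes
        self._points = []      # sorted (position, node) pairs
        self._positions = []   # positions only, for bisect
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each node owns many small arcs of the ring, which evens out load.
        self._points.extend(
            (_position(f"{node}#{i}"), node) for i in range(self._vnodes)
        )
        self._points.sort()
        self._positions = [pos for pos, _ in self._points]

    def node_for(self, key: str) -> str:
        # A key belongs to the first point clockwise from its position.
        idx = bisect.bisect_right(self._positions, _position(key))
        return self._points[idx % len(self._points)][1]

keys = [f"user-{i}" for i in range(10_000)]
ring = HashRing([f"node-{i}" for i in range(4)])
before = {key: ring.node_for(key) for key in keys}
ring.add_node("node-4")
after = {key: ring.node_for(key) for key in keys}
moved = [key for key in keys if before[key] != after[key]]
print("fraction moved:", len(moved) / len(keys))
```

Only keys that now fall on the new node's arcs change owner, so the moved fraction is close to one fifth rather than the large majority that a change of modulus would relocate. The moved data still has to be copied, which is the remaining overhead noted above.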

The bottom line is that scaling out further or reconfiguring shards is much more involved than turning a knob on a single-instance database.

Data consistency and distributed transactions

When your data is spread across multiple shards, maintaining strong consistency and performing transactions that span shards becomes challenging. In a single-node database, transactions enforce ACID properties locally. In a sharded database, a transaction that affects rows in different shards is a distributed transaction, which means it may need two-phase commit or other complex protocols so all shards either commit or roll back together. Many sharded NoSQL databases avoid multi-shard transactions or joins by design, or provide only limited guarantees, to keep performance high. If your application requires, say, a join between data that resides on different shards, the system has to fetch data from both shards and then combine it, either at the application level or via a coordinator. Cross-shard query processing may be slow and complex to implement.

Likewise, maintaining referential integrity or unique constraints across shards is difficult. In practice, developers sometimes denormalize data or adjust requirements to reduce cross-shard operations. Some distributed SQL databases offer transparent cross-shard transactions, but often at the cost of higher latency. In summary, sharding limits certain capabilities of your database or forces you to handle them in application logic, which adds complexity and potential consistency issues.
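The shape of a two-phase commit can be shown with a toy transfer between accounts on two different shards. Everything here is hypothetical (the `Shard` class, the `transfer` coordinator, the account names), and the sketch deliberately leaves out what makes real implementations hard: durable logs, timeouts, locking, and recovery when the coordinator fails between phases. It also assumes the two accounts live on different shards.

```python
class Shard:
    """Toy participant holding account balances in memory."""

    def __init__(self, name: str, balances: dict):
        self.name = name
        self.balances = dict(balances)
        self._staged = None  # change that has been prepared but not committed

    def prepare(self, account: str, delta: int) -> bool:
        # Phase 1: validate and stage the change, then vote yes or no.
        new_balance = self.balances.get(account, 0) + delta
        if new_balance < 0:
            return False
        self._staged = (account, new_balance)
        return True

    def commit(self) -> None:
        # Phase 2: make the staged change visible.
        account, new_balance = self._staged
        self.balances[account] = new_balance
        self._staged = None

    def rollback(self) -> None:
        self._staged = None


def transfer(src: Shard, src_account: str,
             dst: Shard, dst_account: str, amount: int) -> bool:
    """Coordinator: commit on both shards or on neither."""
    steps = [(src, src_account, -amount), (dst, dst_account, amount)]
    prepared = []
    for shard, account, delta in steps:
        if shard.prepare(account, delta):
            prepared.append(shard)
        else:
            # Any "no" vote aborts the whole transaction.
            for participant in prepared:
                participant.rollback()
            return False
    for participant in prepared:
        participant.commit()
    return True
```

Even in this toy form, every cross-shard write costs two round trips to each participant, which is one reason such transactions tend to add latency.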

Higher infrastructure costs

Sharding typically means you will be running more servers or instances than before. Each shard is an additional database process, possibly on its own machine or virtual instance. Operating more shards costs more in hardware, cloud storage, and power than running one server. There is also extra overhead in network communication between shards or between the application and multiple shard endpoints.

If you’re on-premises, more machines mean more rack space and maintenance. If on the cloud, you’re paying for multiple database instances. This increases infrastructure and operational costs. This cost may be justified by the scale and performance gains over an overloaded server that can’t handle the load at all, but it’s a factor to consider. Find out whether the extra capacity gained from sharding is worth the expense, and whether utilization on each shard is high enough to justify its existence.

Despite these challenges, many large-scale systems successfully use sharding. It’s a cornerstone of scaling databases with big data and big user loads. The key is to weigh these tradeoffs and implement sharding with planning, good tooling, and an architecture that reduces some of the problems, such as by choosing databases that handle some of the complexity for you.

Sharding vs. other scaling methods

Before committing to sharding, it’s worth understanding alternative or complementary approaches to scaling a database. Sharding is a form of horizontal scaling, but there are other strategies, such as vertical scaling and replication. Often, real-world systems use a combination of these methods.

Vertical scaling (scale-up) vs. sharding

Vertical scaling means improving the capacity of one server, such as by moving to a machine with more RAM, a faster CPU, or faster SSD storage. It’s essentially making the server more powerful to handle more load. This is a good first step because it doesn’t require any changes to the application or database design. However, vertical scaling hits a point of diminishing returns: There is a physical limit to how much you can beef up one machine, and costs increase rapidly for high-end hardware.

Sharding, on the other hand, is horizontal scaling, which is done by adding more servers instead. While initially more complex to implement, horizontal scaling provides virtually unlimited capacity by just adding nodes. It’s scaling out instead of up.

In practice, you might vertically scale until it no longer makes economic or technical sense, and then adopt sharding for further growth. To illustrate: You might buy a server with double the RAM to handle the next doubling of load, but beyond that, sharding across multiple commodity servers might be more practical. In summary, vertical scaling is simpler and sometimes less costly at small scale, but has hard limits, while sharding scales further by using many machines in parallel.

Replication (read replicas) vs. sharding

Replication involves keeping full copies of the database on multiple servers. Typically, one server is the primary for writes, and one or more secondary replicas sync the data and handle read queries. Replication is mainly a strategy for high availability and read scaling, not for scaling data volume or write throughput. It doesn’t increase the total capacity of your database to store unique data, because each replica has the same data. It also doesn’t speed up writes, because all writes still have to go to the primary in most setups. But it allows more reads by distributing reads across replicas and provides failover if the primary goes down, because a replica takes over.

Sharding and replication actually solve different problems. Sharding partitions the dataset to handle more data and more write load, while replication duplicates the dataset to handle more read load and provide redundancy. These techniques can be used together. For instance, many distributed databases use sharding for scale and then also replicate each shard to another node for fault tolerance.

In some systems, such as MongoDB, each shard is actually a replica set or group of replicas, combining both approaches. If your application’s main issue is too many read requests, adding replicas might be sufficient, but if your data size or write load is beyond one machine’s capacity, sharding is the way to go, perhaps with some replication on each shard.
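A compact way to see how the two techniques combine is a cluster in which each shard has one primary and several read replicas. The classes below are hypothetical and greatly simplified: replication is applied synchronously inside `write`, and there is no failover logic.

```python
import itertools
import zlib

class ReplicatedShard:
    """One shard: a primary that takes writes plus read replicas."""

    def __init__(self, num_replicas: int):
        self.primary = {}
        self.replicas = [dict() for _ in range(num_replicas)]
        self._next_replica = itertools.cycle(range(num_replicas))

    def write(self, key: str, value) -> None:
        # All writes for this shard go through its primary, then fan out.
        self.primary[key] = value
        for replica in self.replicas:
            replica[key] = value

    def read(self, key: str):
        # Reads rotate across replicas, spreading read load.
        return self.replicas[next(self._next_replica)].get(key)


class ShardedCluster:
    def __init__(self, num_shards: int, replicas_per_shard: int):
        self.shards = [ReplicatedShard(replicas_per_shard)
                       for _ in range(num_shards)]

    def _shard_for(self, key: str) -> ReplicatedShard:
        index = zlib.crc32(key.encode("utf-8")) % len(self.shards)
        return self.shards[index]

    def write(self, key: str, value) -> None:
        self._shard_for(key).write(key, value)

    def read(self, key: str):
        return self._shard_for(key).read(key)
```

Adding replicas to a shard raises read capacity for that shard's data only; adding shards is what divides the write load and the stored data.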

Caching with a product such as Redis or Memcached is another method to alleviate database load by storing frequently accessed data in memory. Caching and replication, however, don’t remove the single-master bottleneck for writes; only sharding or a multi-master distributed database architecture does that. Ultimately, evaluate the system’s needs: Use replication for availability and read scaling, sharding for write scaling and data volume, or a combination for both.

Sharding with Aerospike

Sharding is a powerful technique to scale databases horizontally, but implementing it from scratch can be daunting. This is where database platforms like Aerospike come into play. Aerospike’s real-time data platform was built with sharding by horizontal partitioning at its core, so developers and architects don’t have to reinvent that wheel.

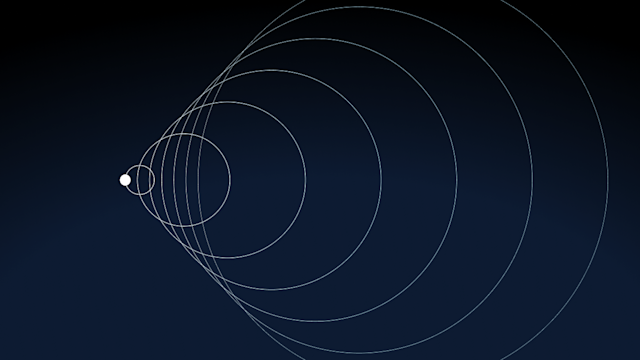

An Aerospike database cluster partitions data into 4,096 shards called partitions and distributes data evenly across nodes using a hash algorithm. There is no need for manual sharding or complex reconfiguration when you add nodes because the cluster rebalances data and continues running without downtime. This means you get the scaling benefits of sharding without the typical operational headaches. Aerospike’s shared-nothing architecture means there are no single points of failure and no hotspots, as data is evenly hashed across all nodes. It also replicates data internally for high availability, so your application remains always-on.
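The general idea of a fixed partition count can be pictured as two steps: a key always hashes to the same partition, and a separate partition map says which node currently owns that partition. The sketch below is not Aerospike's implementation or client API; SHA-256, the round-robin initial map, the node names, and the `add_node` helper are stand-ins chosen purely for illustration.

```python
import hashlib

NUM_PARTITIONS = 4096  # fixed partition count, as described in the text

def partition_id(key: str) -> int:
    """Step 1: a key maps to a partition, independent of cluster size."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()  # stand-in hash
    return int.from_bytes(digest[:2], "big") % NUM_PARTITIONS

def build_partition_map(nodes: list) -> dict:
    """Step 2: a map assigns each partition to a node (round-robin here)."""
    return {pid: nodes[pid % len(nodes)] for pid in range(NUM_PARTITIONS)}

def node_for(key: str, partition_map: dict) -> str:
    return partition_map[partition_id(key)]

def add_node(partition_map: dict, new_node: str) -> dict:
    """Give new_node its fair share of partitions, leaving the rest in place."""
    owners = sorted(set(partition_map.values()))
    target = NUM_PARTITIONS // (len(owners) + 1)
    by_owner = {o: [p for p, n in partition_map.items() if n == o]
                for o in owners}
    new_map = dict(partition_map)
    for _ in range(target):
        # Take one partition at a time from whichever node holds the most.
        donor = max(by_owner, key=lambda o: len(by_owner[o]))
        new_map[by_owner[donor].pop()] = new_node
    return new_map

old_map = build_partition_map(["node-a", "node-b", "node-c"])
new_map = add_node(old_map, "node-d")
changed = [pid for pid in old_map if old_map[pid] != new_map[pid]]
print("partitions reassigned:", len(changed), "of", NUM_PARTITIONS)
```

Because keys never change partitions, growing the cluster only reassigns whole partitions, and in this sketch exactly a quarter of them move to the fourth node.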

In essence, Aerospike handles the heavy lifting of sharding behind the scenes. Focus on your application logic while the database scales transparently. With its automatic data distribution, fast in-memory indexes, and patented hybrid memory-storage design, Aerospike delivers predictable low-latency performance at scale, from gigabytes to petabytes of data, all on a simple cluster that expands as needed. If you’re building a system that needs to process large amounts of data in real time, use Aerospike for painless sharding at scale.
