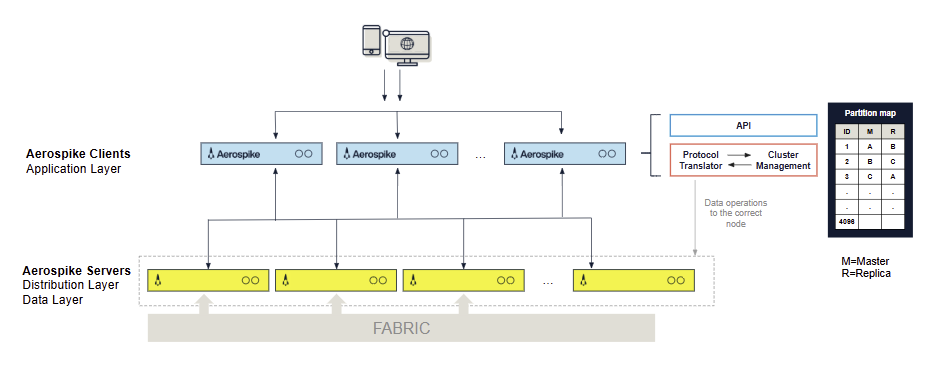

Client architecture

This page describes Aerospike’s distributed client-server architecture and the capabilities of the Aerospike client API.

Client library capabilities

The following Aerospike client API capabilities provide high performance and make building with Aerospike simple:

- Tracks cluster states

  - At any instant, the client uses the `info` protocol to communicate with the cluster and maintain a list of cluster nodes.

  - The Aerospike partition algorithm determines which node stores a particular data partition.

  - Automatically tracks cluster size changes, transparently to the application, so that transactions do not fail during transitions and applications do not need to restart when nodes join or leave the cluster.

- Implements connection pooling so you don’t have to code, configure, or manage a pool of connections for the cluster.

- Manages transactions, monitors timeouts, and retransmits requests.

- Thread safe: only one client instance is required per process.
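The cluster-tracking behavior described above can be sketched as a lookup from key to partition to node. This is a minimal model under stated assumptions, not the client's implementation. Aerospike divides each namespace into 4,096 partitions and hashes keys with RIPEMD-160. This sketch substitutes SHA-1 because RIPEMD-160 is not guaranteed to be available in `hashlib`. Taking 12 bits from the first two digest bytes is also an assumption. The `build_partition_map` helper and its round-robin ownership rule are hypothetical: a real client receives the partition map from the cluster over the `info` protocol.

```python
import hashlib

# Aerospike divides each namespace into 4,096 partitions.
N_PARTITIONS = 4096


def key_digest(set_name: str, user_key: str) -> bytes:
    # Stand-in hash: Aerospike uses RIPEMD-160 over the set name and key,
    # but hashlib does not guarantee RIPEMD-160, so SHA-1 is used here.
    return hashlib.sha1(set_name.encode() + user_key.encode()).digest()


def partition_id(digest: bytes) -> int:
    # Assumption: 12 bits taken from the first two digest bytes.
    return int.from_bytes(digest[:2], "little") & (N_PARTITIONS - 1)


def build_partition_map(nodes: list[str]) -> list[str]:
    # Hypothetical ownership rule (round-robin). In a real cluster the
    # server decides ownership and the client fetches the resulting map.
    return [nodes[p % len(nodes)] for p in range(N_PARTITIONS)]


def node_for_key(partition_map: list[str], set_name: str, user_key: str) -> str:
    # Route a request directly to the node that owns the key's partition.
    return partition_map[partition_id(key_digest(set_name, user_key))]


if __name__ == "__main__":
    pmap = build_partition_map(["node-a", "node-b", "node-c"])
    print("before:", node_for_key(pmap, "demo", "user1"))
    # node-b leaves: the client refreshes its map, and the application
    # keeps calling the same function without restarting.
    pmap = build_partition_map(["node-a", "node-c"])
    print("after:", node_for_key(pmap, "demo", "user1"))
```

Because routing is a table lookup, each request goes straight to the owning node. A change in cluster membership only means swapping in a new table, which is why the application does not notice node arrival or departure.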

Figure 1: Aerospike client layer
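Connection pooling and retransmission, two of the client-layer capabilities listed above, can be sketched together. Everything here is hypothetical: `ConnectionPool`, `TransientError`, and `execute` are illustrative names, and the "connections" are placeholder strings. The retry parameters are only loosely modeled on the retry settings the Java client exposes, such as `maxRetries`, `totalTimeout`, and `sleepBetweenRetries`.

```python
import queue
import time


class TransientError(Exception):
    """Hypothetical retryable failure, such as a socket timeout."""


class ConnectionPool:
    """Minimal thread-safe pool; queue.Queue handles the locking."""

    def __init__(self, node: str, size: int):
        self.node = node
        self._idle: queue.Queue = queue.Queue()
        for i in range(size):
            self._idle.put(f"{node}-conn-{i}")  # placeholder connection

    def borrow(self, timeout: float) -> str:
        # Blocks until a connection is free; raises queue.Empty on timeout.
        return self._idle.get(timeout=timeout)

    def give_back(self, conn: str) -> None:
        self._idle.put(conn)


def execute(pool, op, max_retries=2, total_timeout=1.0, sleep_between_retries=0.0):
    """Run op(conn), retrying transient failures until the deadline."""
    deadline = time.monotonic() + total_timeout
    attempt = 0
    while True:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            raise TimeoutError("total timeout exceeded")
        try:
            conn = pool.borrow(timeout=remaining)
        except queue.Empty:
            raise TimeoutError("no free connection before the deadline") from None
        try:
            return op(conn)
        except TransientError:
            attempt += 1
            if attempt > max_retries:
                raise  # retries exhausted: surface the error
        finally:
            pool.give_back(conn)  # always return the connection
        time.sleep(sleep_between_retries)


if __name__ == "__main__":
    pool = ConnectionPool("node-a", size=2)
    print(execute(pool, lambda conn: f"read via {conn}"))
```

The application only calls `execute`. Borrowing, returning, deadline tracking, and retransmission stay inside the client layer, which is the division of labor the figure describes.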

Besides basic `put()`, `get()`, and `delete()` operations, Aerospike supports the following:

- CAS (safe read-modify-write) operations

- In-database counters

- Batch `get()` operations

- Scan operations

- List and Map element operations such as `removeByKey()` or `getByValueRange()`

- Queries: bin values are indexed, and the database is searched by equality or range

- User-Defined Functions (UDFs) extend database processing by executing application code in Aerospike

- Aggregation: use UDFs on a collection of records to return aggregate values
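The CAS and in-database counter operations above can be modeled with an in-memory store. The sketch assumes each record carries a generation counter that increments on every write. Aerospike records have such a generation, and the Java client can make a write conditional on it with `GenerationPolicy.EXPECT_GEN_EQUAL`. `ToyStore`, `GenerationError`, and `cas_update` are hypothetical names, and the lock stands in for the server's record-level atomicity.

```python
import threading
from dataclasses import dataclass


class GenerationError(Exception):
    """Hypothetical: the record changed since it was read."""


@dataclass
class Record:
    bins: dict
    generation: int


class ToyStore:
    """In-memory stand-in for a server that versions each record."""

    def __init__(self):
        self._lock = threading.Lock()
        self._records: dict = {}

    def get(self, key):
        with self._lock:
            rec = self._records.get(key)
            return None if rec is None else Record(dict(rec.bins), rec.generation)

    def put(self, key, bins, expected_generation=None):
        # Conditional write: reject it if the generation moved on.
        with self._lock:
            current = self._records.get(key)
            current_gen = 0 if current is None else current.generation
            if expected_generation is not None and expected_generation != current_gen:
                raise GenerationError(f"expected {expected_generation}, found {current_gen}")
            self._records[key] = Record(dict(bins), current_gen + 1)

    def add(self, key, bin_name, delta):
        # In-database counter: read and write happen in one atomic step.
        with self._lock:
            current = self._records.get(key)
            bins = {} if current is None else dict(current.bins)
            bins[bin_name] = bins.get(bin_name, 0) + delta
            gen = 0 if current is None else current.generation
            self._records[key] = Record(bins, gen + 1)
            return bins[bin_name]


def cas_update(store, key, fn, max_attempts=5):
    """Client-side read-modify-write that retries on a generation conflict."""
    for _ in range(max_attempts):
        rec = store.get(key)
        bins = {} if rec is None else rec.bins
        gen = 0 if rec is None else rec.generation
        try:
            store.put(key, fn(bins), expected_generation=gen)
            return
        except GenerationError:
            continue  # another writer got in first; reread and retry
    raise GenerationError("too many concurrent updates")
```

In this model, `cas_update` may need several round trips under contention, while `add` never retries because the increment happens entirely on the "server" side.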

Client feature support

The Client matrix provides the following client details:

- Full client/server feature compatibility

- Language-specific compatibility notes

- Minimum usable client versions

- Major server changes

- Client/Server Features