XDR record shipment lifecycle

This page describes the stages of Aerospike’s Cross Datacenter Replication (XDR) record shipment lifecycle, the configuration parameters that control that lifecycle, and the metrics that monitor the stages.

XDR delivers data asynchronously from an Aerospike source cluster to a variety of destinations.

- You can start writing records as soon as you enable a namespace.

- The system logs and collects statistics on the progress through the in-memory XDR transaction queue even if the datacenter is not yet connected.

- The system starts shipping as soon as the connection to the datacenter is established.

To learn more about XDR, see XDR architecture.

XDR record shipment lifecycle

The XDR shipment lifecycle begins when a record is successfully written in the source partition, then submitted to the per-partition, in-memory XDR transaction queue of each datacenter.

Version changes

XDR does not impose any version requirement across datacenters. You can ship between connected DCs as long as each is running Database 6.0.0 or later. Different versions of Aerospike Database, however, have the following variations with respect to XDR:

- Starting with Database 8.1.1, recovery-threads configures the number of threads per datacenter (1-32) for use in parallel recovery. This improves recovery performance for large backlogs.

- Starting with Database 7.2.0, ship-versions-policy controls how XDR ships versions of modified records when facing lag between the source cluster and a destination. ship-versions-interval specifies a time window in seconds within which XDR is allowed to skip versions.

- Starting with Database 5.7.0, the XDR subsystem always accepts dynamic configuration commands because it is always initialized even if XDR is not configured statically.

- Prior to Database 5.7.0, the XDR subsystem must be configured statically with at least one datacenter.

XDR transaction queue

The size of the transaction queue is controlled by transaction-queue-limit.

If the transaction queue fills faster than XDR can ship to the remote destination, Aerospike switches to recovery mode. After XDR catches up, it switches back to using the transaction queue.

Each datacenter, namespace, and partition combination has its own distinct XDR transaction queue.

A modified record is placed into the correct transaction queue based on its partition. If a namespace ships to multiple destinations, the record’s metadata is simultaneously placed into multiple transaction queues.
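The queue layout described above can be pictured with a small in-process model. This is a minimal sketch, not server code: the class name, method names, and the per-queue recovery flag are hypothetical and chosen only for illustration. It keeps one bounded queue per (datacenter, namespace, partition) and marks a queue as needing recovery once it exceeds its limit.

```python
from collections import defaultdict, deque


class XdrQueueModel:
    """Toy model of the XDR transaction queues. All names are hypothetical."""

    def __init__(self, queue_limit=16384):
        self.queue_limit = queue_limit    # plays the role of transaction-queue-limit
        self.queues = defaultdict(deque)  # (dc, namespace, partition) -> digests
        self.in_recovery = set()          # queues that overflowed and were dropped

    def submit(self, dcs, namespace, partition_id, digest):
        """Place the record's metadata into the queue of every destination DC."""
        for dc in dcs:
            key = (dc, namespace, partition_id)
            if key in self.in_recovery:
                continue                  # assumed: recovery reads the index instead
            q = self.queues[key]
            if len(q) >= self.queue_limit:
                q.clear()                 # a full queue is dropped
                self.in_recovery.add(key)
            else:
                q.append(digest)
```

In this model a slow destination only affects its own queues: a write that ships to two datacenters is queued twice, and an overflow for one datacenter leaves the other datacenter's queue untouched.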

Transaction threads

The various transaction threads manage a record throughout the lifecycle. The threads include:

- The dc thread processes all the partitions sequentially at the source node for a specific remote datacenter. This thread processes all pending entries in the XDR transaction queues and retry threads.

- The service thread receives a record from the dc thread, reads it locally, and prepares it for shipment.

- Recovery threads read updates from the primary index when XDR is in recovery mode.

  - In interleaved recovery mode (default), a single recovery thread processes multiple partitions in an interleaved fashion.

  - In parallel recovery mode, multiple recovery threads operate simultaneously, with each thread dedicated to a single partition at a time. You configure recovery threads with the recovery-threads parameter.

XDR recovery mode

When the XDR transaction queue is full, the queue is dropped and XDR goes into recovery mode. In this mode, XDR scans the full primary index to compare a record’s last ship time (LST) to its last update time (LUT) instead of using the transaction queue.
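The LST/LUT comparison can be sketched as a filter over index entries. This is an illustration only: the function name and the dictionary standing in for the primary index are hypothetical, and the sketch assumes a single last ship time for the partition being scanned.

```python
def records_to_recover(primary_index, last_ship_time):
    """Return digests whose last update time (LUT) is newer than the last ship time (LST).

    primary_index: dict mapping digest -> LUT (hypothetical stand-in for the index).
    last_ship_time: LST to compare against (assumed to be one value per scan).
    """
    return [digest for digest, lut in primary_index.items() if lut > last_ship_time]
```

For example, with LUTs of 10, 20, and 30 and an LST of 20, only the record updated at 30 would be picked up for shipment.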

XDR supports two recovery modes, controlled by the max-recoveries-interleaved parameter:

Parallel partition-dedicated recovery

Introduced in Database 8.1.1, parallel partition-dedicated recovery spawns multiple recovery threads that operate in parallel and are configured by the recovery-threads parameter. Each recovery thread picks up a partition from the recovery queue and works exclusively on that partition until its recovery is 100% complete. Only after finishing the entire partition does the thread pick up the next partition from the queue. Multiple threads work simultaneously on different partitions, with each thread fully dedicated to one partition at a time.

The recovery-threads parameter controls the number of parallel recovery threads per datacenter. The default value is 1. The range is 1-32 threads per datacenter. Increasing the number of recovery threads can improve recovery performance for large backlogs but consumes more CPU and I/O resources during catch-up.
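The partition-dedicated behavior resembles a plain worker pool in which each worker finishes one partition before taking the next. The sketch below is an analogy built on Python's standard library, not the server's implementation; `parallel_recover` and `recover_partition` are hypothetical names.

```python
import queue
import threading


def parallel_recover(partition_ids, recover_partition, recovery_threads=1):
    """Run recover_partition(pid) for every partition using dedicated workers."""
    if not 1 <= recovery_threads <= 32:
        raise ValueError("recovery-threads must be in the range 1-32")

    pending = queue.Queue()
    for pid in partition_ids:
        pending.put(pid)

    def worker():
        while True:
            try:
                pid = pending.get_nowait()
            except queue.Empty:
                return                 # no partitions left for this worker
            recover_partition(pid)     # runs to completion before the next pick

    threads = [threading.Thread(target=worker) for _ in range(recovery_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
```

More workers shorten catch-up for a large backlog at the cost of more concurrent index reads, which matches the CPU and I/O trade-off noted above.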

Single-threaded interleaved recovery

Single-threaded interleaved recovery uses a single recovery thread that processes multiple partitions in an interleaved fashion. The max-recoveries-interleaved parameter specifies the maximum number of partitions to recover concurrently to provide fairness and prevent partition starvation. Recovery jobs are inserted at position mri-1 in the recovery queue rather than at the end, preventing large partitions from monopolizing recovery time.
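The insertion rule can be illustrated with a list standing in for the recovery queue. This is a sketch under assumptions: the function name is hypothetical, "position mri-1" is read as a zero-based index, and a queue shorter than that position simply receives the job at its end.

```python
def enqueue_recovery(recovery_queue, job, max_recoveries_interleaved):
    """Insert a new recovery job at position mri-1 rather than at the end."""
    if max_recoveries_interleaved < 1:
        raise ValueError("interleaved recovery needs max-recoveries-interleaved >= 1")
    # Assumption: zero-based position, clamped to the current queue length.
    position = min(max_recoveries_interleaved - 1, len(recovery_queue))
    recovery_queue.insert(position, job)
    return recovery_queue
```

With five queued partitions and a value of 3, a new job lands third in line instead of sixth, so it joins the set of partitions being interleaved rather than waiting behind every earlier job.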

Configuration constraints:

The recovery-threads and max-recoveries-interleaved parameters are mutually exclusive:

- If recovery-threads is greater than 1, then max-recoveries-interleaved must be 0.

- If max-recoveries-interleaved is greater than 0, recovery-threads must be 1.
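The mutual-exclusion rule is easy to check before applying a configuration. The helper below is a hypothetical pre-flight check, not part of any Aerospike tool; it assumes that a value of 0 for max-recoveries-interleaved selects partition-dedicated recovery.

```python
def validate_recovery_config(recovery_threads, max_recoveries_interleaved):
    """Return the recovery mode implied by the two values, or raise ValueError."""
    if not 1 <= recovery_threads <= 32:
        raise ValueError("recovery-threads must be in the range 1-32")
    if max_recoveries_interleaved < 0:
        raise ValueError("max-recoveries-interleaved cannot be negative")
    if recovery_threads > 1 and max_recoveries_interleaved > 0:
        raise ValueError(
            "recovery-threads > 1 requires max-recoveries-interleaved = 0"
        )
    # Assumption: 0 means no interleaving, so recovery is partition-dedicated.
    return "partition-dedicated" if max_recoveries_interleaved == 0 else "interleaved"
```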

Dynamic configuration constraints:

- When switching from parallel to interleaved mode, to enable interleaving when max-recoveries-interleaved = 0, first set recovery-threads to 1, then change max-recoveries-interleaved to the desired value.

- When switching from interleaved to parallel mode, to change recovery-threads when max-recoveries-interleaved > 0, first set max-recoveries-interleaved to 0, then change recovery-threads to the desired value.
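The ordering rules above can be captured in a small planner that returns the parameter changes in a safe sequence. This is a sketch with a hypothetical function name; it only computes the order and does not issue any command to a server.

```python
def plan_recovery_change(current_threads, current_mri, target_threads, target_mri):
    """Return (parameter, value) steps in an order that never breaks the constraint."""
    if target_threads > 1 and target_mri > 0:
        raise ValueError("target values are mutually exclusive")
    steps = []
    if target_mri > 0:
        # Switching to interleaved: drop to one recovery thread first.
        if current_threads != 1:
            steps.append(("recovery-threads", 1))
        if current_mri != target_mri:
            steps.append(("max-recoveries-interleaved", target_mri))
    else:
        # Switching to parallel: disable interleaving first.
        if current_mri != 0:
            steps.append(("max-recoveries-interleaved", 0))
        if current_threads != target_threads:
            steps.append(("recovery-threads", target_threads))
    return steps
```

Each returned step would then be applied as its own dynamic configuration change, in the order given.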

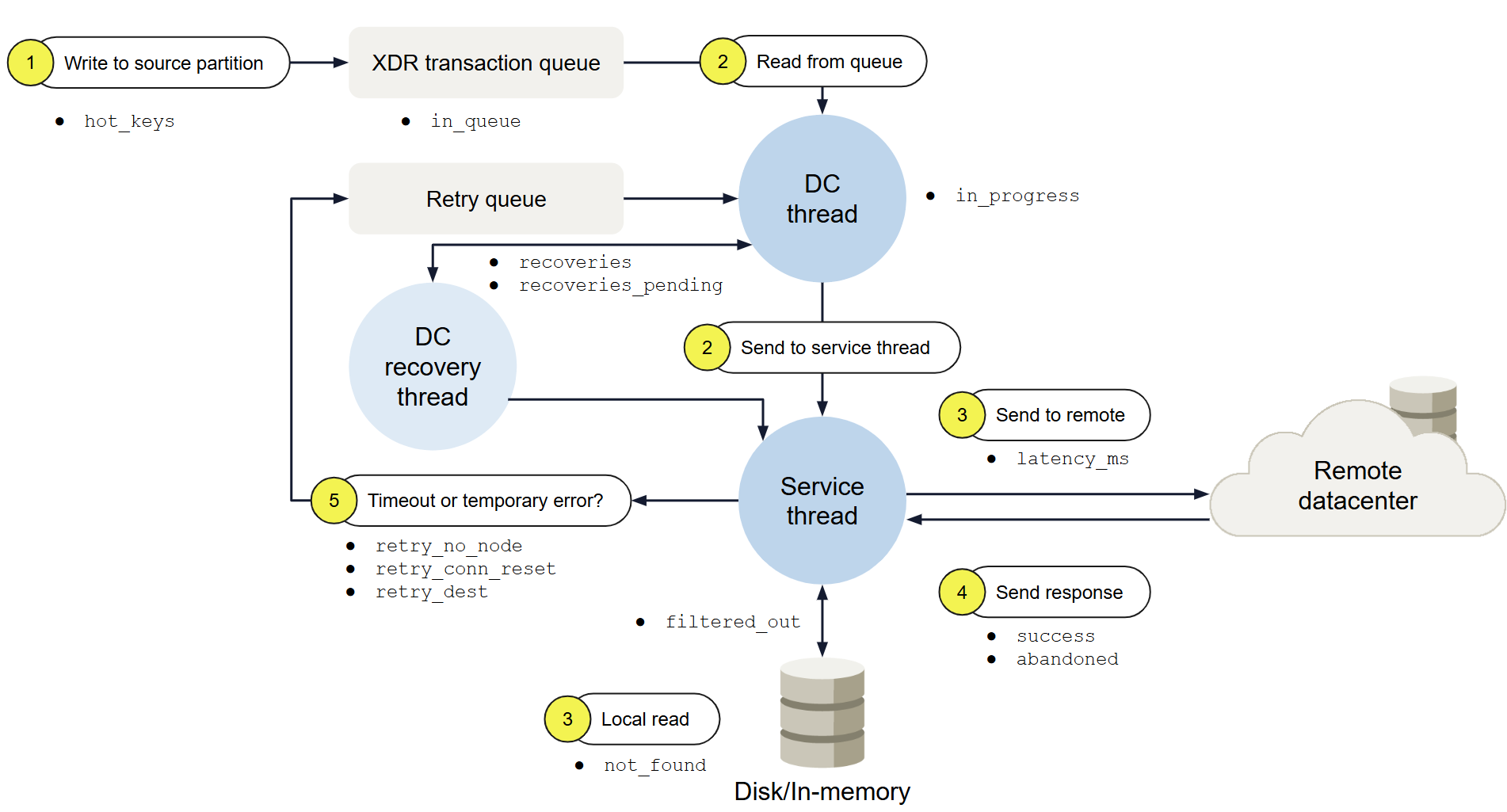

XDR record shipment diagram

The following diagram shows the lifecycle of a record from a single partition in a single namespace in a single datacenter. These processes occur for all partitions and namespaces in a live environment.

- Dashed boxes indicate a process stage.

- Bullets indicate how the metric or stage names are logged.

Each stage is described immediately following the diagram.

Figure 1: XDR record shipment lifecycle, with metrics

- Write to source partition.

  After a successful write in the source partition, the record is submitted to the per-partition, in-memory transaction queue of each datacenter.

  - If a duplicate key is found in the XDR transaction queue within the time specified in hot-key-ms, the duplicate is not inserted into the queue. This reduces the number of times the same version of a hot key ships.

- Read from queue.

  The dc thread picks the record from the transaction queue and forwards it to the service thread.

  - The run period of the dc thread is controlled by period-ms. If there is a delay in shipping a write transaction, period-ms is the likely source.

  - If the record’s last update time (LUT) is within the time specified in delay-ms from the current time, the dc thread skips it, and does not yet hand it to a service thread.

  - In strong consistency (SC) mode, the sc-replication-wait-ms parameter provides a default delay so XDR doesn’t ship records before their initial replication is complete.

- Send to service thread and remote destination.

  The service thread reads the record locally, prepares the record for shipment, and ships it to the remote destination. The max-throughput to the remote destination is applied on a per-datacenter, per-namespace basis.

  - In SC mode, if the record is unreplicated, XDR triggers a re-replication by inserting a transaction into the internal transaction queue. A service thread picks up the transaction from the internal transaction queue and checks if the transaction timed out.

    - If the transaction timed out, it does not re-replicate and XDR does not ship the record to the destination. A future client read/write will trigger re-replication and may succeed in shipping to the destination.

    - If the transaction did not time out, the service thread re-replicates the record. The re-replication makes an entry into the XDR in-memory transaction queue. This re-replication may also time out and leave the record unreplicated. However, the entry in the XDR in-memory transaction queue immediately triggers another round of re-replication.

- Send response.

  The remote destination attempts to write the record and returns the completion state of the transaction to the source datacenter. The completion state can be success, temporary failure (for example, key busy or device overload), or permanent error (for example, record too big).

- Retry if necessary.

  If there is a timeout or temporary error, the service thread sends the record to a retry queue.
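The read, ship, and retry stages above can be sketched as one pass over a partition queue. This is a simplified model, not the server's logic: the function name, the tuple layout, and the three result strings are hypothetical, and SC re-replication is left out.

```python
from collections import deque


def dc_thread_pass(partition_queue, now_ms, delay_ms, ship):
    """Process one partition queue once and return the digests that need a retry.

    partition_queue: deque of (digest, lut_ms) tuples.
    ship: callable returning "success", "temporary", or "permanent".
    """
    retry = []
    skipped = deque()
    while partition_queue:
        digest, lut_ms = partition_queue.popleft()
        if now_ms - lut_ms < delay_ms:
            skipped.append((digest, lut_ms))  # updated too recently; wait for a later pass
            continue
        if ship(digest) == "temporary":
            retry.append(digest)              # timeout or temporary error
    partition_queue.extend(skipped)           # skipped entries stay queued
    return retry
```

With delay-ms set to 0 nothing is skipped, so every queued entry is handed to `ship` on the first pass.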

Transaction delays

XDR configuration parameters control various stages of the record lifecycle where delays may be necessary because XDR is not able to keep up with writes.

The following table lists the configuration parameters that control the record lifecycle.

| Parameter | Description | Notes |
|---|---|---|
| transaction-queue-limit | Maximum number of elements allowed in XDR’s in-memory transaction queue per partition, per namespace, per datacenter. | Default: 16*1024 = 16384. Minimum: 1024. Maximum: 1048576. |
| hot-key-ms | Period in milliseconds to wait in between shipping hotkeys. Controls the frequency at which hot-keys are processed. Good in situations where the XDR transaction queue is potentially large. This avoids having to check across the whole queue for a potential previous entry corresponding to the current incoming transaction. | Minimum: 0. Maximum: 5000. |
| period-ms | Period in milliseconds at which the dc-thread processes partitions for XDR shipment. | Default: 100ms. |
| delay-ms | Period in milliseconds as an artificial delay on shipment of all records, including hotkeys and records that are not hot. Forces records to wait the configured period before processing. | Minimum: 0. Maximum: 5000. Must be less than or equal to the value of hot-key-ms. |
| sc-replication-wait-ms | Number of milliseconds that XDR waits before dequeuing a record from the in-memory transaction queue of a namespace configured for SC. Prevents records from shipping before fully replicated. | Minimum: 5. Maximum: 1000. |
| max-throughput | Number of records per second to ship using XDR. | Must be in increments of 100 (such as 100, 200, 1000). |
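The limits in the table lend themselves to a quick sanity check before a configuration is applied. The function below is a hypothetical helper that encodes only the ranges listed above; the values used in the usage note are illustrative, not documented defaults.

```python
def check_xdr_params(params):
    """Return a list of messages for values that fall outside the table's limits."""
    errors = []
    if not 1024 <= params["transaction-queue-limit"] <= 1048576:
        errors.append("transaction-queue-limit must be 1024-1048576")
    if not 0 <= params["hot-key-ms"] <= 5000:
        errors.append("hot-key-ms must be 0-5000")
    if not 0 <= params["delay-ms"] <= 5000:
        errors.append("delay-ms must be 0-5000")
    if params["delay-ms"] > params["hot-key-ms"]:
        errors.append("delay-ms must not exceed hot-key-ms")
    if not 5 <= params["sc-replication-wait-ms"] <= 1000:
        errors.append("sc-replication-wait-ms must be 5-1000")
    if params["max-throughput"] % 100 != 0:
        errors.append("max-throughput must be a multiple of 100")
    return errors
```

For instance, a delay-ms of 200 combined with a hot-key-ms of 100 is reported as a violation, while the same delay-ms with a hot-key-ms of 500 passes.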

XDR version shipping control

Two configuration parameters dynamically control how XDR ships versions of records.

- ship-versions-policy controls how XDR ships versions of modified records between the source cluster and a destination.

- ship-versions-interval specifies a time window in seconds within which XDR is allowed to skip versions.

XDR tracks the unique identifier (digest) of records that are modified (inserted, updated, deleted), expire, or are evicted. When it’s time to ship a record, XDR reads the record from storage and ships it to the destination. If there are multiple updates to the same record in a short period of time, XDR maximizes throughput by shipping only the latest version of the record. Shipping only the latest version is enough to keep two remote Aerospike Database clusters in sync, but it means that XDR does not ship every version of a record. Shipping every version may be necessary when Aerospike is shipping data to a destination through a connector, such as Kafka, Pulsar, or JMS.

ship-versions-policy: latest

This is the default policy. It allows the latest write to be shipped to the destination. This is the behavior in server versions prior to 7.2.0.

ship-versions-policy: all

This policy ships all writes to the destination. Subsequent writes of the record are delayed or blocked until the previous write is shipped to the destination. For blocked writes, the server returns the AS_ERR_XDR_KEY_BUSY (32) error to the client.

Similar to the interval policy, in low-throughput use cases this policy can ensure that the destination receives every update to every record. The operator does need to consider the backpressure implications when lag between source and destination increases.

If ship-versions-policy is all, delay-ms must be 0. delay-ms cannot exceed the time window specified by ship-versions-interval.

This configuration guarantees that there are no two writes on the record at the same time, so there won’t be any write hotkeys in the system.

ship-versions-policy: interval

This policy guarantees shipping of at least one version in this interval. If there are multiple writes within an interval, the last write in the interval is definitely shipped.

The value of the interval is set in ship-versions-interval in the XDR’s DC namespace subsection. ship-versions-interval takes a value between 1 and 3600 seconds. The default value is 60 seconds.

The interval policy is a relaxation of the all policy and a balance between the latest and all policies. It allows a write to a record to proceed even if the previous write has not yet shipped to the destination, as long as both writes belong to the same interval.
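The three policies differ in when a new write to a record may proceed while an earlier version is still unshipped. The sketch below models that decision under a loud assumption: intervals are treated as fixed windows aligned to multiples of ship-versions-interval, which this page does not specify. The function name and arguments are hypothetical.

```python
def write_allowed(policy, prev_write_s, prev_shipped, now_s, interval_s=60):
    """Decide whether a new write may proceed (True) or is delayed/blocked (False).

    prev_write_s: time in seconds of the previous write, or None if there is none.
    prev_shipped: whether that previous write has already shipped.
    """
    if policy not in ("latest", "all", "interval"):
        raise ValueError("unknown ship-versions-policy: " + policy)
    if policy == "latest" or prev_write_s is None or prev_shipped:
        return True
    if policy == "all":
        return False  # the previous version must ship first
    # interval policy. Assumption: fixed windows aligned to multiples of interval_s.
    return prev_write_s // interval_s == now_s // interval_s
```

Under this model, a write at second 50 may follow an unshipped write at second 10 when the interval is 60, but a write at second 70 may not.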

Potential issues and workarounds

The following sections detail potential write blocking behavior and data shipping failures related to specific XDR configurations.

Writes blocked by ship-versions-policy (all)

Writes may be blocked or delayed until the previous version of a record is shipped. This is most noticeable with hot keys when the ship-versions-policy is set to all, as XDR needs to ship the prior version before allowing a subsequent write to the same key.

- Workaround: Change the ship-versions-policy to interval with an appropriate time granularity.

Rewind may block writes

If ship-versions-policy is set during a namespace rewind, it can block all writes until the first round of recovery is complete. See Rewind a shipment for details.

Global impact of ship-versions-policy on multi-DC

When configuring multiple datacenters (DCs) for XDR, writes may be blocked if the ship-versions-policy is set to either all or interval in any of the datacenters. This occurs because the Aerospike server processes writes globally, not independently per DC.

If a previous version is pending shipment to one DC due to the policy, subsequent writes to that record are blocked globally until the pending version is shipped to that single delayed DC.

XDR fails to ship deletes (tombstone issue)

In certain scenarios, XDR may fail to ship deletes even when the feature is enabled. For example, this can happen if a record is expired or evicted, and then the record is rewritten before the NSUP thread can convert the deleted record into an XDR tombstone. When the tombstone is not created, the non-durable delete is not shipped because the primary index element of the expired/evicted record is reused by the new write.

Metrics of XDR processes

| Lifecycle stage | Metric name | Description |
|---|---|---|
| Waiting for processing and processing started | in_queue, in_progress | in_queue: Record is in the XDR in-memory queue and is awaiting shipment. in_progress: XDR is actively shipping the record. |
| Retrying | retry_conn_reset, retry_dest, retry_no_node | Transient stage. The shipment is being retried due to some error. Retries continue until one of the completed states is achieved. |
| Recovering | recoveries, recoveries_pending | Transient stage that indicates internal-to-XDR “housekeeping”. If XDR cannot find record keys in its in-memory transaction queue, it reads the primary index to recover those keys for processing. |
| Completed | success, abandoned, not_found, filtered_out | Shipment is complete. The state of shipment is either success or one that indicates a non-success result. |
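When monitoring, it can help to group the metrics by the lifecycle stage they belong to. The sketch below does that for a statistics string; it assumes the input arrives as semicolon-separated name=value pairs, which is an assumption about the input format rather than something this page specifies. The function and constant names are hypothetical.

```python
STAGE_METRICS = {
    "waiting or in progress": ["in_queue", "in_progress"],
    "retrying": ["retry_conn_reset", "retry_dest", "retry_no_node"],
    "recovering": ["recoveries", "recoveries_pending"],
    "completed": ["success", "abandoned", "not_found", "filtered_out"],
}


def group_by_stage(stats_text):
    """Parse 'name=value;...' text and return {stage: {metric: int_value}}."""
    stats = {}
    for pair in stats_text.split(";"):
        if "=" in pair:
            name, value = pair.split("=", 1)
            stats[name.strip()] = value.strip()
    # Metrics missing from the input are simply left out of the result.
    return {
        stage: {m: int(stats[m]) for m in names if m in stats}
        for stage, names in STAGE_METRICS.items()
    }
```

A persistently non-empty retrying group alongside a flat success count would suggest that shipments are being retried without completing.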